The Watch For group within Microsoft’s Xbox division runs a large-scale media AI platform that analyzes images, videos and live streams in real time, processing millions of hours of video content and billions of frames every month. Watch For serves several large organizations within Microsoft, such as Xbox, Bing and LinkedIn, across use cases such as digital safety and media analytics, ultimately reaching hundreds of millions of end users. However, AI inference at such a massive scale is very expensive.

Today, we’re excited to announce the results from the second phase of our partnership with Microsoft, which continues to drive down AI inference compute costs by reducing inference latencies. Over the past year, OctoML engineers worked closely with Watch For to design and implement the TVM Execution Provider (EP) for ONNX Runtime, bringing the model optimization potential of Apache TVM to all ONNX Runtime users. This builds upon the collaboration we began in 2021 to bring the benefits of TVM’s code generation and flexible quantization support to production scale at Microsoft.

The OctoML platform has always provided automation for exploring multiple model acceleration techniques. By integrating the new TVM EP into OctoML, all OctoML customers now have even more flexibility and choice to use ONNX Runtime and TVM together.

OctoML model acceleration with ONNX Runtime - TVM EP

Model optimization for Xbox Safety

The Watch For platform uses the ONNX Runtime inference engine internally to optimize and accelerate prediction times for its large-scale deep learning models. ONNX Runtime’s pluggable architecture brings significant flexibility to machine learning deployments: through an extensible execution provider (EP) framework, it supports all the leading ML frameworks, such as PyTorch, TensorFlow/Keras, TFLite and Scikit-Learn, on diverse hardware from NVIDIA, Intel, Qualcomm, AMD and Apple. However, existing ONNX Runtime EPs map ONNX graphs to proprietary engines or to a fixed set of handwritten operator libraries, which can sometimes leave performance on the table or lead to model coverage gaps, especially for novel models and use cases.

Last year, we helped Microsoft run inference benchmarks that showed Apache TVM’s model compilation approach could deliver significant performance improvements over the existing execution provider backends for some critical models. But the engineering cost of switching some models from ONNX Runtime to TVM was prohibitive because of several pragmatic requirements of their platform. Primarily, adding a second inference engine API beyond ONNX Runtime would require re-architecting core parts of the platform, and increase the engineering burden of supporting two APIs.

Two insights led us naturally to the solution. First, ONNX Runtime’s pluggable architecture makes it easy to add new execution providers, enabling different hardware acceleration libraries. Second, Apache TVM’s runtime and generated code were designed from the beginning to be embeddable, with minimal dependencies and environment assumptions. Integrating Apache TVM with ONNX Runtime was the obvious choice.

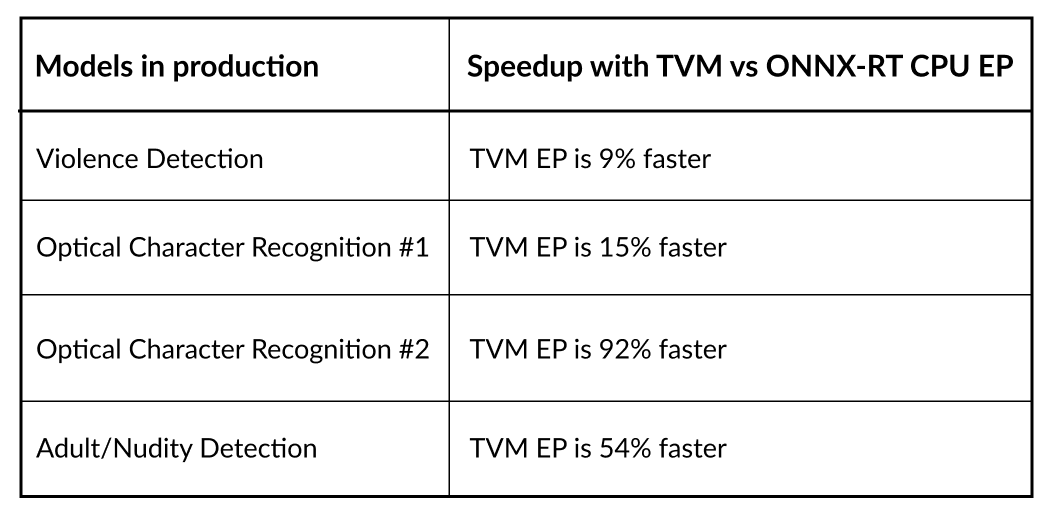

The new TVM Execution Provider for ONNX Runtime is now running in production at Microsoft, accelerating four key models with speedups ranging from 9% to 92% compared to the ONNX Runtime Default CPU EP configuration used in production. Watch For identified these models by running several of its deep learning models through OctoML’s automated benchmarking process and selecting those that benefited most from TVM acceleration. These four convolution-based computer vision models are a mixture of image classifiers and object detection models.

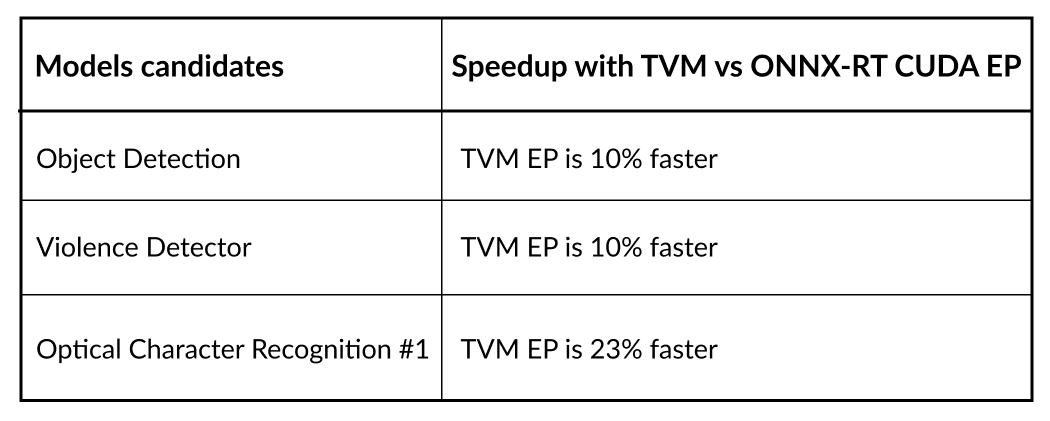

Additionally, OctoML and Watch For have identified three more models that run on GPUs where TVM acceleration outperforms other execution providers. Microsoft is exploring adding these models on GPUs.

TVM Execution Provider is unlike other EPs

The Apache TVM Execution Provider (and Apache TVM in general) takes a different approach to model acceleration compared to many other execution providers. Most execution providers in ONNX Runtime apply pattern matching, where each deep learning compute node is mapped to a pre-optimized operator. In some cases a pattern maps multiple nodes to a single operator, an optimization called fusion, but this only happens heuristically in pre-defined cases.
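To make the pattern-matching approach concrete, here is a minimal sketch in plain Python. It is not how ONNX Runtime or any specific execution provider is implemented: the `Node` type, the `KERNELS` table and the kernel names are all hypothetical, and the graph is simplified to a linear chain of nodes. It only illustrates the idea that fusion happens when a pre-defined pattern matches, and that an operator outside the table has no kernel at all.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    op: str    # operator type, e.g. "Conv"
    name: str  # unique node name


# Hypothetical table of pre-optimized kernels, keyed by a sequence of op types.
# Multi-op entries are the pre-defined fusion patterns.
KERNELS = {
    ("Conv", "BatchNorm", "Relu"): "fused_conv_bn_relu",
    ("Conv", "Relu"): "fused_conv_relu",
    ("Conv",): "conv",
    ("BatchNorm",): "batch_norm",
    ("Relu",): "relu",
    ("MaxPool",): "max_pool",
}


def map_to_kernels(nodes):
    """Greedily map a linear chain of nodes to library kernels.

    Longer patterns are tried first, so fusion is applied whenever one of
    the pre-defined patterns matches at the current position.
    """
    patterns = sorted(KERNELS, key=len, reverse=True)
    plan, i = [], 0
    while i < len(nodes):
        for pattern in patterns:
            window = nodes[i:i + len(pattern)]
            if tuple(n.op for n in window) == pattern:
                plan.append((KERNELS[pattern], [n.name for n in window]))
                i += len(pattern)
                break
        else:
            # No entry in the table: this is the "coverage gap" case.
            raise NotImplementedError(f"no kernel for op {nodes[i].op!r}")
    return plan
```

In this sketch, a `Conv` followed by `Relu` collapses into a single fused kernel, while an operator type that is missing from the table raises an error instead of being optimized.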

Apache TVM, on the other hand, does not rely on traditional operator mapping. TVM uses code generation to explore thousands to millions of candidate kernels for each function and then automatically selects the best-performing one. This search can be applied to any deep learning model, and for novel models in particular TVM can often outperform traditional libraries, which may not have coverage for newer kernels. Another pattern we discovered is that hand-tuned libraries often optimize a kernel for specific input shapes; if a model deviates from those assumptions, performance can suffer. Since TVM makes no input shape assumptions, it can result in significantly faster inference for such models. But as a consequence, this specialization on specific shapes can result in certain scenarios.
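The generate-and-measure idea can be sketched in a few lines of plain Python. This is a toy illustration, not TVM's API or its search algorithm: the candidate space here is just a handful of tile sizes for a pure-Python matrix multiply, and the function names `make_tiled_matmul` and `search_best_kernel` are made up for this example. The point is only that every candidate is generated, timed on the actual input shapes, and the fastest one is kept.

```python
import time


def make_tiled_matmul(tile):
    """Generate a matmul variant that walks the k dimension in blocks of `tile`."""
    def matmul(a, b):
        n, k, m = len(a), len(b), len(b[0])
        out = [[0.0] * m for _ in range(n)]
        for k0 in range(0, k, tile):
            for i in range(n):
                row_a, row_out = a[i], out[i]
                for kk in range(k0, min(k0 + tile, k)):
                    a_ik, row_b = row_a[kk], b[kk]
                    for j in range(m):
                        row_out[j] += a_ik * row_b[j]
        return out
    return matmul


def search_best_kernel(a, b, tiles=(1, 4, 16, 64), repeats=3):
    """Time every candidate on the given inputs and return the fastest one.

    Returns (best_tile, best_kernel, timings), where timings maps each
    tile size to its best observed wall-clock time in seconds.
    """
    timings = {}
    for tile in tiles:
        kernel = make_tiled_matmul(tile)
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            kernel(a, b)
            best = min(best, time.perf_counter() - start)
        timings[tile] = best
    best_tile = min(timings, key=timings.get)
    return best_tile, make_tiled_matmul(best_tile), timings
```

Every candidate computes the same result, so the search is free to choose purely on measured speed. Which tile size wins depends on the machine and the input shapes, which is why the selection is made by measurement rather than by a fixed rule.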

Another distinction of the TVM EP is when the model acceleration takes place. For most execution providers, optimizations are done on the graph based on the capabilities of the acceleration library before the model is executed, and subsequent runs use the same optimized graph. However, with TVM the model acceleration takes place at build time (e.g. during CI) to emit a final compiled model. This model is then used for each inference, without the need to re-run the acceleration path at session start-up.

OctoML & TVM now in Microsoft Azure

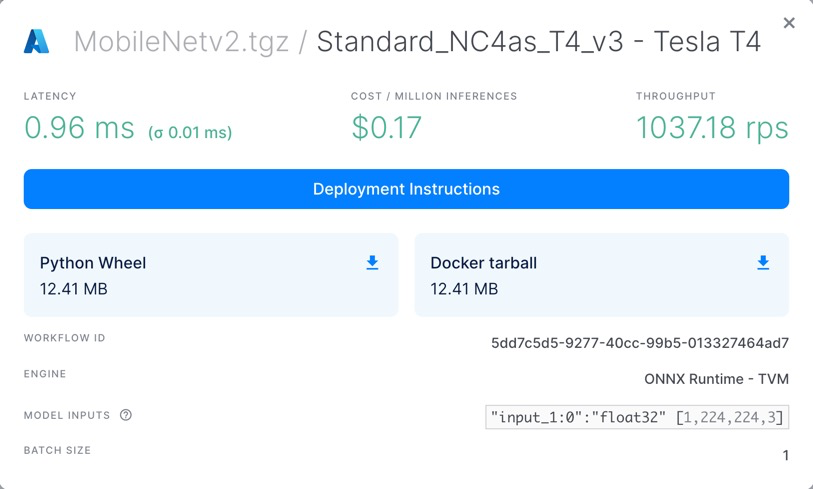

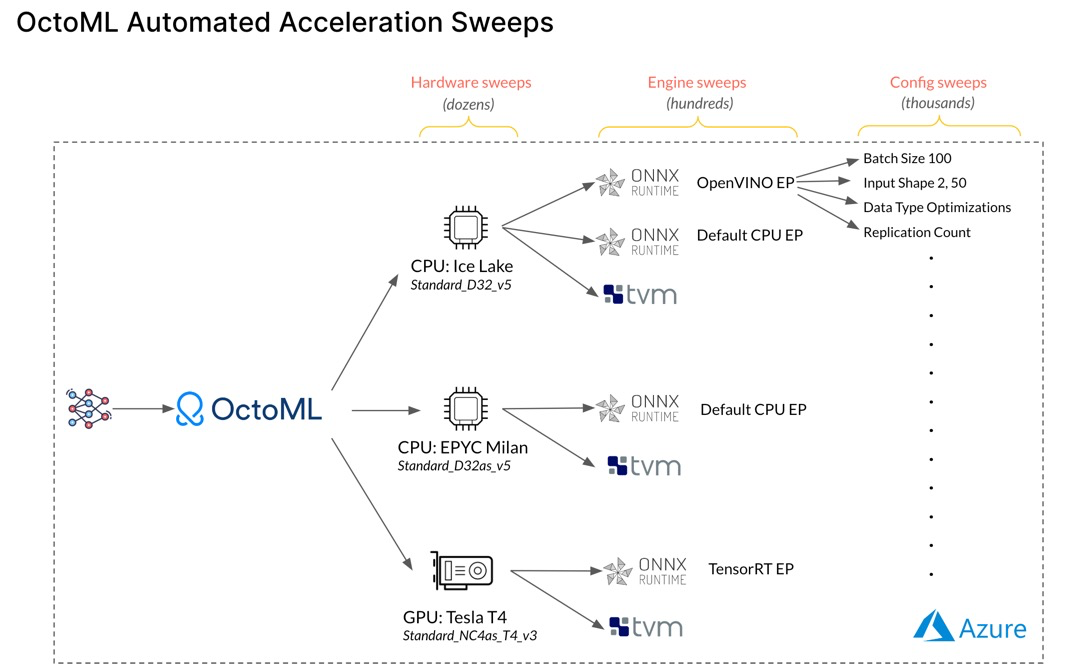

As part of our collaboration, we have brought these innovations back into the OctoML platform in the Azure Marketplace, so all Azure ML customers can take advantage of TVM’s model optimization. If you currently use ONNX Runtime to optimize models, you can now use OctoML to sweep through various EPs such as Default CPU, OpenVINO, TensorRT and the new TVM EP to find the best-performing acceleration library for your model.

Beyond selecting the ideal acceleration library, OctoML also sweeps through multiple candidate hardware targets and library configurations to find the best combination of hardware, software and configuration for each model. OctoML’s built-in automation cuts through the combinatorial explosion of potentially thousands of final configurations and surfaces the most promising optimization paths. By leaving no stone unturned, OctoML helps ensure you’re getting the best performance and highest cost savings possible.
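As a rough illustration of what such a sweep involves, the sketch below enumerates every combination of hardware target, execution provider and configuration and ranks the results by cost. It is not OctoML’s implementation: the `sweep` function and the caller-supplied `benchmark` callback are hypothetical, and the cost formula assumes inferences run one at a time on a machine billed per hour, with no batching or concurrency.

```python
from itertools import product


def sweep(hardware, providers, configs, benchmark):
    """Benchmark every (hardware, provider, config) combination.

    `benchmark(hw, ep, cfg)` is supplied by the caller and must return
    (latency_ms, dollars_per_hour), or None if the combination is not
    supported. Results are sorted by cost per million inferences,
    cheapest first.
    """
    results = []
    for hw, ep, cfg in product(hardware, providers, configs):
        measured = benchmark(hw, ep, cfg)
        if measured is None:
            continue  # e.g. a GPU-only provider on a CPU-only machine
        latency_ms, dollars_per_hour = measured
        # Assumption: one inference at a time, so throughput = 1 / latency.
        inferences_per_hour = 3_600_000 / latency_ms
        cost_per_million = dollars_per_hour / inferences_per_hour * 1_000_000
        results.append({
            "hardware": hw,
            "provider": ep,
            "config": cfg,
            "latency_ms": latency_ms,
            "cost_per_million": cost_per_million,
        })
    return sorted(results, key=lambda r: r["cost_per_million"])
```

The number of combinations is the product of the three list lengths, so even modest lists grow quickly: 5 hardware targets, 4 providers and 50 configurations already yield 1,000 candidates to measure. Note that the lowest-latency entry is not necessarily the cheapest one, since faster hardware usually costs more per hour.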

The OctoML machine learning deployment platform is now available in Azure. Contact us to get a free trial and see for yourself how OctoML gets your fastest and most cost-effective models into production.
