Authors: Jason Knight (OctoML), Josh Fromm (OctoML), Matthai Philipose (Microsoft), Loc Huynh (Microsoft)

Pushing quantization to new extremes

OctoML engineering collaborated with Microsoft Research on the “Watch For” project, an AI system that analyzes live video streams and identifies specified events within them. The collaboration sped up inference for the deep learning algorithms that analyze video streams, demonstrating an inference throughput increase of more than 2x. Implementation work is ongoing to turn those throughput gains into lower production costs. The recent engineering developments that made the inference speedups possible are now available to the entire TVM community via Auto-scheduler and INT4 quantization.

Use case background

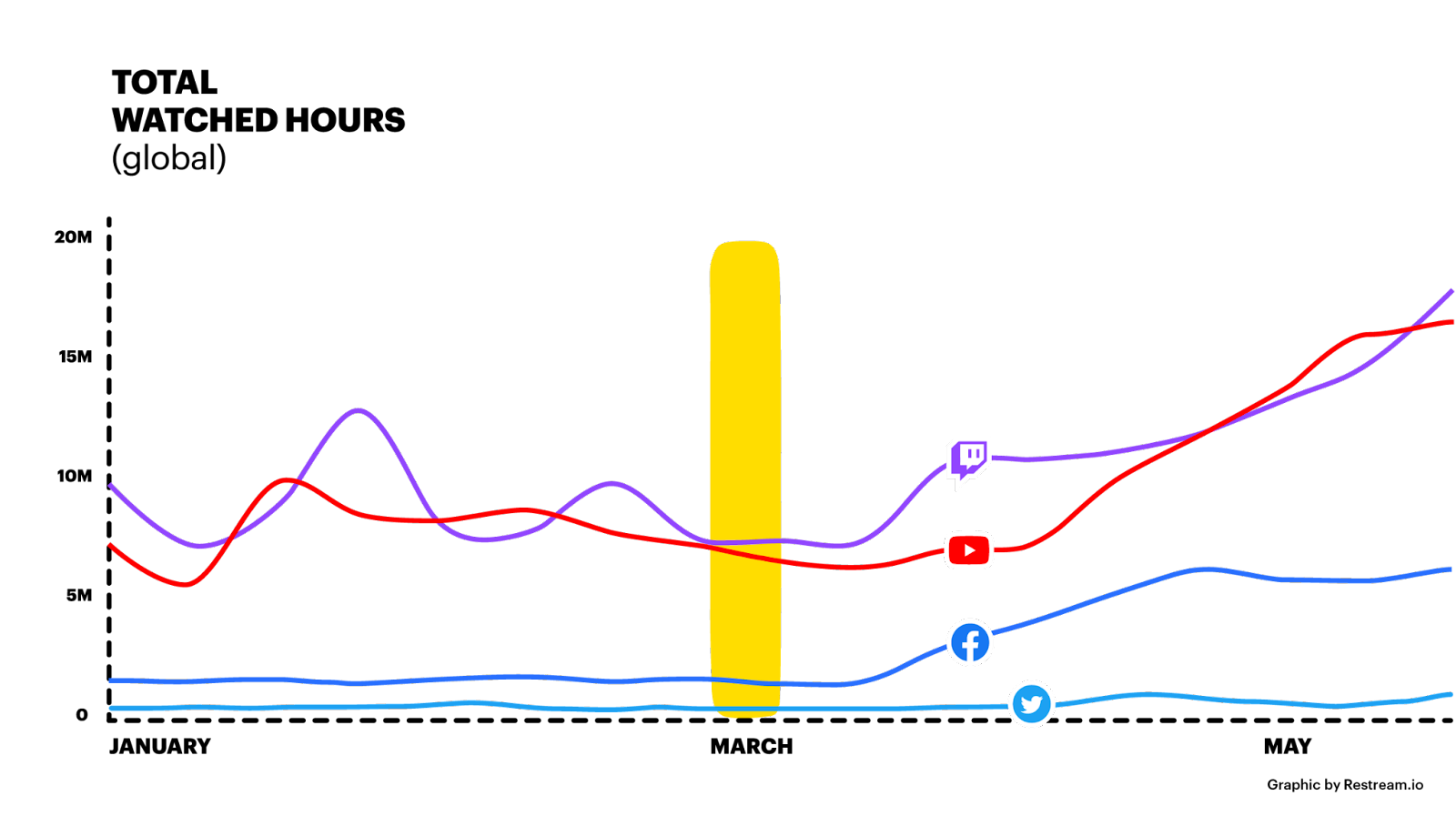

The video game streaming industry has seen phenomenal growth recently, with gaming and Esports driving 54% of all live streaming broadcasts. With much of the world’s population forced to spend more time at home because of the pandemic, growth in total hours watched accelerated globally.

Creating infrastructure for processing these massive amounts of live video is computationally demanding and a challenging distributed systems problem. Similarly, analyzing the video for insights with efficient deep learning is an emerging computer vision research discipline.

Recently OctoML collaborated with Microsoft Research to speed up deep learning algorithms on video. All deep learning algorithms, including video analysis, are composed of tensor computations, also called operators, such as matrix multiplication or convolutions. Apache TVM’s new auto-scheduler generates high performance code for each operator automatically while exploring more optimization combinations and picking the best one via evolutionary search and a learned cost model. This means that engineers using Apache TVM no longer have to rely on potentially outdated manual templates or have to write new templates for new operators. Auto-scheduler significantly simplifies the process of generating tensor programs with up to 9x faster performance without relying on vendor-provided kernel libraries or manually written templates.

Another recent TVM feature that we used in partnership with Microsoft Research is INT4 quantization for inference. Post-training quantization can reduce the production model size and reduce inference latency while preserving the model’s accuracy. However, requantizing from INT32 to INT4 is a relatively new technique and we’re excited to share our developments with the ML community.

Watch For

Extracting insights from massive streams in near real time is the responsibility of the Watch For team within Microsoft Research. The group is a blend of distributed systems engineers, AI engineers, and researchers who push the boundaries of both fields.

The Watch For team provides end-to-end (research to production) media AI solutions for several organizations within Microsoft, with applications ranging from digital safety to gaming analytics. Utilizing deep-learning based computer vision algorithms, the system identifies text, symbols, and objects in both live and stored video content.

A sample of Watch For’s production releases includes:

- Digital Safety solutions (identifying adult, violent and other content) for several Microsoft organizations including Xbox, Bing and LinkedIn

- Bing’s live stream search over games

- AI-powered experiences in MSN Esports Hub, including Search, Spotlight and Highlights

- HypeZone, surfacing the most exciting gaming moments streamed over Microsoft’s former Mixer game streaming service

The business challenge: Efficient video analysis to allow massive scaling

Given the large quantity of video it analyzes, efficiency of inference on video is critical for Watch For. Microsoft Research engaged OctoML to understand the potential for model compilation (a la TVM) to contribute to efficiency. Our goal was to maximize the frames per second that a given processor (CPU or GPU) could process.

Here are examples of analyses performed by Watch For:

- Detecting icons and scores on gaming frames

- Detecting gore or nudity in consumer contributed video

- Recognizing particular screens and scenes

- Detecting objects that may have implications for safety

- Estimating whether individuals are underage

- Automatically localizing a player on a gaming map

How OctoML helped: Auto-scheduler and pushing quantization to the extreme

As part of the engineering engagement with Microsoft Research, we simplified using Apache TVM with Auto-Scheduler and sped up inference with INT4 quantization.

Auto-scheduler

Apache TVM optimizes tensor programs via a sequence of transformations, such as operator fusion, which combines multiple operators into a single kernel, or loop reordering for better locality. Applying these predefined transformations is called scheduling, and each transformation is a schedule primitive. The search space, or design space, is the set of all schedules that can be applied to a tensor program.
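To make the idea of a schedule concrete, here is a minimal sketch in plain Python. It does not use TVM's scheduling API. The two functions (names invented for this illustration) compute the same matrix product, but the second applies two schedule-style rewrites by hand: splitting the `i` and `j` loops into tiles and reordering the loop nest. The point is that a schedule changes how the loops run, not what they compute.

```python
# Illustrative only: hand-written loop nests, not TVM's scheduling API.

def matmul_naive(a, b):
    """Reference loop nest in the order the math is written: i, j, k."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_tiled(a, b, tile=2):
    """Same computation after splitting i and j into tiles and
    reordering so that k sits outside the inner tile loops."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for i0 in range(0, n, tile):          # outer tile loop over rows
        for j0 in range(0, p, tile):      # outer tile loop over columns
            for k in range(m):            # reduction loop moved outward
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, p)):
                        c[i][j] += a[i][k] * b[k][j]
    return c


a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[9, 8], [7, 6], [5, 4]]
same_result = matmul_naive(a, b) == matmul_tiled(a, b)
```

Every choice of `tile` here is one point in a (very small) search space. Real schedules combine many more primitives, which is why the space grows too large to explore by hand.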

Unlike the first-generation AutoTVM, the second-generation auto-scheduler (available in TVM since summer 2020) does not require manually written schedule templates to define the search space. Users only write the operator they want to use; auto-scheduler then generates a large search space from predefined search rules and searches it for a high-performing schedule.
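The search itself can be pictured with a toy example. The sketch below is not auto-scheduler's implementation or API: the knobs, the `toy_cost` function, and the mutation rule are all invented for illustration. In TVM, a learned cost model and real hardware measurements play the role that the hard-coded `toy_cost` plays here. What the sketch does show is the overall shape of an evolutionary search: sample candidate schedules, keep the best, and mutate them.

```python
import random

# Hypothetical knobs for one operator: (tile_i, tile_j, unroll).
TILE_CHOICES = [1, 2, 4, 8, 16, 32]
UNROLL_CHOICES = [1, 2, 4]


def toy_cost(schedule):
    """Stand-in for a cost model or measurement (lower is better).
    Invented for this sketch: pretends 8x16 tiles with unroll 4 are ideal."""
    ti, tj, unroll = schedule
    return abs(ti - 8) + abs(tj - 16) + 2 * abs(unroll - 4)


def mutate(schedule, rng):
    """Replace one randomly chosen knob with another legal value."""
    knobs = list(schedule)
    idx = rng.randrange(3)
    choices = UNROLL_CHOICES if idx == 2 else TILE_CHOICES
    knobs[idx] = rng.choice(choices)
    return tuple(knobs)


def evolutionary_search(generations=30, population_size=8, seed=0):
    rng = random.Random(seed)  # seeded so the run is reproducible
    population = [
        (rng.choice(TILE_CHOICES), rng.choice(TILE_CHOICES), rng.choice(UNROLL_CHOICES))
        for _ in range(population_size)
    ]
    for _ in range(generations):
        # Keep the better half, then refill with mutated copies of survivors.
        population.sort(key=toy_cost)
        survivors = population[: population_size // 2]
        children = [mutate(rng.choice(survivors), rng) for _ in survivors]
        population = survivors + children
    return min(population, key=toy_cost)


best = evolutionary_search()
```

Because the best candidates always survive a generation, the best cost never gets worse as the search runs longer.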

Microsoft Research leveraged OctoML’s expertise in TVM to determine which blend of AutoTVM, Auto-Scheduler, and the various quantization passes, together with which batch size and data memory layout (such as column major or row major), gave the best performance. Our research showed that for models amenable to Tensor Cores on NVIDIA GPUs, AutoTVM with Tensor Core-enabled schedules was beneficial, while for models whose parameter sizes, channels, widths, or depths are not amenable to Tensor Cores, auto-scheduler, with its larger search space, provided better performance.

Ready to use Auto-scheduler? Check out the Apache TVM docs for template-free Auto-scheduling tutorials.

What’s next? Work is now underway in the Apache TVM community on Meta Schedule, our third-generation automatic scheduling system. Stay tuned for more announcements shortly.

INT4 quantization

Models deployed today in Microsoft Research’s Nexus cluster are a combination of FP32, FP16 and INT8. By using quantization to reduce the size of the parameters in a neural network while preserving accuracy, inference can run faster and with a lower memory footprint. Quantization approximates the wider number formats used in the network, such as FP32, with lower-bit formats, such as INT8. However, as part of our collaboration we wanted to push quantization much further.

Microsoft Research was interested in how much further we could push quantization to accelerate their models, trading some accuracy for speed. Extremely low bit widths such as INT4 are generally avoided because they can degrade accuracy, but the team wanted to know what inference speedups were possible at these extremes.
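The accuracy side of this trade-off is easy to see on a handful of numbers. The sketch below assumes a simple symmetric, per-tensor quantization scheme and made-up weight values. It is not necessarily the scheme TVM's quantization passes apply, and the function names are ours. It quantizes the same values to INT8 and INT4 and compares the worst-case round-trip error.

```python
def quantize(values, bits):
    """Symmetric per-tensor quantization to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1                 # 127 for INT8, 7 for INT4
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    # Round to the nearest integer step and saturate to the signed range.
    q = [max(-qmax - 1, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate real values."""
    return [x * scale for x in q]


def max_abs_error(values, bits):
    """Worst-case difference between a value and its quantized round trip."""
    q, scale = quantize(values, bits)
    return max(abs(v - r) for v, r in zip(values, dequantize(q, scale)))


weights = [0.02, -0.75, 0.31, 1.0, -0.48, 0.66]   # made-up example values
err8 = max_abs_error(weights, 8)
err4 = max_abs_error(weights, 4)
```

With only 16 representable levels, INT4 has a much coarser grid than INT8, so `err4` is larger than `err8` for these values. Whether that loss is acceptable depends on the model and the task.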

Our collaboration found that using TVM we could transform, optimize, and compile models in INT4 and leverage the NVIDIA T4 Tensor Core acceleration for INT4 arithmetic. This led to more than 6x speedups over the best results previously available without INT4 support. We’re excited to continue working with Microsoft Research to translate these performance gains into cost savings of the same order, potentially up to 80%. To learn more, see the Apache TVM RFC for INT4, which contains further details and micro-benchmark performance numbers for conv2d schedules.
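For readers new to low-bit arithmetic, the following sketch shows the general pattern behind INT4 inference and the INT32-to-INT4 requantization mentioned earlier: multiply INT4 inputs, accumulate in a wider integer, then rescale and saturate back to INT4. It is a simplified illustration with made-up scales and helper names. Production kernels typically use fixed-point multipliers and hardware instructions instead of Python integers and floats.

```python
INT4_MIN, INT4_MAX = -8, 7


def clamp_int4(x):
    """Saturate an integer to the signed 4-bit range."""
    return max(INT4_MIN, min(INT4_MAX, x))


def int4_dot(a_q, b_q):
    """Multiply-accumulate INT4 inputs into a wide (INT32-style) accumulator."""
    assert all(INT4_MIN <= v <= INT4_MAX for v in a_q + b_q)
    return sum(x * y for x, y in zip(a_q, b_q))


def requantize(acc, in_scale, out_scale):
    """Rescale a wide accumulator to the output's INT4 grid and saturate."""
    return clamp_int4(round(acc * in_scale / out_scale))


# Made-up quantized inputs and scales for illustration.
a_q = [3, -2, 7, 1]
b_q = [5, 4, -8, 2]
a_scale, b_scale, out_scale = 0.1, 0.05, 0.04

acc = int4_dot(a_q, b_q)                               # wide accumulator
out_q = requantize(acc, a_scale * b_scale, out_scale)  # back to INT4
```

The accumulator needs the extra width because even a short dot product of INT4 values can exceed the INT4 range; here it is -47, which requantizes to -6.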

What’s next? TVM adoption at Microsoft Research

We are excited that, based on these initial results, Microsoft Research is currently integrating the Apache TVM runtime into their Nexus production video analysis framework to realize these cost savings in production. OctoML and Microsoft Research are continuing to collaborate on speeding up deep learning algorithms throughout Microsoft.