We enable users to harness value from AI innovations

To get there, OctoAI delivers efficient, reliable, and customizable AI systems.

We built GenAI optimization into every layer of the stack. We know that building with your own models and serving them on the fly is key to the experiences you deliver. We also offer a broad range of hardware options, or you can run our stack in your own environment.

Our Story

OctoAI was spun out of the University of Washington by the original creators of Apache TVM, an open source stack for ML portability and performance. TVM enables ML models to run efficiently on any hardware backend, and has quickly become a key part of the architecture of popular consumer devices like Amazon Alexa.

OctoAI was born from the recognition that TVM and technologies like it could transform the full scope of the ML lifecycle.

OctoAI by the numbers

Founded in 2019

100+ global employees

$132M in funding (seed, Series A, B, and C)

12,000+ TVM commits

What's in a Name?

Thinking about the type of company we wanted to build, we took inspiration from the playful, clever, curious octopus. These unconventional thinkers have a unique, distributed intelligence that spans their entire body.

They are adaptive enough to camouflage at a moment’s notice, and creative enough to complete puzzles, build gardens, and use tools. Plus, like any good engineer, they love to take things apart.

OctoAI Leadership

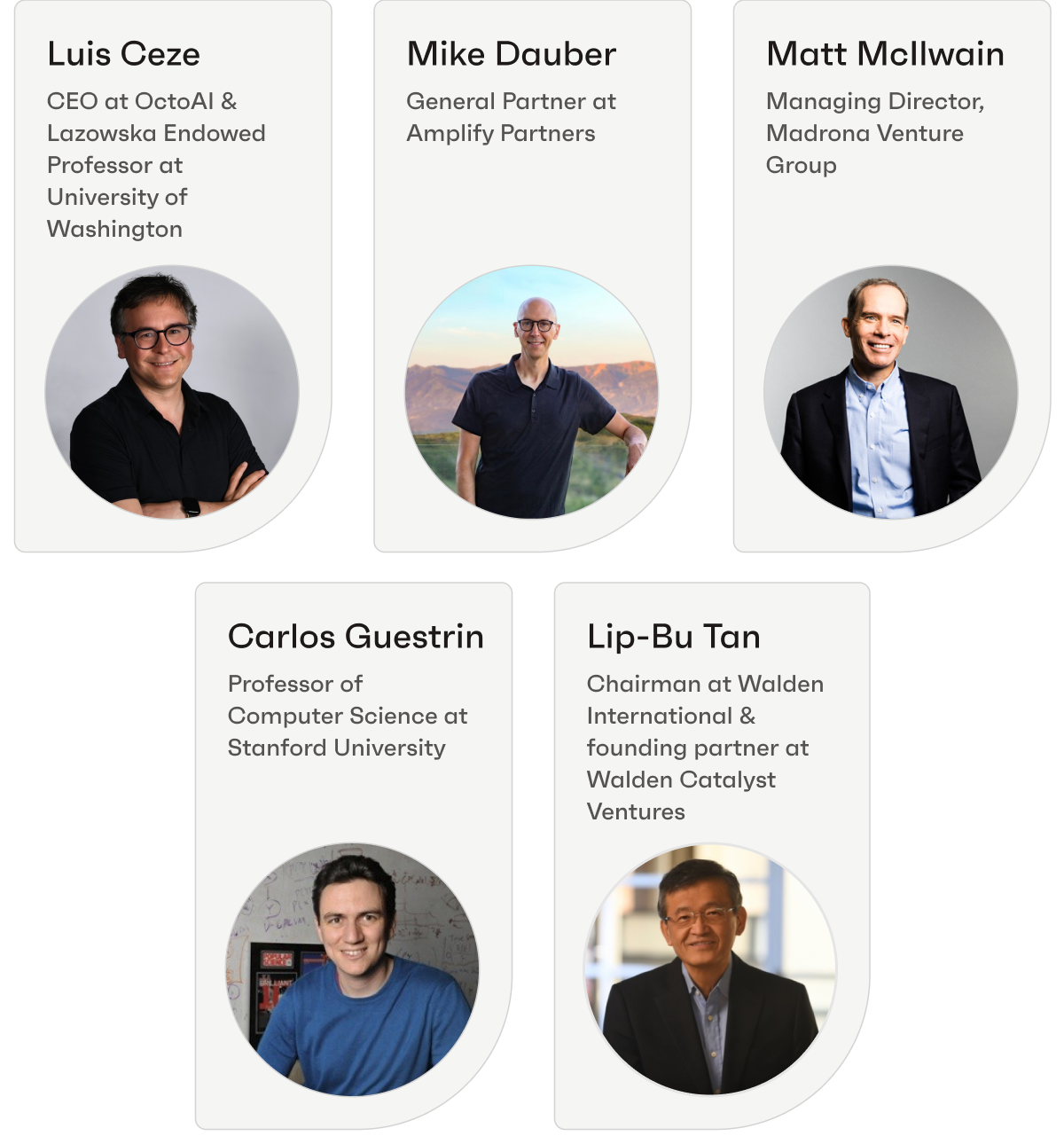


Your choice of models on our SaaS or in your environment

Run any model or checkpoint on our efficient, reliable, and customizable API endpoints. Sign up and start building in minutes.