Generative AI is having a moment. Modern large language models (LLMs) like ChatGPT can generate complex text responses that can be creative, funny, and insightful, and that sound remarkably human (factual errors and all). It’s this magical quality that is inspiring app developers across all industries to use generative models to improve existing products and to build whole new businesses around them.

Whether you are using generative AI as the backbone of a new application, upgrading classical ML models in production, or incorporating it into sales or customer service workflows, be warned: production compute costs are going to eat up a huge chunk of your operating budget.

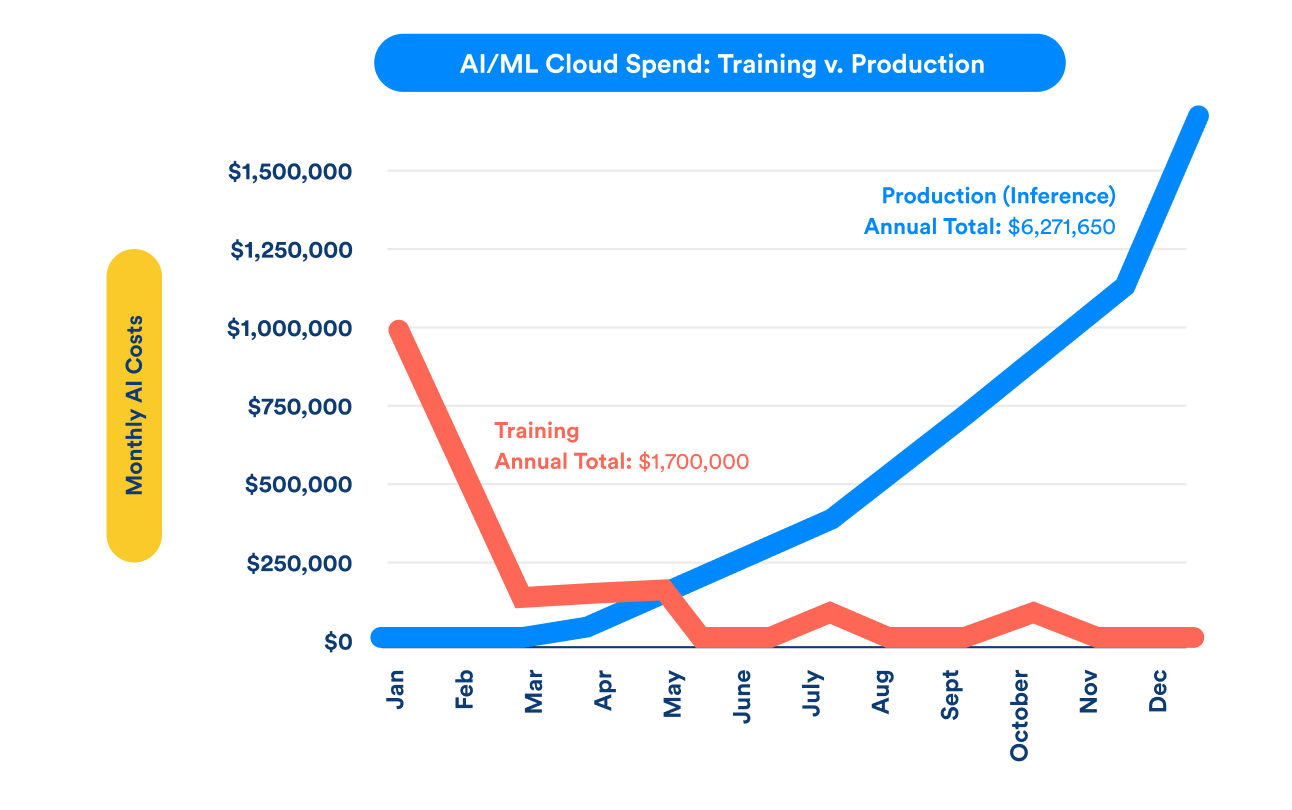

Inference Costs Dwarf Training Costs Over Time

For any useful generative model, it is likely that total inference costs will quickly exceed training costs by a wide and fast-growing margin.

Training is a periodic exercise, but ML models deployed in production are forever running inference, turning inputs or prompts into predictions, generated text, images, classifications, and so on. Because generative models are exceptionally large and complex, this process is highly computationally intensive, making them far more expensive to run than other kinds of deep learning models.

Imagine that a company uses generative AI to create a chatbot that helps job seekers prepare for interviews. The app goes viral, and by the end of the year it has a million monthly active users generating about 1 billion inferences. Depending on the deployment configuration, those inferences could cost $3,662,197 or $43,946,366 in cloud compute. Understanding the implications of model optimizations, latency SLAs, and hardware availability is the key to landing at the low end of that range.
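To see how a deployment configuration turns into a dollar figure, here is a minimal cost model. It rests on a simplifying assumption of ours: requests are served one at a time (batch size 1) on an hourly-billed instance that is kept fully busy, so each inference costs the hourly rate multiplied by its latency. The hourly rate and latency in the example are hypothetical placeholders, not the prices behind the figures in this post.

```python
"""Back-of-envelope inference cost model (a simplified sketch, not a billing tool)."""

SECONDS_PER_HOUR = 3600
MONTHS_PER_YEAR = 12


def cost_per_million(hourly_rate_usd: float, latency_s: float) -> float:
    """Cost of one million sequential, batch-size-1 inferences.

    Assumes the instance is fully utilized and handles one request at a time,
    so each inference occupies the instance for `latency_s` seconds.
    """
    cost_per_inference = hourly_rate_usd * latency_s / SECONDS_PER_HOUR
    return cost_per_inference * 1_000_000


def annual_cost(cost_per_million_usd: float, inferences_per_month: float) -> float:
    """Annualize a per-million-inference cost at a fixed monthly volume."""
    return cost_per_million_usd * (inferences_per_month / 1_000_000) * MONTHS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical example: a $3.00/hour instance at 500 ms per inference.
    per_million = cost_per_million(hourly_rate_usd=3.00, latency_s=0.5)
    yearly = annual_cost(per_million, inferences_per_month=1_000_000_000)
    print(f"${per_million:,.2f} per million inferences")
    print(f"${yearly:,.0f} per year at 1 billion inferences per month")
```

Under this model, cost scales linearly with latency, which is why shaving a few hundred milliseconds per request adds up to large annual sums at this volume.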

DIY GenAI

While it may be tempting to access a GenAI model from a large vendor via API, you could end up paying for capabilities you don’t really need. Their cloud costs simply get passed on to you, and you sacrifice control over your deployment options.

So, if generative AI is on your roadmap and the idea of paying a markup on an API you’ll never control is not your cup of tea, there are a number of open-source LLMs that you can deploy on your own. Using an open-source model gives you full control over the source code, allowing you to modify or train the model for specific use cases.

One such model, GPT-J, is a 6-billion-parameter open-source model released by EleutherAI. While GPT-J isn’t as powerful as ChatGPT, it is suitable for many use cases and nimble enough to deploy on your own infrastructure.

GPT-J Deployment Options

Because we’re mildly obsessed with the ML deployment space, the OctoML team ran a series of benchmarking experiments with GPT-J to evaluate which model/hardware configurations yielded the best cost-per-inference while maintaining acceptable latencies.

While your mileage may vary according to your SLAs, here’s the TL;DR on deployment options:

Path 1: Big, Bad GPUs

NVIDIA A100s are the intuitive choice for low latency, and our experiments bore that out.

The A100 was the fastest option we ran, but it was not the cheapest.

Super GPUs are in super short supply, so you will need a backup plan if scaling is in the cards.

Path 2: High-Memory CPUs

Using a CPU instance for a model the size of GPT-J is nobody's first choice, but it can be done in a pinch.

If latency isn't a significant concern and you can't get the GPUs you need, there's a world where using a CPU could make sense.

Path 3: Commodity GPUs

NVIDIA Tesla T4s and A10s will not run LLMs like GPT-J out of the box, but with a few modifications it can be done.

With additional optimizations, GPT-J on an A10 instance is nearly as fast as on the A100, and by far the cheapest option.

Read on for a deep dive into our GPT-J benchmarking results.

Path 1: Deploy to Big, Bad GPUs

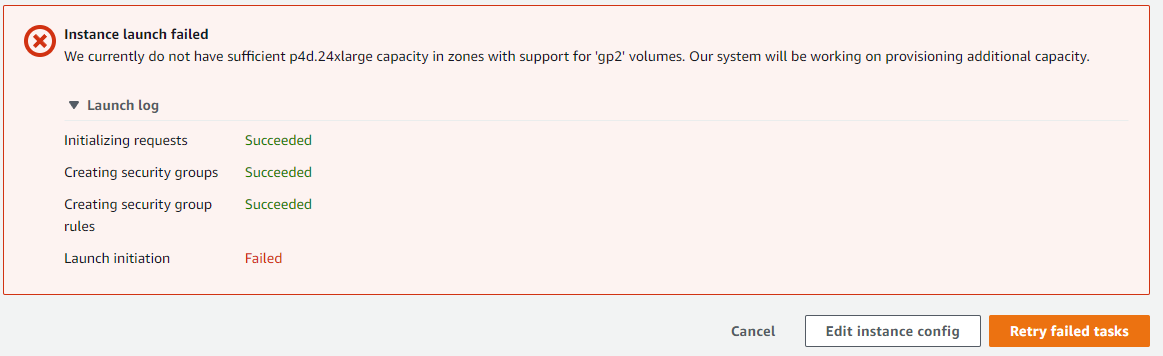

The obvious hardware choice for a model this size is to run it on an NVIDIA A100 GPU. There may be a lucky few with unfettered access to abundant cloud A100 instances, but most of us will come up against availability constraints at some point. We certainly did:

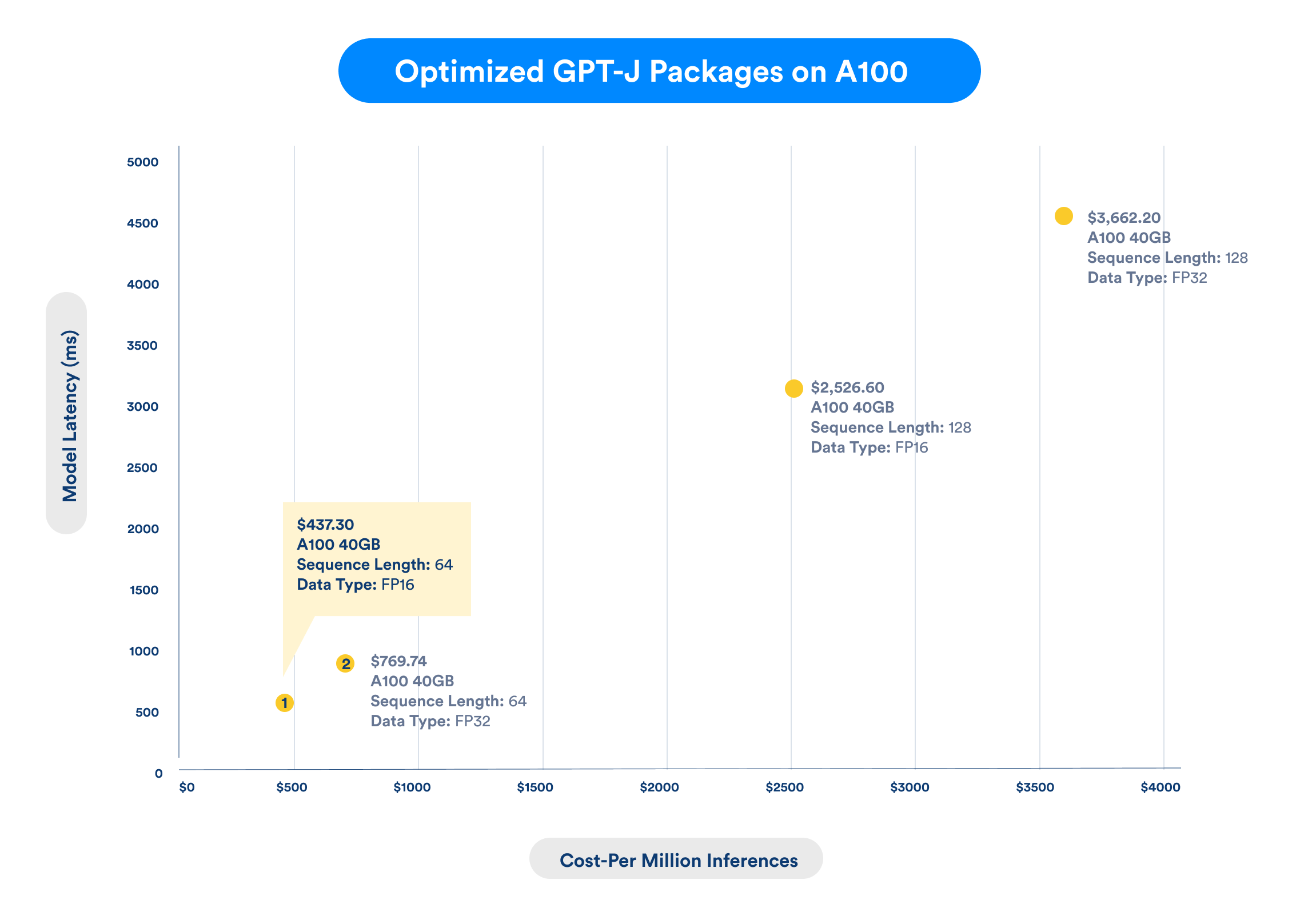

If you’re lucky enough to land an A100, the path of least resistance is to deploy your LLM there. We nabbed a single-GPU A100 40GB instance after a few provisioning attempts and benchmarked several configurations of GPT-J. The model was exported to ONNX via the Optimum library and executed using the CUDA provider in ONNX Runtime. What we found was a huge variance in latency, and resulting cost, depending on the deployment package configuration:

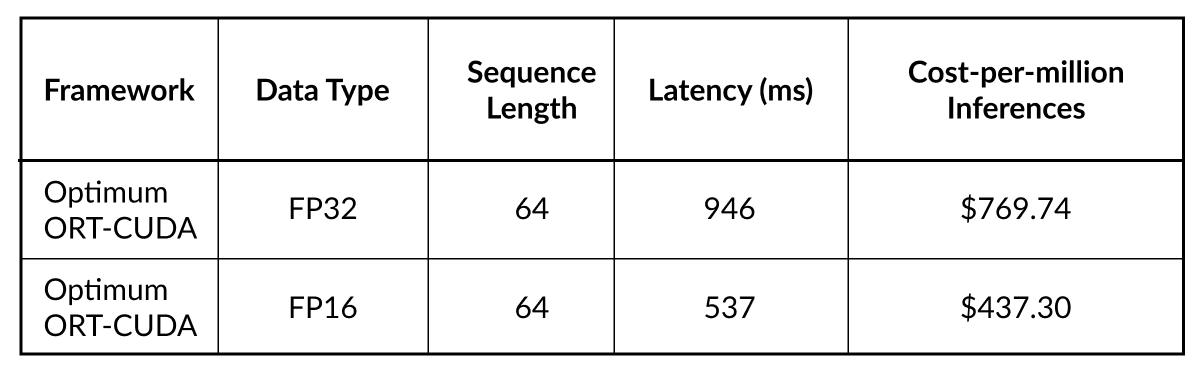

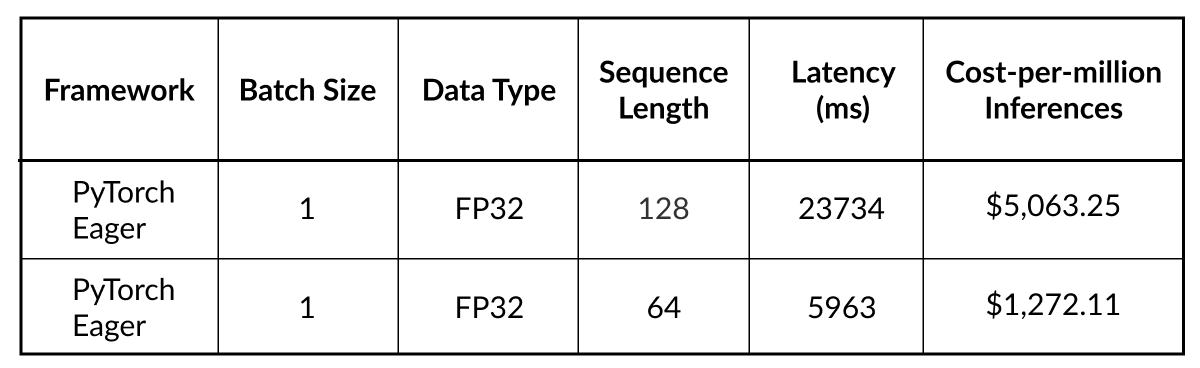

We tested FP16, FP32, and Autocast data types at sequence lengths of 64 and 128, with a batch size of 1 for all benchmarks. All runs used a GCP on-demand instance with a single A100 40GB GPU.
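A measurement of this kind can be sketched as a small timing harness that warms a model up, times repeated batch-size-1 calls, and reports median and 95th-percentile latency, plus an optional runner that exports GPT-J to ONNX with Hugging Face Optimum and executes it on ONNX Runtime's `CUDAExecutionProvider`. This is not our benchmarking code, and it rests on several assumptions: recent `optimum[onnxruntime-gpu]` and `transformers` releases, a CUDA GPU with enough memory, plenty of host RAM and disk for exporting a 6B model, and timing `generate()` with one new token on a random fixed-length prompt. It covers only the default FP32 export; the FP16 and Autocast variants would need a different export. The helper names (`time_call`, `load_ort_gptj`, `make_runner`) are hypothetical.

```python
import math
import statistics
import time
from typing import Callable, Dict


def time_call(fn: Callable[[], object], n_warmup: int = 3, n_runs: int = 20) -> Dict[str, float]:
    """Time repeated calls to `fn`; return median and p95 latency in milliseconds."""
    for _ in range(n_warmup):  # discard warm-up runs (one-time initialization, caches)
        fn()
    samples = []
    for _ in range(n_runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = math.ceil(0.95 * len(samples)) - 1  # nearest-rank 95th percentile
    return {"median_ms": statistics.median(samples), "p95_ms": samples[p95_index]}


def load_ort_gptj(model_id: str = "EleutherAI/gpt-j-6b"):
    """Export GPT-J to ONNX with Optimum and load it on ONNX Runtime's CUDA provider.

    Imports are local so the timing harness above works without these packages.
    """
    from optimum.onnxruntime import ORTModelForCausalLM

    return ORTModelForCausalLM.from_pretrained(
        model_id, export=True, provider="CUDAExecutionProvider"
    )


def make_runner(model, seq_len: int, vocab_size: int = 50257) -> Callable[[], object]:
    """Build a batch-size-1 call on a fixed-length random prompt (an assumption)."""
    import torch

    # IDs below 50257 are valid GPT-2 BPE tokens, the tokenizer GPT-J uses.
    input_ids = torch.randint(0, vocab_size, (1, seq_len), device="cuda")
    attention_mask = torch.ones_like(input_ids)

    def run() -> object:
        with torch.inference_mode():
            # One new token, so this mostly times the pass over the prompt.
            return model.generate(
                input_ids=input_ids,
                attention_mask=attention_mask,
                max_new_tokens=1,
                do_sample=False,
            )

    return run
```

Usage on a machine with a suitable GPU: load once with `model = load_ort_gptj()`, then call `time_call(make_runner(model, 64))` and `time_call(make_runner(model, 128))`. Reporting p95 alongside the median matters because latency SLAs are usually written against tail latency, not the average.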

The best-performing Optimum ORT-CUDA package (dot 1) is 1.76X faster than the next-best deployment package (dot 2).

Both configurations deliver inference in under one second, but if you’re running the model at very large scale, a little boost goes a long way in terms of cost. At 1 billion inferences per month, that 409 ms speedup represents $3,989,280 in annual savings.

Annual inference costs based on 1 billion inferences / month. Model configurations in Optimum ORT-CUDA.
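These figures fit together with a little arithmetic. If the best package is 1.76X faster than the runner-up and the gap is 409 ms, both latencies follow directly; and if cost is assumed to scale linearly with latency (one request at a time on a fully used instance, our simplifying assumption), the annual savings imply an effective hourly rate. This is a sanity check on the quoted numbers, not a reconstruction of the pricing behind them.

```python
"""Sanity-check the A100 speedup figures quoted in the text."""

SPEEDUP = 1.76               # best package vs. next best (from the text)
DELTA_MS = 409.0             # latency gap in milliseconds (from the text)
ANNUAL_SAVINGS = 3_989_280   # USD per year (from the text)
INFERENCES_PER_YEAR = 1_000_000_000 * 12

# slow = fast * SPEEDUP and slow - fast = DELTA_MS, so fast = DELTA_MS / (SPEEDUP - 1)
fast_ms = DELTA_MS / (SPEEDUP - 1)
slow_ms = fast_ms + DELTA_MS

# Instance-hours saved per year, assuming cost is linear in latency.
hours_saved = DELTA_MS / 1000 * INFERENCES_PER_YEAR / 3600
implied_hourly_rate = ANNUAL_SAVINGS / hours_saved

if __name__ == "__main__":
    print(f"fast: about {fast_ms:.0f} ms, slow: about {slow_ms:.0f} ms")
    print(f"implied rate: about ${implied_hourly_rate:.2f} per hour")
```

The derived fast latency of about 538 ms lines up with the 537 ms A100 figure quoted later in this post, within the rounding of the 1.76X ratio.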

You might get the A100s you need today in the US, but what about in Europe or Asia? Or a year from now, with 3x the users? Because models are optimized for the hardware they run on, switching hardware will almost certainly cost more and slow your application down.

If (when?) you run into this reality, you’ll be left with a few choices:

Run distributed GPU inference

This requires breaking your model into smaller subgraphs and farming them out to multiple machines. The process is painful and complicated, and it will consume many hours of valuable developer time. If time is of the essence, going down this path is probably not the right choice, though in some cases it may be unavoidable.
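At its simplest, splitting a model for distributed (pipeline-style) inference means assigning contiguous blocks of layers to devices so that each block fits in one device's memory. The sketch below does this greedily; the per-layer sizes and the memory budget are hypothetical, and a real split must also account for activations, the KV cache, and the network hops between stages, which is where much of the pain comes from.

```python
from typing import List, Sequence


def partition_layers(layer_gb: Sequence[float], budget_gb: float) -> List[List[int]]:
    """Greedily assign consecutive layers to stages without exceeding `budget_gb` per stage.

    Returns a list of stages, each a list of layer indices. Raises ValueError if a
    single layer is larger than the budget.
    """
    stages: List[List[int]] = []
    current: List[int] = []
    used = 0.0
    for i, size in enumerate(layer_gb):
        if size > budget_gb:
            raise ValueError(f"layer {i} ({size} GB) exceeds the per-device budget")
        if current and used + size > budget_gb:
            stages.append(current)  # close this stage; the next layer goes to a new device
            current, used = [], 0.0
        current.append(i)
        used += size
    if current:
        stages.append(current)
    return stages


if __name__ == "__main__":
    # Hypothetical: 28 equal blocks (GPT-J has 28) of 0.75 GB each, 12 GB free per device.
    stages = partition_layers([0.75] * 28, budget_gb=12.0)
    for device, layers in enumerate(stages):
        print(f"device {device}: layers {layers[0]}-{layers[-1]}")
```

Keeping stages contiguous means each device only sends its output activations to the next one, but it also means every request waits on every stage in sequence.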

Path 2: Run the model on high-memory CPU hardware

Unless you’re on a desert island where the only option is a CPU, this likely isn’t going to be your first choice. However, just for fun, the OctoML team ran the numbers to see what the baseline looked like in PyTorch eager mode.

We didn’t do comparison runs using PyTorch graph mode or Optimum ORT-CUDA because we assumed the baseline PyTorch performance would be so poor that, even with additional optimizations, it would be more or less unusable. For many use cases, that’s probably true. Still, at a PyTorch eager latency of six seconds per inference, there are signs it could be acceptable in some cases, for example, a customer service chatbot that only needs to respond at “human speed.”

Overshooting latency requirements just to be "as fast as possible" has marginal benefit and could come at a high cost. If graph mode or a CPU-optimized version delivered significant speedups, running the workload on a CPU could be feasible for certain production use cases.
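The principle here, meeting the latency SLA at the lowest cost rather than chasing the fastest option, can be written as a simple selection rule. In this sketch the option names, latencies, and costs are hypothetical placeholders; in practice they would come from benchmarks like the ones in this post.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class DeploymentOption:
    name: str
    latency_ms: float
    cost_per_million_usd: float


def cheapest_within_sla(options: Iterable[DeploymentOption], sla_ms: float) -> Optional[DeploymentOption]:
    """Return the lowest-cost option whose latency meets the SLA, or None if none do."""
    eligible = [o for o in options if o.latency_ms <= sla_ms]
    return min(eligible, key=lambda o: o.cost_per_million_usd, default=None)


if __name__ == "__main__":
    # Hypothetical candidates; the numbers are placeholders, not benchmark results.
    candidates = [
        DeploymentOption("option-a", latency_ms=500, cost_per_million_usd=450),
        DeploymentOption("option-b", latency_ms=750, cost_per_million_usd=250),
        DeploymentOption("option-c", latency_ms=6000, cost_per_million_usd=200),
    ]
    for sla_ms in (400, 600, 1000, 10_000):
        choice = cheapest_within_sla(candidates, sla_ms)
        print(f"SLA {sla_ms} ms -> {choice.name if choice else 'no option meets the SLA'}")
```

Relaxing the SLA can only widen the eligible set, so the chosen cost never goes up as the latency budget grows; that is the whole argument against overshooting.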

Path 3: Get your model running fast on a single commodity GPU

One of the first things you’ll discover is that large models like GPT-J are too big to run out of the box on most commodity GPU hardware. That rules out the NVIDIA V100s, A10Gs, and Tesla T4s you would typically reach for.

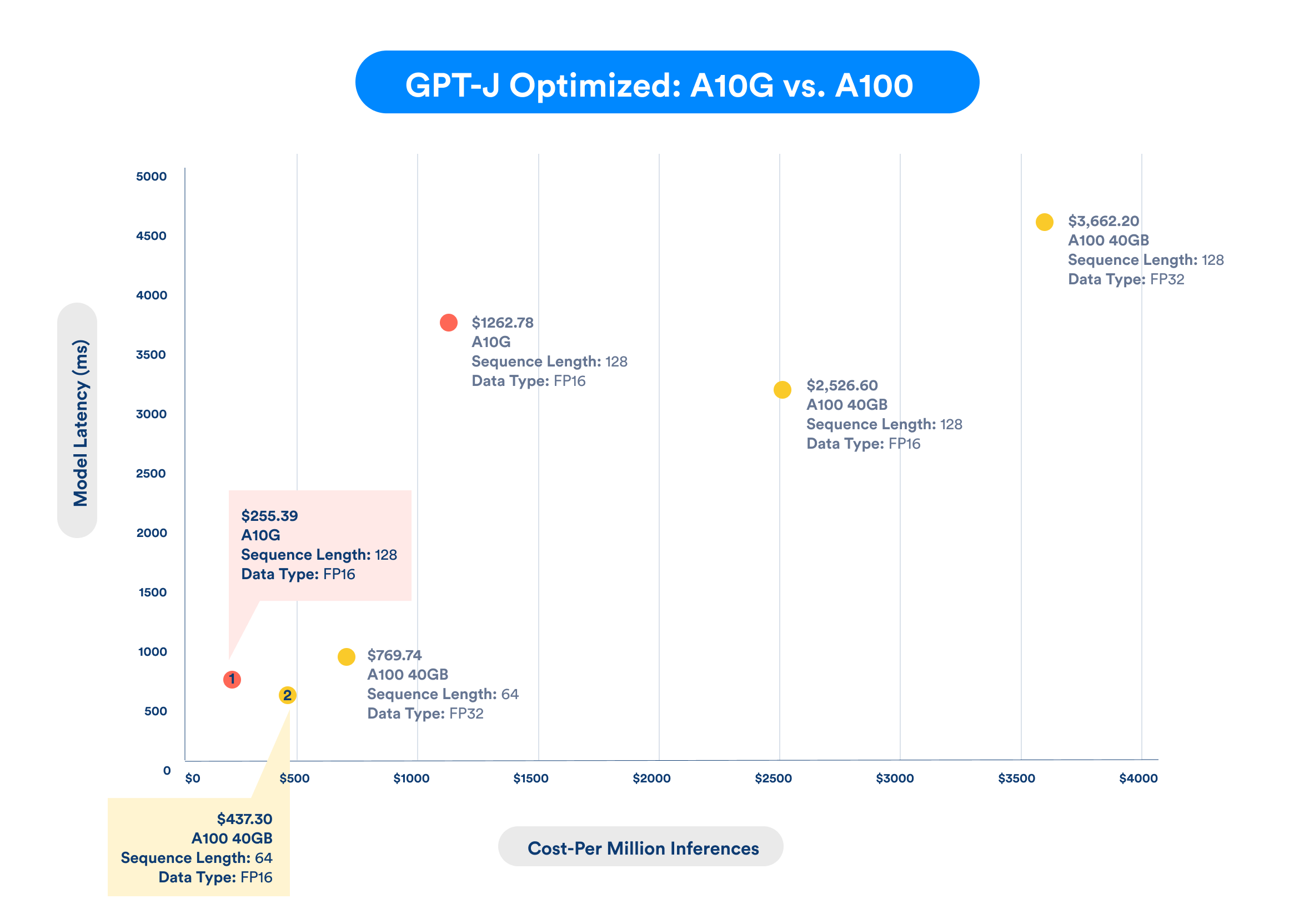
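A rough weights-only estimate shows why, and what FP16 buys. GPT-J has about 6 billion parameters, so its weights take about 24 GB in FP32 (4 bytes per parameter) and about 12 GB in FP16 (2 bytes). The sketch below adds an assumed 20% headroom for activations, the KV cache, and runtime overhead (our placeholder, not a measured figure) and compares the result with the nominal memory of a few GPUs. Actual fit depends on sequence length, batch size, and framework.

```python
PARAMS = 6e9  # approximate GPT-J parameter count
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}
GPU_MEMORY_GB = {"T4": 16, "V100 (16 GB)": 16, "A10G": 24, "A100 (40 GB)": 40}
HEADROOM = 1.2  # assumed overhead factor for activations, KV cache, and runtime


def required_gb(dtype: str, params: float = PARAMS, headroom: float = HEADROOM) -> float:
    """Weights-only memory in decimal GB, scaled by an assumed headroom factor."""
    return params * BYTES_PER_PARAM[dtype] * headroom / 1e9


def fits(dtype: str, gpu: str) -> bool:
    """True if the estimated footprint fits within the GPU's nominal memory."""
    return required_gb(dtype) <= GPU_MEMORY_GB[gpu]


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        need = required_gb(dtype)
        ok = [gpu for gpu in GPU_MEMORY_GB if fits(dtype, gpu)]
        print(f"{dtype}: ~{need:.1f} GB needed; fits on {', '.join(ok) or 'none'}")
```

With these assumptions, FP32 (about 28.8 GB) fits only the A100, while FP16 (about 14.4 GB) fits all four cards.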

Converting your model's data type to FP16, which generally preserves accuracy while halving its memory footprint relative to FP32, makes it small enough for these cards; you can then accelerate it with efficient compilation using CUDA, TensorRT, or TVM. That’s just what we did here: we converted the model to FP16 and compared its cost and latency with the optimized versions of GPT-J that we ran on the A100.

GPT-J configurations for A10G and A100 accelerated using Optimum ORT-CUDA.

Taking the steps to get it running proves worthwhile. The highlighted configurations in the chart above represent the two cheapest packages on the A10G and the A100. At $255.39 per million inferences, the A10G package is the most affordable of all the deployment packages we benchmarked. It isn’t the fastest (that honor stays with the A100 at 537 ms), but the A10G gives it a run for its money at an impressive 739 ms.

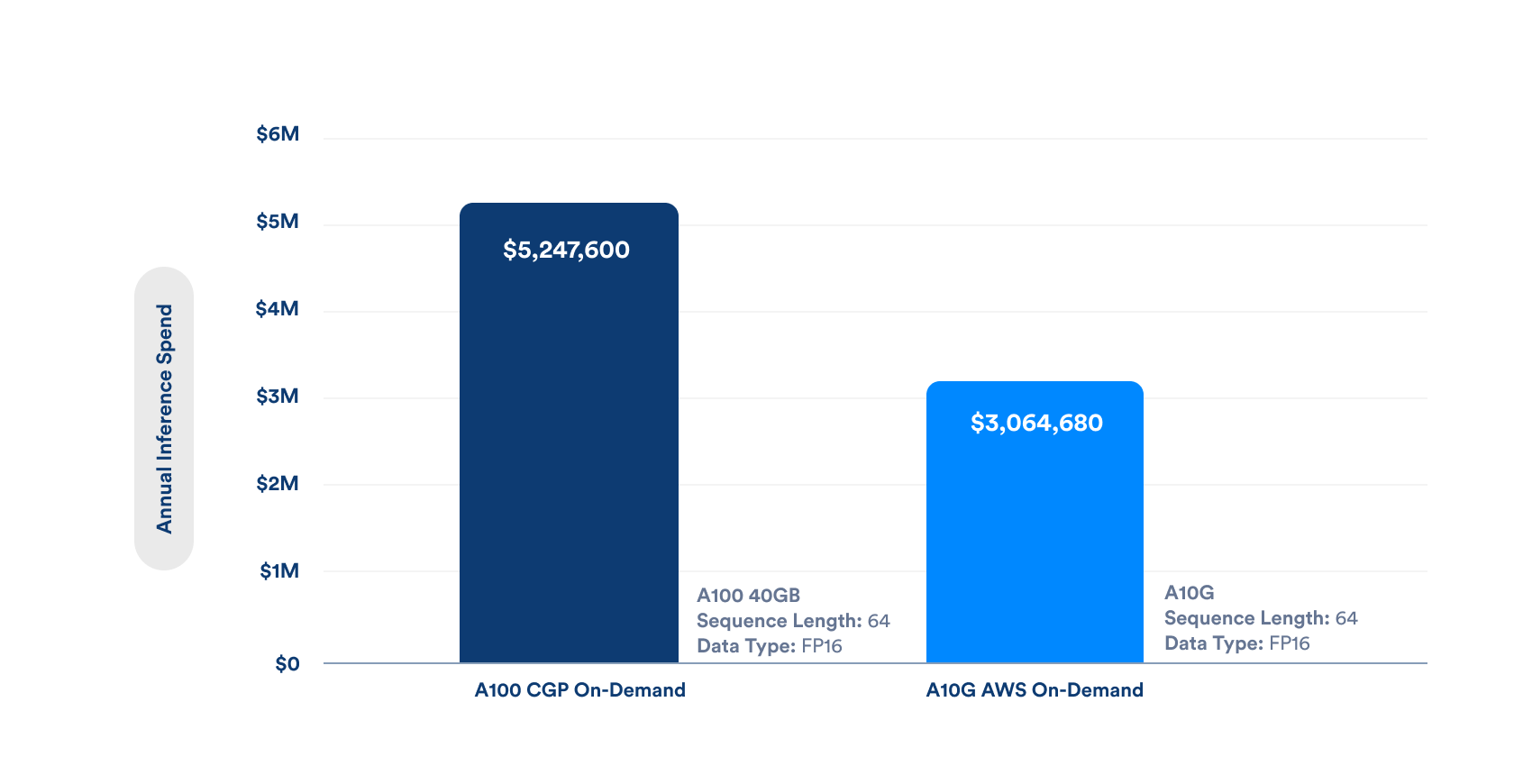

Every team has different SLAs to hit, so if slightly higher latency is acceptable, the A10G package is your best option. At a billion inferences per month, it would save $2,182,920 in annual inference costs compared with running the same workload on an A100:

Annual inference costs based on 1 billion inferences / month. Lowest latency model configurations on GCP A100 40GB and AWS A10G.
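The annual figures can be reproduced from the per-million cost, and the savings figure implies the A100 package's cost per million. A quick check that uses only numbers quoted in this section and the 1 billion inferences per month volume:

```python
"""Reconcile the A10G vs. A100 figures quoted above."""

MILLIONS_PER_YEAR = 1_000 * 12   # 1 billion inferences/month = 1,000 million, for 12 months
A10G_COST_PER_MILLION = 255.39   # USD, from the text
A10G_LATENCY_MS = 739            # from the text
A100_LATENCY_MS = 537            # from the text
ANNUAL_SAVINGS = 2_182_920       # USD per year, A10G vs. A100, from the text

a10g_annual = A10G_COST_PER_MILLION * MILLIONS_PER_YEAR
a100_annual = a10g_annual + ANNUAL_SAVINGS
a100_cost_per_million = a100_annual / MILLIONS_PER_YEAR

if __name__ == "__main__":
    print(f"A10G: ${a10g_annual:,.0f} per year")
    print(f"A100: ${a100_annual:,.0f} per year (${a100_cost_per_million:.2f} per million)")
    print(f"A10G is {A10G_LATENCY_MS / A100_LATENCY_MS - 1:.0%} slower "
          f"and {1 - a10g_annual / a100_annual:.0%} cheaper")
```

In other words, the A10G package trades roughly 38% more latency for roughly 42% lower cost, which is exactly the trade-off the SLA question comes down to.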

Takeaways

Model optimizations can save you millions of dollars over the life of your application.

Additional savings can be realized by finding the lowest-cost hardware to run AI/ML workloads.

Companies building AI-powered apps will need to do both if they want a fighting chance at building a sustainable business.