How OctoML is designed to deliver faster and lower cost inferencing

2022 will go down as the year that the general public awakened to the power and potential of AI. Apps for chat, copywriting, coding and art dominated the media conversation and took off at warp speed. But the rapid pace of adoption is a blessing and a curse for technology companies and startups who must now reckon with the staggering cost of deploying and running AI in production.

These costs mostly break down into two buckets: engineering and compute. Within the latter, the price tag of training machine learning models has received most of the attention. While it’s true that model training can incur massive cloud bills, it is a one-time, pre-production cost, and one that teams increasingly sidestep by building on foundation models.

But once models reach production, costs are harder to control. Each time a model creates an image or replies to a chat, it runs one or more ‘inferences’ to generate the final output. Each inference incurs a compute cost. Individually they may be small, but taken together they add up quickly when usage skyrockets.
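To make "they add up quickly" concrete, here is a back-of-the-envelope sketch. It assumes requests are served one at a time, so each inference occupies the instance for exactly its latency and is billed at the instance's hourly price. The $3.00/hour price and 50 ms latency are hypothetical placeholders, not OctoML figures.

```python
# Back-of-the-envelope inference cost model (hypothetical numbers).
# Assumes sequential serving: the instance is busy for exactly
# `latency_s` seconds per inference and billed at `hourly_price_usd`.

def cost_per_inference(hourly_price_usd: float, latency_s: float) -> float:
    """Share of the instance's hourly price consumed by one inference."""
    return hourly_price_usd * latency_s / 3600.0


def monthly_cost(hourly_price_usd: float, latency_s: float,
                 inferences_per_day: int, days: int = 30) -> float:
    """Total compute cost of serving a daily volume for `days` days."""
    return cost_per_inference(hourly_price_usd, latency_s) * inferences_per_day * days


if __name__ == "__main__":
    price = 3.00     # hypothetical on-demand GPU price, USD/hour
    latency = 0.050  # hypothetical 50 ms per generation
    for volume in (10_000, 1_000_000, 100_000_000):
        cost = monthly_cost(price, latency, volume)
        print(f"{volume:>11,} inferences/day -> ${cost:,.2f}/month")
```

With these placeholder inputs the monthly bill grows linearly from $12.50 to $1,250.00 to $125,000.00 as daily volume rises, which is why per-inference latency becomes the lever that matters at scale.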

Under these conditions, there are three things that companies can do right now to make the economics of production AI work in their favor:

Reduce human engineering time and effort required to deploy models to production

Optimize models for lower cost per inference (lower single-prediction latency and higher batch throughput)

Identify and run workloads on the lowest cost hardware

In this post, we’ll dive into the components of the OctoML SaaS Platform that enable customers to achieve these three goals.

Inside the OctoML Platform

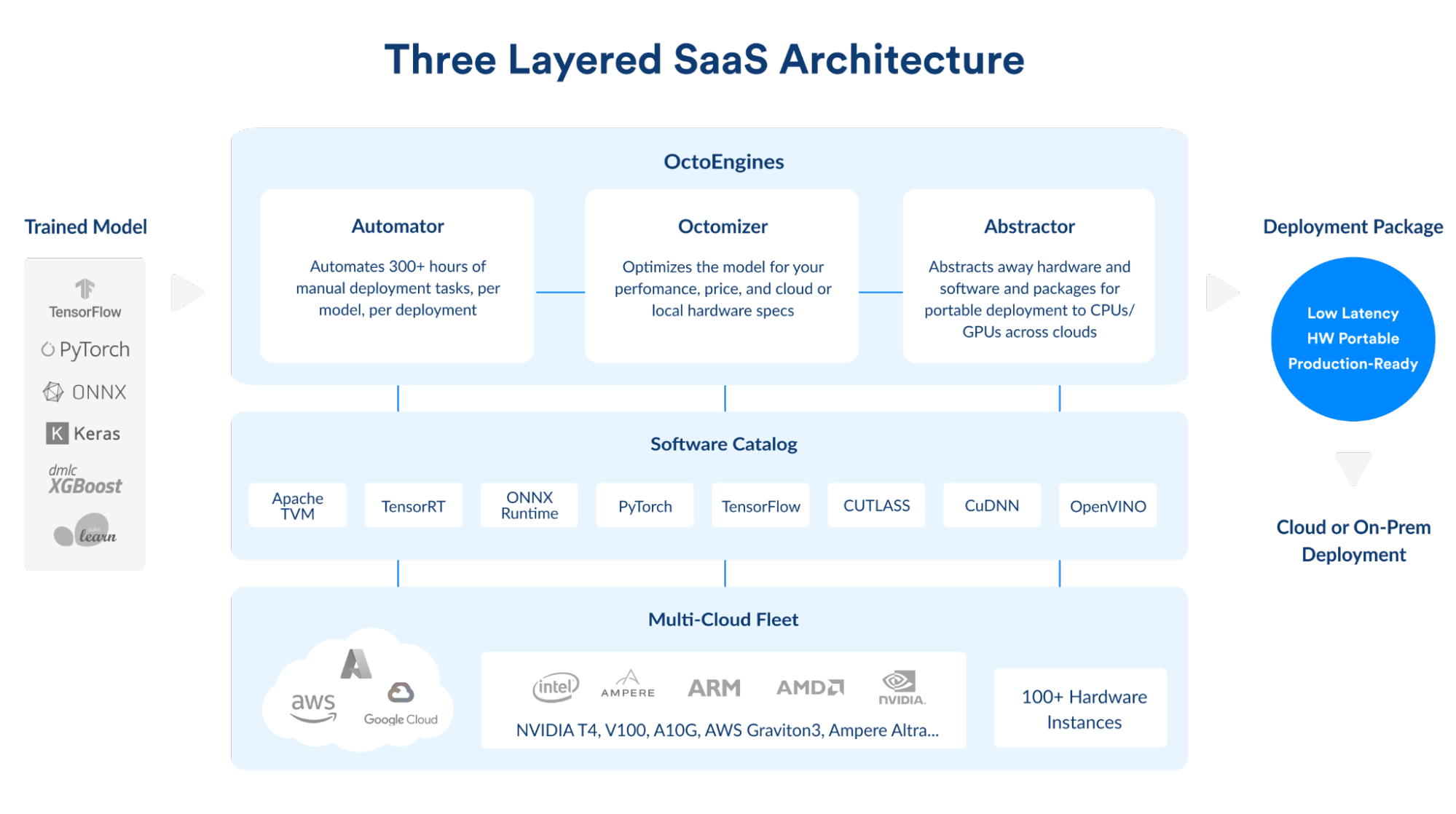

The OctoML platform consists of three layers: the OctoEngines (Automator, Octomizer and Abstractor), the Software Catalog, and the Multi-Cloud Fleet.

But before we dive into those, let’s talk about where it all begins: with a trained model.

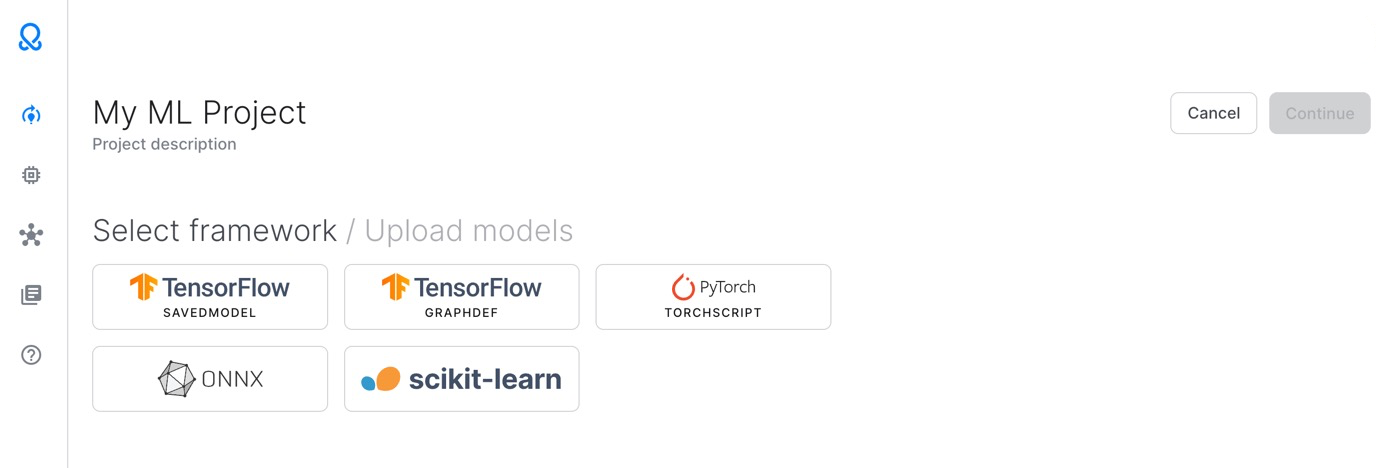

Imagine a team of data scientists and ML engineers has fine-tuned the perfect model for a specific use case. Now it’s time to deploy it to an app or service. Using OctoML, anyone can upload a trained model and, in a few steps, download an optimized deployment package that can run on any cloud instance. OctoML supports all the popular deep learning frameworks such as PyTorch and TensorFlow, classical ML libraries such as scikit-learn and XGBoost, and the universal ONNX model serialization format.

OctoML’s supported model import formats

The OctoEngines

Next, the OctoEngines get to work reducing your engineering workload, optimizing the model for cost and speed, and helping you identify the best hardware for deployment.

Automator

The team that builds and deploys ML models is one of your most valuable resources. OctoML’s automation process saves them critical engineering time and effort.

Getting a modern generative model into production can take more than 300 hours of tasks that are unrelated to the core business and sometimes esoteric. Once the data scientists hand off a trained model, a typical first task is gathering single-inference and batch-inference baseline performance data on a handful of CPUs and GPUs. This process alone may take between 8 and 24 hours of work.
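The baseline step boils down to two measurements per machine: the latency of a single inference and the throughput of batched inference. The sketch below shows a minimal, framework-agnostic harness using only the Python standard library. It assumes a hypothetical `predict` callable that takes a batch (a list of samples) and returns one prediction per sample; `toy_model` is a stand-in, not a real ML model, and this is not OctoML's benchmarking code.

```python
import statistics
import time
from typing import Callable, List, Sequence

# Hypothetical model signature: a batch of samples in, one prediction per sample out.
Predict = Callable[[List[Sequence[float]]], List[float]]


def single_inference_latency_ms(predict: Predict, sample: Sequence[float],
                                warmup: int = 10, runs: int = 100) -> float:
    """Median wall-clock latency of a batch-of-one inference, in milliseconds."""
    for _ in range(warmup):  # let caches and lazy initialization settle first
        predict([sample])
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict([sample])
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def batch_throughput(predict: Predict, sample: Sequence[float],
                     batch_size: int = 32, runs: int = 20) -> float:
    """Inferences per second when samples are fed in batches of `batch_size`."""
    batch = [sample] * batch_size
    predict(batch)  # one warm-up batch
    start = time.perf_counter()
    for _ in range(runs):
        predict(batch)
    elapsed = time.perf_counter() - start
    return batch_size * runs / max(elapsed, 1e-9)


def toy_model(batch: List[Sequence[float]]) -> List[float]:
    """Stand-in for a real model: dot product of each sample with itself."""
    return [sum(x * x for x in s) for s in batch]


if __name__ == "__main__":
    sample = [0.5] * 1024
    print(f"p50 latency: {single_inference_latency_ms(toy_model, sample):.3f} ms")
    print(f"throughput @ batch 32: {batch_throughput(toy_model, sample):,.0f} inferences/s")
```

Reporting the median rather than the mean keeps one slow outlier run from skewing the baseline. A real harness would repeat both measurements on every candidate CPU and GPU instance, which is where the hours accumulate.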

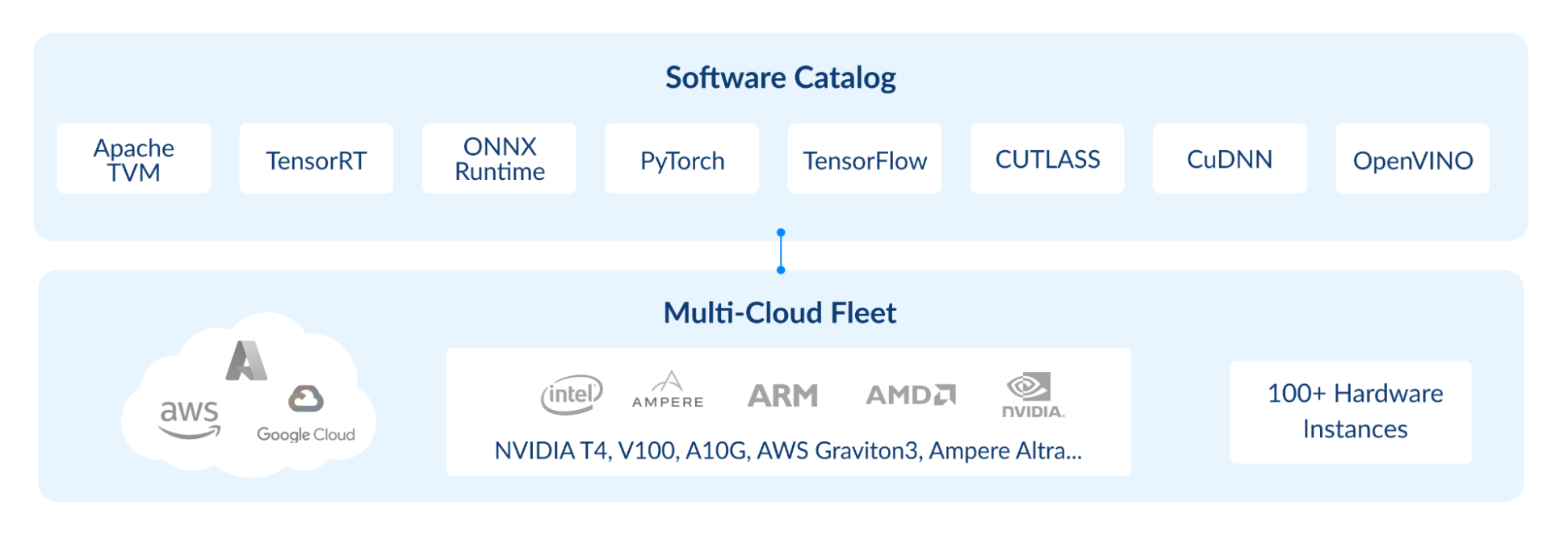

Next comes the difficult work of model acceleration. Each hardware vendor provides its own optimization libraries for its hardware, such as TensorRT from NVIDIA or OpenVINO from Intel, and each library exposes different configuration flags and optimal settings. Often several libraries can compile or optimize models for the same hardware, so exploring all of them requires further time investment.

In reality, setting up test environments and carefully calibrated benchmark harnesses for different hardware targets and software libraries can be so overwhelming that many companies simply skip this step. But using a default configuration for all your ML models can be a costly mistake in the long term.

The OctoML Software Catalog leverages an array of vendor-specific libraries, acceleration engines, training framework runtimes, and compilers in various combinations to get you the fastest possible "recipe" for running your model on a specific hardware target.

OctoML automates all of these tasks, which can save hundreds of hours of engineering time. Once your trained model is uploaded, OctoML takes over and runs many experiments with different libraries from the Software Catalog on various cloud hardware instances to automatically find the most cost-effective combination of library, configuration flags and hardware instance.
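Conceptually, this search is a loop over every (library, flags, instance) combination: benchmark it, convert latency into cost, and keep the cheapest. The sketch below is a simplified illustration under stated assumptions, not OctoML's implementation. The `benchmark` callable, the instance names and the hourly prices are hypothetical, and cost assumes sequential serving, so one million inferences keep the instance busy for one million times the latency.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, Iterable, Optional


@dataclass(frozen=True)
class Trial:
    library: str
    flags: str
    instance: str
    latency_ms: float
    cost_per_million_usd: float


def cost_per_million(hourly_price_usd: float, latency_ms: float) -> float:
    # Sequential serving: 1e6 inferences occupy the instance for
    # 1e6 * latency seconds, billed at the hourly price.
    return hourly_price_usd * (latency_ms / 1000.0) * 1_000_000 / 3600.0


def find_cheapest(libraries: Iterable[str], flag_sets: Iterable[str],
                  hourly_prices: Dict[str, float],
                  benchmark: Callable[[str, str, str], Optional[float]]) -> Trial:
    """Benchmark every (library, flags, instance) combination; return the cheapest.

    `benchmark` (hypothetical) returns a measured latency in ms, or None when
    the combination is unsupported, e.g. a library that cannot target that hardware.
    """
    trials = []
    for lib, flags, inst in product(libraries, flag_sets, hourly_prices):
        latency = benchmark(lib, flags, inst)
        if latency is None:
            continue  # skip combinations that failed to build or run
        trials.append(Trial(lib, flags, inst, latency,
                            cost_per_million(hourly_prices[inst], latency)))
    if not trials:
        raise ValueError("no supported combination")
    return min(trials, key=lambda t: t.cost_per_million_usd)


if __name__ == "__main__":
    # Placeholder values for illustration only, not real measurements or prices.
    prices = {"gpu-large": 3.00, "gpu-small": 0.50}
    base_ms = {"gpu-large": 4.0, "gpu-small": 6.0}
    lib_factor = {"tvm": 0.8, "onnxruntime": 1.0}

    def fake_benchmark(lib: str, flags: str, inst: str) -> Optional[float]:
        if lib == "onnxruntime" and flags == "fp16" and inst == "gpu-small":
            return None  # pretend this combination fails to compile
        return base_ms[inst] * lib_factor[lib] * (0.5 if flags == "fp16" else 1.0)

    print(find_cheapest(["tvm", "onnxruntime"], ["fp32", "fp16"], prices, fake_benchmark))
```

Note that in this toy setup the fastest trial (on `gpu-large`) is not the cheapest one; ranking by cost rather than raw speed is what surfaces the smaller instance.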

Octomizer

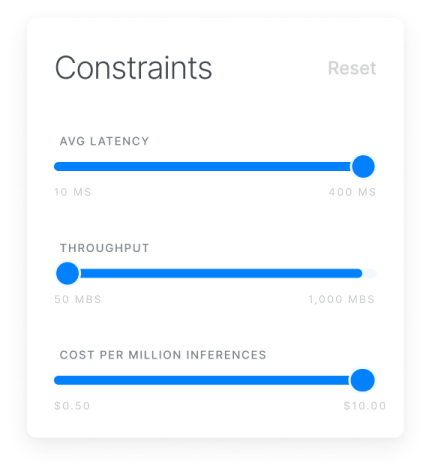

The Octomizer is where the sophisticated work of model acceleration happens. Minimizing the time and cost of each inference gives your end users a hyper-responsive and more affordable application. OctoML lets you choose your constraints for latency and cost. Do you prefer slightly slower but much more affordable inference (for example, for free-tier users), or the absolute fastest and perhaps more costly inference (for paying customers)?
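Choosing between these trade-offs amounts to a constrained selection over benchmark results: the cheapest package that meets a latency budget, or the fastest package that stays within a cost budget. The sketch below illustrates both rules; the `Candidate` type, package names and numbers are hypothetical placeholders, not OctoML output.

```python
from typing import NamedTuple, Optional, Sequence


class Candidate(NamedTuple):
    package: str
    latency_ms: float
    cost_per_million_usd: float


def cheapest_under_latency(cands: Sequence[Candidate],
                           max_latency_ms: float) -> Optional[Candidate]:
    """Lowest-cost package that still meets a latency budget (e.g. a free tier)."""
    ok = [c for c in cands if c.latency_ms <= max_latency_ms]
    return min(ok, key=lambda c: c.cost_per_million_usd, default=None)


def fastest_under_budget(cands: Sequence[Candidate],
                         max_cost_usd: float) -> Optional[Candidate]:
    """Lowest-latency package whose cost stays within a budget (e.g. a paid tier)."""
    ok = [c for c in cands if c.cost_per_million_usd <= max_cost_usd]
    return min(ok, key=lambda c: c.latency_ms, default=None)


if __name__ == "__main__":
    # Placeholder benchmark results, not real measurements.
    cands = [
        Candidate("A", 2.0, 9.0),
        Candidate("B", 5.0, 3.0),
        Candidate("C", 12.0, 1.0),
    ]
    print(cheapest_under_latency(cands, max_latency_ms=15.0))  # free tier picks C
    print(fastest_under_budget(cands, max_cost_usd=10.0))      # paid tier picks A
```

Both functions return `None` when nothing satisfies the constraint, which signals that the budget needs loosening or that more hardware should be explored.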

The Octomizer calls the libraries housed in the Software Catalog, including Apache TVM, ONNX Runtime, TensorRT, OpenVINO, cuDNN and others, to accelerate the model on different CPUs and GPUs. You can choose the specific instance types you want to explore from over a hundred options in AWS, Azure or GCP, or let our automated process recommend instance types for you.

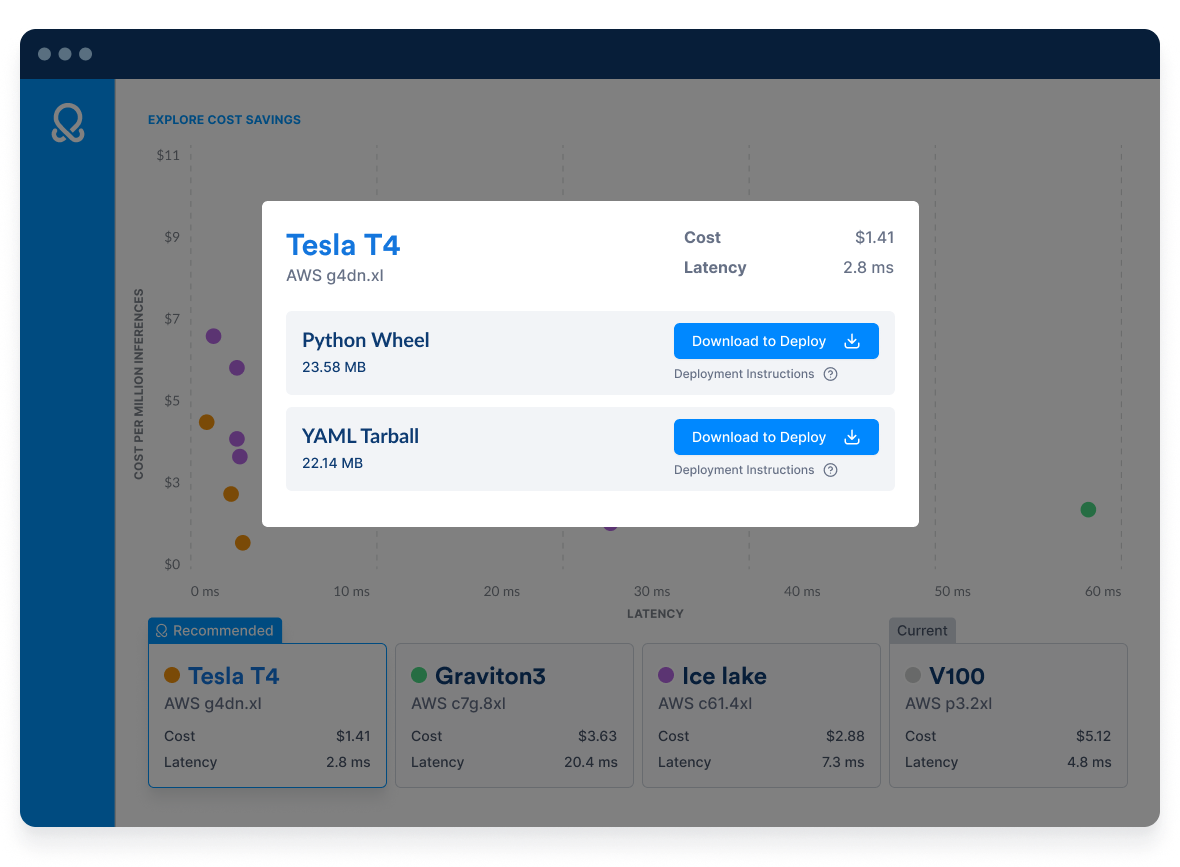

OctoML runs different scenarios with real-world data, leveraging multiple optimization libraries for each hardware instance type. The ideal result of this experimentation is a dot in the lower-left corner of the chart, representing both low inference time and low cost per million inferences.

In the example above, NVIDIA’s V100 GPU on an AWS p3.2xlarge instance was selected as the hardware the model currently runs on. OctoML benchmarked the baseline model on this hardware, which showed a latency of 4.8 ms per inference and a cost of $5.12 per hour. The OctoML-optimized model not only runs faster (3.2 ms vs. 4.8 ms) but is also significantly less expensive ($2.93 vs. $5.12 per hour).

There are even greater savings to be realized if you are willing and able to consider alternative hardware. In the example above, the orange dots represent different engine or configuration settings that were used to accelerate the model on NVIDIA Tesla T4 hardware in AWS.

The baseline running on the NVIDIA Tesla T4 is faster but only slightly less expensive than the V100 baseline. However, the highest-performing T4 package is both faster (2.8 ms) and significantly less costly, at $1.41 per hour, which makes it a compelling choice.
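The "lower-left corner" idea can be stated precisely: a package is worth considering only if no other package is both at least as fast and at least as cheap (the Pareto frontier). The sketch below computes that frontier and the speedup and savings relative to the baseline, using only the three data points quoted above, with costs in the units the example reports. The `Package` type and function names are illustrative, not part of OctoML's product.

```python
from typing import List, NamedTuple


class Package(NamedTuple):
    label: str
    latency_ms: float
    cost: float  # cost figure as quoted in the example


def pareto_frontier(packages: List[Package]) -> List[Package]:
    """Keep the packages that no other package beats on both latency and cost."""
    frontier: List[Package] = []
    best_cost = float("inf")
    # Sweep from fastest to slowest (ties broken by cost): a package survives
    # only if it is strictly cheaper than every package at least as fast.
    for p in sorted(packages, key=lambda p: (p.latency_ms, p.cost)):
        if p.cost < best_cost:
            frontier.append(p)
            best_cost = p.cost
    return frontier


def speedup(baseline: Package, candidate: Package) -> float:
    return baseline.latency_ms / candidate.latency_ms


def savings_pct(baseline: Package, candidate: Package) -> float:
    return 100.0 * (baseline.cost - candidate.cost) / baseline.cost


if __name__ == "__main__":
    baseline = Package("V100 baseline", 4.8, 5.12)
    packages = [
        baseline,
        Package("V100 optimized", 3.2, 2.93),
        Package("T4 best package", 2.8, 1.41),
    ]
    print("frontier:", [p.label for p in pareto_frontier(packages)])
    for p in packages[1:]:
        print(f"{p.label}: {speedup(baseline, p):.2f}x faster, "
              f"{savings_pct(baseline, p):.1f}% cheaper")
```

Run as-is, it reports the T4 package as the only point on the frontier, a 1.50x speedup with 42.8% savings for the optimized V100 package, and a 1.71x speedup with 72.5% savings for the T4 package.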

Once you’ve identified the ideal deployment scenario, it’s time to deploy to the hardware of your choice.

Abstractor

Packaging a model for deployment is no trivial task. Because models must be accelerated for the nuances of specific hardware, an optimized model cannot be moved to different hardware without rerunning the acceleration process.

Compared to the portability that virtual machines, containers and cross-platform frameworks such as React Native or Electron offer software developers, the current state of machine learning deployment leaves ML engineers wanting hardware portability but with no easy path to achieve it.

OctoML’s Abstractor makes model portability a reality by decoupling your ML application from the hardware. This lets you switch hardware types as new hardware is released, or move to different hardware or even different clouds as the requirements of your application shift.
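Decoupling an application from hardware usually means the application codes against a small, hardware-neutral interface and selects the hardware-specific package by name. The sketch below illustrates that general pattern with a `Protocol` and a registry; it is not the Abstractor's API. The names `InferenceBackend`, `register`, `load_package`, `serve` and the `"generic-cpu"` target are hypothetical, and the pure-Python package stands in for a real optimized runtime.

```python
from typing import Callable, Dict, List, Protocol


class InferenceBackend(Protocol):
    """All the application relies on: a predict call, nothing hardware-specific."""

    def predict(self, batch: List[List[float]]) -> List[float]: ...


# Maps a hardware target name to a factory for the package built for it.
_PACKAGES: Dict[str, Callable[[], InferenceBackend]] = {}


def register(target: str):
    """Decorator that records a package factory under a hardware target name."""
    def decorator(factory):
        _PACKAGES[target] = factory
        return factory
    return decorator


def load_package(target: str) -> InferenceBackend:
    factory = _PACKAGES.get(target)
    if factory is None:
        raise ValueError(f"no package built for {target!r}; have {sorted(_PACKAGES)}")
    return factory()


@register("generic-cpu")
class ReferenceCpuPackage:
    """Pure-Python stand-in; a real package would wrap an optimized runtime."""

    def predict(self, batch: List[List[float]]) -> List[float]:
        return [sum(row) for row in batch]


def serve(target: str, batch: List[List[float]]) -> List[float]:
    # Application code: switching hardware means changing only `target`
    # (and having a package registered for it), not this function.
    return load_package(target).predict(batch)


if __name__ == "__main__":
    print(serve("generic-cpu", [[1.0, 2.0], [3.0]]))
```

The design choice here is that hardware knowledge lives entirely in the registered packages, so adding a new GPU target means registering one more package rather than touching application code.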

After the optimization and benchmarking process has completed, OctoML presents you with multiple model packaging options, such as a YAML tarball or a Python wheel.

If you’d like to switch hardware types, simply click on the new hardware and download the set of packages optimized for that hardware type.

OctoML’s Multi-Cloud Fleet provides insights across more than 100 cloud deployment targets on AWS, Azure and GCP, spanning accelerated computing hardware including GPUs, CPUs and NPUs from NVIDIA, Intel, AMD, Arm and AWS (Graviton). The fleet is used for automated compatibility testing, performance analysis and optimization on actual hardware. As new hardware products launch, OctoML adds them to the Multi-Cloud Fleet so customers can stay current with the latest and greatest.

Test drive OctoML to explore cost savings

Now you can achieve the same performance gains as leading technology companies while knowing that you are running on the optimal hardware for your model, all without needing to employ specialized ML staff. Don’t take our word for it: request a demo to see how your specific ML model can be accelerated and deployed using OctoML.