Introducing TVM's MetaSchedule: The Convergence of Performance and User Experience

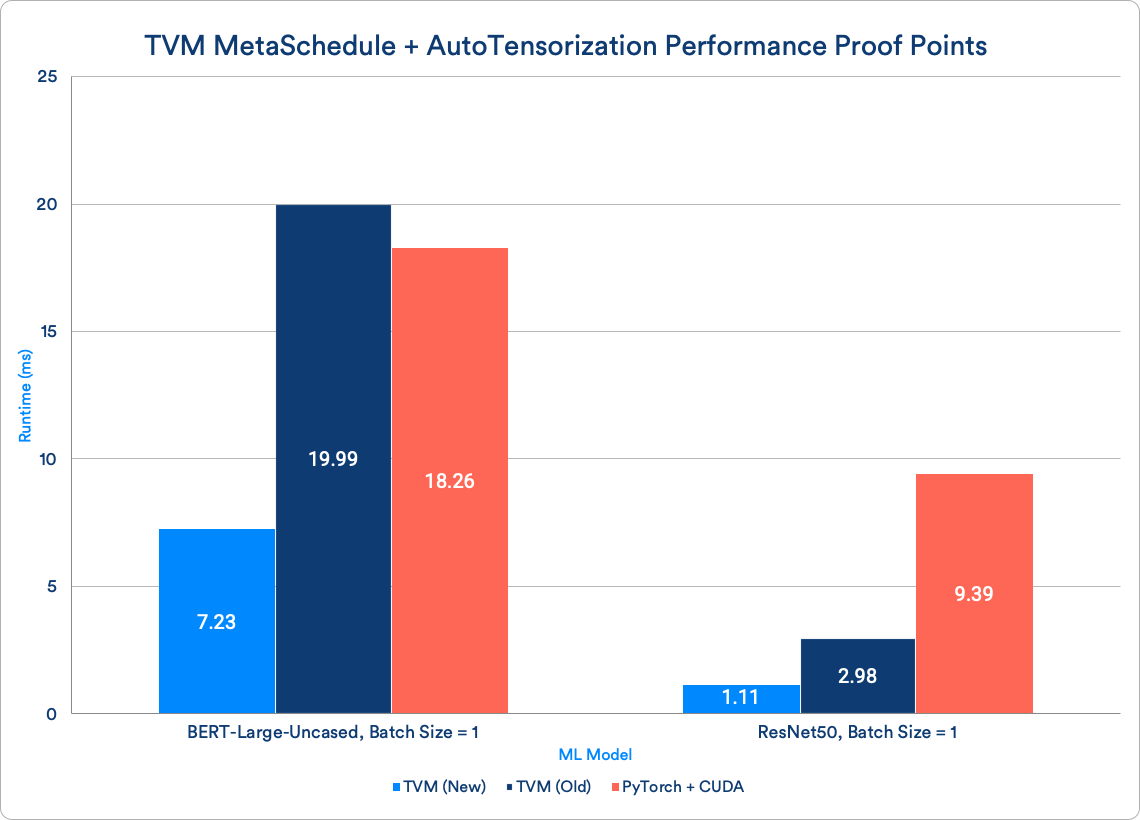

Today, we’re excited to share some early results with the Apache TVM community from two features that have recently been upstreamed: MetaSchedule and AutoTensorization. The results are particularly strong on lower-precision 8-bit integer (INT8) and 16-bit floating-point (FP16) models: as shown in the figure below, MetaSchedule and AutoTensorization achieve more than 2x speedups over existing TVM and PyTorch baselines on ResNet50 and BERT-Large-Uncased in FP16.

Additional details:

1. TVM (New): TVM with MetaSchedule and AutoTensorization enabled.
2. TVM (Old): TVM with AutoScheduler (the predecessor of MetaSchedule).
3. PyTorch + CUDA: Measured via the PyTorch AMP (Automatic Mixed Precision) package.

Versions: tvm=5d15428994fee, cuda=11.6, pytorch=1.13

These options for optimizing ML models each come with their own tradeoffs. For instance, while custom models may achieve the best accuracy and performance on a given hardware target, training and hand-optimizing bespoke models requires building a team of ML engineers with domain expertise in the hardware the model is deployed on. Vendor-optimized libraries, on the other hand, are more approachable for ML engineers with less domain expertise, but their operator, shape, and datatype support is limited and may differ across hardware targets, which constrains a data science team’s ability to experiment with customization.

These tradeoffs can be less than ideal for many organizations, which is where Apache TVM comes in. TVM enables access to high-performance machine learning anywhere for everyone. As TVM’s diverse community of hardware vendors, compiler engineers and ML researchers work together to build a unified, programmable software stack, they enrich the entire ML technology ecosystem and make it accessible to the wider ML community. TVM empowers users to leverage community-driven ML-based optimizations to push the limits and amplify the reach of their research and development, which in turn raises the collective performance of all ML, while driving its costs down.

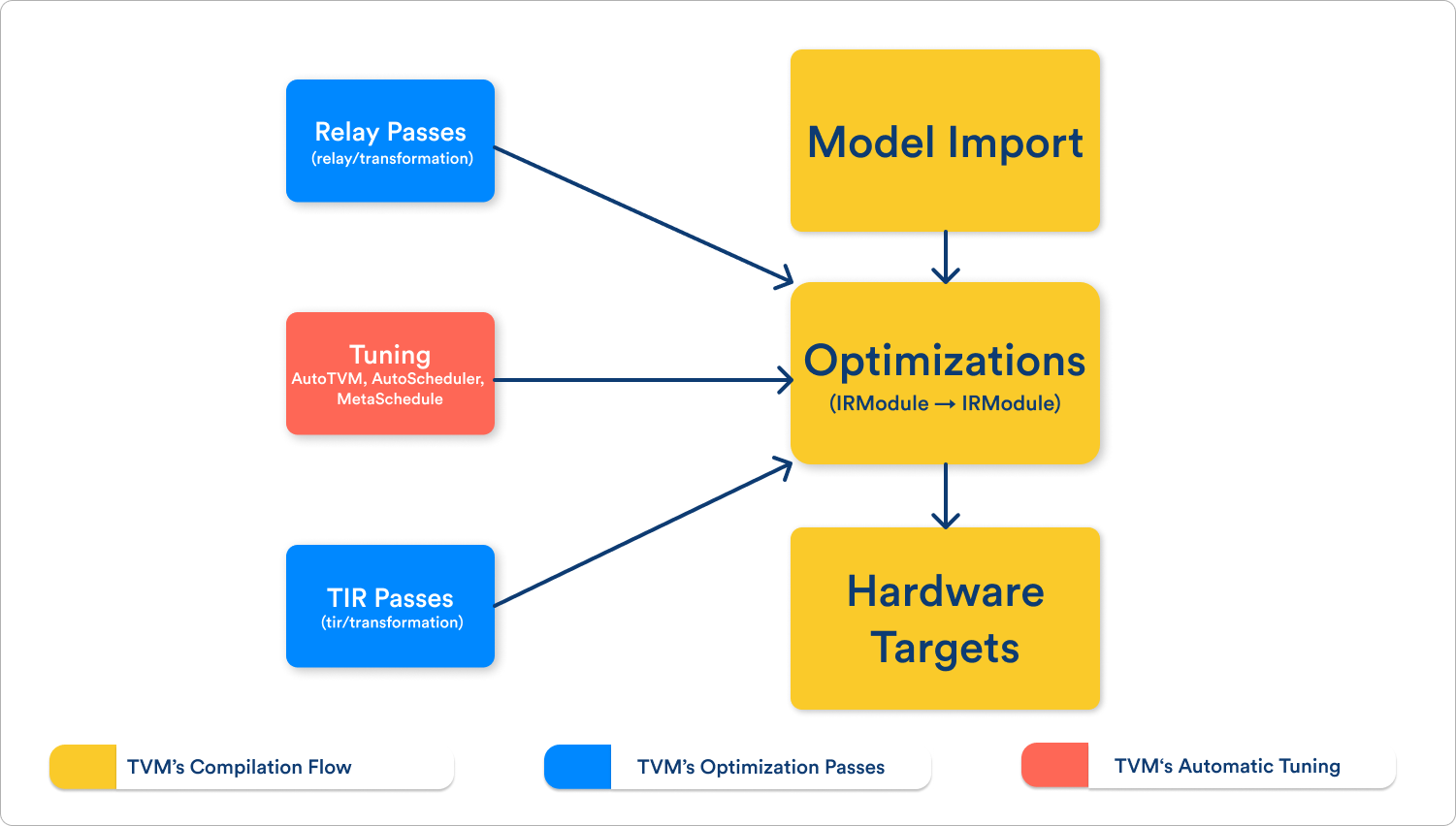

If you’re unfamiliar with TVM, at a high level it works like this:

You start with a model in a commonly used format, such as PyTorch or ONNX.

This model is imported into TVM, which parses the model and transforms it into a high-level format called Relay.

Relay is an intermediate representation of your model. By itself it can’t run any computations, but it does make it possible to reason about your model at a high level. You can combine operations, move operations around, and break work apart without disrupting the computation the model performs.

Relay itself is built on an even lower-level Tensor Intermediate Representation (TIR). TIR sits very close to the hardware model, giving you the opportunity to reorder operations with fine precision to match the particular requirements and performance features of a broad set of hardware.

At the lowest level, TVM can compile TIR to machine code, targeting any hardware you want to deploy your model to.

High-level Apache TVM architectural diagram

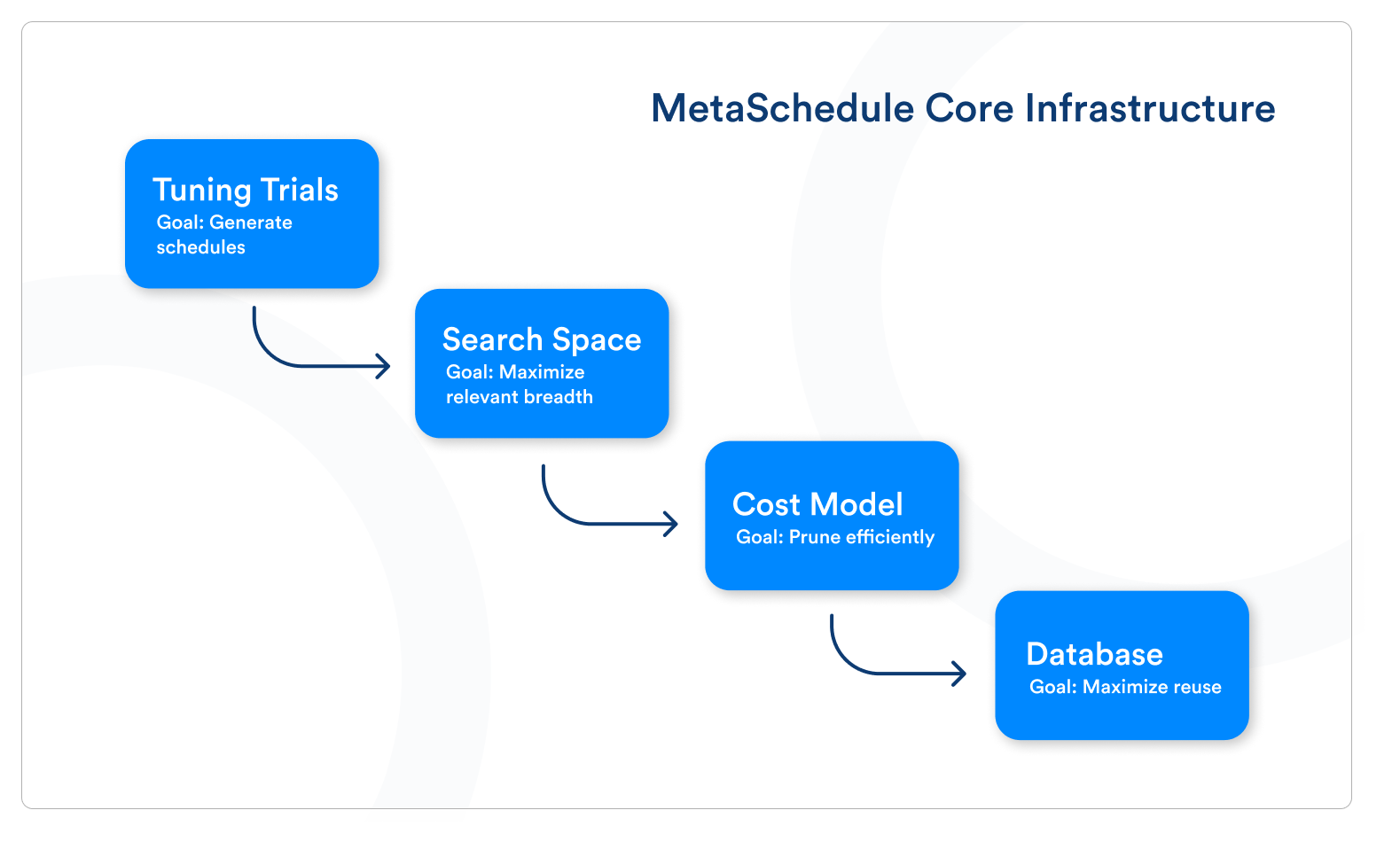
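To make the layering described above more concrete, here is a minimal toy sketch in plain Python. It does not use TVM, and every name in it (`Op`, `fuse`, `lower`) is hypothetical. It only illustrates the idea of rewriting a model at a high level (combining operations) before lowering it to an explicit loop that can actually run.

```python
# Toy illustration only: none of these names are TVM APIs.
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Op:
    """A node in a tiny, Relay-like high-level graph: a named elementwise function."""
    name: str
    fn: Callable[[float], float]


def fuse(ops: List[Op]) -> Op:
    """High-level rewrite: combine a chain of elementwise ops into a single op."""
    def fused(x: float) -> float:
        for op in ops:
            x = op.fn(x)
        return x
    return Op("+".join(op.name for op in ops), fused)


def lower(op: Op) -> Callable[[List[float]], List[float]]:
    """Loop-level form (loosely TIR-like): an explicit loop over a buffer."""
    def kernel(buf: List[float]) -> List[float]:
        out = [0.0] * len(buf)
        for i in range(len(buf)):
            out[i] = op.fn(buf[i])
        return out
    return kernel


# A three-op "model": scale, add a bias, then apply ReLU.
graph = [
    Op("scale", lambda x: 2.0 * x),
    Op("bias", lambda x: x + 1.0),
    Op("relu", lambda x: max(x, 0.0)),
]

fused_op = fuse(graph)      # one op instead of three
kernel = lower(fused_op)    # one loop instead of three passes over the data
result = kernel([-3.0, 0.0, 1.5])
print(fused_op.name, result)
```

The fused kernel visits each element once while computing the same values as running the three operations one after another. This is the kind of computation-preserving rewrite a high-level representation makes easy to express.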

MetaSchedule is a natural evolution of TVM’s optimization process, which uses ML to optimize ML (also known as autotuning). It builds upon the foundations of TIR, with a powerful templating system that brings human expertise into guiding an ML-based search process to produce highly optimized models. It captures the domain knowledge of ML systems experts in a way that could previously only be applied in bespoke, hand-written lowerings.

Apache TVM MetaSchedule core infrastructure diagram

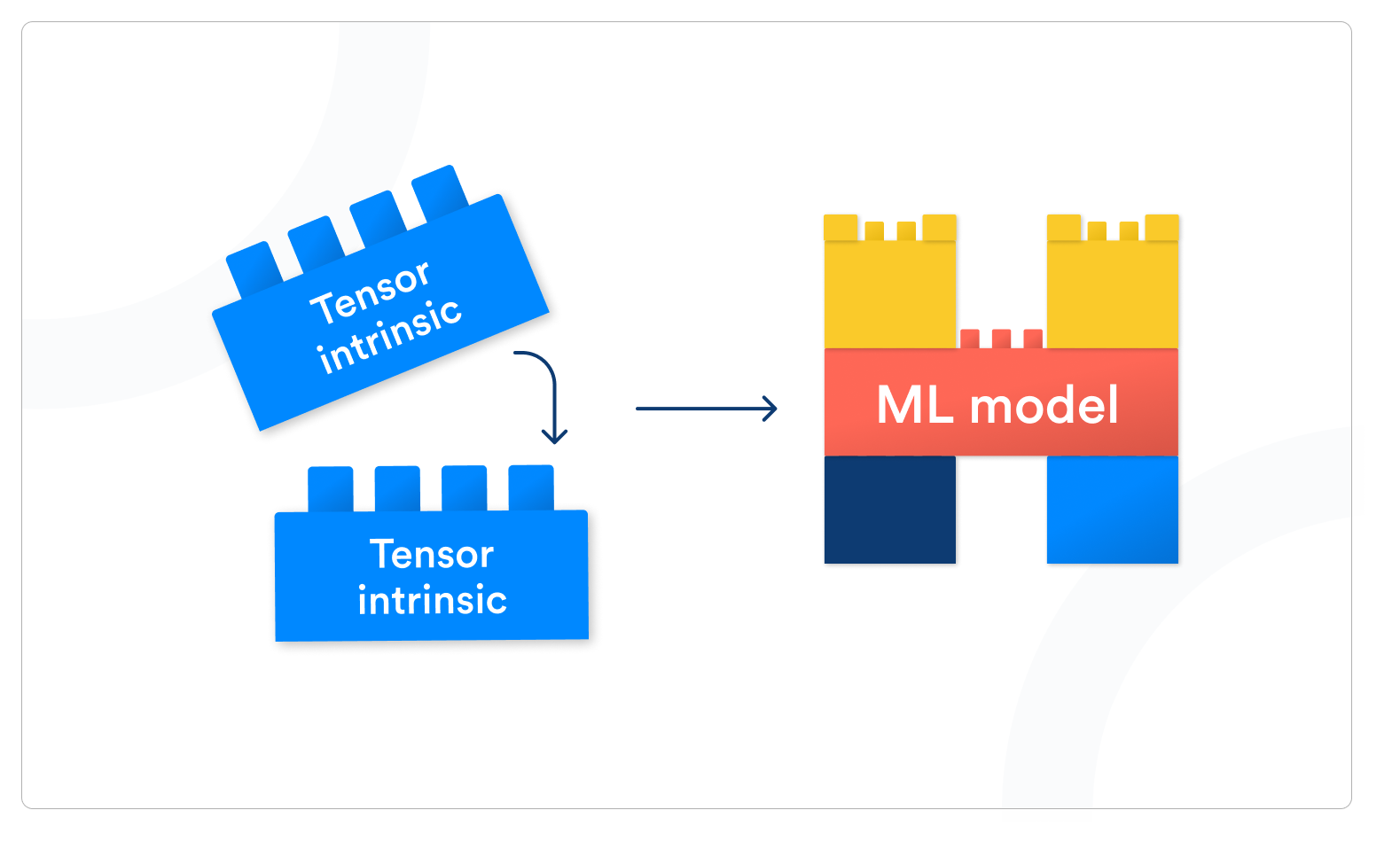
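The autotuning idea can be sketched with a small toy in plain Python. This is not MetaSchedule's API, and the names `tiled_matmul`, `measure`, and `tune` are hypothetical. A human-written template (a tiled matrix multiply) exposes one tunable knob, and a search loop measures each candidate and keeps the fastest. For simplicity the sketch measures every candidate exhaustively, whereas a real autotuner would guide the search with a learned cost model.

```python
# Toy illustration only: none of these names are TVM or MetaSchedule APIs.
import random
import time
from typing import List

Matrix = List[List[float]]


def tiled_matmul(a: Matrix, b: Matrix, tile: int) -> Matrix:
    """The 'template': a matmul whose loop tiling is left as a tunable knob."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for i in range(i0, min(i0 + tile, n)):
                for j in range(j0, min(j0 + tile, m)):
                    acc = 0.0
                    for p in range(k):
                        acc += a[i][p] * b[p][j]
                    c[i][j] = acc
    return c


def measure(a: Matrix, b: Matrix, tile: int) -> float:
    """Run one candidate and return its wall-clock time in seconds."""
    start = time.perf_counter()
    tiled_matmul(a, b, tile)
    return time.perf_counter() - start


def tune(a: Matrix, b: Matrix, candidates: List[int]) -> int:
    """Search the space defined by the template and return the fastest tile size."""
    timings = {tile: measure(a, b, tile) for tile in candidates}
    return min(timings, key=timings.get)


random.seed(0)
n = 16
a = [[random.random() for _ in range(n)] for _ in range(n)]
b = [[random.random() for _ in range(n)] for _ in range(n)]

candidates = [1, 2, 4, 8, 16]
best_tile = tune(a, b, candidates)

# Every candidate computes the same result; only the loop structure differs.
reference = tiled_matmul(a, b, n)
tuned = tiled_matmul(a, b, best_tile)
print("best tile size:", best_tile)
```

The division of labor is the point of the sketch: the expert decides which transformations are worth considering (here, tiling), and the search decides which concrete parameters work best on the machine at hand.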

AutoTensorization takes a lower-level approach to optimization. It reuses MetaSchedule’s pattern-matching technology to identify small TIR subgraphs, also known as tensor intrinsics, that map to the highly optimized, lower-level microkernels found in vendor libraries. This allows TVM to take advantage of well-understood, hardware-specific, high-performance optimizations and to apply them across model architectures and end-user applications.

AutoTensorization diagram: tensor intrinsics for different operators and shapes can be pieced together to form an ML model (much like using LEGO blocks to build a LEGO castle).
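As a rough illustration of the building-block idea, the toy below (plain Python, not TVM code) rewrites a matrix multiply so that its innermost work is done by a fixed-shape microkernel. The function `mma_2x2` and the 2x2 tile shape are hypothetical stand-ins for a hardware intrinsic. In practice such a block would map to a vendor-provided instruction or kernel rather than Python loops.

```python
# Toy illustration only: `mma_2x2` is a hypothetical stand-in for a hardware intrinsic.
from typing import List

Matrix = List[List[int]]
TILE = 2  # assumed shape handled by the intrinsic


def mma_2x2(a: Matrix, b: Matrix, c: Matrix, i0: int, j0: int, k0: int) -> None:
    """Microkernel: C[i0:i0+2, j0:j0+2] += A[i0:i0+2, k0:k0+2] @ B[k0:k0+2, j0:j0+2]."""
    for i in range(TILE):
        for j in range(TILE):
            for k in range(TILE):
                c[i0 + i][j0 + j] += a[i0 + i][k0 + k] * b[k0 + k][j0 + j]


def matmul_naive(a: Matrix, b: Matrix) -> Matrix:
    """Reference implementation with plain scalar loops."""
    n, kk, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            for k in range(kk):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_tensorized(a: Matrix, b: Matrix) -> Matrix:
    """Same computation, with the inner 2x2x2 block replaced by the microkernel."""
    n, kk, m = len(a), len(b), len(b[0])
    # The intrinsic only applies when every dimension is a multiple of its shape.
    assert n % TILE == 0 and kk % TILE == 0 and m % TILE == 0
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, TILE):
        for j0 in range(0, m, TILE):
            for k0 in range(0, kk, TILE):
                mma_2x2(a, b, c, i0, j0, k0)
    return c


a = [[i + j for j in range(4)] for i in range(4)]
b = [[i * j for j in range(4)] for i in range(4)]
tensorized = matmul_tensorized(a, b)
expected = matmul_naive(a, b)
print(tensorized == expected)
```

The outer loops only decide how the fixed-size blocks are arranged, so the same microkernel can be reused for any operator or shape that decomposes into blocks of that size.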

If you’d like to learn more about MetaSchedule and AutoTensorization, come view the TVM Community’s poster at NeurIPS 2022 in New Orleans, or read the pre-print of their paper. Additionally, the OctoML team is currently working on building out robust documentation, tutorials, and demos for MetaSchedule and AutoTensorization. Stay tuned for more details!