Reduce LLM costs for text summarization by over 50% with Mixtral on OctoAI

Large language models (LLMs) are emerging as a go-to tool for productivity solutions, and text summarization is a domain that clearly illustrates this value. The ability of LLMs to extract semantic information from text and to generate readable text based on this information makes them particularly well suited to text generation tasks. But text summarization solutions can quickly get unwieldy as text volumes grow and start hitting scalability and cost constraints.

This blog demonstrates the cost benefits of using Mixtral on OctoAI over GPT 3.5 (including the recently announced lower cost 0125 version). We demonstrate here how switching to Mixtral on OctoAI can deliver a 1.5x to 3x lower LLM usage bill for your solution compared to GPT 3.5 on OpenAI and over an order of magnitude lower costs compared to GPT 4.

Get started with Mixtral on OctoAI by signing up for the OctoAI Text Gen Solution.

Sample application and LLM benchmark setup

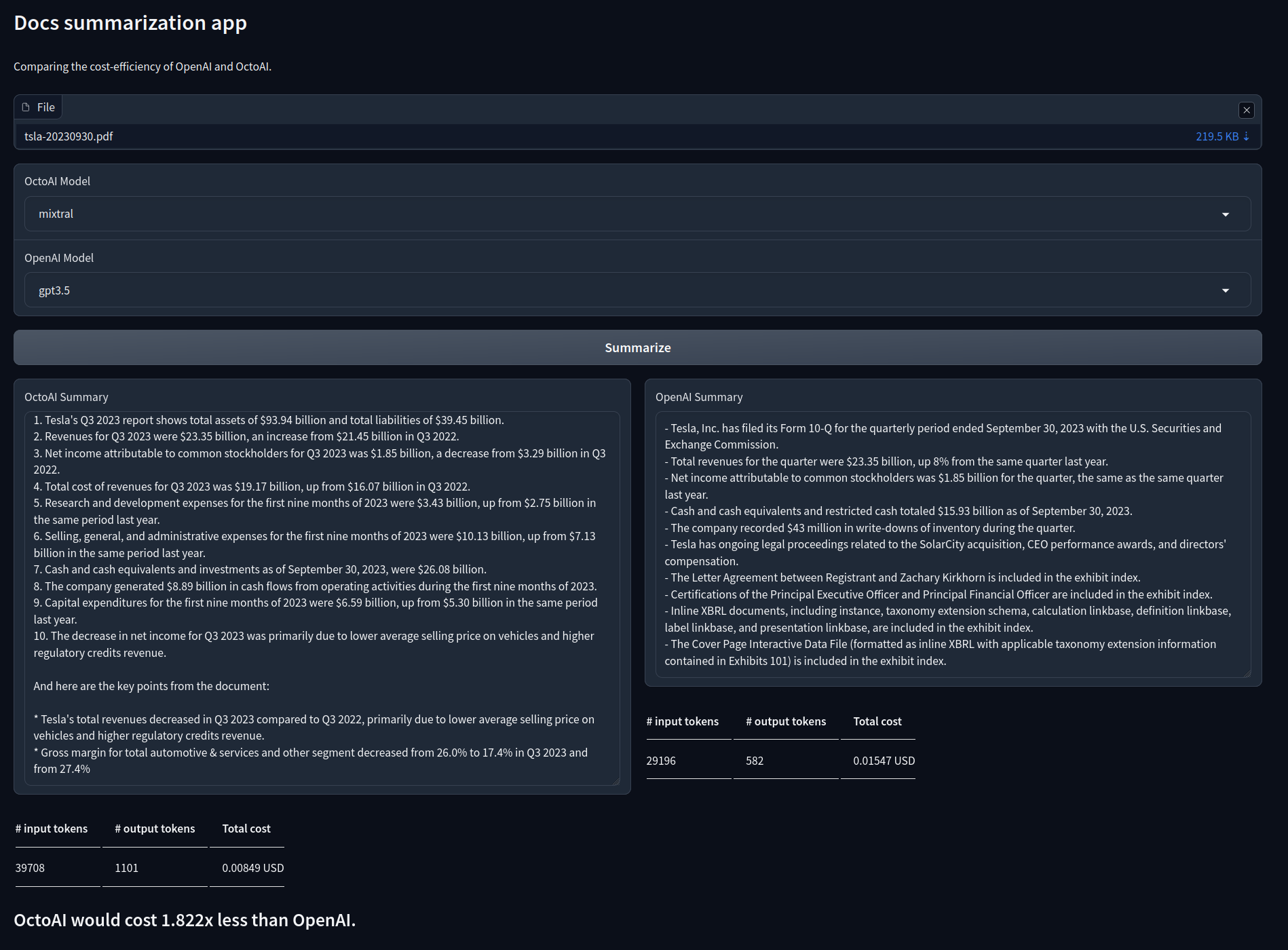

For this exercise, we will use a purpose-built application designed to extract text from a PDF file and generate a summary. The application uses common libraries: UnstructuredPDFLoader, through the LangChain wrapper, for PDF text extraction; text chunking to scale from documents of thousands of words to documents of millions of words; and the OpenAI and OctoAI client libraries for inference. The application and the approach are designed to mimic typical enterprise text and document summarization needs.

The primary goal of this application is to run text summarization using OpenAI GPT 3.5 and Mixtral and compare the results. You can also easily modify it to compare other open source models on OctoAI. The application can be downloaded, run, and modified for your needs from the OctoAI Text Gen cookbook repository on our GitHub.

Two things to mention at this stage. First, this benchmark is not intended to be a comparison of quality. We have seen in leaderboards (including the popularly referenced LMSys Chatbot Arena Leaderboard) that Mixtral outperforms the current/latest GPT 3.5 in quality, and that this results in on-par performance for general purpose natural language processing (NLP) tasks like text summarization. Second, further optimizations are possible using fine-tuned versions of Mixtral specialized for summarization, or for the specific types of documents being summarized (e.g. healthcare, finance, etc.). These are not considered for the purposes of this evaluation.

OctoAI and OpenAI Python SDKs share the same interface, so we will obtain the counts for input and output tokens from the respective SDKs. Both return the token usage per request in their responses. Based on the latest OpenAI pricing change and OctoAI Cloud pricing, here are the relevant costs for input and output tokens for the models being evaluated.

| Model | 1K Input Tokens (USD) | 1K Output Tokens (USD) |
|---|---|---|
| mixtral-8x7b-instruct | 0.0003 | 0.0005 |
| gpt-3.5-turbo-0125 (preview) | 0.0005 | 0.0015 |
| gpt-3.5-turbo-1106 | 0.0010 | 0.0020 |
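As a rough illustration of how these prices turn into a per-request bill, the sketch below multiplies token counts by the prices listed above. The `prompt_tokens` and `completion_tokens` field names follow the usage object returned by the OpenAI Python SDK; the `request_cost` helper and the sample token counts are hypothetical and are not taken from the benchmark application.

```python
from types import SimpleNamespace

# Prices in USD per 1K tokens, copied from the pricing table in this post.
PRICES_PER_1K = {
    "mixtral-8x7b-instruct": {"input": 0.0003, "output": 0.0005},
    "gpt-3.5-turbo-0125": {"input": 0.0005, "output": 0.0015},
    "gpt-3.5-turbo-1106": {"input": 0.0010, "output": 0.0020},
}


def request_cost(model, usage):
    """Return the USD cost of one request from its token usage (hypothetical helper)."""
    price = PRICES_PER_1K[model]
    return (
        usage.prompt_tokens * price["input"]
        + usage.completion_tokens * price["output"]
    ) / 1000


# Made-up token counts standing in for the `usage` field of an SDK response.
usage = SimpleNamespace(prompt_tokens=12_000, completion_tokens=500)
for model in PRICES_PER_1K:
    print(f"{model}: ${request_cost(model, usage):.5f}")
```

In a real comparison each model would report its own token counts, since the tokenizers differ; the same counts are reused here only to keep the example short.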

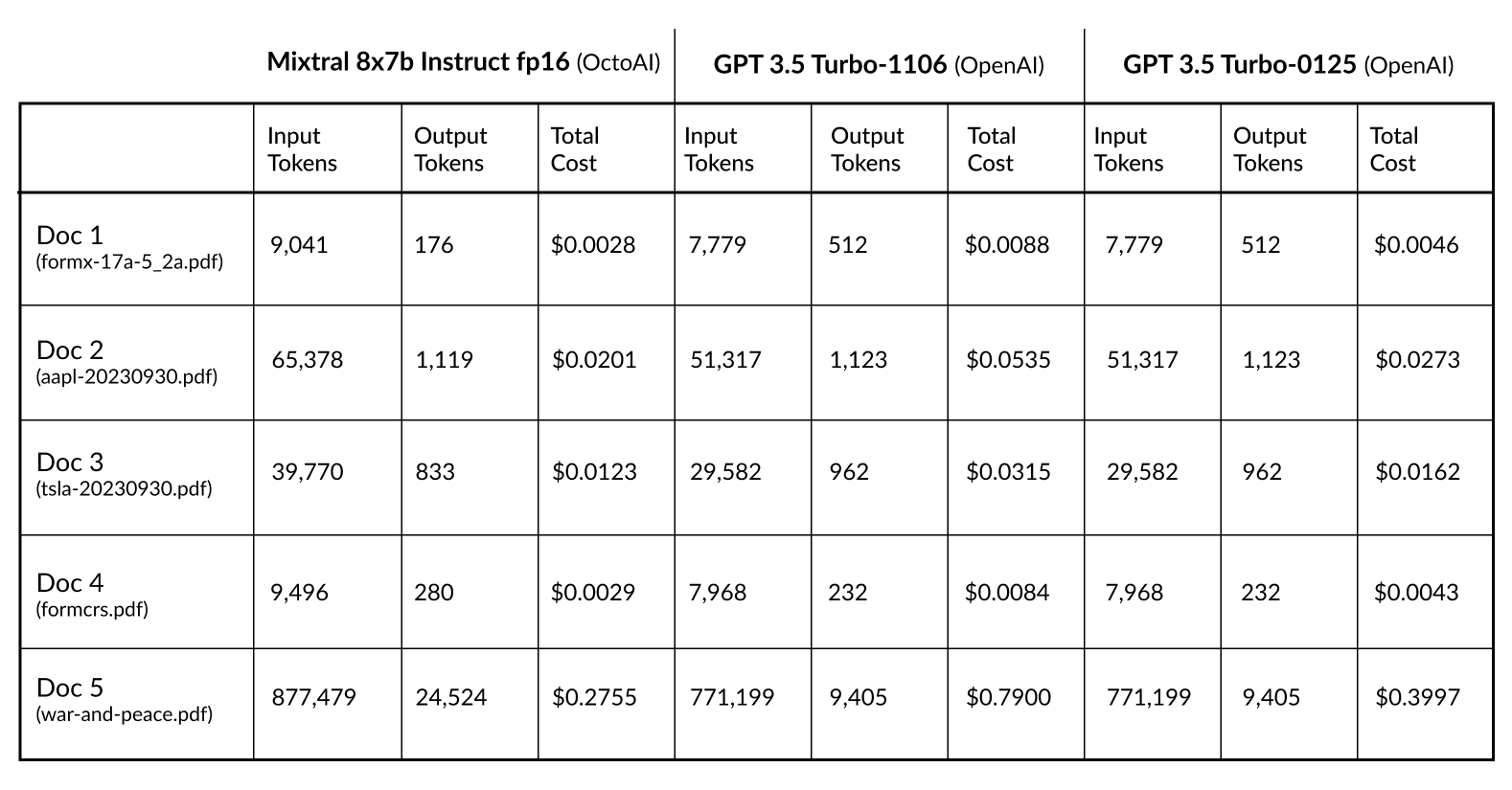

Because we’re interested in document summarization, we’ll use a few PDFs of various sizes. We’ll use two guidelines from the SEC that fit into the context window of both models. We’ll also use a Tesla quarterly report and an Apple yearly report, also from the SEC. These documents contain over 25 and 40 thousand words, respectively, and won’t fit in the context window of both models. Finally, the last document is War and Peace, by Leo Tolstoy, which has almost 600 thousand words. For the latter three documents, we’ll use a MapReduce summarization approach, splitting the documents into chunks of approximately 15k tokens for GPT-3.5-Turbo and 32k tokens for Mixtral. If you modify the application for other models, keep in mind that you need to set aside some of the token allowance for the system prompt and the output.
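The MapReduce approach described above can be sketched in a few lines. This is a minimal illustration, not the benchmark application's code: `split_into_chunks` and `map_reduce_summarize` are hypothetical names, the `tokens_per_word` estimate of 1.5 is a rough assumption (a real implementation would count tokens with the model's tokenizer), and `summarize` stands in for a call to the LLM.

```python
def split_into_chunks(text, max_tokens, tokens_per_word=1.5):
    """Split text on word boundaries into chunks of roughly max_tokens each.

    tokens_per_word is a rough assumption, not a measured value.
    """
    words = text.split()
    words_per_chunk = max(1, int(max_tokens / tokens_per_word))
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


def map_reduce_summarize(text, summarize, max_tokens, tokens_per_word=1.5):
    """Summarize each chunk (map), then summarize the joined summaries (reduce)."""
    chunks = split_into_chunks(text, max_tokens, tokens_per_word)
    if len(chunks) <= 1:
        # The text fits in a single request, so summarize it directly.
        return summarize(chunks[0] if chunks else "")
    combined = "\n".join(summarize(chunk) for chunk in chunks)
    if len(combined.split()) >= len(text.split()):
        # The summaries did not shrink the text; stop instead of looping forever.
        return summarize(combined)
    # The combined summaries may still be too long, so reduce again.
    return map_reduce_summarize(combined, summarize, max_tokens, tokens_per_word)


# Stand-in "summarizer" that keeps the first two words of each chunk.
def first_two_words(chunk):
    return " ".join(chunk.split()[:2])


document = " ".join(f"w{i}" for i in range(100))
print(map_reduce_summarize(document, first_two_words, max_tokens=15))
```

When choosing `max_tokens`, subtract the tokens reserved for the system prompt and the output from the model's context window, as noted above.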

Evaluation results: 3x cheaper Mixtral

The table below captures the results of our evaluations across a range of document sizes from around ten thousand to over half a million words.

As you can see, we have achieved almost 3x cheaper summarizations, even after the most recent OpenAI pricing changes. Summarization is very input-heavy, so based on the per-token price differences with OpenAI you’d expect it to be up to 3.3x cheaper, but differences in the tokenization schemes of Mixtral and GPT-3.5 reduce the cost efficiency to roughly 3x. Notice that Mixtral needs about 15%-30% more input tokens to represent the same text as GPT-3.5-Turbo, but this is far outweighed by the lower price per token. 1.5x to 3x cheaper inference with the same quality is a huge improvement.
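The effect of tokenization on the price advantage can be checked with simple arithmetic. The sketch below covers input tokens only and uses the per-1K input prices for gpt-3.5-turbo-1106 and mixtral-8x7b-instruct quoted earlier; `effective_input_ratio` is a hypothetical helper, not part of the benchmark application.

```python
def effective_input_ratio(gpt_price, mixtral_price, extra_tokens):
    """How many times cheaper Mixtral input is once it needs extra_tokens more tokens."""
    return gpt_price / (mixtral_price * (1 + extra_tokens))


# 15% and 30% more input tokens for Mixtral, the range quoted above.
for extra in (0.15, 0.30):
    ratio = effective_input_ratio(0.0010, 0.0003, extra)
    print(f"{extra:.0%} more tokens: {ratio:.2f}x cheaper input")
```

Under these assumptions the nominal 3.3x input price gap shrinks to roughly 2.6x to 2.9x. Output tokens, which are priced 4x lower on Mixtral than on gpt-3.5-turbo-1106, push the overall figure back up slightly.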

These benchmarks show the result of a fairly simple swap of models, and are only indicative of the cost and quality improvements possible as applications tap into the power of custom-tuned models for topic- and domain-specific summarization.

Try the OctoAI Text Gen Solution today

You can get started with the OctoAI Text Gen Solution today at no cost, with models including Llama 2, Code Llama 70B and Mixtral. The application used for the benchmarking in this blog is available in the OctoAI Text Gen cookbook repository on our GitHub.

If you are currently using a closed source LLM like GPT-3.5-Turbo or GPT-4 from OpenAI, we also have a new promotion to help you accelerate integration of open source LLMs in your applications. Read more about this in the OctoAI Model Remix Program introduction.