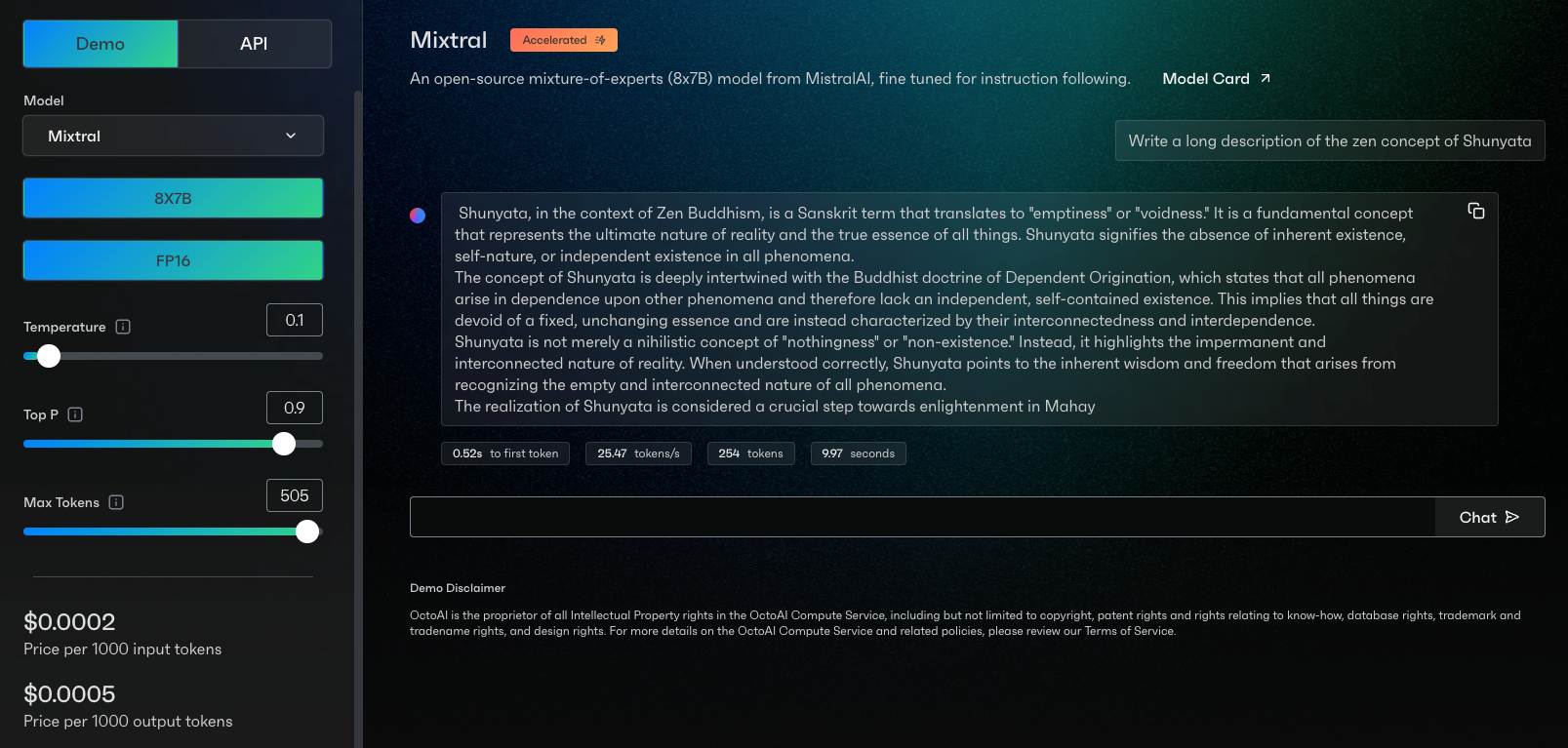

We’re excited to announce today that Mistral’s Mixtral 8x7B Instruct large language model (LLM) is now available on the OctoAI Text Gen Solution. Customers can get started with Mixtral on OctoAI today and benefit from:

- Quality competitive with GPT-3.5, with the flexibility of open source software (OSS)
- One unified, OpenAI-compatible API for Mixtral and other OSS models
- A price per token 4x lower than GPT-3.5

Mixtral 8x7B brings Mixture of Experts (MoE) to open source LLMs

Since the “drop” of the Mixtral model last week (Dec 12, 2023), the LLM community has been buzzing with speculation about the new model. Details that Mistral AI released this week confirmed that Mixtral implements a sparse Mixture of Experts (MoE) architecture, and included competitive comparisons showing Mixtral outperforming both Llama 2 70B and GPT-3.5 on a number of LLM benchmarks.
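Mistral AI describes the routing this way: each Mixtral layer contains 8 expert feed-forward blocks, and for every token a router network chooses two of them and adds their outputs together, weighted by the router's scores. Because only the selected experts run, each token uses only a fraction of the model's parameters. The toy Python sketch below illustrates top-2 routing under simplifying assumptions: the experts are arbitrary functions on plain lists, the router is a single linear projection, and the gate weights are a softmax over the selected experts' logits. It is an illustration of the technique, not Mistral's implementation; the router weights and toy experts are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_moe_layer(
    x: Vector,
    router_weights: List[Vector],
    experts: List[Expert],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token's hidden state x to its top_k experts.

    Returns the combined output and the indices of the experts that ran.
    """
    # Router: one logit per expert from a linear projection of x.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the top_k experts by logit (the "sparse" part).
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Gate weights: softmax over the chosen experts' logits only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # unchosen experts are never evaluated
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # 8 toy experts, echoing Mixtral's 8 experts per layer; expert i scales its input by i + 1.
    experts = [lambda v, s=i + 1: [s * e for e in v] for i in range(8)]
    router = [[float(i), 0.0] for i in range(8)]  # hypothetical router weights
    out, chosen = sparse_moe_layer([1.0, 0.0], router, experts, top_k=2)
    print(chosen, out)
```

Setting `top_k=len(experts)` would turn this into a dense mixture in which every expert runs for every token; the sparse version gives that up in exchange for lower per-token compute.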

The benchmark comparisons shared in Mistral’s release blog seem to live up to the hype. Mixtral 8x7B outperforms both GPT-3.5 and Llama 2 70B on several popular benchmarks, including GSM8K, MMLU, and ARC, and it is now among the top 10 models on the Hugging Face Open LLM Leaderboard. Together, these results make Mixtral a compelling option for builders looking for high-quality open source LLM alternatives to OpenAI.

Faster, Cheaper Mixtral on OctoAI

The Mixtral 8x7B Instruct v0.1 model is available alongside the current Llama 2, Code Llama, and Mistral models on the OctoAI Text Gen Solution. Mixtral on OctoAI gives customers the same efficiency and ease of use as the rest of the OctoAI Text Gen Solution, including:

- The cost and speed benefits of OctoAI’s compute service and model acceleration
- Easy adoption: you can start using Mixtral through the same unified API endpoint and OpenAI-compatible APIs as every other LLM on OctoAI
- The reliability and scalability of the proven OctoAI platform, which already processes millions of customer inferences every day
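Because the API follows OpenAI’s chat-completions format, calling Mixtral can look like any other OpenAI-style request. The sketch below uses only the Python standard library. The base URL, the model identifier, and the `OCTOAI_TOKEN` environment variable name are assumptions for illustration, not values taken from this announcement; check OctoAI’s documentation for the real ones.

```python
import json
import os
import urllib.request

# ASSUMPTIONS: placeholder endpoint and model identifier; verify against OctoAI's docs.
ASSUMED_BASE_URL = "https://text.octoai.run/v1"
ASSUMED_MODEL = "mixtral-8x7b-instruct"


def build_chat_request(prompt: str, api_token: str,
                       model: str = ASSUMED_MODEL,
                       base_url: str = ASSUMED_BASE_URL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions POST request."""
    payload = {
        "model": model,  # the only field that selects which LLM answers
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": max_tokens,
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(prompt: str, api_token: str, **kwargs) -> str:
    """Send the request and return the first choice's message text."""
    req = build_chat_request(prompt, api_token, **kwargs)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the reply at choices[0].message.content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    token = os.environ.get("OCTOAI_TOKEN")  # hypothetical environment variable name
    if token:
        print(chat("Explain mixture of experts in one sentence.", token))
```

Since only the `model` field names the LLM, trying another model on the same endpoint would be a one-argument change, for example `chat(prompt, token, model="...")` with an identifier from OctoAI’s model list.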

We prioritized adding Mixtral primarily in response to the questions and requests we’ve received from our community, and in keeping with the spirit of collaborative curation we use to expand the OctoAI model library.

“Within days of Mixtral’s release, we have been able to try it alongside our other models on OctoAI. The unified API simplified this adoption, and made it easy to try the new model as soon as it was added. This speed and flexibility is exactly why our engineers enjoy working with OctoAI.”

Matt Shumer, CEO & Co-Founder @ Otherside AI

Try Mixtral 8x7B Instruct on OctoAI today

You can get started with the Mixtral 8x7B Instruct model today at no cost by signing up for the OctoAI Text Gen Solution.

If you are currently using a closed-source LLM like GPT-3.5-Turbo or GPT-4 from OpenAI, we have a new promotion to help you accelerate the integration of open source LLMs into your applications. Read more about it in the OctoAI Model Remix Program introduction.

You can also learn more about Mixtral in Mistral’s release announcement, and engage with the broader OctoAI team and community by joining our Discord.