Making the Llama 2 Herd Work for You on OctoAI

OpenAI deserves its flowers for creating the “iPhone opportunity” for the AI industry with ChatGPT. Thanks to the magnanimity of Meta, the incredible power of world-class LLMs will be accessible to everyone. Meta has catalyzed an infinite opportunity with its Android moment in releasing Llama 2. Sharing a high-quality, commercially viable open source Large Language Model (LLM) allows every company and developer to shine, not just one.

Llama 2 unlocks open source innovation around text generation

In conversations with our users, we’ve heard that the limited availability of high-quality open source LLMs has been an obstacle to innovation and momentum around text generation use cases. Application developers have thrived in the open source world, and the feeling that they have no alternative to dominant closed models has been unsettling. ChatGPT is a staggering piece of ingenuity, and something they love tinkering and prototyping with. But locking into a single vendor’s API just didn’t feel right. That’s why our customer conversations about Llama 2 over the past month have been so delightful.

When we delivered Llama 2 7B Chat in July, our customers were excited. But they wanted to better understand and explore the full Llama 2 herd and choose the model that was right for them. Instead of imposing our point of view, we’re offering developers a range of options across the entire herd: 7B, 13B, and 70B. We are the only GenAI infrastructure platform that runs all of these options. Moreover, we are transparently sharing with developers the additional choices they can make.

Flexibility and options within the fastest Llama 2 herd

Llama 2 offers developers a range of models, and the optimal choice of model is not always the largest model on the most powerful GPU. A big part of the power introduced by Llama 2 is the flexibility and control it offers to developers to make tradeoffs and build on the right model for the right need. Depending on the use case, the right choice may vary from the small Llama 2 7B Chat for targeted cost-sensitive applications like product Q&A chatbots, to larger models for less bounded use cases like event data summarization.

OctoAI delivers all of these options to developers, allowing the transparency and visibility to evaluate the options available and adopt the one that best fits the use case.

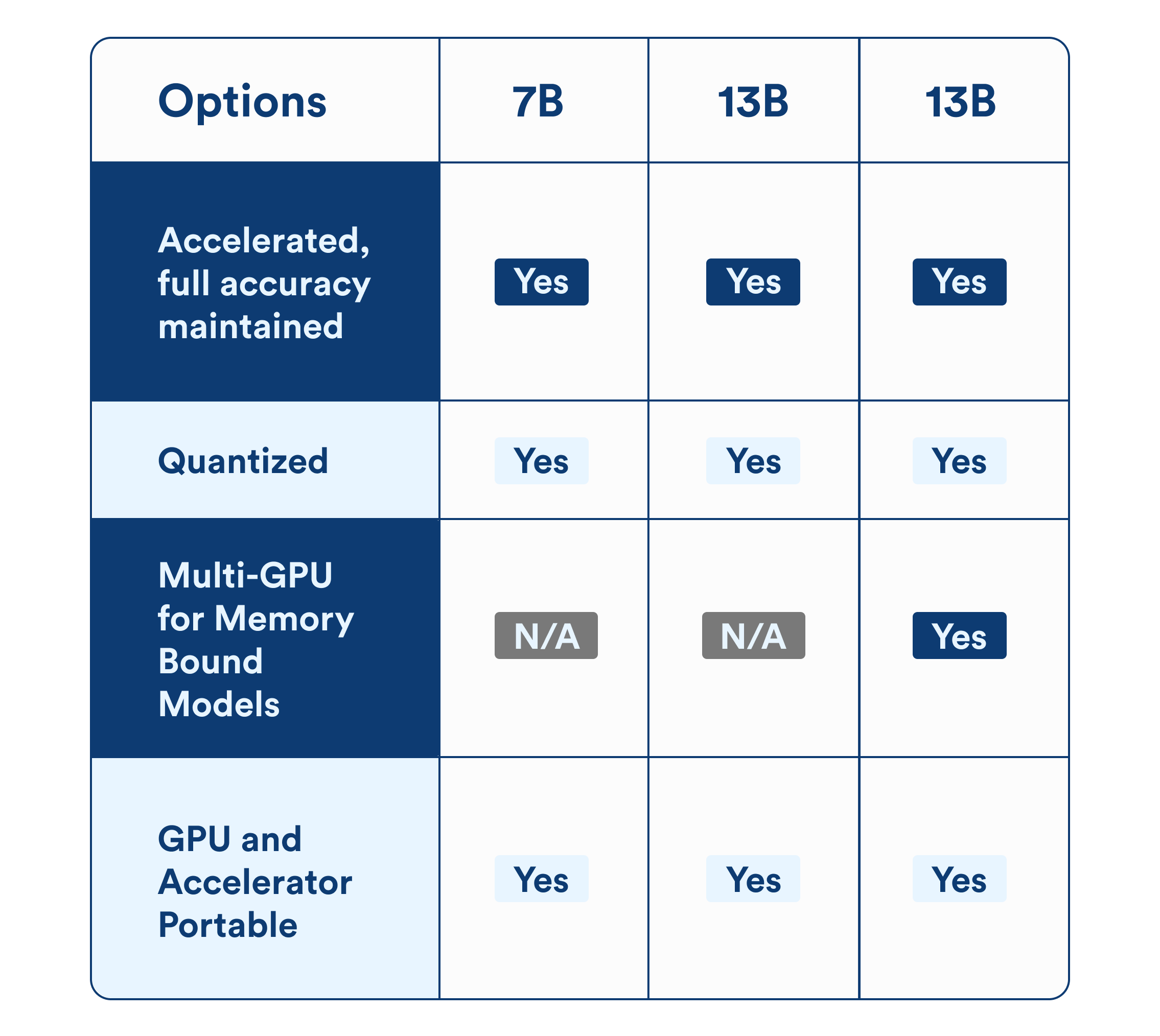

Let’s start by looking at the full-accuracy Llamas in the herd: our accelerated (non-quantized) 7B, 13B, and 70B. These configurations use compilation and runtime-level techniques built on OctoAI’s machine learning compilation (MLC) expertise and Apache TVM IP. With these, OctoAI delivers to developers the fastest Llama 2 available in the market today, without a loss in quality or model parameter accuracy.

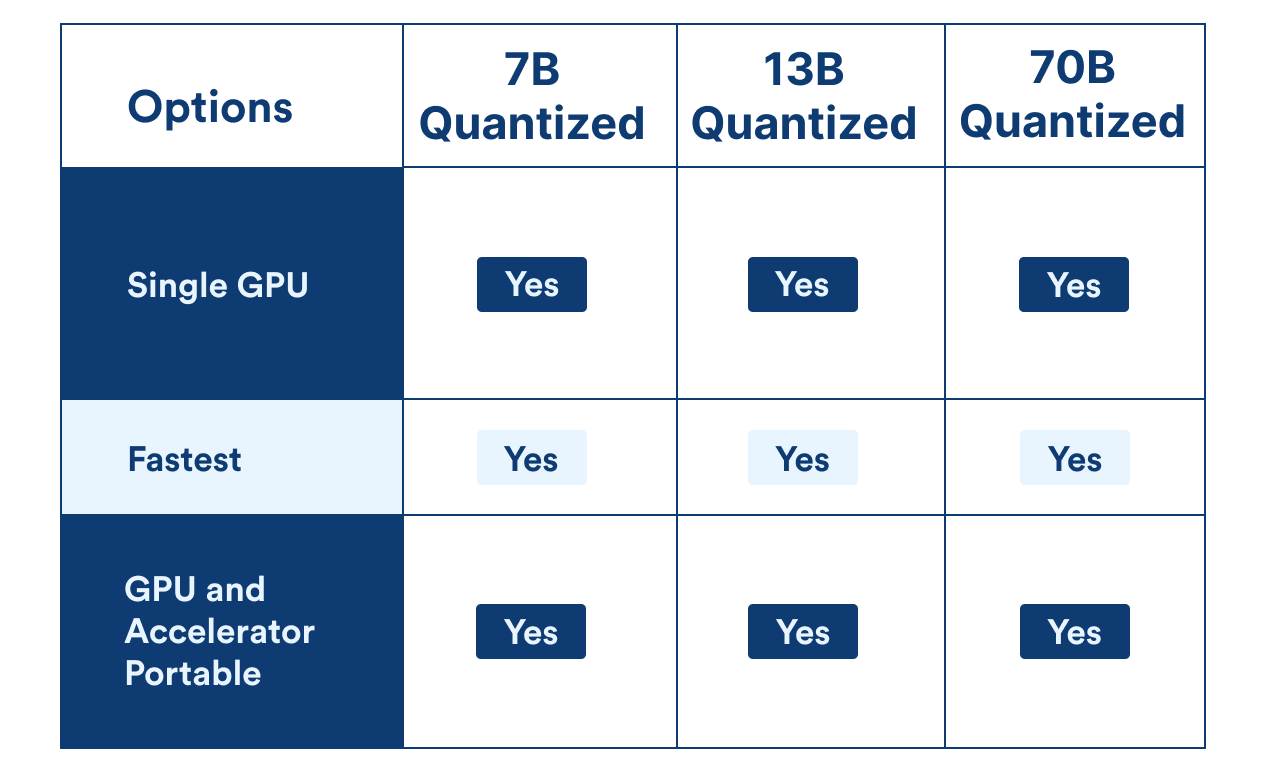

Quantization of model parameters adds another layer to this optionality, letting developers choose between a larger model with quantized parameters and a smaller model with non-quantized parameters. Quantized models, available for the 7B, 13B, and 70B sizes, bring incremental speed and memory footprint improvements to these accelerated models for use cases that can take advantage of this configuration.

Quantization reduces the size and memory footprint of a model by storing its parameters in lower-precision formats (e.g., int4 or int8 values rather than higher-resolution fp16 values). Early tests and evaluations (like ExLlama) show the potential of this technique for a great many use cases, especially when the model is fine-tuned for the specific use case, as with highly task-specific automated agents. While the real impact of quantization varies by use case, the general concern with quantization is that the loss of resolution comes with a potential drop in quality.
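To make the tradeoff concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is an illustration only, not the scheme OctoAI uses: the function names (`quantize_int8`, `dequantize`, `weight_memory_gb`) and the toy weight values are assumptions made for this example, and the memory estimate counts weights only.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole tensor (an assumption of this sketch).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


def weight_memory_gb(num_params, bits_per_param):
    """Memory for the weights alone, ignoring activations and the KV cache."""
    return num_params * bits_per_param / 8 / 1e9


# Toy weights, chosen arbitrarily for illustration.
weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.6f}, max rounding error={max_error:.6f}")

# Nominal weight footprint of a 7B-parameter model at different precisions.
for bits in (16, 8, 4):
    print(f"7B @ {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

The rounding error printed here is the "loss of resolution" described above: each weight can be off by up to half a quantization step, and whether that matters depends on the use case.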

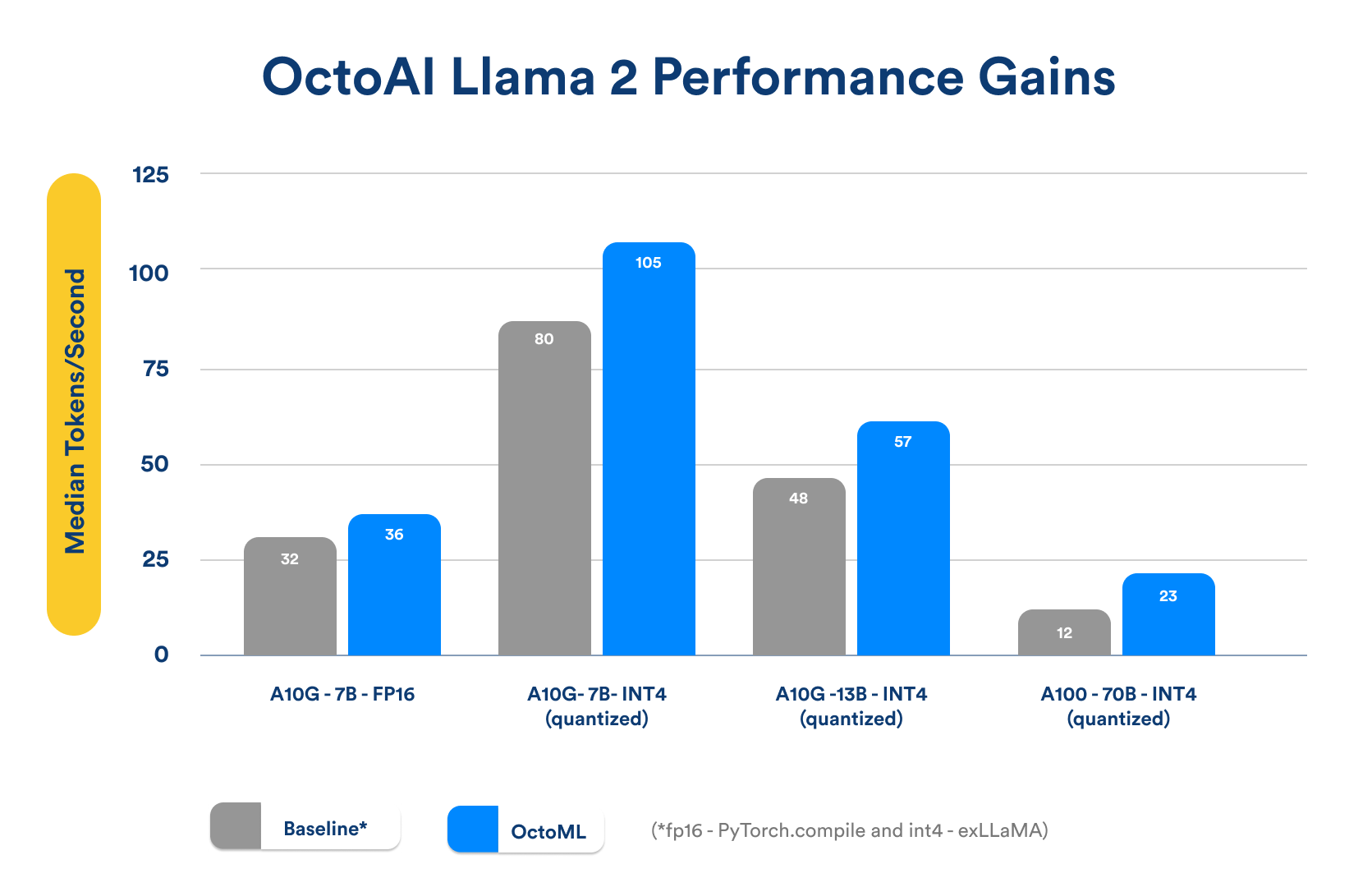

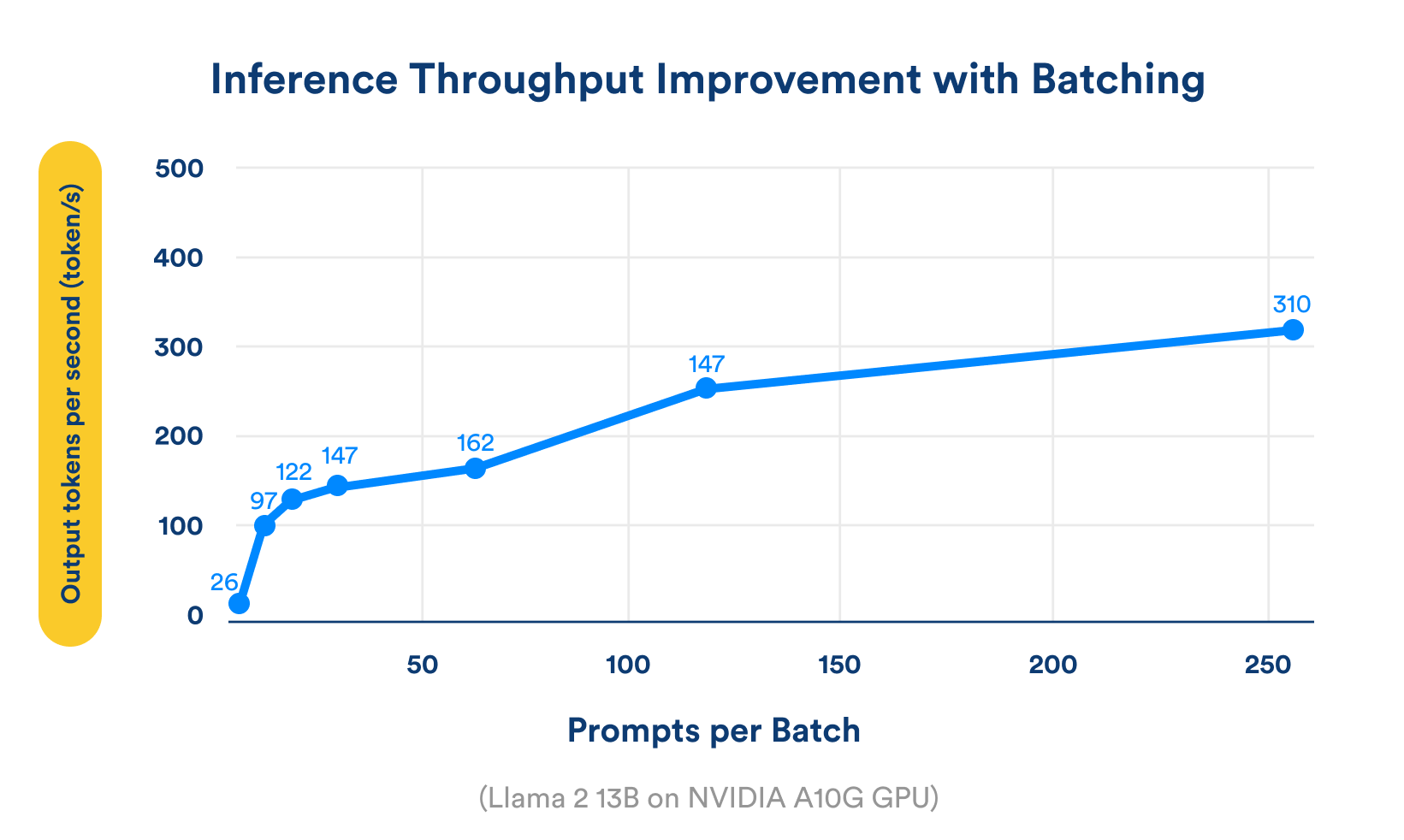

The chart below highlights results of initial internal benchmarking of inference processing speed improvements currently available with Llama 2 on OctoAI.

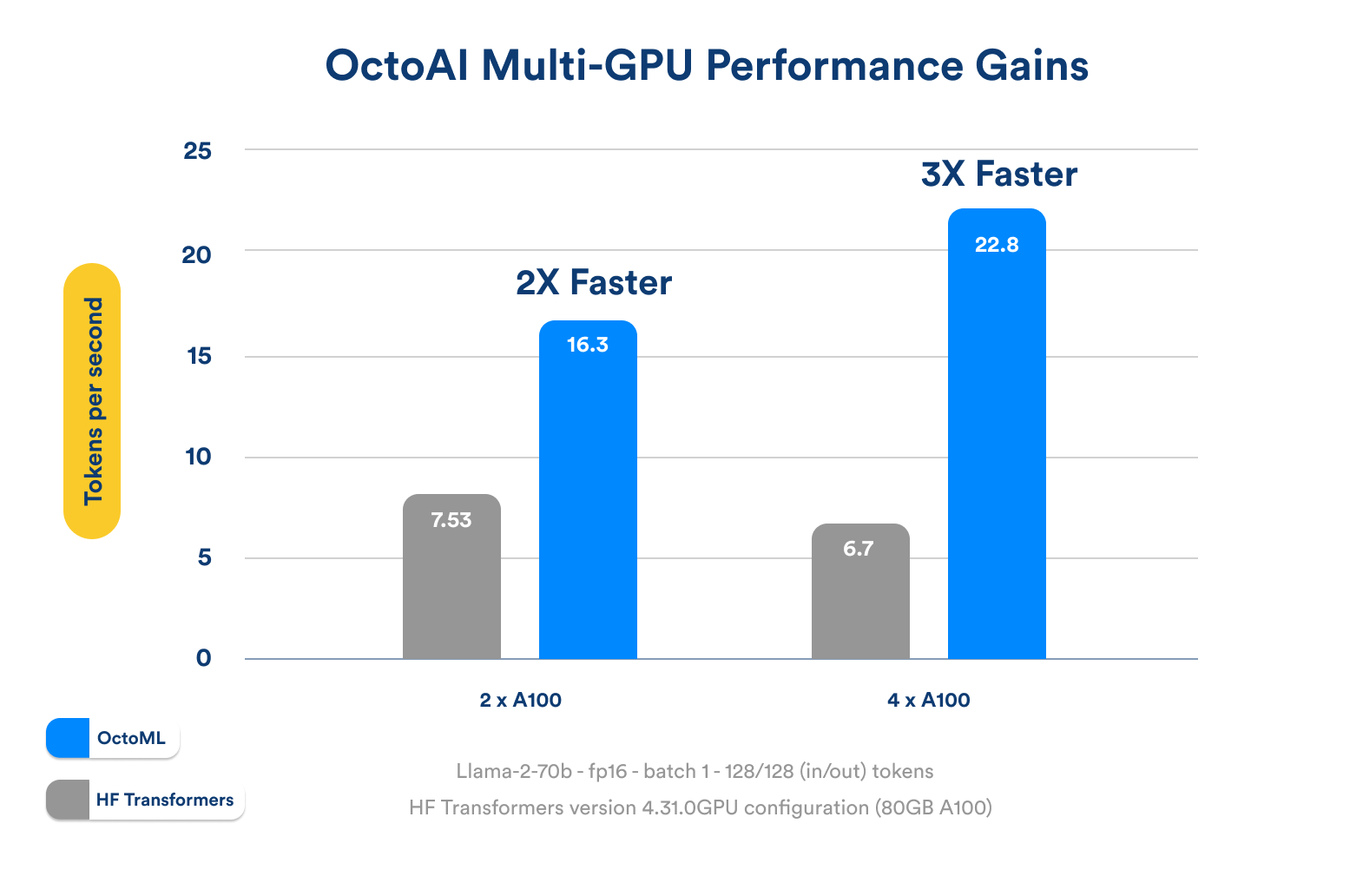

Highest quality and 3X performance gains using multi-GPU deployments

With the Llama 2 70B model, a critical constraint that we need to address is GPU memory. Running the non-quantized Llama 2 70B model with fp16 parameters requires more memory than is available even on the 80GB variant of the A100 GPU, so it takes ML codegen and runtime changes to run it effectively across multiple GPUs. These changes include automatic code generation for efficient tensor parallel compute and management of the KV cache across the sharded multi-GPU runtime. And while modern weight quantization techniques have minimized quality effects for large models like Llama 2 70B, this multi-GPU runtime is a must for applications that require the full quality benefits of the model. OctoAI lets customers run Llama 2 70B across 2, 4, or more parallel NVIDIA A100 GPUs (with multi-A10G options coming soon). These deployments build on the MLC-powered model acceleration, delivering 2x to 3x improvements in speed and throughput as captured in the results below.
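The memory constraint follows from simple arithmetic, sketched below. This is a back-of-the-envelope estimate under stated assumptions: a nominal 70 billion parameters, 2 bytes per fp16 parameter, weights split evenly across GPUs, and no allowance for the KV cache, activations, or runtime overhead (all of which need additional headroom in practice). The helper names are hypothetical.

```python
NUM_PARAMS = 70e9          # nominal parameter count for Llama 2 70B
BYTES_PER_FP16_PARAM = 2   # fp16 stores each parameter in 2 bytes
A100_MEMORY_GB = 80        # the 80GB A100 variant


def weight_memory_gb(num_params, bytes_per_param):
    """Total memory needed to hold the weights alone."""
    return num_params * bytes_per_param / 1e9


def per_gpu_weight_gb(num_params, bytes_per_param, num_gpus):
    """Weights per GPU, assuming an even tensor-parallel split."""
    return weight_memory_gb(num_params, bytes_per_param) / num_gpus


for gpus in (1, 2, 4):
    share = per_gpu_weight_gb(NUM_PARAMS, BYTES_PER_FP16_PARAM, gpus)
    fits = share <= A100_MEMORY_GB
    print(f"{gpus} GPU(s): {share:.0f} GB of weights per GPU, fits in 80 GB: {fits}")
```

Under these assumptions the weights alone need about 140 GB, which is why a single 80GB A100 is not enough. Two GPUs hold roughly 70 GB each, leaving little room for the KV cache, and four GPUs hold roughly 35 GB each.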

Batching and the opportunity for unparalleled economics for profitable LLM-based services

The chart above captures results from internal testing of batched inputs with the Llama 2 13B Chat model running on NVIDIA A10 GPUs in OctoAI. The results show that a 10x improvement in tokens per second is possible as you go from non-batched requests to hundreds of batched requests. Additionally, OctoAI’s accelerated models showed over 2x improvements in batched performance compared to open source vLLM. Internal tests with dynamically batched inferences resulted in over 3x cost savings compared to GPT-3.5 when running at scale, highlighting the ability of Llama 2 based services to deliver better price-performance than closed API services, with the flexibility and freedom of running on a high-quality OSS model. These results also underscore the optionality available to builders of LLM-powered services to exercise more control over unit economics while building on OSS models, without a drop in user experience or quality of outcomes. If this is interesting to you, reach out and we’d love to help you explore how you can use batching to improve your unit economics with Llama 2.
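The link between throughput and unit economics can be sketched with a simple calculation. The GPU price and baseline throughput below are placeholder values chosen only to show the arithmetic, not measurements or OctoAI pricing; only the 10x throughput ratio comes from the results described above.

```python
def cost_per_million_tokens(gpu_cost_per_hour, tokens_per_second):
    """GPU cost to generate one million tokens at a sustained throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_cost_per_hour / tokens_per_hour * 1_000_000


# Placeholder inputs (assumptions for illustration, not benchmark data).
GPU_COST_PER_HOUR = 1.00        # hypothetical hourly price for one GPU
UNBATCHED_TOKENS_PER_SEC = 30   # hypothetical single-request throughput
BATCHING_SPEEDUP = 10           # the 10x tokens-per-second gain cited above

unbatched = cost_per_million_tokens(GPU_COST_PER_HOUR, UNBATCHED_TOKENS_PER_SEC)
batched = cost_per_million_tokens(
    GPU_COST_PER_HOUR, UNBATCHED_TOKENS_PER_SEC * BATCHING_SPEEDUP
)

print(f"unbatched: ${unbatched:.2f} per million tokens")
print(f"batched:   ${batched:.2f} per million tokens")
print(f"cost ratio: {unbatched / batched:.1f}x")
```

Because the GPU costs the same per hour whether it serves one request or many, cost per token falls in proportion to aggregate throughput. This simple model ignores latency targets and assumes the batch can be kept full, which depends on real traffic.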

Try Llama 2 on OctoAI today

Get started with Llama 2 on OctoAI, with demo endpoints of select variants available for free use in the product today. You can also request trial access to the complete Llama 2 herd on OctoAI, including the largest and most performant full-accuracy Llama 2 70B Chat running across multiple GPUs.

Your choice of models on our SaaS or in your environment

Run any model or checkpoint on our efficient, reliable, and customizable API endpoints. Sign up and start building in minutes.