OctoAI Model Remix Program Shrinks Your Dependency on OpenAI

OctoAI Model Remix gives you the tools to adopt open source models alongside OpenAI

At AWS re:Invent this week, we are excited to introduce the OctoAI Model Remix program: a set of tools and capabilities designed to accelerate adoption of open source large language models (LLMs) alongside OpenAI in your applications. Whether you are deep in your LLM adoption journey with OpenAI or early in the process with your first pilot applications, the program offers multiple tiers of open source acceleration kits to match your scale and usage.

Growing adoption of open source LLMs alongside OpenAI

In the generative AI community, there’s a growing movement toward open source models in an effort to reduce dependence on OpenAI, and the recent corporate governance blowup is only a small part of why. Many OpenAI customers are finding that it is not the best fit for every use case. They are looking to supplement, not rip and replace, their OpenAI use with open source alternatives. Here’s why:

- Ballooning costs, especially as production use scales up, in areas where GPT-4 is overkill

- Poor latency and unpredictable service quality for customers without large upfront commitments

- Need for control and stronger governance of their data and AI roadmap

In response, teams want to balance risk and build scalable performance by relying on a mix of models: a sort of "model cocktail" with multiple ingredients. For the mixologists among our readers, that might look like a base liquor and key mixers from open source models like Llama 2, with the spice of the thing, perhaps the bitters or a tincture, from OpenAI. OctoAI is focused on helping customers achieve these goals with the fastest, most scalable platform for open source LLMs.

Today, we're seeing developers struggle to create that ideal cocktail because of how difficult it is to reliably run and scale open source models from scratch. Open source software (OSS) models can rival the performance of closed source models like GPT, but this requires an infrastructure layer that allows these models to be set up, tuned, and operated effectively. The reality is that organizations cannot spend their precious resources building and operating OpenAI-equivalent infrastructure to run, tune, and scale these OSS models. And this need isn't limited to advanced LLM applications deep in their OpenAI journey. We’re hearing the same themes from teams early in the adoption process, where developers want to be more intentional about incorporating these model cocktails in their pilot projects.

OctoAI Text Gen Solution: Fast, flexible and cost-effective open source LLMs

This is where OctoAI comes in. We have already built this infrastructure layer, and it is serving millions of customer inferences every day. The OctoAI Text Gen Solution is a turnkey solution that covers everything you need to start building on your choice of OSS LLMs, addresses the challenges we’ve heard from DIY implementers, and delivers outcomes that, in many ways, are better than OpenAI’s service (or any other closed source proprietary model). OctoAI accelerates the path to successful OSS LLM adoption alongside OpenAI, and even end-to-end migration from OpenAI to OSS, without the risks and complexities of DIY.

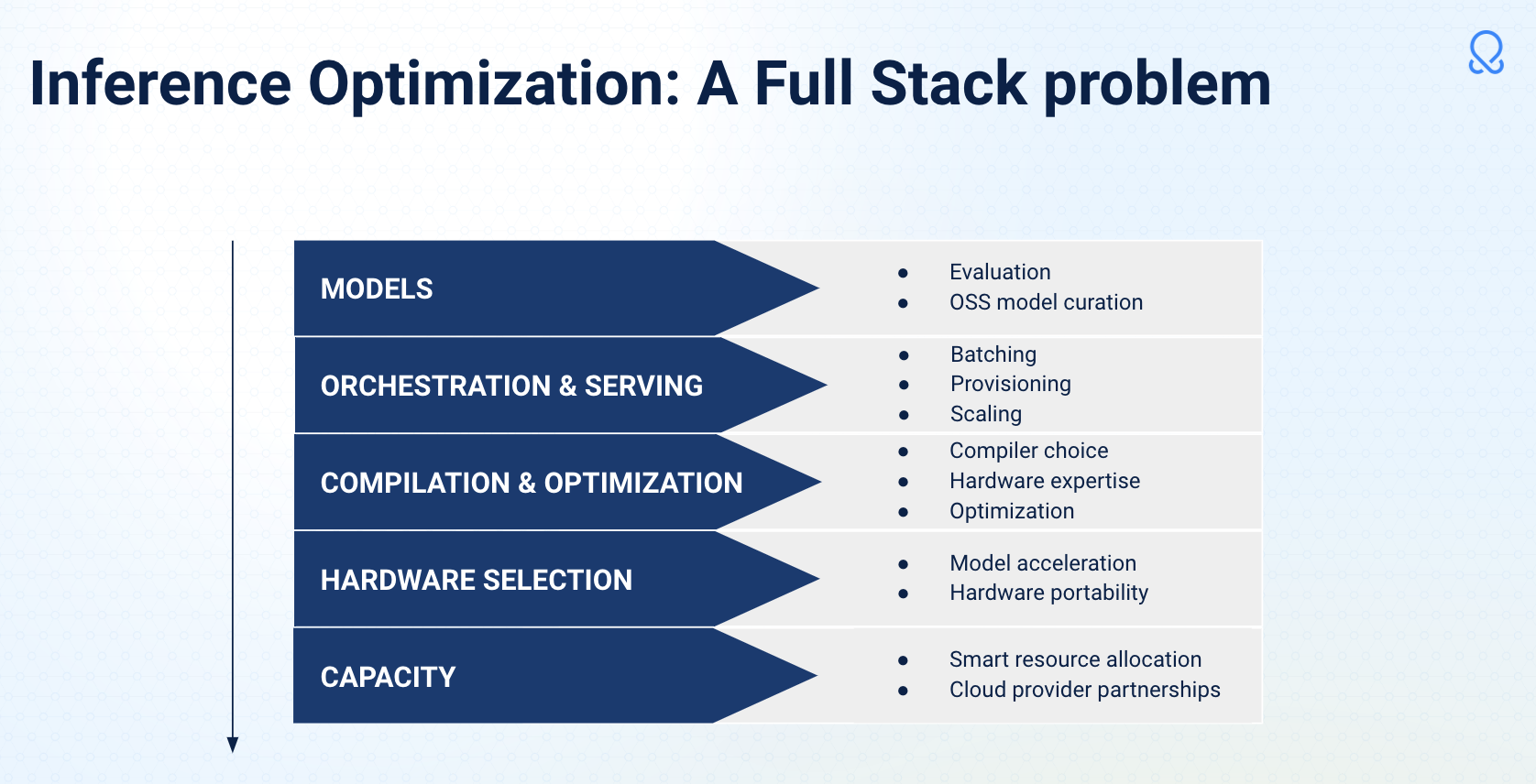

OctoAI, our GenAI infrastructure platform, provides the broadest array of Llama 2 options, at the best price points, and with the best latency and tokens per second on the market. OctoAI implements a full-stack set of optimizations so that you automatically get the best performance possible. Through these optimizations, complemented by our relationship with AWS, we bring you the best price-performance possible for open source LLMs today.

OctoAI’s AI systems expertise — a critical enabler in delivering full stack optimization

We support the OpenAI API and give you an OpenAI-consistent experience with one unified LLM API endpoint. With it, you can seamlessly try out each of our OSS models with minimal changes to your application. And when you’ve identified which variant of Llama 2, Code Llama, and/or Mistral works for you, you can either run that model natively on our platform or circle back with your own fine-tuned version of that model. If you have an existing GPT-based application for which you would like to evaluate OSS models like Llama 2, we also have Python code samples in our GitHub repository to help jumpstart this integration and evaluation.
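To illustrate what "minimal changes" can mean with an OpenAI-compatible endpoint, here is a minimal sketch that builds the same chat completions request for two providers, changing only the base URL and model name. The OSS base URL and the model name `llama-2-13b-chat` are placeholders assumed for illustration, not values from this article; check the provider's documentation for the real ones. The request is only constructed here, not sent.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Hypothetical placeholder: replace with the real OpenAI-compatible endpoint.
OSS_BASE_URL = "https://oss-endpoint.example/v1"


def build_chat_request(base_url, api_key, model, user_message):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same application code path; only the base URL and model name differ.
# "llama-2-13b-chat" is an assumed model identifier for illustration.
gpt_request = build_chat_request(OPENAI_BASE_URL, "OPENAI_KEY", "gpt-4", "Hello")
oss_request = build_chat_request(OSS_BASE_URL, "OSS_KEY", "llama-2-13b-chat", "Hello")
```

Sending either request with `urllib.request.urlopen` (or an OpenAI client library pointed at a different base URL) would then be the only remaining step, which is what makes side-by-side evaluation of models inexpensive to set up.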

Three tiers of open source acceleration kits, available through the Model Remix Program

Below is an overview of the open source acceleration kits we’re making available today, to accelerate OSS adoption in your environment.

- Small: $500, for $750 in OctoAI LLM tokens

- Large: Higher commitments get up to 100% dollar matching in free bonus tokens

All at industry-leading per-token prices.
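As a quick sanity check on the tier arithmetic, the sketch below works out the bonus implied by the Small kit and what the stated 100% matching cap would mean for a larger commitment. The $10,000 figure is a hypothetical example, not a published tier price.

```python
def token_credit(purchase_usd, bonus_rate):
    """Total token credit in dollars for a purchase with a given bonus rate."""
    return purchase_usd * (1 + bonus_rate)


# Small kit: $500 buys $750 in tokens, which implies a 50% bonus.
small_bonus_rate = (750 - 500) / 500

# At the 100% matching cap, a hypothetical $10,000 commitment
# would yield $20,000 in token credit.
large_credit_at_cap = token_credit(10_000, 1.0)
```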

- The ability to run any combination of OSS LLMs on OctoAI, an OpenAI-equivalent GenAI infrastructure for OSS models.

- The ability to run your fine-tuned checkpoint of a supported OSS model on OctoAI with a secure, dedicated endpoint that is SOC 2 compliant.

- Business-level SLAs around latency on premium NVIDIA H100 hardware and a production-grade inference service.

- Customer Success Managers to help shape your optimization approach and strategies as you identify areas for optimization in your OpenAI (or other closed source LLM) usage.

The OSS LLMs discussed here are available today on the OctoAI Text Gen Solution. These kits are made possible by the accelerated computing infrastructure provided through our close relationship with AWS. A limited number are available, so they will be delivered to qualified customers on a first-come, first-served basis.