OctoML showcases modern machine learning performance with AMD EPYC™ CPUs in the cloud

Modern deep learning models can give businesses a powerful and versatile way to fuel innovation. Yet the path to value with machine learning (ML) isn't always easy, especially when it comes to optimizing and deploying custom models and choosing the right cloud infrastructure for a specific ML workload.

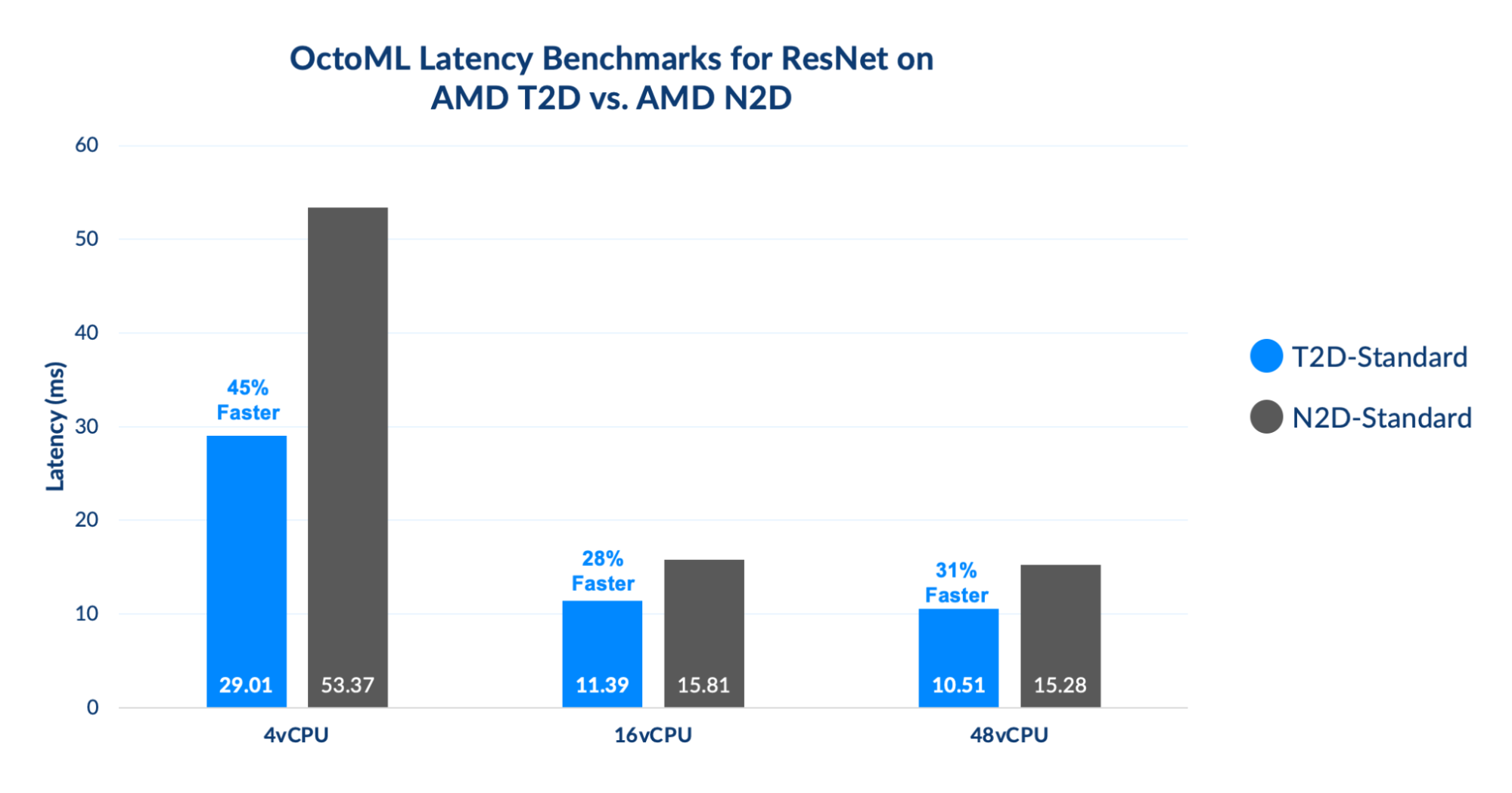

OctoML accelerated three popular deep learning models, focusing on highly demanding ML inference workloads, and compared their performance on T2D and N2D compute instances on Google Cloud, both powered by AMD EPYC™ processors. The benchmarking exercise confirmed that Google Cloud T2D instances offer a clear cost and performance advantage over N2D for scale-out workloads.

The OctoML platform uncovered compelling cost and performance benefits:

Up to 45% speedup of ResNet-50 on T2D

Latency reductions of 28% and above on all T2D instance types for ResNet-50

Up to 40% additional speedup with the AMD pruned ResNet-50 vs. the baseline ResNet-50
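These results are reported both as a "speedup" and as a "latency reduction," and the two percentages are not interchangeable. The minimal Python sketch below shows how each is computed, assuming "speedup" means the ratio of baseline latency to new latency (the post does not define it precisely). The latencies are made-up values for illustration, not the benchmark data.

```python
def speedup(baseline_ms: float, new_ms: float) -> float:
    """Ratio of baseline latency to new latency (1.45 means 45% faster)."""
    return baseline_ms / new_ms


def latency_reduction(baseline_ms: float, new_ms: float) -> float:
    """Fraction of the baseline latency that the new configuration removes."""
    return 1.0 - new_ms / baseline_ms


def reduction_from_speedup(ratio: float) -> float:
    """Convert a speedup ratio into the equivalent latency reduction."""
    return 1.0 - 1.0 / ratio


# Hypothetical latencies for illustration only; NOT OctoML's measurements.
baseline_ms = 10.0  # e.g. an N2D instance
new_ms = 7.0        # e.g. a T2D instance

print(f"speedup: {speedup(baseline_ms, new_ms):.2f}x")                        # 1.43x
print(f"latency reduction: {latency_reduction(baseline_ms, new_ms):.0%}")     # 30%
print(f"a 1.45x speedup cuts latency by {reduction_from_speedup(1.45):.1%}")  # 31.0%
```

Under this reading, a 45% speedup and a roughly 31% latency reduction describe the same change, so a speedup percentage is always larger than the matching latency reduction.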

Since T2D is a newer and often less familiar option for many teams that deploy and manage deep learning models, it's worth taking a closer look at the benchmarking results and at what they mean for businesses that run ML workloads in the cloud.

Making the case for a new cloud compute option

Many ML engineering teams already rely on AMD-powered N2D compute instances on Google Cloud to deploy their custom ML models. However, a newer option offers better cost/performance value: the Google Cloud T2D machine series, which runs on third-generation AMD EPYC processors and belongs to Google Cloud's Tau VM series.

AMD and Google Cloud have optimized T2D for scale-out, virtualized environments. The goal is to deliver the best performance at the lowest cost for enterprise workloads.

Without a dedicated team and infrastructure for benchmarking ML models under real-world constraints, it can be very difficult to determine what advantages each new generation of machines provides. AMD can look at machine characteristics to predict which instances are better suited to various ML scenarios, but how real-world models and workloads will actually perform is often much harder to predict.

Evaluating deployment options with real-world data

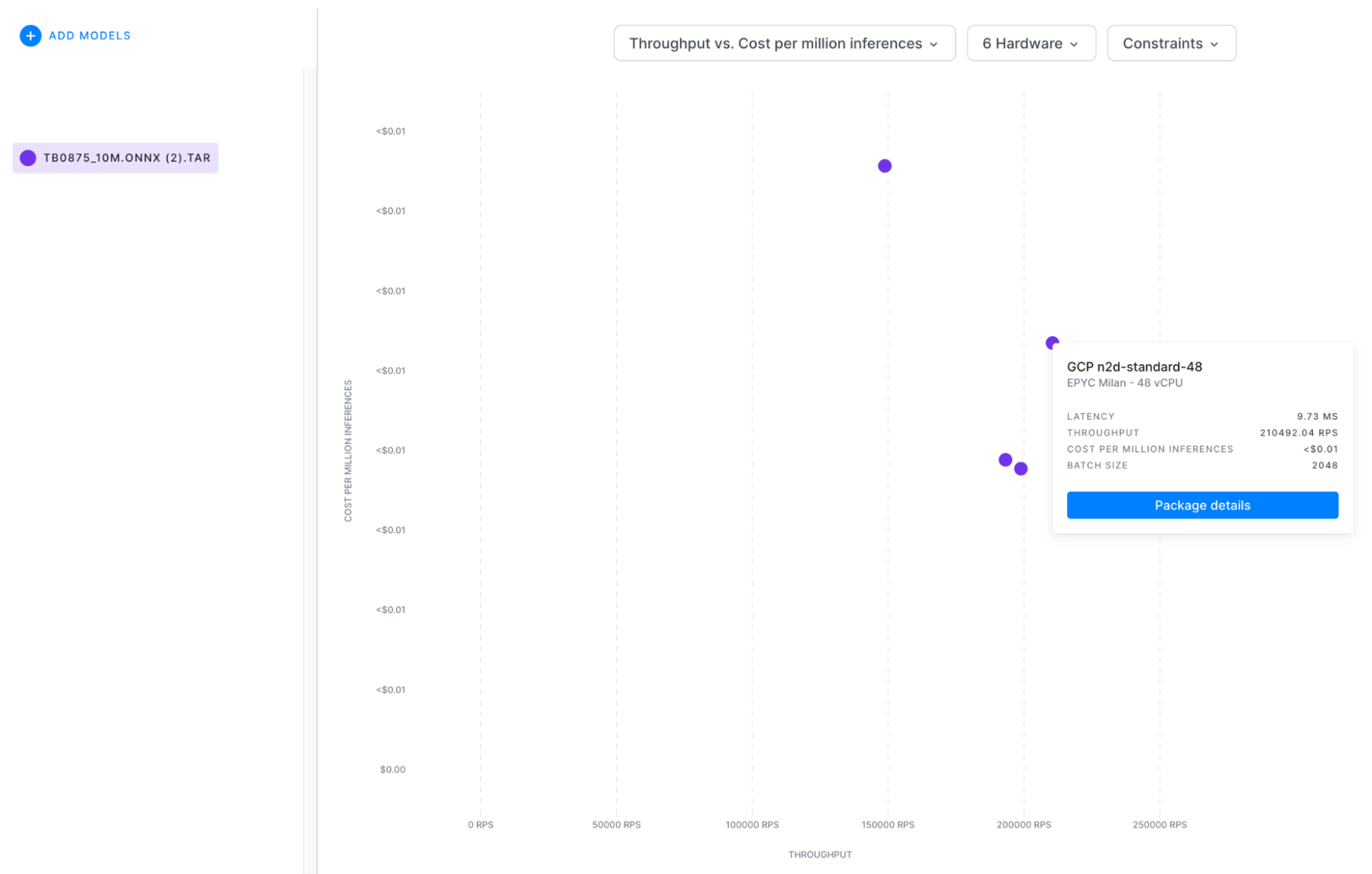

The OctoML platform makes this kind of analysis easy. First, upload the model to the OctoML platform and select one or more target instances for deployment. OctoML then applies a variety of acceleration techniques to optimize the model for each hardware target and creates a production-ready deployment package. The result is a simpler, faster, less costly, and more scalable ML development process.

OctoML enabled AMD developers with no specialized ML deployment expertise to spin up hundreds of pre-optimized models for different hardware instances. OctoML's live cost and performance data made it easy to evaluate which hardware and software configurations met the SLAs for the use case. The platform measures both latency and throughput (the key ML performance metrics) and combines these measurements with current cloud pricing data. It then generates interactive graphs that let users select benchmarking data for specific hardware targets.

Each point represents a production-ready model deployment package. Users can select the one that best meets their SLAs and download it as a Docker tarball or Python wheel.

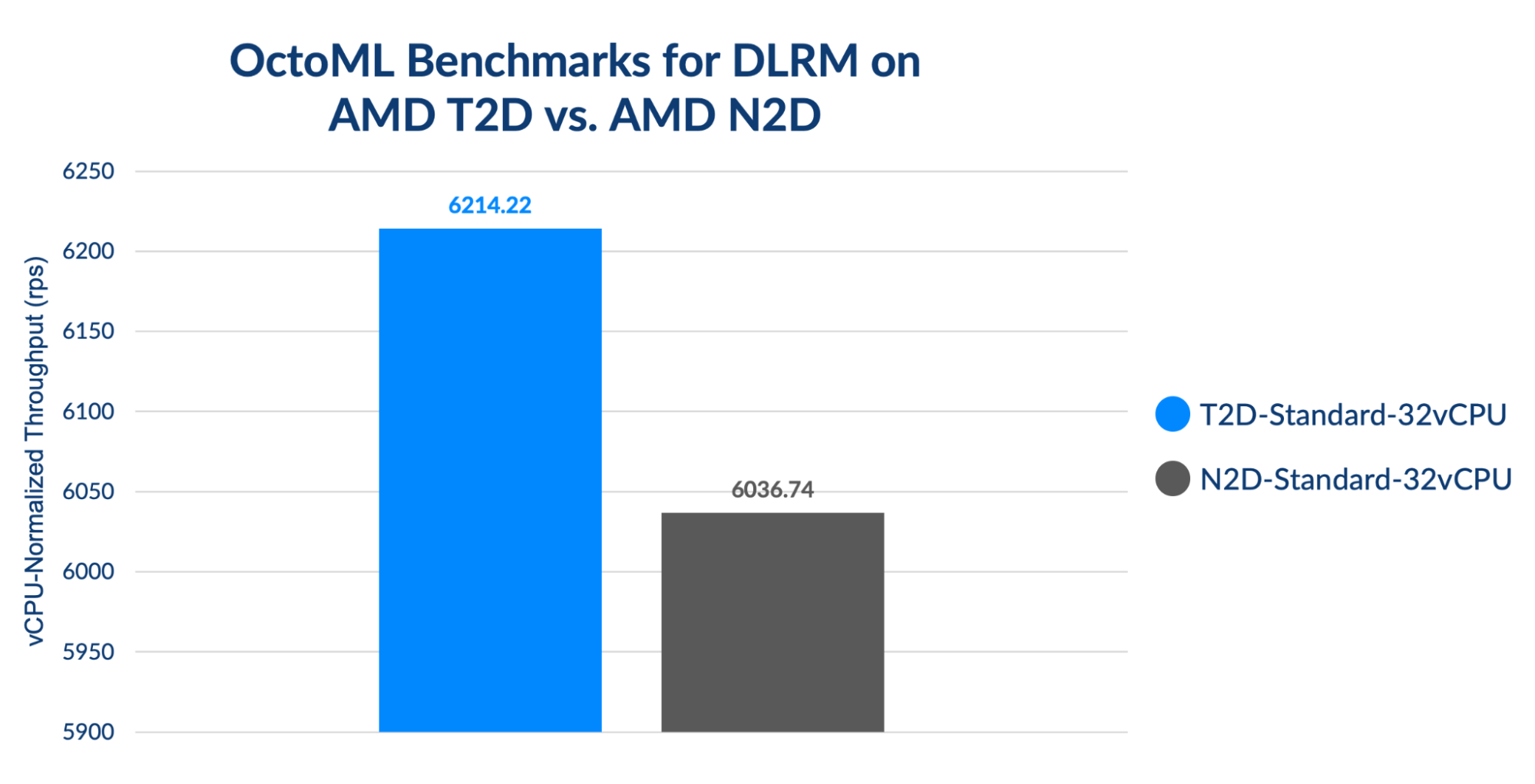
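The selection step described above, picking the deployment package that meets a latency SLA at the lowest cost, can be sketched in a few lines of Python. This is not OctoML's API: the `Candidate` class, the `cheapest_meeting_sla` helper, the instance names, and all numbers are hypothetical. Cost per million inferences is derived from the hourly instance price and the measured throughput.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Candidate:
    """One hypothetical deployment package: an instance type plus measured metrics."""
    instance: str
    latency_ms: float      # measured per-request latency
    throughput_ips: float  # inferences per second
    usd_per_hour: float    # hourly instance price

    @property
    def usd_per_million(self) -> float:
        # Hourly price divided by inferences served per hour, scaled to one million.
        inferences_per_hour = self.throughput_ips * 3600
        return self.usd_per_hour / inferences_per_hour * 1_000_000


def cheapest_meeting_sla(cands: Sequence[Candidate], max_latency_ms: float) -> Optional[Candidate]:
    """Return the lowest-cost candidate whose latency meets the SLA, or None."""
    eligible = [c for c in cands if c.latency_ms <= max_latency_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda c: c.usd_per_million)


# Made-up numbers for illustration; not benchmark results or real cloud prices.
candidates = [
    Candidate("instance-a", latency_ms=12.0, throughput_ips=400.0, usd_per_hour=1.00),
    Candidate("instance-b", latency_ms=8.0, throughput_ips=500.0, usd_per_hour=1.50),
    Candidate("instance-c", latency_ms=9.0, throughput_ips=600.0, usd_per_hour=1.20),
]

best = cheapest_meeting_sla(candidates, max_latency_ms=10.0)
if best is not None:
    print(best.instance, round(best.usd_per_million, 3))
```

Here "instance-a" is the cheapest per inference overall but misses the 10 ms SLA, so the filter runs before the cost comparison rather than after it.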

OctoML and AMD set up two benchmarking exercises comparing N2D and T2D: one using ResNet-50, a popular computer vision model, and another using DLRM, a well-known recommendation model originally developed by Facebook Research.

The experiment compared OctoML-optimized versions of each model across multiple N2D and T2D instances in Google Cloud. The benchmarking focused exclusively on ML inference workloads, which apply a trained ML model to incoming customer data to generate an output or a set of predictions. Inference workloads typically have far more diverse performance requirements than training workloads, because the right balance between throughput and latency depends on the specific scenario and its service level agreement (SLA). By benchmarking both a smaller model (ResNet-50) and a larger one (DLRM), this exercise offers a more robust test of cloud compute performance.
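To illustrate why throughput and latency pull in different directions, the Python sketch below assumes a deliberately simplified cost model in which each batch pays a fixed overhead plus a constant per-item cost. The `fixed_ms` and `per_item_ms` values are hypothetical, not measurements of any instance or model. Under that assumption, a batch size of 1 gives the lowest latency, while larger batches amortize the overhead and raise throughput.

```python
def batch_latency_ms(batch_size: int, fixed_ms: float = 2.0, per_item_ms: float = 1.0) -> float:
    """Latency of one batch under a hypothetical linear cost model (an assumption)."""
    return fixed_ms + per_item_ms * batch_size


def throughput_ips(batch_size: int, fixed_ms: float = 2.0, per_item_ms: float = 1.0) -> float:
    """Inferences per second when batches are processed back to back."""
    return batch_size / (batch_latency_ms(batch_size, fixed_ms, per_item_ms) / 1000.0)


for b in (1, 8, 32):
    print(f"batch={b:>2}  latency={batch_latency_ms(b):5.1f} ms  "
          f"throughput={throughput_ips(b):6.1f}/s")
```

A real-time service with a tight per-request SLA favors small batches, while an offline batch-scoring job can trade latency for throughput.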

The T2D performance advantage

OctoML's testing showed that the Tau (T2D) instances delivered large improvements in performance and cost effectiveness over the N-series (N2D) instances in nearly every scenario tested on the OctoML platform. Because every model in the comparison was accelerated with OctoML, the results reflect the full benefit of the latency and throughput improvements. Let's drill down into some important details for each model:

Even with a very different emphasis for this benchmarking exercise, T2D left no room for doubt — showing superior performance (up to 13% higher throughput) and much greater cost effectiveness compared to N2D.

Of course, results will vary with custom ML models. But the benchmarking results in this case make one thing clear: T2D can deliver a major performance and cost advantage over N2D across a wide range of ML workloads, and optimizing custom models can push inference performance on T2D even further.

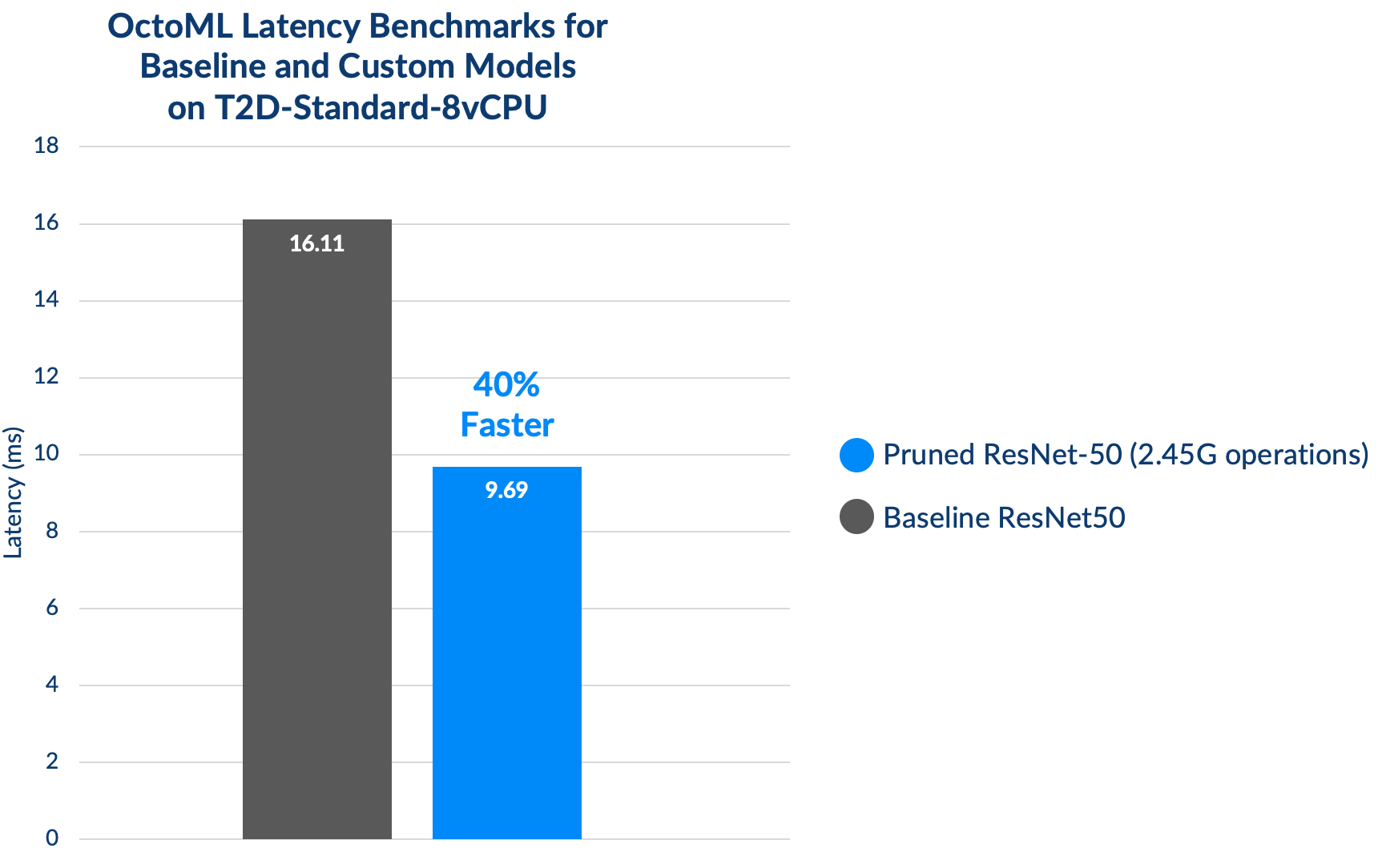

The above benchmarks highlight the performance uplift that T2D offers compared to N2D, but there’s another way to incrementally improve inference performance, and that’s by using optimized models. With AMD’s recent acquisition of Xilinx, there are now new optimized models from the AMD Vitis™ AI Model Zoo that can be leveraged on AMD EPYC processor-based instances, such as T2D.

Benchmarking the optimized, 65%-pruned ResNet-50 TensorFlow model against the baseline ResNet-50 model showed significant latency gains. The pruned model, which requires less compute than the baseline, achieves up to 40% better latency than the baseline ResNet-50 at a batch size of 1, chosen to best represent real-time use cases.

Why it's time to explore ML cloud compute options

If you currently use N2D, there's a strong case for moving your workloads to T2D on Google Cloud using OctoML. It's also worth exploring the advantages of T2D if you currently run your workloads on another platform or with a different hyperscale cloud vendor.

The capabilities built into the OctoML platform make it possible for almost anyone to quickly and easily optimize any workload for any target hardware platform.

That's great news, because it means your team never needs to feel tied to a particular platform for any reason besides the ones that really matter: performance, cost, and efficiency. OctoML, AMD, and T2D make a strong case for where to find those advantages when you're running ML workloads.

Learn more about Google Cloud's T2D machine series here. For more information on the AMD Vitis AI Model Zoo, visit the GitHub repository.

To set up a consultation with OctoML’s engineers to see how your model performs in the cloud, click here.
