In the current inflationary and (potentially) recessionary economic climate, our customer conversations have begun to shift from AI innovation to AI/ML budgets, as technology and business leaders explore all avenues for cost savings. At the executive level, these leaders are seeking ways to sustain or grow the successful AI/ML apps and services they’ve built. Unfortunately, most proposed cost-cutting measures significantly degrade the end-user experience. That’s not surprising to us, because these customers face what appears to be an immovable object in their budgets: 90% of their AI/ML compute costs are tied to production inference workloads (i.e., ML models running in a service).

Inference costs appear immovable because, unfortunately, ML models are not readily portable. This is news to our customer executives, who are used to their teams shifting generic software workloads around the cloud to capture major price/performance gains from the latest chip innovations (i.e., Moore’s Law). Those operating AI/ML in production cannot do the same, for two reasons: the switching costs and the switching risks are both too high. The migration work requires a team that has spent its proverbial 10,000 hours on ML compiler technology. On top of the time it would take to hire these unicorns, migrating the workloads would still take 12 weeks of work. Even with all this skill and effort, a successful outcome is a 50/50 proposition, and the migration may not deliver any cost or performance benefit at all.

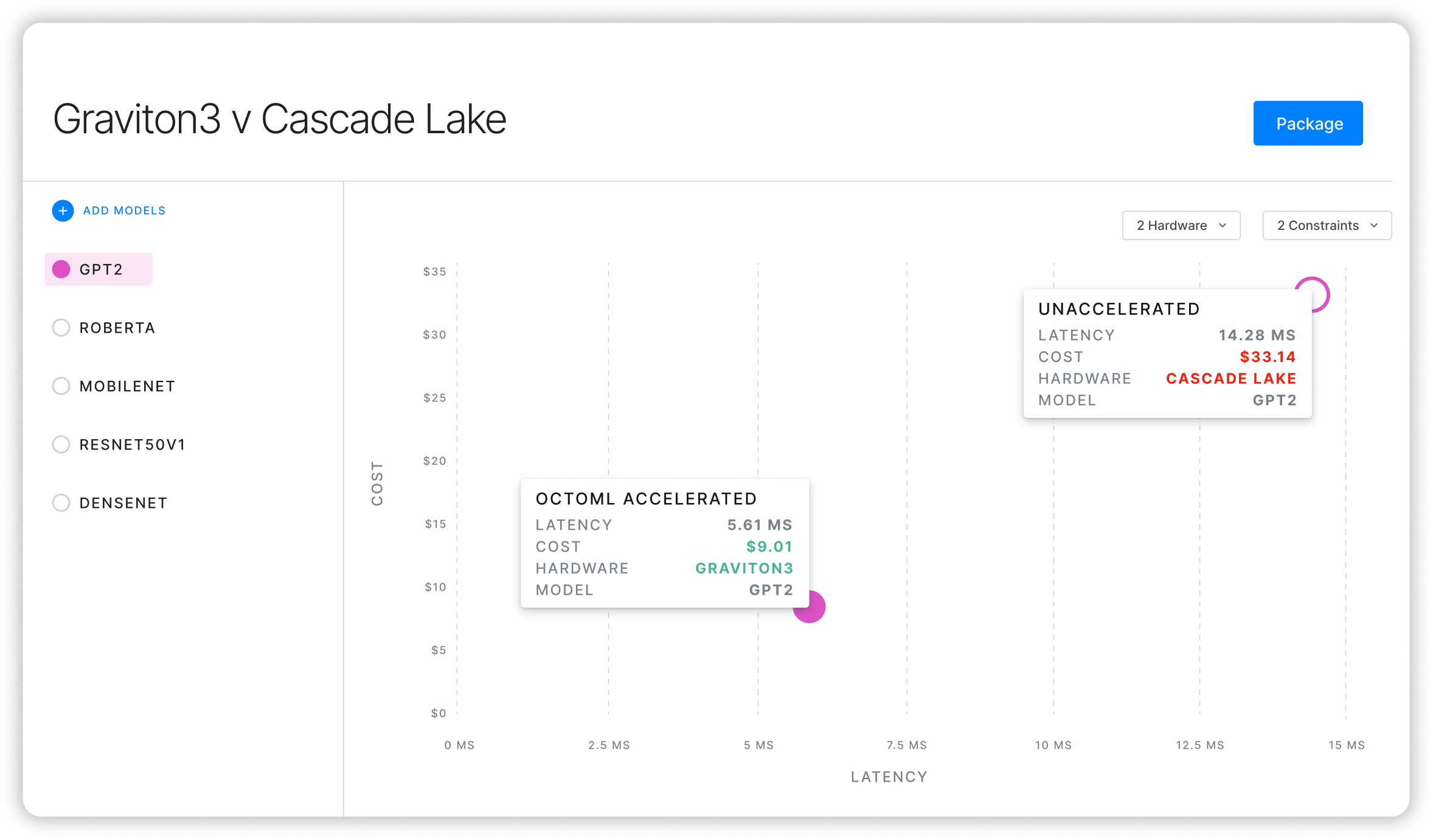

The OctoML platform exists to address these challenges through automation. Our SaaS platform sits across all three major clouds and has the most comprehensive vantage point on the major chip provider options in these clouds, across both GPUs and CPUs. Our own machine learning technology matches the right software libraries, acceleration techniques, and optimization methods to each model, so that our customers have perpetual hardware independence. Customers can then analyze real-world workload migration options using the OctoML Comparison Dashboard (Figure 1). OctoML’s automation, combined with the ability to evaluate real price and performance data obtained through actual acceleration and optimization rather than simulation, allows our customers to look before they leap.

Figure 1: OctoML Comparison Dashboard for Analyzing AI/ML Hardware Migration Options

For our customers, the insights generated through our platform make a very compelling migration case, driven by the arrival of cloud-based Arm CPUs in AWS and Azure (and in GCP, in preview). These CPUs are equipped with increasingly sophisticated vector and matrix extensions that cater directly to the needs of modern AI/ML models. However, using these extensions effectively requires regenerating code that is carefully tuned to each model's needs, which is exactly what OctoML fully automates.

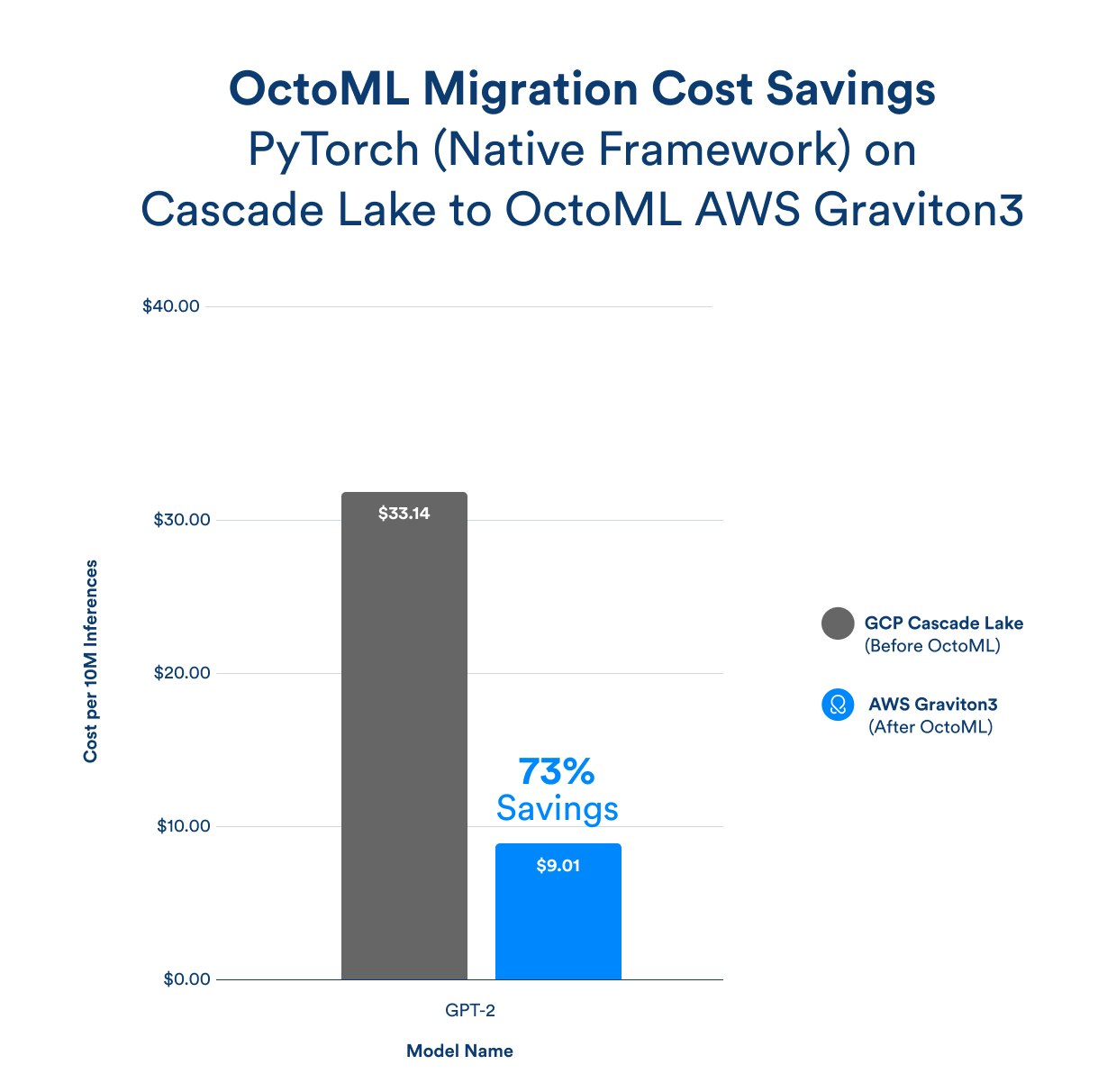

AWS Graviton was first to market four years ago with an Arm-based cloud CPU solution, and we are excited to share the transformative results we are seeing for customers on its 3rd generation: AWS Graviton3. We are highlighting a migration use case tied to the most popular inference CPU we see in our customer environments, Intel Cascade Lake. Here are some quick highlights of the benefits we’ve found in moving workloads from GCP with Intel Cascade Lake to AWS Graviton3. Customers can:

Save 73% on compute costs for natural language processing (NLP) models, such as GPT-2

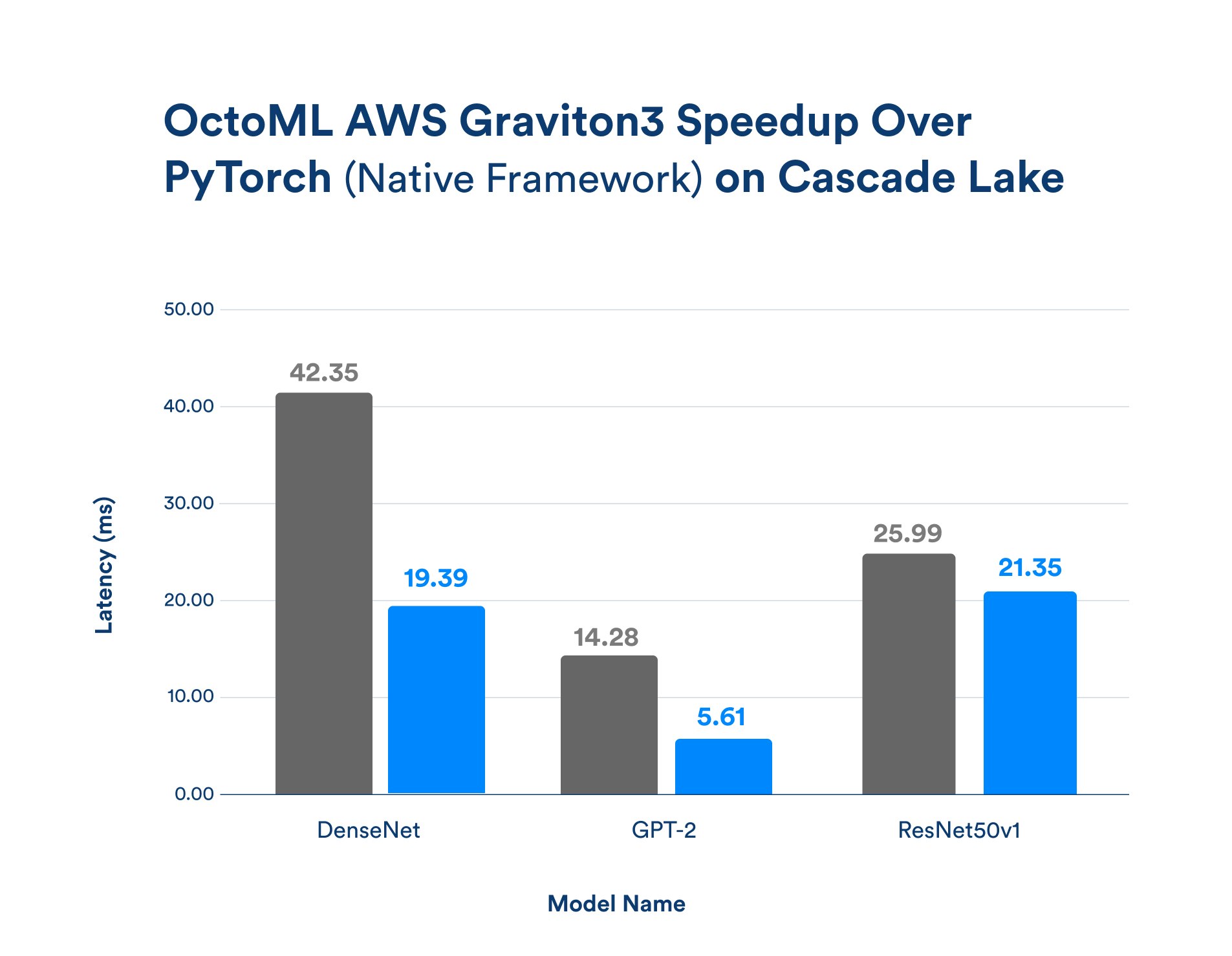

Dramatically improve end user experience (measured in latency)

Achieve those benefits in hours, not months, through OctoML’s automation

Figure 2a: Cost Savings for GPT-2 Through OctoML Automated Migration and Acceleration

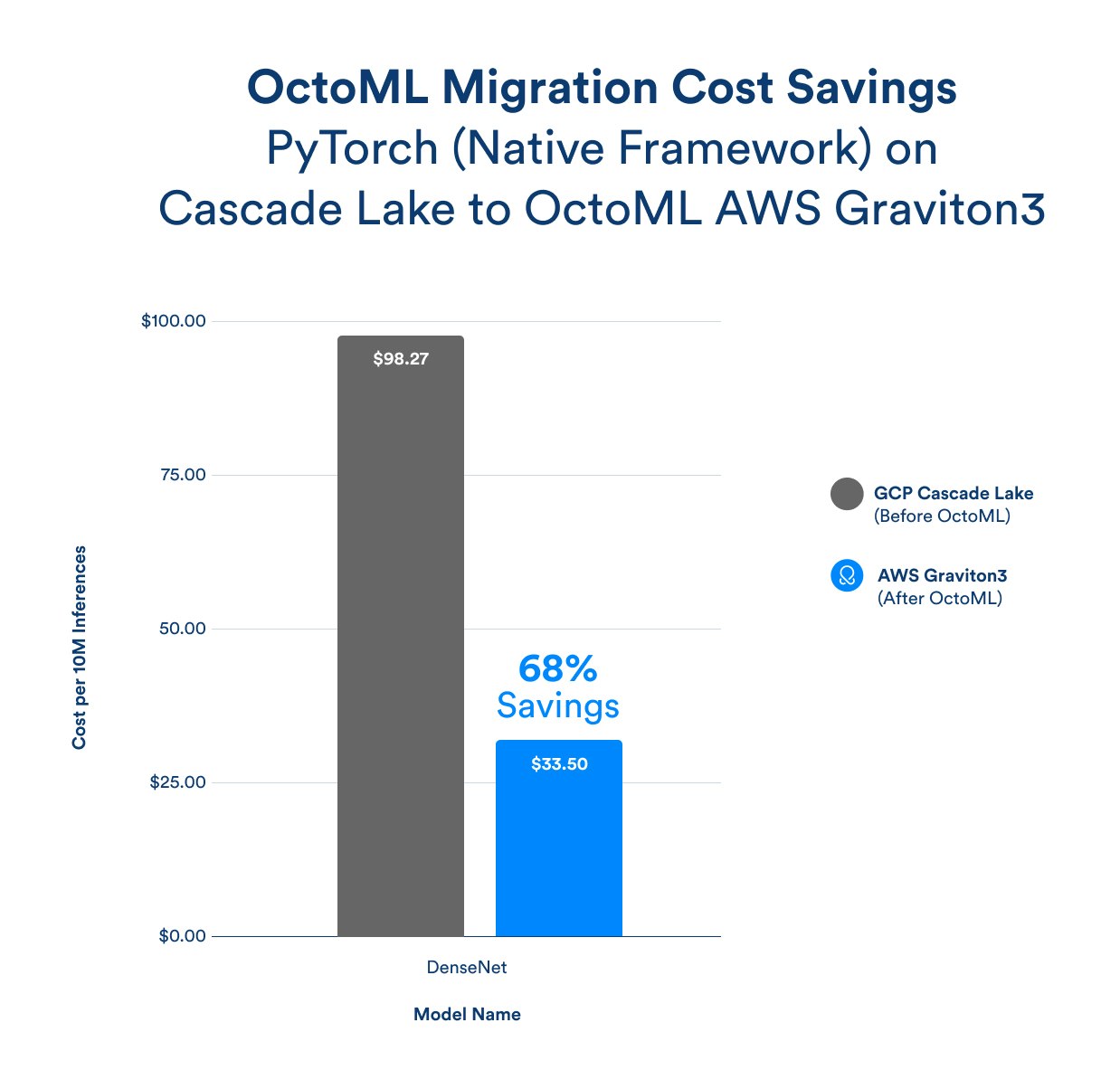

Figure 2b: Cost Savings for DenseNet Through OctoML Automated Migration and Acceleration

These cost savings show why anyone doing CPU-based inference should at least investigate making a move. (Note: For simplicity, we have kept these charts to a before/after OctoML comparison, but the ROI for migrating to Graviton3 was substantial even when users leveraged OctoML on Cascade Lake.) Figure 3 below shows that, while dramatically improving the P&L of your AI/ML service through a much better cost per inference, you are also improving the user experience through substantial speedups.

Figure 3: Speedups for NLP and Computer Vision Models Through OctoML Automated Migration and Acceleration
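
To make the link between speedup and cost per inference concrete, here is a minimal Python sketch of the arithmetic. It assumes a simple model in which an instance serves requests back to back at a fixed latency. The function names, hourly prices, and latencies are hypothetical placeholders chosen for illustration; they are not figures from our analysis or from any cloud provider's price list.

```python
def cost_per_million_inferences(hourly_price_usd, latency_ms, concurrency=1):
    """Estimate the compute cost (USD) of one million inferences.

    Assumes the instance is fully utilized and each of `concurrency`
    workers completes one inference every `latency_ms` milliseconds.
    """
    if hourly_price_usd < 0 or latency_ms <= 0 or concurrency < 1:
        raise ValueError("price must be >= 0, latency > 0, concurrency >= 1")
    ms_per_hour = 3_600_000
    inferences_per_hour = concurrency * ms_per_hour / latency_ms
    return hourly_price_usd / inferences_per_hour * 1_000_000


def savings_fraction(baseline_cost, candidate_cost):
    """Fraction of the baseline cost saved by moving to the candidate."""
    return 1 - candidate_cost / baseline_cost


# Hypothetical inputs: the candidate instance is half the hourly price
# and, after optimization, serves each request in half the time.
baseline = cost_per_million_inferences(hourly_price_usd=1.00, latency_ms=100.0)
candidate = cost_per_million_inferences(hourly_price_usd=0.50, latency_ms=50.0)
savings = savings_fraction(baseline, candidate)

print(f"baseline:  ${baseline:.2f} per million inferences")
print(f"candidate: ${candidate:.2f} per million inferences")
print(f"savings:   {savings:.0%}")
```

Under this simple model, a lower hourly price and a lower latency compound: halving both cuts the cost per inference to a quarter. Real deployments would also need to account for utilization, batching, and latency targets.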

If you are interested in seeing the full migration analysis, the PDF can be found here; it covers details for both PyTorch and TensorFlow baselines. It also describes the methodologies used in the OctoML Platform, for those interested in the technical details of ML acceleration and optimization. Finally, it is worth noting that the OctoML Platform has an even more significant impact on proprietary models than on the off-the-shelf models showcased in this blog.

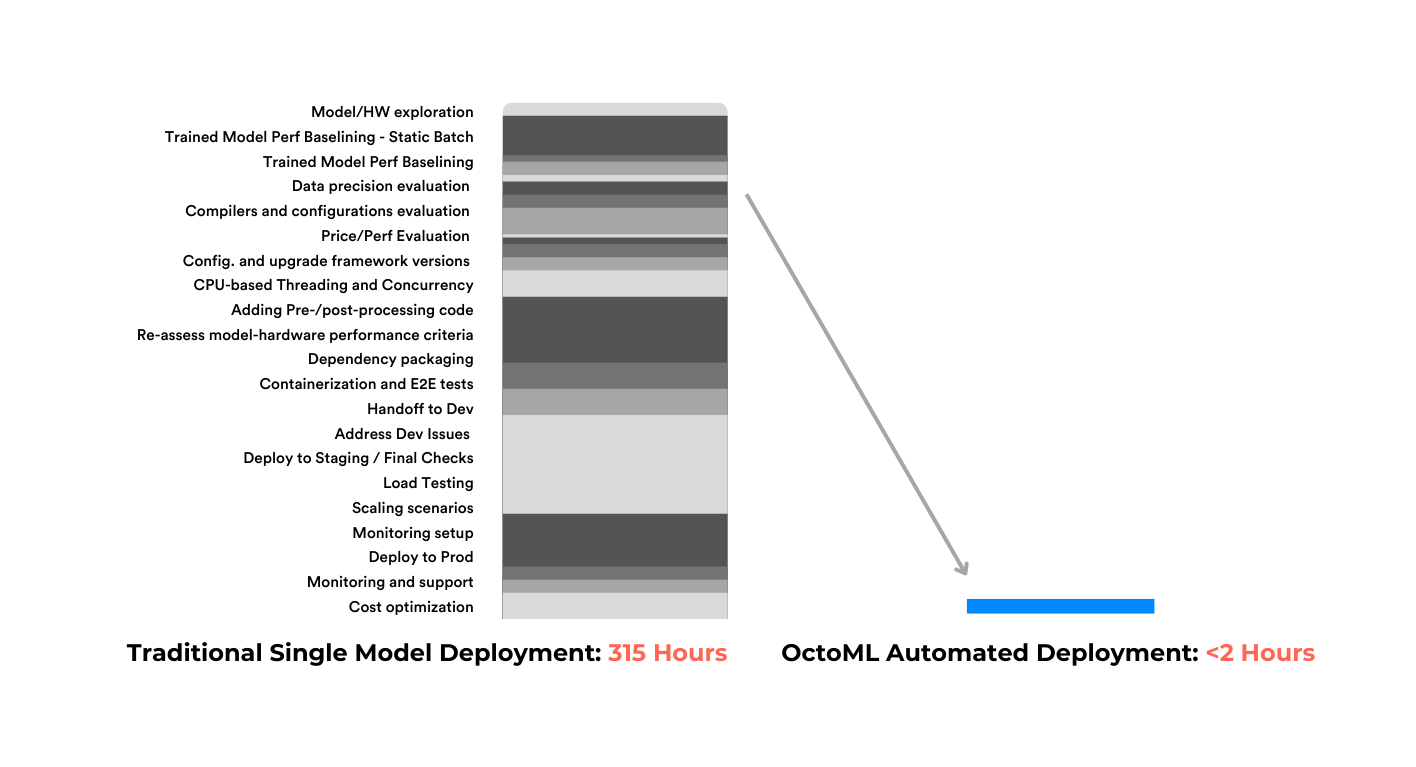

Figure 4: Traditional Single Model Deployment vs OctoML Automated Deployment