Quickstart

How to create an OctoAI API token

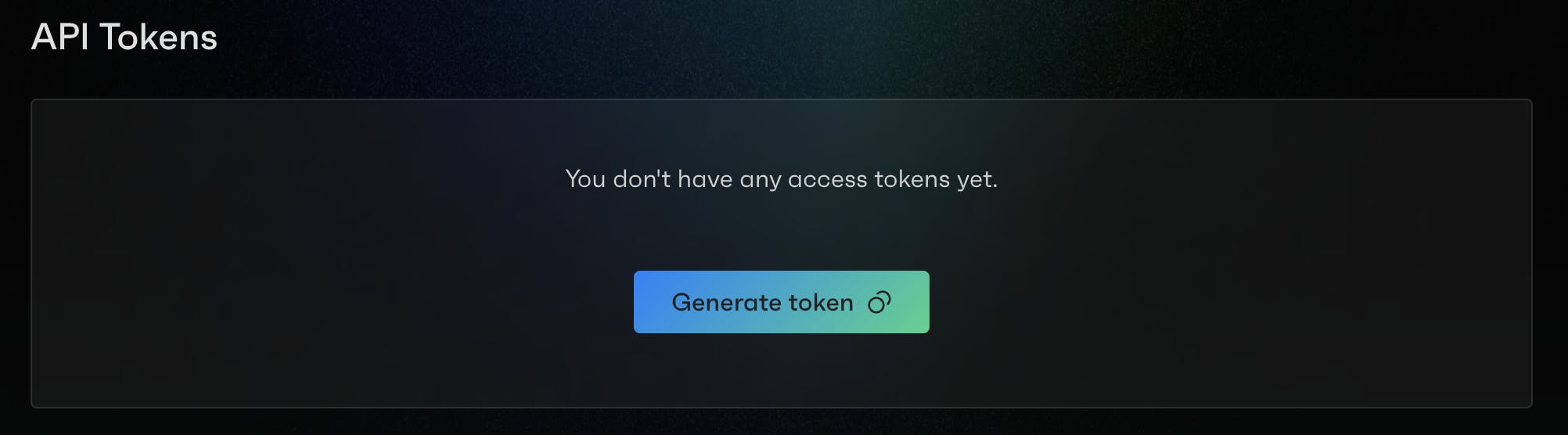

All endpoints require authentication by default. That means you will need an access token in order to run inferences against those endpoints. To generate a token, head to your Account Settings and click Generate token:

After generating the token, store it as an environment variable in your terminal session and/or in your app's environment file:

```bash
# Replace <INSERT_HERE> with the token you generated
export OCTOAI_TOKEN=<INSERT_HERE>
```
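If you keep the token in an environment file instead of exporting it by hand, a small helper can load it and stop early when it is missing. The sketch below assumes a `.env` file made of plain `KEY=VALUE` lines that is safe to source as shell code. The function name `load_octoai_token` is hypothetical and not part of any OctoAI tooling.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not an OctoAI tool): make sure OCTOAI_TOKEN is set,
# loading it from an env file of KEY=VALUE lines if it is not exported yet.
load_octoai_token() {
  local env_file="${1:-.env}"   # path to the env file; defaults to ./.env

  # Only read the file when the variable is not already set in this shell.
  if [ -z "${OCTOAI_TOKEN:-}" ] && [ -f "$env_file" ]; then
    set -a            # auto-export every variable assigned while sourcing
    . "$env_file"     # assumes a trusted, shell-compatible KEY=VALUE file
    set +a
  fi

  # Fail fast with a readable message instead of sending an unauthenticated request.
  if [ -z "${OCTOAI_TOKEN:-}" ]; then
    echo "OCTOAI_TOKEN is not set; generate a token in Account Settings." >&2
    return 1
  fi
}
```

Calling `load_octoai_token || exit 1` near the top of a script means a `.env` file containing `OCTOAI_TOKEN=...` is picked up automatically, while a missing token stops the script before any request is sent. Keep that file out of version control.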

Now you’ll be able to run inferences! For example:

```bash
curl -X POST "https://text.octoai.run/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OCTOAI_TOKEN" \
  --data-raw '{
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello world"
      }
    ],
    "model": "mixtral-8x7b-instruct",
    "max_tokens": 512,
    "presence_penalty": 0,
    "temperature": 0.1,
    "top_p": 0.9
  }'
```
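For longer conversations it can be easier to keep the JSON body in its own file and reuse one wrapper for every call. The function below is a hypothetical sketch of that idea. It sends the same request as the cURL example above, but reads the body with curl's `--data-binary @file` and adds `-sS --fail` so that HTTP errors produce a non-zero exit status. The function name `octoai_chat` and the `request.json` file name are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper (not part of OctoAI's tooling): POST a JSON body stored
# in a file to the chat completions endpoint and print the raw JSON response.
octoai_chat() {
  local body_file=$1   # e.g. request.json holding the JSON body from the example above

  if [ -z "${OCTOAI_TOKEN:-}" ]; then
    echo "OCTOAI_TOKEN is not set" >&2
    return 1
  fi
  if [ ! -f "$body_file" ]; then
    echo "request body file not found: $body_file" >&2
    return 1
  fi

  # -sS: hide the progress meter but still print transfer errors
  # --fail: exit with a non-zero status on HTTP 4xx/5xx responses
  curl -sS --fail -X POST "https://text.octoai.run/v1/chat/completions" \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer $OCTOAI_TOKEN" \
    --data-binary "@$body_file"
}
```

To use it, save the JSON object passed to `--data-raw` above as `request.json` and run `octoai_chat request.json`. Changing the prompt or sampling parameters then only means editing that file.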
