OctoStack, an industry-leading private GenAI production stack for the enterprise

In this article

Enterprises lag AI-natives in GenAI adoption due to “control and AI autonomy” gaps in available options

OctoStack: Keep GenAI models and interactions inside the enterprise environment

4x better GPU utilization, 50% lower operating costs, and continuous systems optimizations

Get started today with a live OctoStack demo

We are excited today to launch OctoStack, a production-ready GenAI inference stack that allows enterprises to efficiently serve generative AI models inside their environment (including on-premises and in a cloud VPC). OctoStack allows enterprises to:

Maintain the required control, privacy and security over their data

Run their choice of GenAI models or fine tunes in their environment

Improve TCO and maximize the utilization of expensive GPU hardware (4x better utilization compared to best-in-class alternatives, and 50% reduction in operating costs)

Benefit from learnings and continuous improvements from serving millions of daily customer inferences on the OctoAI SaaS API endpoints

Access all the functionalities available in a state-of-the-art SaaS API service, including function calling, ability to run fine tunes, and rich model support

One of the early evaluators of OctoStack is Apate.ai, a company delivering a global service to combat telephone scams using generative conversational AI. With highly custom models supporting multiple languages and regional dialects, the Apate team is building on OctoStack to efficiently run their suite of LLMs across multiple geographies and to meet the data residency constraints for their solutions — without trading off access to the latest GenAI innovations.

For our performance and security-sensitive use case, it is imperative that the models that process call data run in an environment that offers flexibility, scale and security. OctoStack lets us easily and efficiently run the customized models we need, within environments that we choose, and deliver the scale our customers require.

Dali Kaafar, Founder and CEO @ Apate AI

OctoStack is available for deployment today. Reach out to get started with a live demo.

Enterprises lag AI-natives in GenAI adoption due to “control and AI autonomy” gaps in available options

Early adoption of GenAI has been led by AI-native companies. The OctoAI team has been working closely with several of these early adopters, including innovators like Otherside AI, Latitude Games, and NightCafe Studios. These AI natives often start with proprietary models, then use their model interaction data to build even better customer experiences and deliver massive ROI for the business. This transition is made possible by customization mechanisms (such as fine-tuned models, structured data interactions, and RAG) and by the ability to efficiently run and consume these models through the OctoAI SaaS API service.

Enterprises have seen the early success of this approach and are keen to follow it. But we’ve seen enterprises face additional challenges on this path. The friction points we hear about most often are (i) control and AI autonomy, the ability to retain control over their models, their interactions, and their data, all key to their business and market differentiation; (ii) flexibility and optionality, as enterprises want broad flexibility to choose models, target hardware, and features, and need their GenAI investments to allow quick and efficient transitions across these options as they evolve; and (iii) efficiency, since currently available options for deploying GenAI models in-house require deep expertise and amount to a full-time job. These options also result in poor GPU utilization and in downtime caused by scaling and configuration issues.

These themes have also been uncovered empirically in broader market research and industry insights. A recent study by Andreessen Horowitz examines enterprise adoption of GenAI and highlights several factors limiting enterprises’ ability to replicate the success of AI natives. Highlights from the research include:

- Control over GenAI models and interactions (AI autonomy) is a key factor guiding enterprise AI decisions: 60% of enterprises report control as the primary reason to prefer open source models, referring to the need for better control over the data and the interactions between internal systems and the model. In addition, GenAI models are still new, and companies want to keep building their understanding of model outputs and of the relationships between outputs, customizations, and their data.

- Enterprises are “optimizing for optionality” toward a “multi-model, open source world”: over 90% of enterprises are actively experimenting with 3 or more models today, and over 80% are actively using or planning to use open source models. Besides control and AI autonomy, a crucial reason is the recognition of how fast the market is evolving. Enterprises want to rapidly evaluate and iterate on new models and features as they emerge, and to avoid lock-in with any model or vendor.

- The lack of in-house talent to run GenAI models efficiently and reliably is clear: enterprises recognize that they need highly specialized skill sets and personnel to implement, maintain, and scale the computing infrastructure for GenAI models. In 2023, implementation was one of the biggest areas of AI spend. This takes effort and resources away from building on these capabilities toward enterprise- and business-specific applications and solutions.

Early adopters have been able to address these needs, sometimes even by building internal systems and mechanisms to ensure control and autonomy over their data and model interactions. But these have not been feasible options for enterprises. The majority of enterprises we’ve been working with are seeking better ways to bring together their internal data and GenAI models without giving up control over their GenAI interactions and models.

OctoStack: Keep GenAI models and interactions inside the enterprise environment

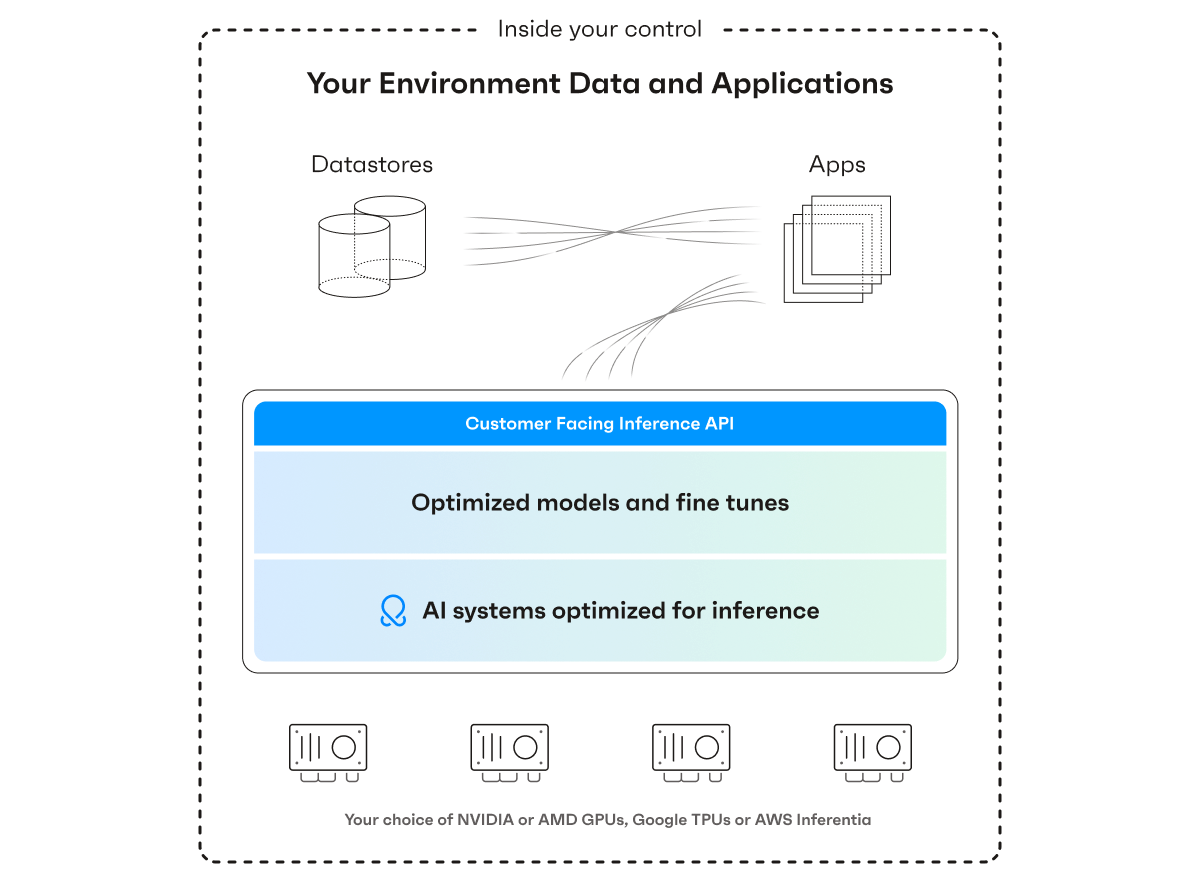

OctoStack is a turnkey, end-to-end deployment of the OctoAI systems stack: the same efficient, customizable, and reliable stack that serves millions of daily customer inferences on the OctoAI SaaS API. An OctoStack deployment consists of a set of containers pulled from the OctoAI container registry, running on customer-selected hardware nodes and orchestrated by the customer’s container orchestration system, all within the customer’s chosen environment. OctoStack also includes the configuration files needed to provision and manage the deployment, such as Docker Compose files, Kubernetes manifests, or Helm charts. With these, operating OctoStack is just like managing any modern application, building on skills and tools enterprises already have in place. No AI-specific skills or tools are needed, and there are no surprises.
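The configuration files that ship with OctoStack are not reproduced in this article. To give a rough sense of what "managing it like any modern application" can look like, the hypothetical Python sketch below renders a generic Kubernetes `apps/v1` Deployment for a GPU-backed inference container. The container name, image path, port, and replica count are illustrative placeholders, not OctoStack values, and the `nvidia.com/gpu` resource assumes the cluster runs the NVIDIA device plugin.

```python
import json


def gpu_inference_deployment(name: str, image: str, replicas: int, gpus_per_pod: int) -> dict:
    """Return a generic Kubernetes apps/v1 Deployment for a GPU-backed container.

    All names and values here are placeholders; this is not an OctoStack manifest.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],  # hypothetical port
                            # Extended resource exposed by the NVIDIA device plugin.
                            "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = gpu_inference_deployment(
        name="inference-server",  # hypothetical
        image="registry.example.com/inference-server:latest",  # hypothetical
        replicas=2,
        gpus_per_pod=1,
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed output could be piped to `kubectl apply -f -`. In an actual deployment, the manifests or Helm charts provided with OctoStack would take the place of a hand-written script like this.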

The OctoAI APIs stay constant as the models, hardware, and deployment details evolve, presenting one consistent interface for applications. Inferences and data stay within the selected environment, and the deployment can be managed, iterated and optimized entirely within the enterprise’s control. OctoStack lets enterprises:

Easily ensure the required level of control and autonomy over their GenAI models, interactions and data

Access and iteratively explore the latest open source models, fine tunes, or even custom fine-tuned models, all through one unified API with minimal disruption to their applications

Operate their internal GenAI stack like any other modern application, using the same skills, tools, and processes
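To illustrate why a stable API matters to applications, here is a minimal Python sketch in which moving from a SaaS endpoint to an in-environment deployment changes only configuration. It assumes, hypothetically, an OpenAI-style chat-completions request shape; the endpoint URLs, tokens, and model name are placeholders, and the actual OctoAI/OctoStack API details may differ.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceTarget:
    """Where inference requests are sent. Only this differs between deployments."""
    base_url: str
    api_key: str


# Hypothetical endpoints, for illustration only.
SAAS = InferenceTarget("https://saas.example.com/v1", "saas-token")
IN_VPC = InferenceTarget("https://octostack.internal.example/v1", "vpc-token")


def build_chat_request(target: InferenceTarget, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request.

    The payload follows an assumed OpenAI-style schema, not a documented
    OctoStack contract.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=f"{target.base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {target.api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `build_chat_request(SAAS, ...)` and `build_chat_request(IN_VPC, ...)` with the same model and prompt produces identical request bodies; only the destination URL and credentials change, which is the kind of application-level stability the unified API is meant to provide.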

4x better GPU utilization, 50% lower operating costs, and continuous systems optimizations

We are continuously learning from our millions of daily inferences and adding optimizations across the AI systems stack. These improvements reach OctoStack on an ongoing basis, ensuring that the stack always delivers the best utilization and speed for the customer.

Initial benchmarking against best-in-class alternatives reveals 4x better utilization of expensive GPU resources and up to a 50% reduction in operational costs through scaling and upgrade operations. According to empirical research on enterprises, these are among the biggest components of GenAI project budgets today. OctoStack allows these resources to be reallocated to your higher-layer applications and innovations.
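As a rough illustration of why utilization drives cost: for a fixed workload, the number of GPUs that must be provisioned scales inversely with the fraction of each GPU's capacity actually used, that is, GPUs = ceil(load / (peak throughput per GPU × utilization)). The Python sketch below uses made-up inputs (request rate, per-GPU throughput, and utilization levels) purely to show this arithmetic; it does not reproduce the benchmark cited above.

```python
import math


def gpus_needed(requests_per_sec: float, peak_rps_per_gpu: float, utilization: float) -> int:
    """GPUs required to serve a steady load.

    Effective throughput per GPU = peak throughput x achieved utilization.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    effective_rps = peak_rps_per_gpu * utilization
    return math.ceil(requests_per_sec / effective_rps)


# Hypothetical inputs, chosen only to illustrate the formula.
LOAD_RPS = 200.0
PEAK_RPS_PER_GPU = 10.0

baseline = gpus_needed(LOAD_RPS, PEAK_RPS_PER_GPU, utilization=0.2)
improved = gpus_needed(LOAD_RPS, PEAK_RPS_PER_GPU, utilization=0.8)  # 4x the baseline

print(f"baseline: {baseline} GPUs, improved: {improved} GPUs")
```

With these illustrative numbers, quadrupling utilization cuts the required GPU count from 100 to 25. The separate 50% operating-cost figure relates to scaling and upgrade operations and is not modeled here.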

OctoStack builds on OctoAI’s deep history with Apache TVM, model optimization, and hardware portability. OctoAI (formerly OctoML) was founded with a mission to optimize systems for AI model inference. Our founding team includes the inventors of Apache TVM, XGBoost, and MLC LLM, and brings deep expertise in inference serving. Inference optimization is a full-stack problem in the AI world, and the team has been working on it for several years. OctoStack brings these capabilities to the enterprise environment, unlocking better efficiency, highly customizable models, and a reliable production stack, so that enterprises can focus on building on GenAI to power their business without becoming AI infrastructure experts.

Get started today with a live OctoStack demo

OctoStack is generally available for customers today. Reach out to the team if you would like to see a live demo of the product. This will also include a detailed walkthrough of the hardware requirements and options, setting up the deployment, and running pilot inferences to benchmark the deployment. You are also welcome to join our upcoming technical deep dive on OctoStack.

If you are currently using a closed source LLM like GPT-3.5-Turbo or GPT-4 from OpenAI, we have a new promotion to help you accelerate integration of open source LLMs in your applications. Read more about this in the OctoAI Model Remix Program introduction.