Your 10 AI Resolutions for 2024

In this article

1. Explore your model options

2. Finally get that prototype into production

3. Stop overspending

4. Embrace flexibility

5. Become a master of RAG

6. Discover the power of fine tuning

7. Don’t miss out on new models

8. Explore the broader text-gen ecosystem

9. Learn to mix a great model cocktail

10. Be open to change


2024 is here and change is in the air! We’re embracing the season of renewal and transformation by adopting the company name OctoAI. This reflects our ongoing commitment to empowering customers to run, tune, and scale AI applications (yes, it is also the name of our platform – brand confusion is so 2023).

And speaking of AI… are you prepared for what 2024 has in store? If last year was any indication, the pace of AI innovation will continue at warp speed. But don’t worry: OctoAI is here to use our ML systems expertise to help you turn rapid market change into your strategic advantage. Our hardware-independent infrastructure lets you build with any model you choose, so you’re ready for whatever the year brings.

As your partners in GenAI, we wanted to offer some advice to start your new year off right. Since the usual resolutions are off the table (let’s face it, no amount of morning meditation can protect you from the frenzy that is the AI market), we busted out our shiny new planner and wrote up 10 AI Resolutions developers can adopt for a successful and prosperous 2024. And, in the spirit of accountability, our team is going all out throughout January, publishing new educational content and code samples here and on our GitHub repo. We’re also launching new data security features and enhancements to our Text Gen Solution, like the ability to bring any fine-tuned LLM and run it on OctoAI for the same price-per-token as our hosted models. We’ll be tracking our progress all month and sharing the links below, so follow along!

1. Explore your model options

Exclusivity is overrated! If you’re feeling a little too dependent on big, proprietary models from OpenAI or Anthropic, you’re not alone. Last year, ChatGPT took the world by storm and quickly became the default option for developers building text-gen applications. But open source models like Llama2 and Mixtral have emerged as real alternatives, and more are in store for 2024. Are you willing to miss out on these advances because your app is tethered to OpenAI? It might be time to let them down easy. Call it a conscious uncoupling. When you’re ready to start exploring your other options, check out the OctoAI Model Remix Program for up to $15,000 in bonus tokens on the OctoAI Text Gen Solution.

2. Finally get that prototype into production

If 2023 was the year everyone started tinkering with AI, 2024 will be the year we see AI apps flood into the market en masse. DevOps teams around the world will discover what OctoAI customers learned the hard way: scaling generative AI applications is really, really tough. That’s why we architected OctoAI to support massive scale, serving millions of inferences a day with reliable SLAs and a top-notch customer service team.

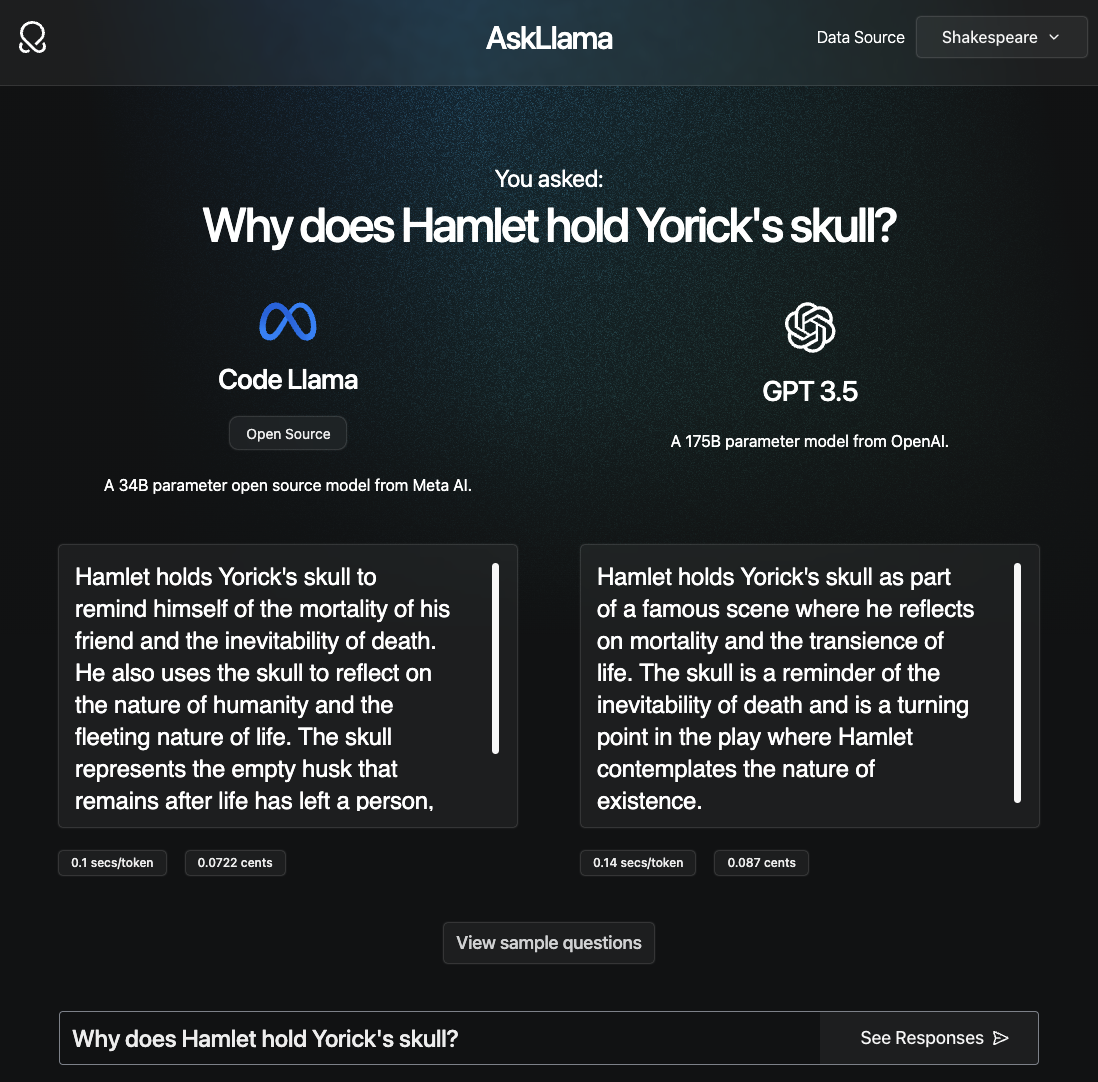

3. Stop overspending

As text-gen apps reach critical scale, one thing becomes abundantly clear: production costs add up. As we like to say: training is a one-time expense, but inference is forever. The app you launch in January could become unsustainably expensive by Q2. There are a few ways to reduce spend, including model optimization or running on cheaper commodity hardware. Another avenue for savings is downsizing the model itself. You may not need the biggest, baddest (most expensive) model to get certain jobs done. In many cases, a smaller, fine-tuned, open-source LLM will work great and cost much less.

An example of CodeLlama generating a response similar to GPT-3.5’s, faster and for less money
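To make “inference is forever” concrete, here is a back-of-the-envelope sketch in Python. It assumes spend scales linearly with tokens processed, and the per-million-token prices are placeholders chosen purely for illustration, not OctoAI’s or any provider’s actual rates; plug in your own traffic and pricing.

```python
def monthly_inference_cost(requests_per_day: int, tokens_per_request: int,
                           usd_per_million_tokens: float, days: int = 30) -> float:
    """Estimate monthly spend as total tokens processed times the per-token price."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Placeholder prices (USD per 1M tokens), for illustration only.
LARGE_MODEL_PRICE = 2.00
SMALL_MODEL_PRICE = 0.20

# Same hypothetical workload: 100k requests/day, ~1k tokens per request.
large_model = monthly_inference_cost(100_000, 1_000, LARGE_MODEL_PRICE)
small_model = monthly_inference_cost(100_000, 1_000, SMALL_MODEL_PRICE)
print(f"large model: ${large_model:,.2f}/month")
print(f"small model: ${small_model:,.2f}/month")
```

Because the estimate is linear in traffic, a 10x jump in daily requests between January and Q2 means a 10x larger bill, which is why the per-token price of the model you choose matters so much at scale.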

4. Embrace flexibility

Building a demo against one proprietary model can be an easy starting point, but you can quickly run into friction as you add production features. Customers often realize that the “walled garden” nature of adjacent tools (e.g., moderation models), prompt engineering work (e.g., pre-packaged completion templates and automations), and programmatic interfaces (e.g., SDKs and APIs) can limit the extensibility and scalability of these early projects.

The alternative is to create pipelines that mix models with different capabilities and strengths, using these integrated architectures to deliver value that is more than the sum of their parts. The key is to build in flexibility early in the adoption process. While this may not be a day-zero priority for many projects, the earlier you consciously prioritize flexibility, the easier and more agile the journey will be as you and your team build on generative AI.
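One way to build in that flexibility is to have pipeline steps depend on a role (such as “rewrite” or “answer”) rather than on a specific vendor SDK. The Python sketch below assumes a minimal `TextModel` interface with a single `complete` method; `StubModel` is a hypothetical stand-in for whatever client wrapper you would actually use.

```python
from typing import Callable, Dict, List, Protocol, Tuple


class TextModel(Protocol):
    """Anything that turns a prompt into text: a hosted API, a local model, etc."""

    def complete(self, prompt: str) -> str: ...


class StubModel:
    """Hypothetical stand-in backend; a real one would wrap an SDK or HTTP client."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"{self.name}({prompt})"


# Each step names a role and builds that role's prompt from the previous output.
Step = Tuple[str, Callable[[str], str]]


def run_pipeline(user_input: str, steps: List[Step], registry: Dict[str, TextModel]) -> str:
    text = user_input
    for role, build_prompt in steps:
        # Look up whichever model currently fills this role.
        text = registry[role].complete(build_prompt(text))
    return text


registry: Dict[str, TextModel] = {
    "rewrite": StubModel("small-rewrite-model"),
    "answer": StubModel("general-llm"),
}
steps: List[Step] = [
    ("rewrite", lambda t: f"rewrite: {t}"),
    ("answer", lambda t: f"answer: {t}"),
]
result = run_pipeline("hi", steps, registry)
print(result)
```

Swapping a model is then a one-line registry change, for example `registry["answer"] = StubModel("another-llm")`, and the rest of the pipeline stays untouched.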

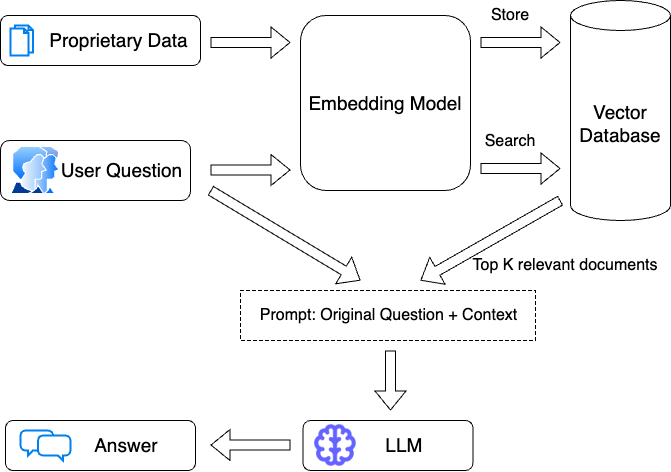

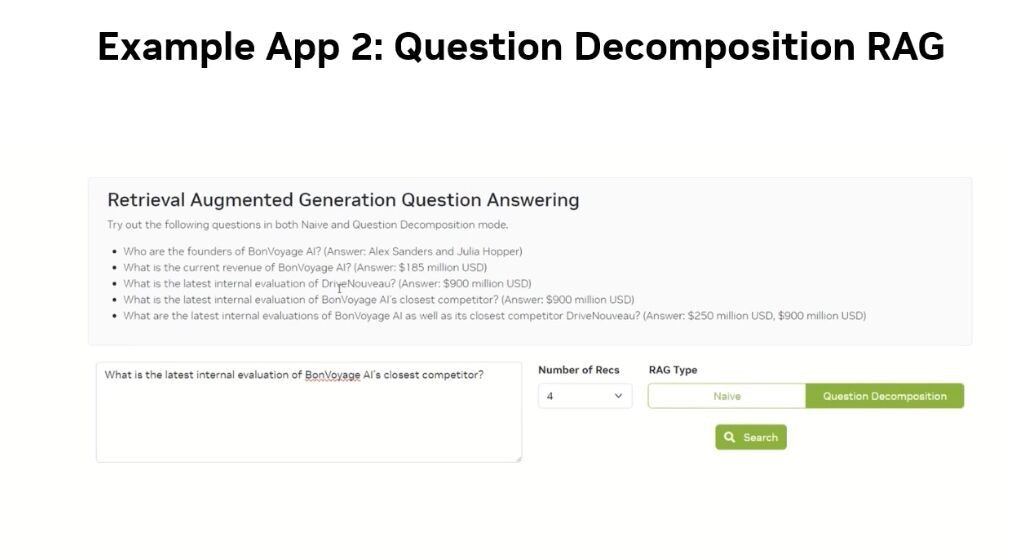

5. Become a master of RAG

Basic RAG architecture credit: LangChain

In 2024, Retrieval Augmented Generation (RAG) is the must-have skill for developers of AI applications. Here’s why: even the largest, most complex LLMs are trained for generalized tasks. They don’t have expertise in a given domain, and they most certainly don’t have access to your company’s data. With RAG, you can easily “augment” the model’s knowledge with external data (think: help docs), making it much more useful for most applications beyond general-purpose chat or summarization. By supplying relevant context and factual information to the LLM, RAG produces more accurate responses (even allowing the model to cite sources), improves auditability and transparency, and lets end users access the same source documents the LLM used to create its answer.
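The core RAG loop (retrieve relevant passages, then place them in the prompt alongside the question) can be sketched in a few lines of Python. This is a simplified illustration: it ranks documents by plain word overlap, where a real system would typically use embeddings and a vector database, and the help-doc snippets and file names are invented for the example.

```python
import re
from typing import List, Set, Tuple

Doc = Tuple[str, str]  # (source id, text)


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[Doc], k: int = 2) -> List[Doc]:
    """Rank docs by word overlap with the query (a stand-in for vector search)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(text)), src, text) for src, text in docs]
    scored.sort(key=lambda item: item[0], reverse=True)
    # Keep the top k, dropping documents that share no terms with the query.
    return [(src, text) for score, src, text in scored[:k] if score > 0]


def build_prompt(query: str, docs: List[Doc]) -> str:
    """Augment the question with retrieved context, tagged by source for citation."""
    context = "\n".join(f"[{src}] {text}" for src, text in retrieve(query, docs))
    return ("Answer using only the context below and cite sources in brackets.\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")


docs = [
    ("help/reset.md", "To reset your password, open Settings and choose Reset password."),
    ("help/billing.md", "Invoices are emailed on the first day of each month."),
    ("help/api.md", "API keys can be rotated from the Settings page."),
]
prompt = build_prompt("How do I reset my password?", docs)
print(prompt)
```

Tagging each passage with its source is what lets the model cite where an answer came from, and lets users open the same documents themselves.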

The great news is that there are tons of resources available to quickly get up to speed on RAG and start building. Get started with our domain-aware LLM roundtable featuring experts from LangChain, Unstructured.io, Lamini, and Rohirrim, or check out this excellent primer from our friends at Pinecone.io.

6. Discover the power of fine tuning

Where RAG excels at grounding LLMs in fact, fine-tuning excels at applying a specific style (e.g., making an LLM write like a lawyer). Fine-tuning your own LLM might not be high on your January bucket list, but leveraging existing fine-tunes from the community is a practical entry point. Model hubs are loaded with open-source fine-tunes you can build with to mimic real-world experiences (like chatting with a real, live customer service rep).

Recent studies have also shown that fine-tuning smaller models (like Mistral 7B) can even deliver better quality than larger models when applied to the right use case and tuned on the right datasets. This can yield order-of-magnitude improvements in performance and cost, both crucial as applications scale to broader needs and higher volumes. Even applications that fundamentally augment data using RAG benefit from fine-tuning, with approaches like question decomposition improving how effectively context data is used in generation.

RAG question decomposition
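Question decomposition can be sketched as follows: an LLM splits a complex question into simpler sub-questions, each sub-question gets its own retrieval and answer, and a final call synthesizes the results. In this sketch `llm` and `retrieve` are assumed to be plain callables you supply (any model client, any retriever), and the prompt wording is illustrative rather than a tuned template.

```python
import re
from typing import Callable, List

LLM = Callable[[str], str]              # assumed: prompt in, text out
Retriever = Callable[[str], List[str]]  # assumed: query in, relevant passages out


def decompose(question: str, llm: LLM) -> List[str]:
    """Ask the model for simpler sub-questions, one per line, and strip list markers."""
    raw = llm("Break this question into simpler sub-questions, one per line:\n" + question)
    subs = [re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip() for line in raw.splitlines()]
    return [s for s in subs if s]


def answer_with_decomposition(question: str, llm: LLM, retrieve: Retriever) -> str:
    notes = []
    for sub in decompose(question, llm):
        # Retrieve per sub-question so each lookup stays narrow and focused.
        context = "\n".join(retrieve(sub))
        sub_answer = llm("Context:\n" + context + "\n\nQuestion: " + sub)
        notes.append(f"Q: {sub}\nA: {sub_answer}")
    # Final pass: synthesize the partial answers into one response.
    return llm("Using these notes, answer: " + question + "\n\n" + "\n\n".join(notes))


# Toy stand-ins so the sketch runs end to end without a real model.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Break this question"):
        return "1. What is A?\n2. What is B?"
    if prompt.startswith("Context:"):
        return "partial answer"
    return "final answer"


def fake_retrieve(query: str) -> List[str]:
    return [f"passage about {query}"]


print(answer_with_decomposition("Compare A and B", fake_llm, fake_retrieve))
```

Fine-tuning fits in naturally here: a model tuned to produce good sub-questions can slot in as the `llm` used by `decompose` without changing the rest of the flow.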

7. Don’t miss out on new models

Let’s start with an example: until December 2023, competitive open source mixture-of-experts (MoE) LLMs were essentially nonexistent. In the two weeks since Mistral launched Mixtral 8x7B, new MoE models have popped up everywhere, occupying the top spots on the Hugging Face Open LLM Leaderboard as of Jan 3, 2024.

What this really highlights is the rate of change, and how vital it is to stay open and flexible to new, emerging models. The MoE approach, which entered mainstream open LLM efforts only weeks ago, is already the dominant approach driving progress. A few months back it was Llama2, and six months ago it was Falcon. In a matter of months, we have seen smaller models, new architectures, and new models leapfrog incumbent approaches and fundamentally raise the bar.

AI developers who want to stay ahead of the curve must be positioned to evaluate new models as they emerge, so they can stay at the forefront and tap into the momentum in this space. This flexibility is what OctoAI customers prioritize, and their products are among the most innovative and fastest-growing new AI products in the market.

So don't be afraid to jump in and try new models. You’re in good company as you make this an active part of your 2024 focus!
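A lightweight way to make evaluating new models routine is a small regression suite you can rerun whenever a new model drops. The sketch below is a deliberately crude, hypothetical harness: each candidate is any prompt-to-text callable, and a case passes if the output contains the expected answer (case-insensitive). Real evaluations would use larger test sets and task-appropriate metrics.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]  # assumed: any prompt-in, text-out callable


def evaluate(models: Dict[str, Model], cases: List[Tuple[str, str]]) -> Dict[str, float]:
    """Return each model's pass rate: the share of cases whose output contains the expected text."""
    scores = {}
    for name, generate in models.items():
        passed = sum(expected.lower() in generate(prompt).lower() for prompt, expected in cases)
        scores[name] = passed / len(cases)
    return scores


# Hypothetical test cases and toy models, for illustration only.
cases = [("What is 2 + 2?", "4"), ("What is the capital of France?", "Paris")]
models: Dict[str, Model] = {
    "incumbent": lambda p: "4" if "2 + 2" in p else "Lyon",
    "challenger": lambda p: "The answer is 4." if "2 + 2" in p else "Paris.",
}
scores = evaluate(models, cases)
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

Keeping the cases fixed and the models pluggable means trying a newly released model is just one more entry in the `models` dictionary.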

8. Explore the broader text-gen ecosystem

We discussed last year how the emergence of Stable Diffusion kicked off the growth of a strong ecosystem of tools and frameworks that acted as a catalyst for the image generation space. LLMs and text generation are no different, and we are already seeing this today with technologies like LangChain, LlamaIndex, and Pinecone. Building on best-of-breed components from the ecosystem can accelerate development and reduce the internal time spent maintaining and upgrading the plumbing your application and experiences need.

Equally important are the AI/ML systems, platforms, and orchestration frameworks that serve these models. These choices determine several important attributes, like the ability to support traffic at scale, the ability to port models to new hardware or clouds, and the SLAs you can deliver to your customers. Understanding and building on these components also lets you choose the parts you want control over, and spend less time and effort on components you need but don't want to build out as your differentiation.

The ecosystem can be a powerful catalyst for your projects and initiatives. Make sure you take the time to explore it!

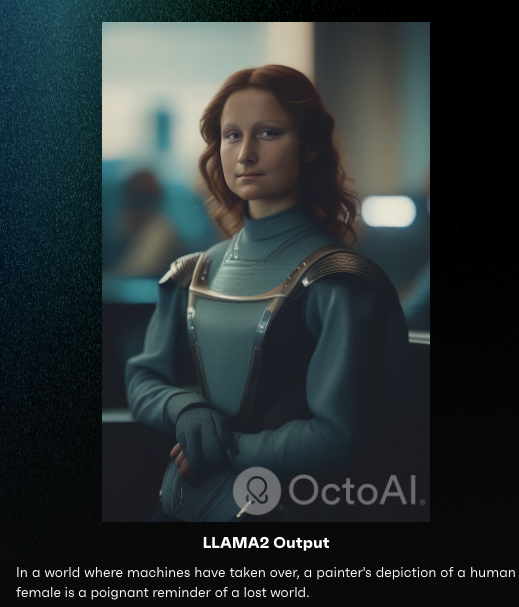

9. Learn to mix a great model cocktail

Individual models are becoming more powerful by the day (not to mention more capable thanks to RAG and fine-tuning), but you can make real multimodal magic when you combine them to mix the perfect “model cocktail.” One of our favorites is using LLMs to generate detailed prompts that automatically produce unique SDXL images.

LLMs like Llama2 and Mixtral are great for image prompting at scale, because they think fast on their feet (relative to us humans). OctoShop uses an image-to-image pipeline that sneakily leverages LLMs to enhance image output.

Example model cocktail output

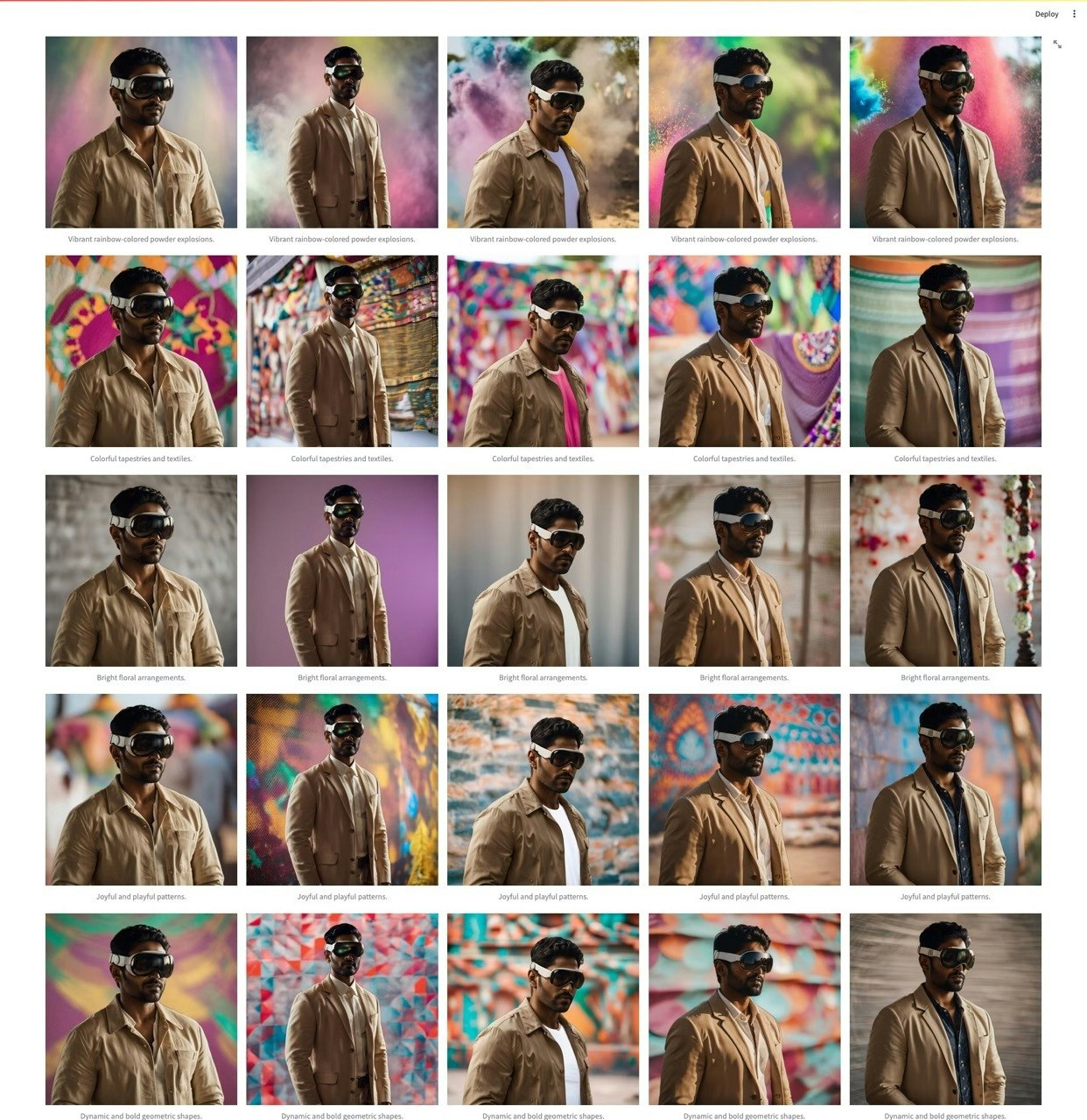

For OctoStudio, we extended this idea to show how to generate a larger number of images. Typically, a user would have to think up each of these prompts and then manually enter it into SDXL. By relying on an LLM instead, you can quickly explore a range of generation ideas from a single keyword. The whole process of creating a gallery is drastically accelerated, with minimal user intervention.

OctoStudio image array
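The keyword-to-gallery idea can be sketched as a two-model pipeline: an LLM expands one keyword into several detailed prompts, and each prompt is sent to an image model. This is not OctoStudio’s actual code; `llm` and `generate_image` are hypothetical callables standing in for an LLM such as Llama2 or Mixtral and an SDXL endpoint, and the instruction text is only an example.

```python
import re
from typing import Callable, List, Tuple

LLM = Callable[[str], str]         # hypothetical: prompt in, text out
ImageModel = Callable[[str], str]  # hypothetical: prompt in, image URL or path out


def expand_keyword(keyword: str, llm: LLM, n: int = 4) -> List[str]:
    """Ask the LLM for n detailed image prompts about one keyword, one per line."""
    instruction = (f"Write {n} distinct, detailed image-generation prompts about "
                   f"'{keyword}'. Put each prompt on its own line.")
    lines = [re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip()
             for line in llm(instruction).splitlines()]
    # Drop blank lines and cap the count in case the model returns extra prompts.
    return [line for line in lines if line][:n]


def build_gallery(keyword: str, llm: LLM, generate_image: ImageModel,
                  n: int = 4) -> List[Tuple[str, str]]:
    """Pair each LLM-written prompt with the image generated from it."""
    return [(prompt, generate_image(prompt)) for prompt in expand_keyword(keyword, llm, n)]


# Toy stand-ins for an LLM and an SDXL endpoint.
def fake_llm(instruction: str) -> str:
    return ("1. a red lighthouse at dusk, oil painting\n"
            "2. a lighthouse in a storm, photorealistic\n"
            "3. a tiny lighthouse diorama, macro shot\n")


gallery = build_gallery("lighthouse", fake_llm, lambda p: f"image://{p}", n=2)
for prompt, image in gallery:
    print(prompt, "->", image)
```

Keeping the two models behind separate callables means either side of the cocktail can be swapped (a different LLM, a different image model) without touching the other.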

10. Be open to change

Since every January tech blog is legally obligated to include predictions for the coming year, here are some things we’re excited about in 2024 (and you should be too!):

The growing utility of smaller, fine-tuned models

The boost that mixture-of-experts has brought to open LLMs

The flexibility that function calling adds to real world applications

Continuing to push the boundaries of local LLMs with MLC-LLM

Bringing fine-tuning, private deployment, and LLM capabilities to the OctoAI platform

Working with partners to increase model portability across hardware types and sizes

Private LLMs: bringing your GenAI secret sauce in-house for privacy, security, and speed

Through it all, our mission remains to simplify and accelerate the adoption of generative AI for our customers. We will do this by always being the best place to run, tune, and scale the latest large language models and frameworks as they arrive in 2024.