Last week, 14 Octonauts packed their bags and headed out to AWS re:Invent. It was a thrill to connect with ML engineers, heads of IT, DevOps and infrastructure leads over the course of four days. We gave more than 200 demos showing how OctoML helps you save on your AI/ML journey, and gave away a dream trip to one lucky winner.

Looking back on a successful event, we found that the best part (as always) was learning from the audience of 55,000 attendees what their goals and challenges are for the coming year.

Three big takeaways

1. Machine learning is still largely inaccessible to developers and infrastructure/ops teams. Training, testing, and deploying ML models is still largely the domain of a select few specialized ML engineers and data scientists. With the rise of hype around “AI-powered applications,” there is fervent buzz around the power of AI/ML, but IT leaders, developers up and down the stack, and infra teams aren’t hands-on… yet. They’re aware that AI/ML is becoming a business-level imperative, and they work closely with colleagues who are in the thick of ML deployment, but most are still firmly on the sidelines of the AI revolution.

Speaking of the AI revolution:

2. Deep learning is having a moment, but classical ML still dominates production AI workloads. Generative models like Stable Diffusion and large natural language models like GPT-3 are opening eyes to the potential of deep learning in the enterprise. But we can’t overlook the classical ML models deployed in the first wave of enterprise AI adoption. All of those recommendation systems, search engines, and document classifiers are still running in production – and still providing business value.

It’s easy to see why they remain popular. Classical ML has some inherent benefits versus deep learning models. The output of classical ML is, in general, easier to interpret than that of more complex neural networks or ensemble models. This is important because explainability can help identify unintentional biases within the system and verify compliance with privacy regulations.

Many of these classical ML workloads, however, are running on pricey, scarce GPUs, when they could be running on less expensive, more abundant CPU architectures. Companies looking to reduce cloud costs should explore GPU-to-CPU migrations for classical ML workloads that are sticking around for the long haul.

Which brings us to our final takeaway:

3. Inference costs are a growing concern in the current economic climate. If even a fraction of AI projects poised to launch in 2023 are to succeed, the cost of serving ML models in production will consume a significant portion of cloud spend. The old adage goes: “Good, fast, or cheap. Pick two.” At AWS re:MARS earlier this year, we spoke with ML practitioners who cared deeply about the first two. Their objective is to deploy high-performance ML models, quickly, with minimal friction. At AWS re:Invent, we spoke with budget owners who are exploring ways to cut costs. The trick will be doing it without slowing down their projects or degrading performance in ways that hurt the user experience.

The team is already looking forward to next year’s event. We predict that the pace of AI/ML adoption will continue to accelerate, and expect to see more DevOps, infrastructure and software engineering teams getting in the mix. In the meantime, we’ll be doing our part to make AI/ML deployment more agile, portable, and cost effective.

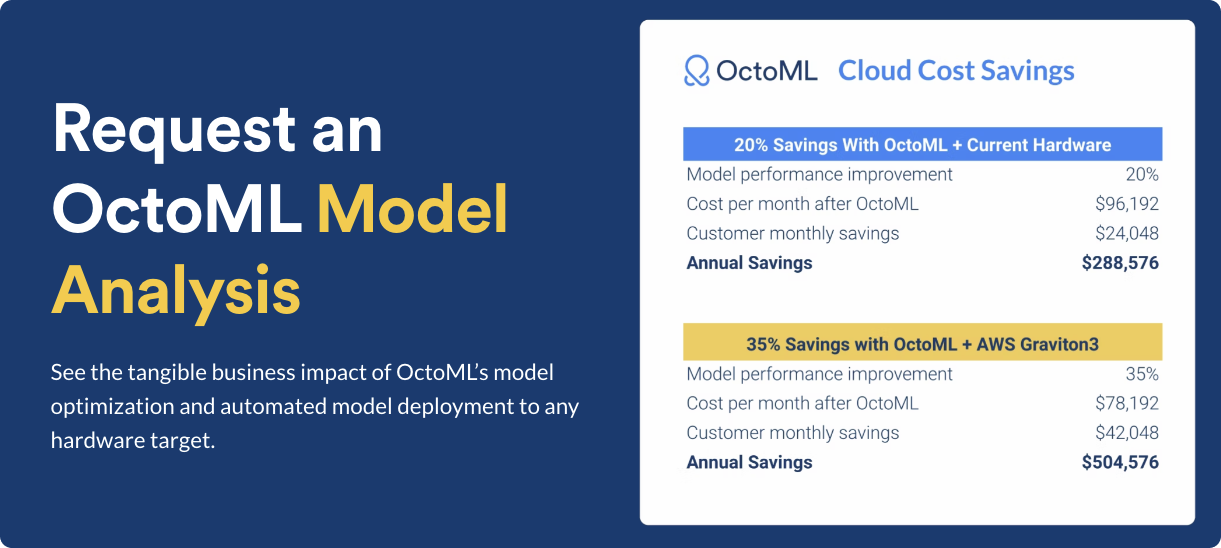

To find out how much you could save on AI/ML in production, request a model analysis