LangChain Python SDK, MoviePy

Nous Hermes 2 Mixtral, Mixtral (in JSON mode), SDXL, SVD 1.1

Apr 4, 2024

Thierry Moreau

Build a GenAI video generation pipeline

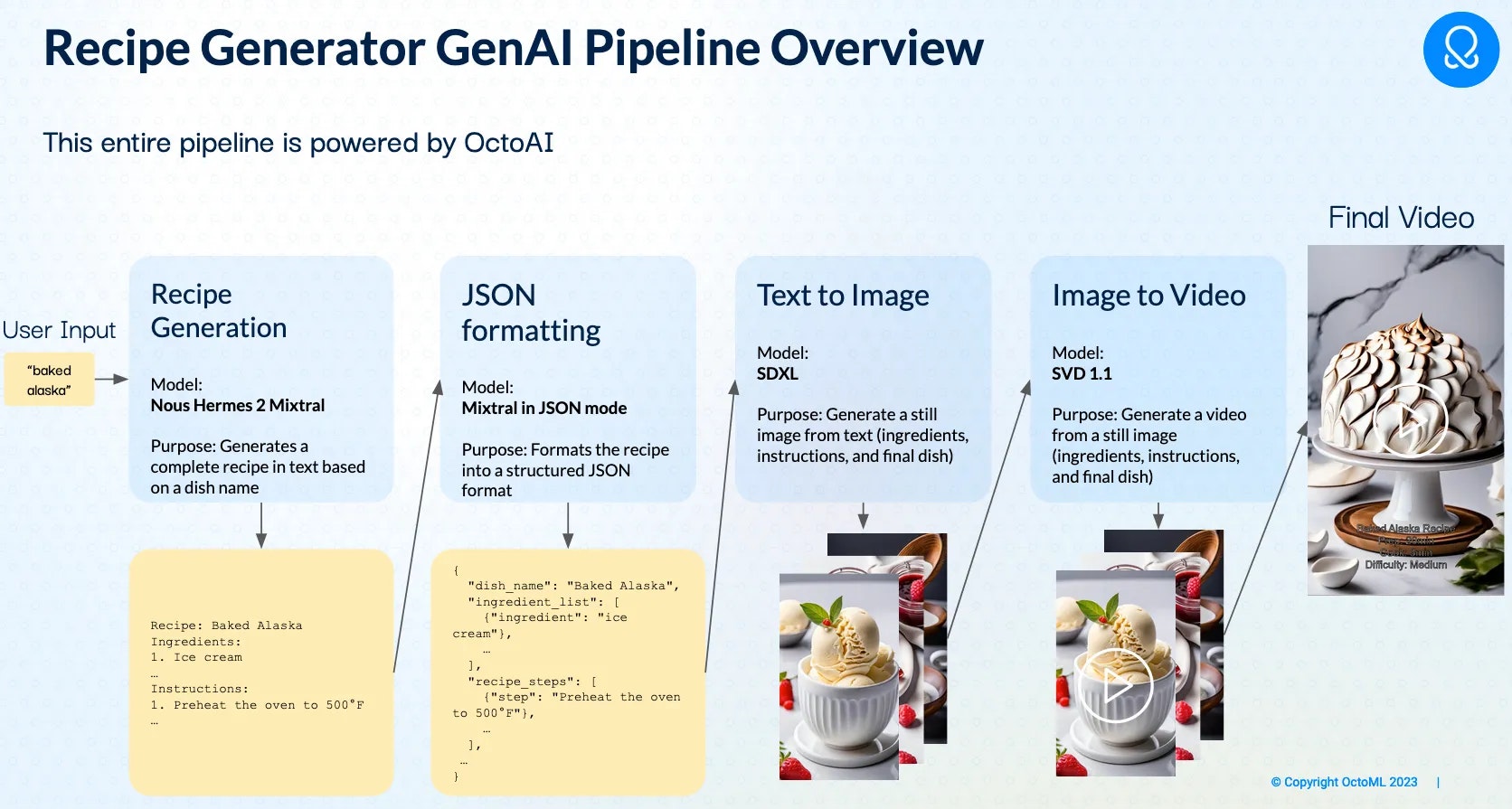

If you want an alternative to Sora, learn how to generate your own one-minute videos from simple text prompts using open source models on OctoAI, all for under $3. I started with a simple idea: name any dish (real or fictional), and the pipeline generates a video showing how to prepare and cook it.

Prerequisites

An OctoAI account

You can deploy all the models yourself on the required hardware, but this can be a long and cumbersome task. The simplest way to get started with all these models is to create an OctoAI account, which comes with $10 of free credit when you sign up.

Create an OctoAI API key

Jupyter notebook

You will need a place to run your code; the easiest option is to launch a Jupyter notebook on your local machine or on Google Colab. See our shared notebook.

Install ImageMagick and the following pip packages:

octoai-sdk

langchain

openai

pillow

ffmpeg

moviepy
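The snippets in this post reference an `OCTOAI_API_TOKEN` variable without showing where it is set. Here is a minimal sketch, assuming you keep the key in an environment variable of the same name. The `load_octoai_token` helper and the choice of variable name are assumptions for illustration and are not part of the OctoAI SDK.

```python
import os
from getpass import getpass


def load_octoai_token(env_var: str = "OCTOAI_API_TOKEN") -> str:
    """Return the API token from the environment, prompting if it is unset.

    Hypothetical helper: neither the function nor the variable name
    comes from the OctoAI SDK.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fall back to a hidden prompt so the key is not typed into a cell in plain text
        token = getpass(f"Enter your OctoAI API key ({env_var}): ").strip()
    if not token:
        raise ValueError(f"No API key provided for {env_var}")
    return token
```

In a notebook you could then write `OCTOAI_API_TOKEN = load_octoai_token()` once, before the later cells that use the variable.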

Overview

The idea: name any dish (real or pretend), and a pipeline generates a video showing the ingredients and how to prepare and cook the dish. The videos need to be high quality and factual so they are ready for TikTok, YouTube, or your favorite video platform.

To make this come together, I need a pipeline of models, each specialized for one stage. Here are the models used and their purposes:

- Nous Hermes 2 Mixtral 8x7B: to generate a recipe from the name of a dish

- Mixtral 8x7B in JSON mode: to convert the recipe into structured JSON with specific fields: recipe title, prep time, cooking time, level of difficulty, ingredients, and instruction steps

- SDXL: to create images of each ingredient, each cooking step, and the final dish

- Stable Video Diffusion 1.1: to animate each image into a short 4-second video

Lastly, I stitch all the video clips together using MoviePy, then add subtitles and a human-generated soundtrack for the full-length video. Let's get started.

#1 Recipe generation with LangChain

I will call Nous Hermes 2 Mixtral through a popular library, the LangChain Python SDK, and the OctoAI LLM endpoint. Simply add the following to your Python script:

from langchain.llms.octoai_endpoint import OctoAIEndpoint

Next, instantiate your OctoAIEndpoint LLM, passing the Nous Hermes 2 Mixtral model (or another model if you prefer) and the maximum number of tokens in the model_kwargs dictionary.

Now, define your prompt template. You want to provide enough rules to get the LLM to create a recipe with the right amount of information and detail. This is important because this text is used in the next generation steps for images and the videos.

Lastly, you should instantiate an LLM chain by passing in the LLM and the prompt template you created. The chain is ready to be invoked by passing in the user input: the name of the dish to generate a recipe. Let's take a look at the code:

from langchain.llms.octoai_endpoint import OctoAIEndpoint
from langchain import PromptTemplate, LLMChain

llm = OctoAIEndpoint(
    endpoint_url="https://text.octoai.run/v1/chat/completions",
    model_kwargs={
        "model": "nous-hermes-2-mixtral-8x7b-dpo",
        "messages": [
            {
                "role": "system",
                "content": "Below is an instruction that describes a task. Write a response that appropriately completes the request.",
            }
        ],
        "stream": False,
        "max_tokens": 1024,
        "temperature": 0.01
    },
)

# Define a recipe template
template = """
You are a food recipe generator.
Given the name of a dish, generate a recipe that's easy to follow and leads to a delicious and creative dish.
Here are some rules to follow at all costs:
1. Provide a list of ingredients needed for the recipe.
2. Provide a list of instructions to follow the recipe.
3. Each instruction should be concise (1 sentence max) yet informative. It's preferred to provide more instruction steps with shorter instructions than fewer steps with longer instructions.
4. For the whole recipe, provide the amount of prep and cooking time, with a classification of the recipe difficulty from easy to hard.
Human: Generate a recipe for a dish called {human_input}
AI: """
prompt = PromptTemplate(template=template, input_variables=["human_input"])

# Set up the language model chain
llm_chain = LLMChain(prompt=prompt, llm=llm)

# Let's request user input for the recipe name
print("Provide a recipe name, e.g. baked alaska")
recipe_title = input()

# Invoke the LLM chain and print the response
response = llm_chain.predict(human_input=recipe_title)
print(response)

Let's test this out by providing the dish name "Doritos consommé", and I should get the following output recipe:

Sure, I'd be happy to help you create a unique dish named "Doritos Consomme". Here's the recipe:

**Ingredients:**

1. 2 cups of Doritos, any flavor

2. 1 small onion, chopped

3. 2 cloves of garlic, minced

4. 1 celery stalk, chopped

5. 1 carrot, chopped

6. 6 cups of vegetable broth

7. Salt and pepper to taste

8. Optional garnish: a handful of crushed Doritos and a sprig of fresh cilantro

**Instructions:**

1. In a large pot, sauté the Doritos, onion, garlic, celery, and carrot over medium heat until the Doritos are slightly toasted and the vegetables are softened.

2. Add the vegetable broth, bring to a boil, then reduce heat and let it simmer for 30 minutes.

3. Strain the mixture through a fine-mesh sieve into a large bowl, pressing on the solids to extract as much liquid as possible.

4. Season the consomme with salt and pepper to taste.

5. Serve the consomme hot, garnished with crushed Doritos and a sprig of fresh cilantro if desired.

**Prep Time:** 10 minutes

**Cooking Time:** 30 minutes

**Difficulty:** Medium (due to the straining process)

Enjoy your creative and delicious Doritos Consomme!

#2 Structured output formatting (JSON) with OpenAI SDK

Now that we have a recipe, we need to create the associated media (images, videos, captions). The free-text format we have now is hard to parse reliably because of all the lists, bullets, and so on. A better approach is to convert the recipe into a JSON object whose parts (ingredients, instructions, and metadata) can each be processed in a well-defined way.

Start by defining a Pydantic class from which to derive a JSON object. The high level structure should look like:

- A dish_name field (string) - name of the dish

- An ingredient_list field (List[Ingredient]) - lists the ingredients. Each Ingredient contains an ingredient field (string) that describes the ingredient and an illustration field (string) that describes a visual for that ingredient.

- A recipe_steps field (List[RecipeStep]) - lists the recipe steps. Each RecipeStep contains a step field (string) that describes the step and an illustration field (string) that describes a visual for that step.

- A prep_time field (int) - prep time in minutes

- A cook_time field (int) - cooking time in minutes

- A difficulty field (string) - difficulty rating of the recipe

Lots of developers like to use the OpenAI SDK, and because OctoAI's API is compatible with it, we can use it to call popular OSS models. You only need to override the OpenAI base URL and API key, as shown below:

client = openai.OpenAI(
    base_url="https://text.octoai.run/v1", api_key=OCTOAI_API_TOKEN
)

Next, when you create the chat completion, set the model to mixtral-8x7b-instruct. Then pass the JSON schema of the Recipe Pydantic class described above as the response format constraint.

client.chat.completions.create(
    model="mixtral-8x7b-instruct",
    # Other arguments
    response_format={"type": "json_object", "schema": Recipe.model_json_schema()}
)

Here is the full code for converting the recipe to JSON, using the OpenAI SDK overridden to invoke Mixtral 8x7B:

import openai
import json
from pydantic import BaseModel, Field
from typing import List

client = openai.OpenAI(
    base_url="https://text.octoai.run/v1", api_key=OCTOAI_API_TOKEN
)

class Ingredient(BaseModel):
    """The object representing an ingredient"""
    ingredient: str = Field(description="Ingredient")
    illustration: str = Field(description="Text-based detailed visual description of the ingredient for a photograph or illustrator")

class RecipeStep(BaseModel):
    """The object representing a recipe step"""
    step: str = Field(description="Recipe step/instruction")
    illustration: str = Field(description="Text-based detailed visual description of the instruction for a photograph or illustrator")

class Recipe(BaseModel):
    """The format of the recipe answer."""
    dish_name: str = Field(description="Name of the dish")
    ingredient_list: List[Ingredient] = Field(description="List of the ingredients")
    recipe_steps: List[RecipeStep] = Field(description="List of the recipe steps")
    prep_time: int = Field(description="Recipe prep time in minutes")
    cook_time: int = Field(description="Recipe cooking time in minutes")
    difficulty: str = Field(description="Rating in difficulty, can be easy, medium, hard")

chat_completion = client.chat.completions.create(
    model="mixtral-8x7b-instruct",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "{}".format(response)},
    ],
    temperature=0,
    response_format={"type": "json_object", "schema": Recipe.model_json_schema()},
    max_tokens=1024
)

formatted_response = chat_completion.choices[0].message.content
recipe_dict = json.loads(formatted_response)
print(json.dumps(recipe_dict, indent=2))

Running the code should produce the below JSON output:

{
  "ingredient_list": [
    {
      "ingredient": "2 cups of Doritos, any flavor",
      "illustration": "A pile of Doritos chips"
    },
    {
      "ingredient": "1 small onion, chopped",
      "illustration": "A chopped onion on a cutting board"
    },
    {
      "ingredient": "2 cloves of garlic, minced",
      "illustration": "Two cloves of garlic on a cutting board"
    },
    {
      "ingredient": "1 celery stalk, chopped",
      "illustration": "A chopped celery stalk"
    },
    {
      "ingredient": "1 carrot, chopped",
      "illustration": "A chopped carrot"
    },
    {
      "ingredient": "6 cups of vegetable broth",
      "illustration": "Six cups of vegetable broth in a pot"
    },
    {
      "ingredient": "Salt and pepper to taste",
      "illustration": "A salt and pepper shaker"
    },
    {
      "ingredient": "Optional garnish: a handful of crushed Doritos and a sprig of fresh cilantro",
      "illustration": "A handful of crushed Doritos and a sprig of fresh cilantro"
    }
  ],
  "recipe_steps": [
    {
      "step": "In a large pot, saut\u00e9 the Doritos, onion, garlic, celery, and carrot over medium heat until the Doritos are slightly toasted and the vegetables are softened.",
      "illustration": "A pot with Doritos, onion, garlic, celery, and carrot being saut\u00e9ed"
    },
    {
      "step": "Add the vegetable broth, bring to a boil, then reduce heat and let it simmer for 30 minutes.",
      "illustration": "A pot with vegetable broth added to the saut\u00e9ed ingredients, being brought to a boil, and then simmering"
    },
    {
      "step": "Strain the mixture through a fine-mesh sieve into a large bowl, pressing on the solids to extract as much liquid as possible.",
      "illustration": "A fine-mesh sieve with the mixture being strained into a large bowl, with pressure being applied to the solids"
    },
    {
      "step": "Season the consomm\u00e9 with salt and pepper to taste.",
      "illustration": "A bowl of consomm\u00e9 being seasoned with salt and pepper"
    },
    {
      "step": "Serve the consomm\u00e9 hot, garnished with crushed Doritos and a sprig of fresh cilantro if desired.",
      "illustration": "A bowl of hot consomm\u00e9 being garnished with crushed Doritos and a sprig of fresh cilantro"
    }
  ],
  "prep_time": 10,
  "cook_time": 30,
  "difficulty": "Medium"
}

Yes! Now you can generate the needed media: images, video, and captions.
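JSON mode constrains the output format, but it can still be worth checking that every field the later steps rely on is present before spending credits on image and video generation. Note that the sample output above does not show a dish_name key, even though the final-dish prompt in the next step reads it. Below is a minimal standard-library sketch; the `missing_recipe_fields` helper and the fallback to `recipe_title` are assumptions for illustration, not part of the original notebook.

```python
import json

# Fields the later steps read from recipe_dict, with their expected Python types
REQUIRED_FIELDS = {
    "dish_name": str,
    "ingredient_list": list,
    "recipe_steps": list,
    "prep_time": int,
    "cook_time": int,
    "difficulty": str,
}


def missing_recipe_fields(recipe: dict) -> list:
    """Return the names of required fields that are absent or of the wrong type."""
    return [
        name
        for name, expected_type in REQUIRED_FIELDS.items()
        if not isinstance(recipe.get(name), expected_type)
    ]


# Shortened stand-in for the model's JSON response
formatted_response = """
{
  "ingredient_list": [{"ingredient": "1 carrot, chopped", "illustration": "A chopped carrot"}],
  "recipe_steps": [{"step": "Season to taste.", "illustration": "A bowl being seasoned"}],
  "prep_time": 10,
  "cook_time": 30,
  "difficulty": "Medium"
}
"""
recipe_dict = json.loads(formatted_response)
recipe_title = "Doritos consommé"  # the dish name typed in step #1

problems = missing_recipe_fields(recipe_dict)
if problems == ["dish_name"]:
    # Fall back to the user's input rather than failing on a missing title
    recipe_dict["dish_name"] = recipe_title
    problems = missing_recipe_fields(recipe_dict)

print(problems)  # an empty list means every required field is present
```

If the list is not empty after the fallback, re-running the JSON-mode request is probably cheaper than debugging a failure halfway through media generation.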

#3 Generate images with SDXL

The JSON object provides a straightforward way to generate an image for each ingredient and recipe step. To create the images, we are going to use SDXL and invoke it using the OctoAI Python SDK.

Instantiate the OctoAI ImageGenerator with the OctoAI API token, then invoke the generate method for all the images you want to create. Pass the following in the arguments:

- engine: selects which model to use (SDXL)

- prompt: describes the image to create

- negative_prompt: describes the attributes we do not want in the image

- width, height: specify the image resolution

- sampler: the sampler used in every denoising step. Read more here.

- steps: sets the number of denoising steps

- cfg_scale: sets the classifier-free guidance (CFG) scale, which controls how closely the image adheres to the prompt

- num_images: sets the number of images to generate at once

- use_refiner: when enabled, uses the SDXL refiner model to enhance image quality

- high_noise_frac: sets the ratio of steps to perform on the base model (SDXL) vs. the refiner model

- style_preset: sets the preset style to apply to both the negative and positive prompts. Learn more here.

Learn about all the options for the OctoAI Media Gen API.

Now the code should look something like this:

import PIL
from octoai.clients.image_gen import Engine, ImageGenerator

# Instantiate the OctoAI SDK image generator
image_gen = ImageGenerator(token=OCTOAI_API_TOKEN)

# Ingredients stills dictionary (Ingredient -> Image)
ingredient_images = {}

# Iterate through the list of ingredients in the recipe dictionary
for ingredient in recipe_dict["ingredient_list"]:
    # We do some simple prompt engineering to achieve a consistent style
    prompt = "RAW photo, Fujifilm XT, clean bright modern kitchen photograph, ({})".format(ingredient["illustration"])
    # The parameters below can be tweaked as needed, the resolution is intentionally set to portrait mode
    image_gen_response = image_gen.generate(
        engine=Engine.SDXL,
        prompt=prompt,
        negative_prompt="Blurry photo, distortion, low-res, poor quality, watermark",
        width=768,
        height=1344,
        num_images=1,
        sampler="DPM_PLUS_PLUS_2M_KARRAS",
        steps=30,
        cfg_scale=12,
        use_refiner=True,
        high_noise_frac=0.8,
        style_preset="Food Photography",
    )
    ingredient_images[ingredient["ingredient"]] = image_gen_response.images[0].to_pil()
    display(ingredient_images[ingredient["ingredient"]])

# Recipe steps stills dictionary (Step -> Image)
step_images = {}

# Iterate through the list of steps in the recipe dictionary
for step in recipe_dict["recipe_steps"]:
    # We do some simple prompt engineering to achieve a consistent style
    prompt = "RAW photo, Fujifilm XT, clean bright modern kitchen photograph, ({})".format(step["illustration"])
    # The parameters below can be tweaked as needed, the resolution is intentionally set to portrait mode
    image_gen_response = image_gen.generate(
        engine=Engine.SDXL,
        prompt=prompt,
        negative_prompt="Blurry photo, distortion, low-res, poor quality, watermark",
        width=768,
        height=1344,
        num_images=1,
        sampler="DPM_PLUS_PLUS_2M_KARRAS",
        steps=30,
        cfg_scale=12,
        use_refiner=True,
        high_noise_frac=0.8,
        style_preset="Food Photography",
    )
    step_images[step["step"]] = image_gen_response.images[0].to_pil()
    display(step_images[step["step"]])

# Final dish in all of its glory
prompt = "RAW photo, Fujifilm XT, clean bright modern kitchen photograph, professionally presented ({})".format(recipe_dict["dish_name"])
image_gen_response = image_gen.generate(
    engine=Engine.SDXL,
    prompt=prompt,
    negative_prompt="Blurry photo, distortion, low-res, poor quality",
    width=768,
    height=1344,
    num_images=1,
    sampler="DPM_PLUS_PLUS_2M_KARRAS",
    steps=30,
    cfg_scale=12,
    use_refiner=True,
    high_noise_frac=0.8,
    style_preset="Food Photography",
)
final_dish_still = image_gen_response.images[0].to_pil()
display(final_dish_still)

Now we should have many still images to work with to create our full video.
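The three generate calls above differ only in their prompt (the final-dish call also drops "watermark" from the negative prompt). One way to keep the settings in a single place is to build the keyword arguments once and unpack them into each call. The sketch below works under that assumption; `SDXL_SETTINGS`, `STYLE_PREFIX`, and `build_prompt` are hypothetical names, and the `engine` argument is left out so the snippet runs without the OctoAI SDK installed.

```python
# Shared SDXL settings, copied from the generate calls in this section
SDXL_SETTINGS = {
    "negative_prompt": "Blurry photo, distortion, low-res, poor quality, watermark",
    "width": 768,
    "height": 1344,
    "num_images": 1,
    "sampler": "DPM_PLUS_PLUS_2M_KARRAS",
    "steps": 30,
    "cfg_scale": 12,
    "use_refiner": True,
    "high_noise_frac": 0.8,
    "style_preset": "Food Photography",
}

STYLE_PREFIX = "RAW photo, Fujifilm XT, clean bright modern kitchen photograph"


def build_prompt(description: str, qualifier: str = "") -> str:
    """Wrap a description in the shared photographic style prefix."""
    subject = f"{qualifier} ({description})" if qualifier else f"({description})"
    return f"{STYLE_PREFIX}, {subject}"


# Per-call overrides are a dict merge away, e.g. for the final dish:
final_dish_settings = {
    **SDXL_SETTINGS,
    "negative_prompt": "Blurry photo, distortion, low-res, poor quality",
}

print(build_prompt("A chopped carrot"))
print(build_prompt("Doritos Consomme", qualifier="professionally presented"))

# With the SDK available, a call would then look like:
# image_gen.generate(engine=Engine.SDXL, prompt=build_prompt(text), **SDXL_SETTINGS)
```

Changing the resolution or sampler then means editing one dictionary instead of three call sites.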

#4 Animate still images with Stable Video Diffusion

Next, we use Stable Video Diffusion 1.1 to animate each of our still images. It is an open source model, but the partnership between OctoAI and Stability AI lets you use SVD 1.1 on OctoAI commercially.

To animate the images with the OctoAI Python SDK, instantiate the OctoAI VideoGenerator with your OctoAI API token, then invoke generate for each animation you want to make. Pass the following arguments:

- engine: selects the model to use (SVD)

- image: the input image, encoded as a base64 string

- steps: sets the number of denoising steps for each video frame

- cfg_scale: sets the classifier-free guidance (CFG) scale, which controls how closely the output adheres to the input image

- fps: sets the number of frames per second

- motion_scale: sets how much motion to include in the animation

- noise_aug_strength: sets how much noise to add to the initial image; a higher value outputs more creative results

- num_videos: sets how many animation outputs to make

Let's take a look at the code for this:

from PIL import Image
from io import BytesIO
from base64 import b64encode
from octoai.clients.video_gen import Engine, VideoGenerator

# We'll need this helper to convert PIL images into a base64 encoded string
def image_to_base64(image: Image.Image) -> str:
    buffered = BytesIO()
    image.save(buffered, format="JPEG")
    img_b64 = b64encode(buffered.getvalue()).decode("utf-8")
    return img_b64

# Instantiate the OctoAI SDK video generator
video_gen = VideoGenerator(token=OCTOAI_API_TOKEN)

# Dictionary that stores the videos for ingredients (ingredient -> video)
ingredient_videos = {}

# Iterate through every ingredient in the recipe
for ingredient in recipe_dict["ingredient_list"]:
    key = ingredient["ingredient"]
    # Retrieve the image from the ingredient_images dict
    still = ingredient_images[key]
    # Generate a video with the OctoAI video generator
    video_gen_response = video_gen.generate(
        engine=Engine.SVD,
        image=image_to_base64(still),
        steps=25,
        cfg_scale=3,
        fps=6,
        motion_scale=0.5,
        noise_aug_strength=0.02,
        num_videos=1,
    )
    video = video_gen_response.videos[0]
    # Store the video in the ingredient_videos dict
    ingredient_videos[key] = video

# Dictionary that stores the videos for recipe steps (step -> video)
steps_videos = {}

# Iterate through every step in the recipe
for step in recipe_dict["recipe_steps"]:
    key = step["step"]
    # Retrieve the image from the step_images dict
    still = step_images[key]
    # Generate a video with the OctoAI video generator
    video_gen_response = video_gen.generate(
        engine=Engine.SVD,
        image=image_to_base64(still),
        steps=25,
        cfg_scale=3,
        fps=6,
        motion_scale=0.5,
        noise_aug_strength=0.02,
        num_videos=1,
    )
    video = video_gen_response.videos[0]
    # Store the video in the steps_videos dict
    steps_videos[key] = video

# Generate a video for the final dish presentation (it'll be used in the intro and at the end)
video_gen_response = video_gen.generate(
    engine=Engine.SVD,
    image=image_to_base64(final_dish_still),
    steps=25,
    cfg_scale=3,
    fps=6,
    motion_scale=0.5,
    noise_aug_strength=0.02,
    num_videos=1,
)
final_dish_video = video_gen_response.videos[0]

It takes about 30 seconds to create each 4-second animation. Because the videos are generated sequentially, this step might take a few minutes to complete. You can easily extend this code to make it asynchronous or to parallelize it.
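As one way to parallelize, a thread pool can issue several requests at once, since each call spends most of its time waiting on the network. The sketch below uses a stand-in `animate_still` function in place of the `video_gen.generate` call so that it runs without credentials. The function names, the `max_workers` value, and whether your account's rate limits allow concurrent requests are all assumptions to verify.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def animate_still(key: str) -> str:
    """Stand-in for the video_gen.generate(...) call; sleeps to mimic latency."""
    time.sleep(0.1)
    return f"video for {key}"


def animate_all(keys, max_workers: int = 4) -> dict:
    """Animate every still concurrently and return a key -> video mapping."""
    keys = list(keys)  # materialize once, since the keys are iterated twice
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so zip pairs each key with its result
        return dict(zip(keys, pool.map(animate_still, keys)))


keys = ["1 carrot, chopped", "1 small onion, chopped", "6 cups of vegetable broth"]
ingredient_videos = animate_all(keys)
print(list(ingredient_videos))
```

In the real pipeline, `animate_still` would look up the still for the key, call the video generator, and return the first video from the response.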

#5 Create a full length video with MoviePy

Using the MoviePy library we can make a montage of the videos.

For every animation, we have corresponding text that goes with it from the recipe_dict JSON object. So, we can use this to create a montage of captions.

Now let's put it all together in a user-friendly way. Each animation has 25 frames at 6 FPS, so it lasts about 4.167 seconds (25 / 6). Because the ingredient list can get long, we trim each ingredient clip to 2 seconds to keep the overall video moving. For the steps portion of the video, we play 4 seconds of each animation because the viewer needs time to read the directions.
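To make the timing concrete, the arithmetic can be sketched in a few lines. The clip counts come from the Doritos consommé example (8 ingredients, 5 steps), and the `build_timeline` helper is a hypothetical name used only for illustration.

```python
FRAMES_PER_CLIP = 25
FPS = 6
INGREDIENT_DURATION = 2  # seconds shown per ingredient clip
STEP_DURATION = 4        # seconds shown per step, intro, and outro clip


def build_timeline(num_ingredients: int, num_steps: int) -> list:
    """Return ((start, end), label) tuples for intro, ingredients, steps, outro."""
    segments = (
        [("intro", STEP_DURATION)]
        + [(f"ingredient {i + 1}", INGREDIENT_DURATION) for i in range(num_ingredients)]
        + [(f"step {i + 1}", STEP_DURATION) for i in range(num_steps)]
        + [("outro", STEP_DURATION)]
    )
    timeline, elapsed = [], 0
    for label, duration in segments:
        timeline.append(((elapsed, elapsed + duration), label))
        elapsed += duration
    return timeline


raw_clip_seconds = FRAMES_PER_CLIP / FPS
timeline = build_timeline(num_ingredients=8, num_steps=5)
total_seconds = timeline[-1][0][1]

print(round(raw_clip_seconds, 3))  # 4.167
print(total_seconds)               # 44
```

With these durations the example video runs 44 seconds; a recipe with more ingredients or steps pushes it toward the one-minute mark. The ((start, end), label) tuples have the same shape as the subtitle entries used in the MoviePy script.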

The script below:

- Stitches together the animations in this order: final dish, all ingredients, all instructions, and the final dish again as the ending

- Adds subtitles throughout the video so the instructions are easy to follow

- Adds a soundtrack to the video to delight users

from IPython.display import Video
from moviepy.editor import *
from moviepy.video.tools.subtitles import SubtitlesClip
import subprocess
import textwrap

# Video collage
collage = []

# To prepare the closed captions of the video, we define
# two durations: ingredient duration (2.0s) and step duration (4.0s)
ingredient_duration = 2
step_duration = 4

# We keep track of the time elapsed
time_elapsed = 0

# This subs list will contain tuples in the following form:
# ((t_start, t_end), "caption")
subs = []

# Let's create the intro clip presenting the final dish
with open('final_dish.mp4', 'wb') as wfile:
    wfile.write(final_dish_video.to_bytes())
# Trim to step_duration so the clip length matches its subtitle timing
vfc = VideoFileClip('final_dish.mp4').subclip(0, step_duration)
collage.append(vfc)

# Add the subtitle which provides the name of the dish, along with prep time, cook time and difficulty
subs.append(((time_elapsed, time_elapsed + step_duration), "{} Recipe\nPrep: {}min\nCook: {}min\nDifficulty: {}".format(
    recipe_dict["dish_name"].title(), recipe_dict["prep_time"], recipe_dict["cook_time"], recipe_dict["difficulty"])))
time_elapsed += step_duration

# Go through the ingredients list to stitch together the ingredients clip
for idx, ingredient in enumerate(recipe_dict["ingredient_list"]):
    # Write the video to disk and load it as a VideoFileClip
    key = ingredient["ingredient"]
    video = ingredient_videos[key]
    with open('clip_ingredient_{}.mp4'.format(idx), 'wb') as wfile:
        wfile.write(video.to_bytes())
    vfc = VideoFileClip('clip_ingredient_{}.mp4'.format(idx))
    vfc = vfc.subclip(0, ingredient_duration)
    collage.append(vfc)
    # Add the subtitle which just provides each ingredient
    subs.append(((time_elapsed, time_elapsed + ingredient_duration), "Ingredients:\n{}".format(textwrap.fill(ingredient["ingredient"], 35))))
    time_elapsed += ingredient_duration

# Go through the recipe steps to stitch together each step of the recipe video
for idx, step in enumerate(recipe_dict["recipe_steps"]):
    # Write the video to disk and load it as a VideoFileClip
    key = step["step"]
    video = steps_videos[key]
    with open('clip_step_{}.mp4'.format(idx), 'wb') as wfile:
        wfile.write(video.to_bytes())
    vfc = VideoFileClip('clip_step_{}.mp4'.format(idx))
    # Play 4 seconds of each step so the clip stays in sync with its subtitle
    vfc = vfc.subclip(0, step_duration)
    collage.append(vfc)
    # Add the subtitle which just provides each recipe step (numbered from 1)
    subs.append(((time_elapsed, time_elapsed + step_duration), "Step {}:\n{}".format(idx + 1, textwrap.fill(step["step"], 35))))
    time_elapsed += step_duration

# Add the outro clip
vfc = VideoFileClip('final_dish.mp4').subclip(0, step_duration)
collage.append(vfc)

# Add the subtitle: Enjoy your {recipe_title}
subs.append(((time_elapsed, time_elapsed + step_duration), "Enjoy your {}!".format(recipe_title.title())))
time_elapsed += step_duration

# Concatenate the clips into one initial collage
final_clip = concatenate_videoclips(collage)
final_clip.write_videofile("collage.mp4", fps=vfc.fps)

# Add subtitles to the collage
generator = lambda txt: TextClip(
    txt,
    font='Century-Schoolbook-Roman',
    fontsize=30,
    color='white',
    stroke_color='black',
    stroke_width=1.5,
    method='label',
    transparent=True
)
subtitles = SubtitlesClip(subs, generator)
result = CompositeVideoClip([final_clip, subtitles.margin(bottom=70, opacity=0).set_pos(('center', 'bottom'))])
result.write_videofile("collage_sub.mp4", fps=vfc.fps)

# Now add a soundtrack: you can browse https://pixabay.com for a track you like
# I'm downloading a track called "once in paris" by artist pumpupthemind
subprocess.run(["wget", "-O", "audio_track.mp3", "http://cdn.pixabay.com/download/audio/2023/09/29/audio_0eaceb1002.mp3"])

# Add the soundtrack to the video
videoclip = VideoFileClip("collage_sub.mp4")
audioclip = AudioFileClip("audio_track.mp3").subclip(0, videoclip.duration)
video = videoclip.set_audio(audioclip)
video.write_videofile("collage_sub_sound.mp4")

Open "collage_sub_sound.mp4" to see the full video on how to make Doritos consommé. Check out our video of "skittles omelette" too.

Conclusion

Sora is not yet mainstream, but if you get creative you already have the building blocks to create highly usable GenAI media today.

How did your video turn out? What recipe did you try to create? Feel free to show us in Discord. We are looking forward to seeing what you build.