Pinecone, LangChain, BeautifulSoup4

Pinecone

Llama 2 13B Chat

Jan 11, 2024

Jan 12, 2024

Bassem Yacoube

DocTalk


The DocTalk demo is a RAG (retrieval-augmented generation) Python app that uses pinecone, langchain, and beautifulsoup4 to build a chat app that can search, summarize, and answer questions from two documentation data sources. It shows how to perform document processing, embedding generation, and conversational retrieval using OctoAI LLM and embeddings models, with Pinecone as the vector database. You can run it both as a CLI app and as an AWS Lambda function.
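To make the retrieve-then-generate flow concrete, here is a minimal, dependency-free sketch of the retrieval half of RAG. It is not the app's code: a toy bag-of-words vector stands in for the OctoAI embeddings model, a plain Python list stands in for the Pinecone index, and the names `embed`, `top_k`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts (stand-in for a real embeddings model)
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank stored chunks by similarity to the query (stand-in for a vector DB query)
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # Put the retrieved chunks into the prompt that would be sent to the chat model
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "Pinecone stores vectors and answers similarity queries.",
    "AWS Lambda runs code without provisioning servers.",
    "LangChain chains together document loaders, retrievers and LLMs.",
]
question = "How does Pinecone answer similarity queries?"
context = top_k(question, docs, k=1)
print(build_prompt(question, context))
```

In the real app, the embedding call, the similarity search, and the final LLM call go to external services; only the shape of the pipeline carries over.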

Prerequisites

Install Python along with the app dependencies on your system

Install these packages using pip:

pip install -r requirements.txt

Setup a

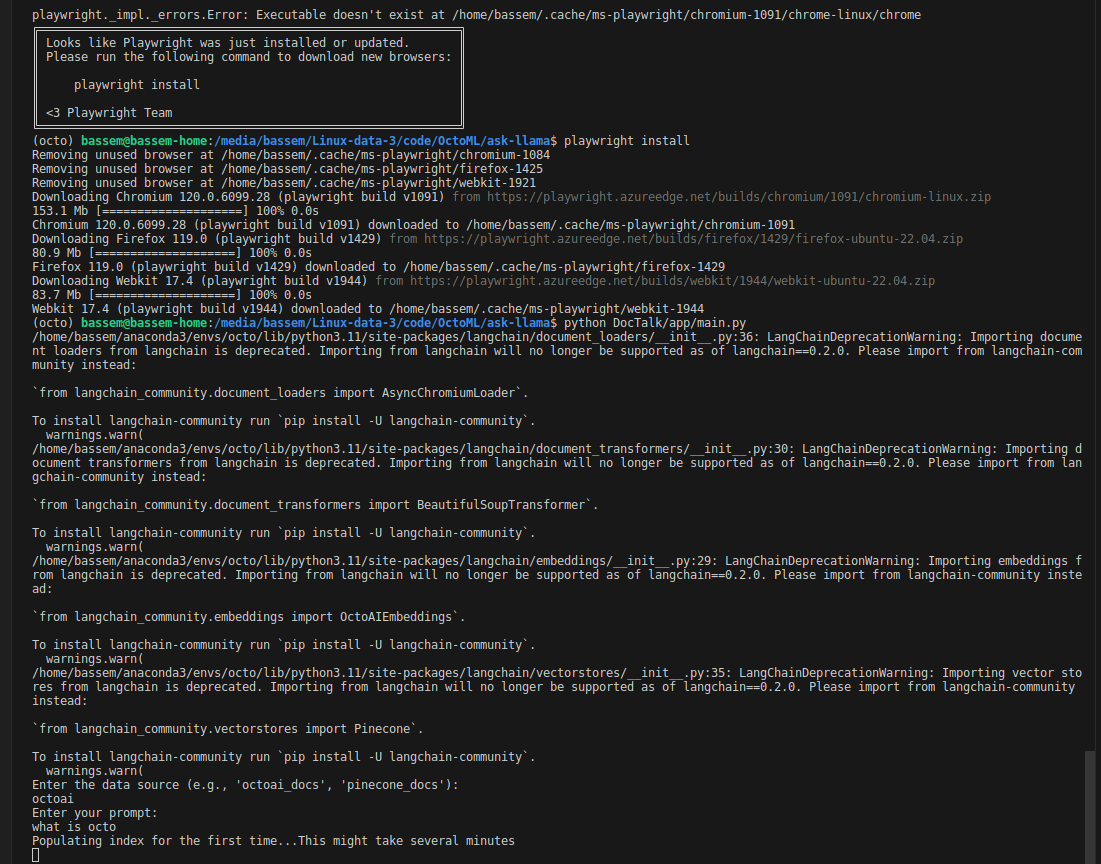

.envfile in the root of the project with necessary environment variables, and you will need:The playwrite module is required for the LangChain document loader

Initialize before first use with the following command:

python3 -m playwright install

Environment variables

Make sure you have the .env file in the project's app directory, following this template:

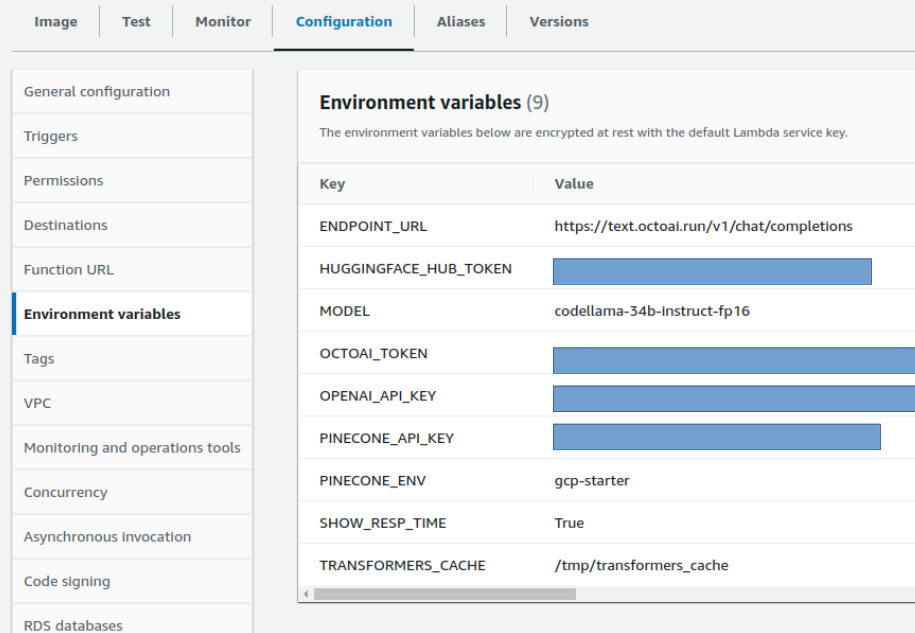

PINECONE_API_KEY=YOUR-TOKEN
PINECONE_ENV=gcp-starter
OCTOAI_TOKEN=YOUR-TOKEN
OCTOAI_ENDPOINT_URL="https://text.octoai.run/v1/chat/completions"
OCTOAI_MODEL="llama-2-13b-chat-fp16"

Replace the placeholder values with your actual API keys and endpoints. Remember to update the PINECONE_ENV variable if you are using an existing Pinecone environment.
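The source does not say how the app reads these values (python-dotenv is a common choice). As an illustration only, here is a standard-library sketch that loads a `.env` file and fails fast when a variable is missing; `load_env_file`, `check_required`, and `REQUIRED_VARS` are hypothetical names.

```python
import os
from pathlib import Path

# Variable names from the .env template (hypothetical constant)
REQUIRED_VARS = ["PINECONE_API_KEY", "PINECONE_ENV", "OCTOAI_TOKEN",
                 "OCTOAI_ENDPOINT_URL", "OCTOAI_MODEL"]


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Parse KEY=VALUE lines (skipping blanks and comments) and export them to os.environ."""
    values: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one pair of surrounding quotes, e.g. OCTOAI_MODEL="llama-2-13b-chat-fp16"
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    # Variables already set in the real environment take precedence
    for key, value in values.items():
        os.environ.setdefault(key, value)
    return values


def check_required(names: list[str] = REQUIRED_VARS) -> None:
    """Raise early with a readable message instead of failing deep inside an API call."""
    missing = [n for n in names if not os.environ.get(n)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
```

Calling `load_env_file()` and then `check_required()` at the top of the entry point surfaces a missing token before any slow indexing work starts.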

Running the app

As a command-line interface (CLI)

To run the app from the command line, execute the main script: python main.py

You will be prompted to enter the data source and your query. After you provide these inputs, the application processes the request and displays the output. Note: the first run takes several minutes because the app populates the Pinecone index; subsequent runs should be faster.
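The slow first run comes from embedding and upserting every document chunk. One common way to make later runs fast is to check whether the index already holds vectors and skip ingestion if it does. The sketch below uses an in-memory stand-in for the vector index; `InMemoryIndex` and `ensure_populated` are hypothetical, and the app's actual check against Pinecone may differ.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class InMemoryIndex:
    # Hypothetical stand-in for a vector index: id -> (vector, metadata)
    vectors: dict = field(default_factory=dict)

    def count(self) -> int:
        return len(self.vectors)

    def upsert(self, items: list[tuple[str, list[float], dict]]) -> None:
        for vec_id, vector, metadata in items:
            self.vectors[vec_id] = (vector, metadata)


def ensure_populated(index: InMemoryIndex, chunks: list[str],
                     embed: Callable[[str], list[float]], batch_size: int = 100) -> bool:
    """Embed and upsert chunks only if the index is empty; return True if it ingested."""
    if index.count() > 0:
        return False  # later runs: reuse the vectors stored by the first run
    for start in range(0, len(chunks), batch_size):
        batch = chunks[start:start + batch_size]
        # Stable ids make a re-run overwrite rather than duplicate vectors
        index.upsert([(f"chunk-{start + i}", embed(text), {"text": text})
                      for i, text in enumerate(batch)])
    return True


def fake_embed(text: str) -> list[float]:
    # Toy embedding for the demo only
    return [float(len(text))]


index = InMemoryIndex()
print(ensure_populated(index, ["a", "bb", "ccc"], fake_embed, batch_size=2))  # True
print(ensure_populated(index, ["a", "bb", "ccc"], fake_embed, batch_size=2))  # False
```

Batching keeps each upsert request small, and storing the chunk text as metadata lets the retriever return readable passages alongside the vectors.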

As an AWS Lambda function

Package the app with all its dependencies, upload it to AWS Lambda, and set the handler to main.handler.
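Lambda calls the configured Python handler as `handler(event, context)`. Here is a minimal sketch of what `main.handler` could look like behind an API Gateway proxy integration. The request shape (a JSON body with `source` and `query`) and the `answer_question` helper are hypothetical placeholders, not the app's actual code.

```python
import json


def answer_question(source: str, query: str) -> str:
    # Hypothetical placeholder for the app's retrieval + LLM call
    return f"[{source}] answer to: {query}"


def handler(event, context):
    """AWS Lambda entry point, assuming an API Gateway proxy-style event."""
    try:
        body = json.loads(event.get("body") or "{}")
        source, query = body["source"], body["query"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return {"statusCode": 400,
                "body": json.dumps({"error": "expected JSON body with 'source' and 'query'"})}
    answer = answer_question(source, query)
    # Proxy integrations expect statusCode, headers, and a string body
    return {"statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"answer": answer})}
```

Returning a 400 for malformed input keeps bad requests from consuming the long timeout the function is configured with.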

Deploying the app as a container image

You will need:

An AWS account

A configured AWS CLI

Docker installed

To deploy the Python app to AWS Lambda using a container image, follow these steps:

Prepare the Dockerfile: make sure it is set up correctly to build a container image for AWS Lambda. It should:

Specify the base image

Copy your app code into the container

Install any dependencies

Set the entry point for your Lambda function
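The four Dockerfile steps above map onto a handful of Dockerfile instructions. To keep the examples in one language, this Python snippet renders such a Dockerfile. It assumes an AWS-provided Lambda Python base image (`public.ecr.aws/lambda/python`) and the `main.handler` entry point; the Python version and copying the whole project directory are assumptions, not the project's actual file.

```python
def render_dockerfile(python_version: str = "3.11", handler: str = "main.handler") -> str:
    """Return Dockerfile text for a Lambda container image (a sketch under stated assumptions)."""
    lines = [
        # 1. Base image: an AWS Lambda Python runtime image (version is an assumption)
        f"FROM public.ecr.aws/lambda/python:{python_version}",
        # 3. Install dependencies first so Docker can cache this layer
        "COPY requirements.txt ${LAMBDA_TASK_ROOT}",
        "RUN pip install -r requirements.txt",
        # 2. Copy the app code into the function directory
        "COPY . ${LAMBDA_TASK_ROOT}",
        # 4. Entry point: the Lambda runtime imports main and calls handler
        f'CMD ["{handler}"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile(), end="")
```

Redirecting the output (for example `python render_dockerfile.py > Dockerfile`, with a hypothetical script name) produces a file you can then adapt by hand.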

Build the container image with the AWS SAM CLI, generally with the command:

sam build --use-container

Test locally (optional, but recommended) with SAM to check that the Lambda function works:

sam local invoke
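`sam local invoke` can take a test event through its `--event` (`-e`) option. This sketch prints a minimal API Gateway proxy-style event; the `source` and `query` body fields are a hypothetical request shape, and `event.json` is an assumed filename. For a fuller template, `sam local generate-event apigateway aws-proxy` can generate one.

```python
import json


def make_test_event(source: str, query: str) -> dict:
    """Minimal API Gateway proxy-style event; the body fields are hypothetical."""
    return {
        "httpMethod": "POST",
        "path": "/",
        "headers": {"Content-Type": "application/json"},
        # Proxy integrations deliver the request body as a JSON *string*
        "body": json.dumps({"source": source, "query": query}),
        "isBase64Encoded": False,
    }


if __name__ == "__main__":
    print(json.dumps(make_test_event("docs", "What is a vector database?"), indent=2))
```

Save the output with `python make_event.py > event.json` (hypothetical script name), then run `sam local invoke -e event.json`.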

To deploy to AWS Lambda, follow these steps:

Upload the container image to Amazon Elastic Container Registry (ECR)

You can use the Docker CLI to do this

Use the AWS SAM CLI to deploy the app

Package the app and deploy using a SAM template

sam package --output-template-file packaged.yaml --s3-bucket

sam deploy --template-file packaged.yaml --stack-name --capabilities CAPABILITY_IAM
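With the Docker CLI, pushing to ECR comes down to logging in, tagging, and pushing. This Python sketch only assembles the commands and does not run them. The account ID, region, repository name, and local image name are placeholders, and it assumes the ECR repository already exists.

```python
def ecr_push_commands(account_id: str, region: str, repo: str,
                      local_image: str, tag: str = "latest") -> list[str]:
    """Build the shell commands to push a local image to ECR (inputs are trusted placeholders)."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    remote = f"{registry}/{repo}:{tag}"
    return [
        # Authenticate Docker against the private ECR registry
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        # Tag the locally built image with the ECR repository URI
        f"docker tag {local_image} {remote}",
        # Push the image so Lambda can pull it
        f"docker push {remote}",
    ]


for cmd in ecr_push_commands("123456789012", "us-east-1", "doctalk", "doctalk:latest"):
    print(cmd)
```

The pushed image URI (`<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>`) is what the Lambda function or SAM template then points at.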

Configure Lambda and API Gateway

Do this in the AWS Management Console:

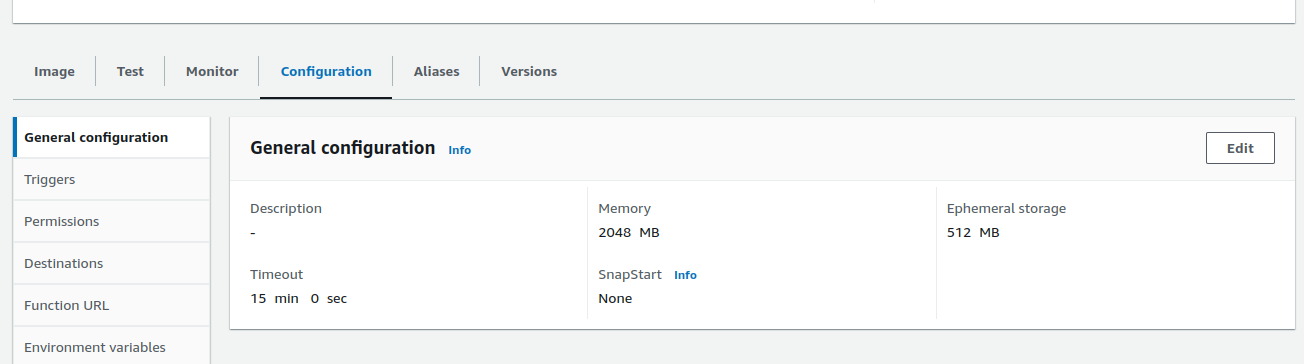

Increase the function timeout to 15 minutes

Scale up resources: increase the memory to 2048 MB

Add the variables from your .env file as environment (configuration) variables
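The same settings can also be applied from code rather than the console. This sketch uses boto3's Lambda `update_function_configuration`, which accepts `Timeout` in seconds, `MemorySize` in MB, and `Environment`. The function name is a placeholder, and the helper only builds the arguments unless `apply=True`, so it runs without AWS credentials.

```python
import os

# Variable names from the .env template
ENV_KEYS = ["PINECONE_API_KEY", "PINECONE_ENV", "OCTOAI_TOKEN",
            "OCTOAI_ENDPOINT_URL", "OCTOAI_MODEL"]


def lambda_config_kwargs(function_name: str, env: dict[str, str]) -> dict:
    """Arguments for update_function_configuration matching the console steps."""
    return {
        "FunctionName": function_name,
        "Timeout": 15 * 60,  # 15 minutes, expressed in seconds (the Lambda maximum)
        "MemorySize": 2048,  # MB
        "Environment": {"Variables": {k: env[k] for k in ENV_KEYS if k in env}},
    }


def configure_lambda(function_name: str, apply: bool = False) -> dict:
    kwargs = lambda_config_kwargs(function_name, dict(os.environ))
    if apply:
        import boto3  # requires boto3 and AWS credentials
        boto3.client("lambda").update_function_configuration(**kwargs)
    return kwargs
```

Note that passing `Environment` replaces the function's whole set of environment variables rather than merging with it, so include every variable the app needs.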

You can follow the steps in the AWS API Gateway docs, or use the Python script api-gateway.py to create an API Gateway for the Lambda function that allows calls over HTTPS.
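Once the API Gateway endpoint exists, any HTTPS client can call it. Here is a standard-library sketch. The URL is a placeholder for the invoke URL that API Gateway gives you, and the JSON body with `source` and `query` is a hypothetical request shape that must match whatever the handler expects.

```python
import json
import urllib.request


def build_request(api_url: str, source: str, query: str) -> urllib.request.Request:
    # POST a JSON body; the field names are hypothetical
    payload = json.dumps({"source": source, "query": query}).encode("utf-8")
    return urllib.request.Request(
        api_url, data=payload, method="POST",
        headers={"Content-Type": "application/json"},
    )


def ask(api_url: str, source: str, query: str, timeout: float = 60.0) -> dict:
    # Send the request and decode the JSON response body
    with urllib.request.urlopen(build_request(api_url, source, query), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Example (placeholder URL):
# print(ask("https://<api-id>.execute-api.<region>.amazonaws.com/prod/", "docs", "What is RAG?"))
```

Separating request construction from sending makes the request easy to inspect without a live endpoint.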

Contribute

We would love to have your contributions to this project, as long as the code follows the project's coding standards and includes appropriate testing.
