What is a Mixtral of Experts?

Mixtral 8x7B, or just “Mixtral” to its friends, is the latest model released by pioneering French AI startup Mistral. Launched in December 2023 (with the paper coming out in January 2024), it represents a significant extension of the prior foundational large language model, Mistral 7B.

Mixtral is an 8x7B Sparse Mixture of Experts (SMoE) language model with more substantial capabilities than the original Mistral 7B. It’s larger, using about 13B active parameters per token during inference out of 47B total parameters, and it supports multiple languages, code, and a 32k-token context window.

Here, we explain the details of the Mixtral language model architecture and its performance against leading LLMs like LLaMa 1/2 and GPT-3.5. We’ll also examine how developers can use Mixtral in their work and build on open-source models. Let’s begin.

What is Mixtral?

Mixtral is a mixture-of-experts (MoE) network, as GPT-4 is widely reported (though not officially confirmed) to be. The idea behind MoE is to divide a complex problem into simpler parts, each handled by a specialized expert. The experts are typically individual models or neural networks that are good at solving specific tasks or operating on particular data types. A gating network then decides how to weight the output of each expert for a given input, effectively choosing which experts are most relevant or blending their outputs in a meaningful way.

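While GPT-4 is rumored to consist of eight expert models of roughly 222B parameters each (OpenAI has not confirmed this), Mixtral is a mixture of 8 experts built on the 7B architecture. Only the feed-forward blocks are replicated as experts, while the attention layers are shared, which is why the total is 47B parameters rather than 8 × 7B = 56B. Because each token uses only a subset of the total parameters during decoding, Mixtral allows faster inference at small batch sizes and higher throughput at large batch sizes.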
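To see where the 47B total and 13B active figures come from, here is a rough back-of-the-envelope count. The hyperparameters below are the ones reported in the Mixtral paper's architecture table, not values stated in this article, so treat them as assumptions; the function name `count_params` is purely illustrative.

```python
# Hyperparameters as reported in the Mixtral paper (assumed here for illustration).
DIM = 4096          # model (embedding) dimension
N_LAYERS = 32       # number of Transformer blocks
HIDDEN_DIM = 14336  # feed-forward hidden size of each expert
N_HEADS = 32        # query heads
N_KV_HEADS = 8      # key/value heads (grouped-query attention)
HEAD_DIM = 128
VOCAB = 32000
N_EXPERTS = 8
TOP_K = 2


def count_params(experts_counted: int) -> int:
    """Count parameters when `experts_counted` experts per layer are included."""
    # Attention: Wq and Wo use all query heads, Wk and Wv use the fewer KV heads.
    attention = 2 * DIM * N_HEADS * HEAD_DIM + 2 * DIM * N_KV_HEADS * HEAD_DIM
    expert = 3 * DIM * HIDDEN_DIM   # each expert is a feed-forward block with three matrices
    router = DIM * N_EXPERTS        # gating network: one score per expert
    norms = 2 * DIM                 # two normalization layers per block
    per_layer = attention + experts_counted * expert + router + norms
    embeddings = 2 * VOCAB * DIM    # input embedding + output projection
    final_norm = DIM
    return N_LAYERS * per_layer + embeddings + final_norm


total_params = count_params(N_EXPERTS)  # every expert is stored
active_params = count_params(TOP_K)     # only two experts run per token

print(f"total:  {total_params / 1e9:.1f}B")
print(f"active: {active_params / 1e9:.1f}B")
```

Under these assumptions the script reports about 46.7B total and 12.9B active parameters, which round to the 47B and 13B quoted above. Note that all 47B parameters still have to be held in memory; the savings are in compute per token.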

Sparse Mixture of Experts

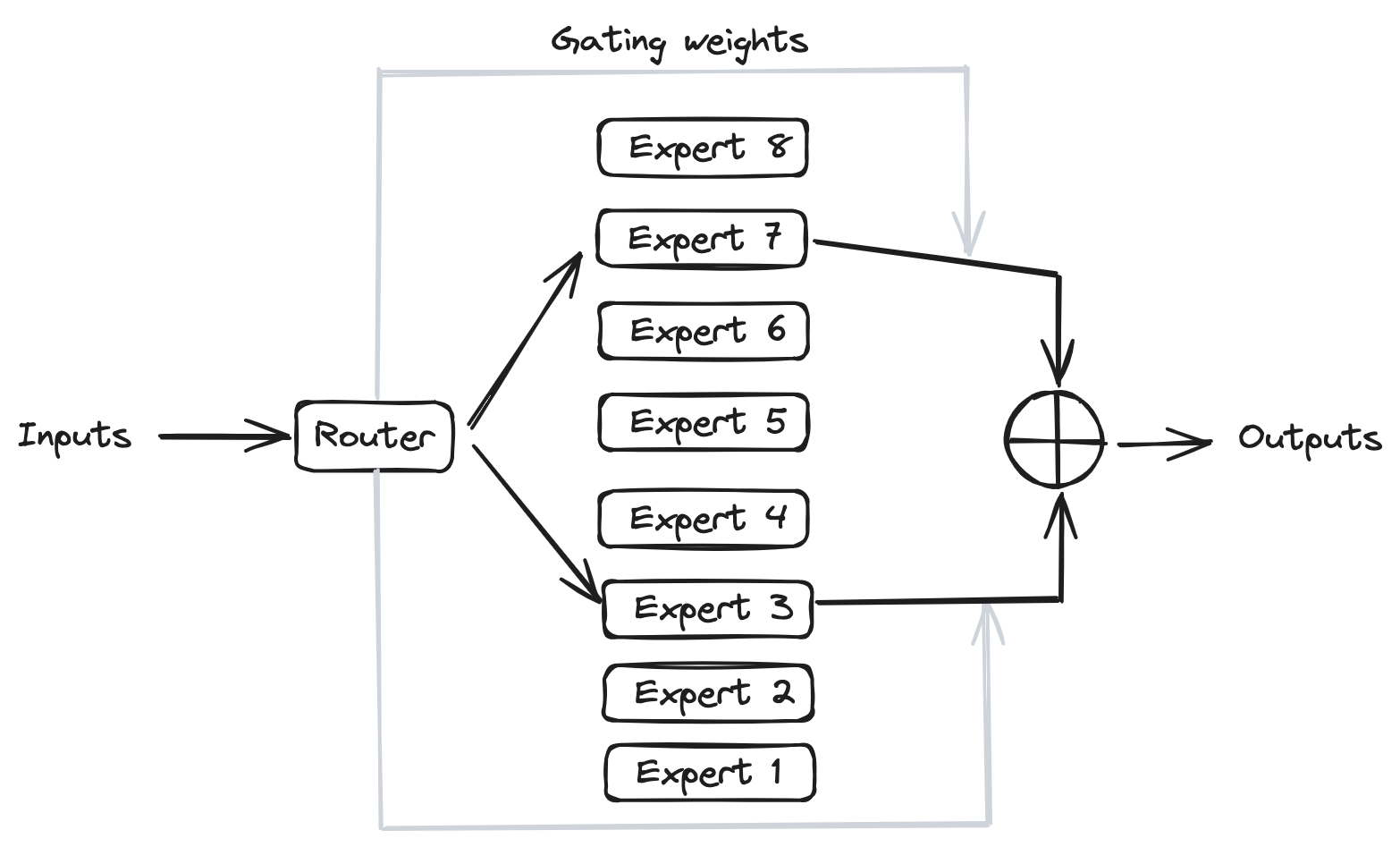

The figure above shows what this Mixture of Experts (MoE) layer looks like.

Mixtral has eight experts, and each input token is routed to two of them with different weights. The final output is a weighted sum of the selected experts' outputs, where the gating network's output determines the weights. The number of experts (n) and the number of experts selected per token (K) are hyperparameters, set to 8 and 2, respectively. The number of experts, n, determines the total (or sparse) parameter count, while K determines the number of active parameters used to process each input token.
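The routing described above can be sketched in a few lines of plain Python. This is a toy illustration, not Mixtral's implementation: the experts here are simple scaling functions standing in for real feed-forward networks, and the names `moe_layer`, `gate_weights`, and `experts` are made up for the example. The sketch assumes the gate scores are softmax-normalized over the selected top-K experts only.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(values: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    x: Vector,
    gate_weights: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Vector:
    """Route one token to its top_k experts and blend their outputs."""
    # Gating network: one logit per expert (dot product with that expert's gate vector).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts for this token.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Normalize the selected logits so the mixing weights sum to 1.
    weights = softmax([logits[i] for i in chosen])
    # Weighted sum of the chosen experts' outputs; the other experts are never evaluated.
    output = [0.0] * len(x)
    for weight, index in zip(weights, chosen):
        expert_out = experts[index](x)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output


# Toy setup: 8 experts, where expert i multiplies its input by (i + 1).
toy_experts = [lambda v, s=i + 1: [s * vi for vi in v] for i in range(8)]
toy_gate = [[0.1 * i, 0.0] for i in range(8)]  # hypothetical gate weights

token = [1.0, 2.0]
print(moe_layer(token, toy_gate, toy_experts, top_k=2))
```

With these toy gate weights the two highest-scoring experts are the last two, so the output is a blend of "multiply by 8" and "multiply by 7". The key point is that only K of the n expert functions are called per token.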

The MoE layer is applied independently to each input token and replaces the feed-forward sub-block of the original Transformer architecture. MoE layers can be run efficiently on a single GPU with specialized kernels, or distributed across multiple GPUs using model parallelism or expert parallelism.

Mistral 7B

Mixtral’s core architecture is similar to Mistral 7B, so let’s quickly look at that to gain a more comprehensive understanding of Mixtral. Mistral 7B is based on the Transformer architecture. Compared to LLaMa, it has a few novel features that contribute to surpassing LLaMa 2 (13B) on various benchmarks.

Grouped-Query Attention

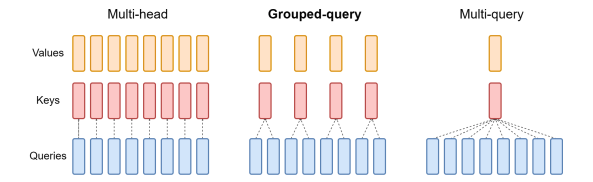

GQA represents an interpolation between multi-head and multi-query attention with single key and value heads per subgroup of query heads. GQA divides query heads into G groups, each of which shares a single key and value head.

It differs from multi-query attention, which shares a single key and value head across all query heads. GQA is an essential feature as it significantly speeds up inference and reduces the memory required for the key-value cache during decoding. This enables the models to scale to larger batch sizes and higher throughput, a critical requirement for real-time AI applications.
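The memory effect is easy to estimate. The sketch below maps query heads to shared key/value heads and compares the key-value cache size for multi-head, grouped-query, and multi-query attention. The configuration (32 layers, 32 query heads, 8 key/value heads, head dimension 128, 16-bit values) follows what the Mistral 7B paper reports and is an assumption here; the function names are illustrative.

```python
def kv_head_for_query_head(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    """Index of the shared key/value head used by a given query head."""
    group_size = n_heads // n_kv_heads  # query heads per KV head
    return q_head // group_size


def kv_cache_bytes(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch_size: int = 1,
    bytes_per_value: int = 2,  # 16-bit floats
) -> int:
    """Size of the key-value cache: keys and values for every layer, head, and position."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


N_LAYERS, N_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 128, 4096

for name, kv_heads in [("multi-head", 32), ("grouped-query", 8), ("multi-query", 1)]:
    size = kv_cache_bytes(N_LAYERS, kv_heads, HEAD_DIM, SEQ_LEN)
    print(f"{name:>14}: {kv_heads:2d} KV heads, {size / 2**30:.4g} GiB per sequence")

# Query heads 0-3 share KV head 0, heads 4-7 share KV head 1, and so on.
print([kv_head_for_query_head(q, N_HEADS, 8) for q in range(8)])
```

For a 4,096-token sequence under these assumptions, the cache shrinks from 2 GiB with full multi-head attention to 0.5 GiB with eight key/value heads, which is what leaves room for larger batches.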

Sliding window attention

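Sliding window attention (SWA), introduced in the Longformer architecture, exploits the stacked layers of a Transformer to attend to information beyond the window size. Each layer attends only to a fixed window of W preceding tokens, but because layers are stacked, information can propagate across roughly k × W tokens after k layers. SWA is designed to handle a much longer sequence of tokens than vanilla attention and offers significant computational cost reductions, since the cost per token depends on the window size rather than on the full sequence length.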
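A small sketch makes the attention pattern concrete. It builds a causal sliding-window mask and computes the theoretical reach after several layers. The window of 4,096 tokens and 32 layers are the values reported for Mistral 7B and are assumptions here; implementations also differ by one on whether the window counts the current token, and this sketch counts it.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j.

    Causal (j <= i) and limited to the last `window` positions, including i itself.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def theoretical_reach(n_layers: int, window: int) -> int:
    """Upper bound on how far back information can propagate through stacked layers."""
    return n_layers * window


# Tiny example: 6 tokens, window of 3.
for row in sliding_window_mask(seq_len=6, window=3):
    print("".join("x" if allowed else "." for allowed in row))

# Assumed Mistral 7B settings: 32 layers, window of 4096 tokens.
print(theoretical_reach(n_layers=32, window=4096))
```

In the printed mask, each row has at most three marked positions, so the work per token stays constant as the sequence grows, while the stacked layers extend the effective reach to roughly 131k tokens under the assumed settings.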

Together, GQA and SWA enhance the performance of Mistral 7B and Mixtral relative to other language models like the LLaMa series.

How Mixtral performs compared to GPT and LLaMa

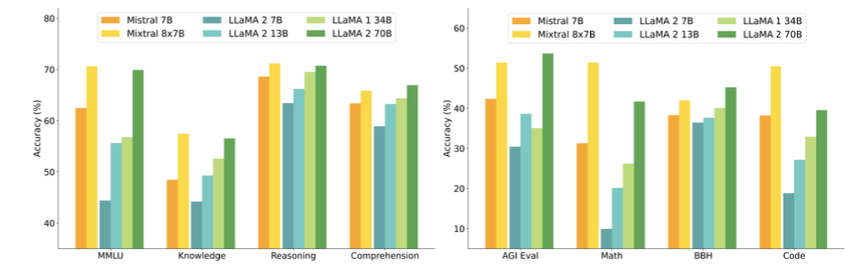

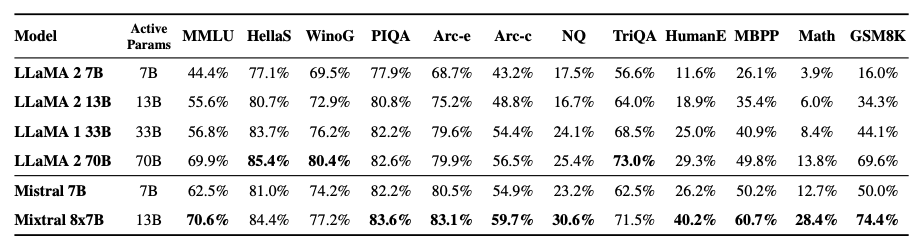

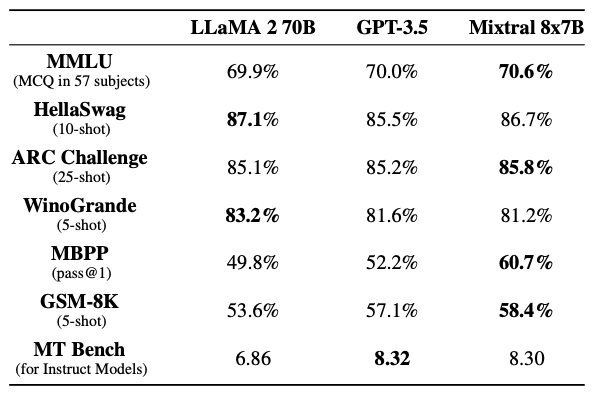

Mixtral matches or beats much larger language models on most benchmarks. It outperforms models with up to 70B parameters, like LLaMa 2 70B, while using only 13B active parameters during inference (about 18.5% of LLaMa 2 70B's parameter count). Mixtral’s performance is superior in mathematics, code generation, and multitask language understanding.

LLaMa 2 70B beats Mixtral in just a handful of scenarios, such as the HellaSwag reasoning test, the WinoGrande reasoning test, and the TriviaQA comprehension test.

Mixtral also matched or outperformed GPT-3.5 on nearly all reported benchmarks, falling marginally short on the MT Bench test for instruct models.

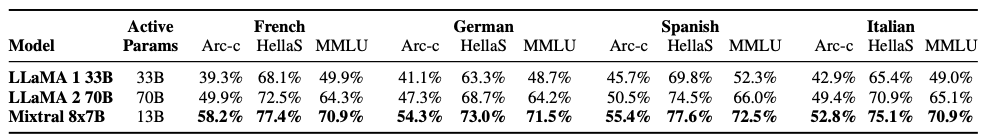

Regarding multilingual ability, as Mixtral was pre-trained with a significantly higher proportion of multilingual data, it can outperform LLaMa 2 70B on multilingual tasks in French, German, Spanish, and Italian while being comparable in English.

Long-range performance

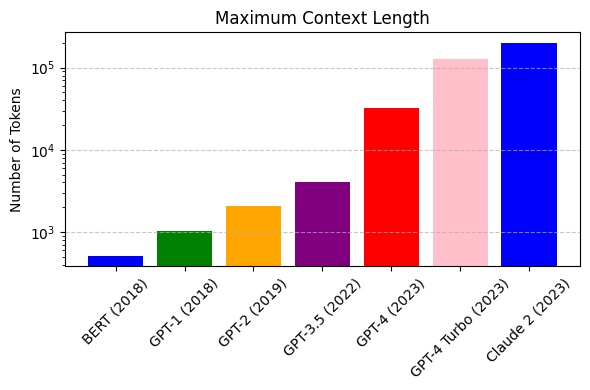

As well as having fewer parameters, Mixtral also uses a smaller context window than the largest current models. The input context length of language models has increased by more than two orders of magnitude in the last few years, from 512 tokens for the BERT model to 200k tokens for Claude 2.1.

However, most large language models struggle to use extended context efficiently. Nelson Liu and colleagues showed that current language models do not robustly use information in extended input contexts. Performance is typically highest when the relevant information for tasks such as question-answering or key-value retrieval occurs at the beginning or the end of the input context, and it degrades significantly when the models need to access information in the middle of extended contexts.

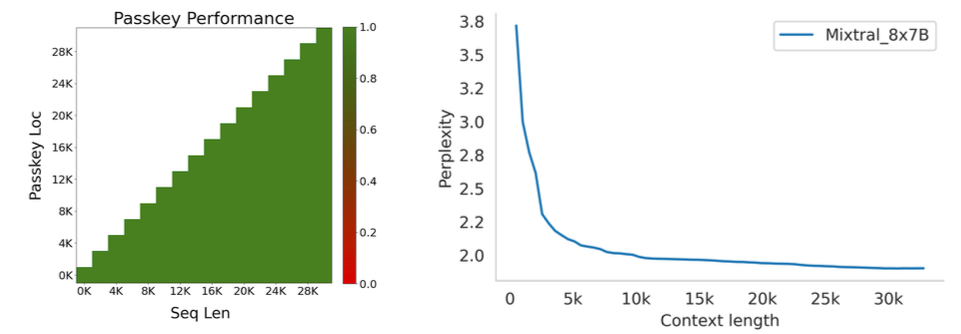

Mixtral, which has a context size of 32k tokens, does not show this weakness on the passkey retrieval task: it achieves 100% retrieval accuracy regardless of the context length or the position of the key to be retrieved in an extended context.

Mixtral's perplexity, a metric that captures how well a language model predicts the next token given the context, decreases monotonically as the context length increases. Lower perplexity means the model assigns higher probability to the tokens that actually follow, which indicates that Mixtral makes effective use of the additional context and can perform well on tasks based on long context lengths.
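For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood the model assigns to the true tokens. The sketch below computes it from a list of per-token log-probabilities; the function name and the toy numbers are illustrative and not taken from the Mixtral evaluation.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from natural-log probabilities of the true next tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_negative_log_likelihood = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_negative_log_likelihood)


# A model that always spreads its probability evenly over 4 candidates
# is "as confused as" a uniform 4-way choice.
uniform_guess = [math.log(0.25)] * 10

# A model that gives the correct token 90% probability is far less confused.
confident_guess = [math.log(0.9)] * 10

print(perplexity(uniform_guess))
print(perplexity(confident_guess))
```

The first value is 4 (up to floating-point rounding) and the second is close to 1, the minimum possible. A perplexity that keeps falling as more context is supplied suggests the extra tokens are genuinely helping the model's predictions.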

Instruction fine-tuning

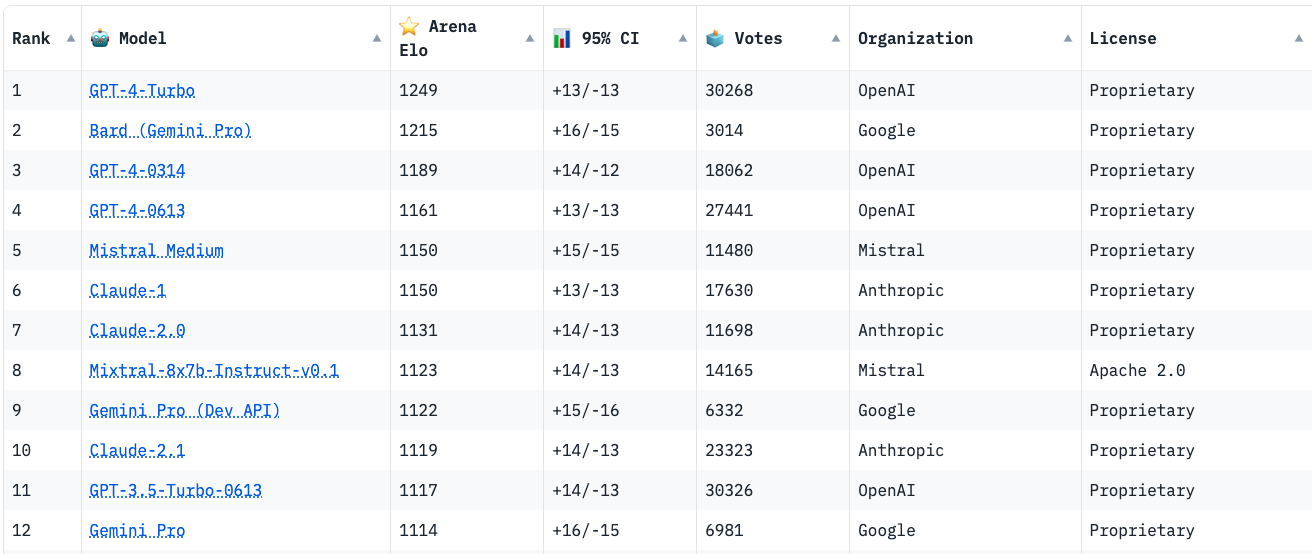

The “Mixtral-Instruct” model was fine-tuned on an instruction dataset, followed by Direct Preference Optimization (DPO) on a paired feedback dataset. DPO is a technique to optimize large language models to adhere to human preferences without explicit reward modeling or reinforcement learning. As of January 26, 2024, on the standard LMSys Leaderboard, Mixtral-Instruct is the best-performing open-source large language model.

This leaderboard is a crowdsourced open platform for evaluating large language models that ranks them using the Elo rating system from chess. Despite being smaller, Mixtral-Instruct ranks below only proprietary models such as OpenAI’s GPT-4, Google’s Bard, and Anthropic’s Claude models.
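As a rough illustration of how Elo-style ratings turn pairwise votes into a ranking, here is the classic update rule. This is the textbook chess formula with an assumed K-factor of 32; the leaderboard's actual computation may differ, and the function names are illustrative.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_ratings(
    rating_a: float,
    rating_b: float,
    score_a: float,  # 1.0 if A wins, 0.0 if A loses, 0.5 for a tie
    k: float = 32.0,  # assumed K-factor; controls how fast ratings move
) -> Tuple[float, float]:
    """Return the new (A, B) ratings after one comparison."""
    expected_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - expected_a)
    return rating_a + delta, rating_b - delta


# Two models start level; a human voter prefers model A's answer.
model_a, model_b = update_ratings(1000.0, 1000.0, score_a=1.0)
print(model_a, model_b)

# Beating a much stronger model earns more points than beating an equal one.
underdog, favorite = update_ratings(1000.0, 1200.0, score_a=1.0)
print(round(underdog, 1), round(favorite, 1))
```

Each vote moves points from the loser to the winner, and upsets move more points than expected results, so after many votes the ratings settle into an ordering that reflects human preferences.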

This solid performance of Mixtral-Instruct, along with an open-source-friendly Apache 2.0 license, opens up the possibility for tremendous adoption of Mixtral for both commercial and non-commercial applications. It represents a much more powerful alternative to LLaMa 2 70B, which is already being used as the foundational model for extending large language models to languages like Hindi or Tamil that are widely spoken but not adequately represented in the training data of these models.

Using Mixtral as a developer

Mixtral represents the numero uno of open-source large language models as it outperforms the previous best open-source model, LLaMa 2 70B, by a significant margin while providing for faster and cheaper inference. You can start using Mixtral with Octo AI today.

Mixtral challenges the hegemony of proprietary models like OpenAI’s GPT-4. With Mixtral, all developers have access to an open-source model that is on par with or better than some proprietary models, such as GPT-3.5. This allows developers to integrate AI into their applications without the constraints of licensing fees or usage limitations.

Mixtral has been openly available for less than two months, and we have yet to see many examples of how it is being used in industry. However, some early movers have already adopted it: the Brave browser, for example, has incorporated Mixtral into its AI-based browser assistant, Leo. It is only a matter of time before Mixtral sees widespread adoption across the industry for various use cases. Given the relative strengths of Mixtral, some likely uses would be:

- Code generation. Developers can leverage Mixtral to create lightweight cloud IDEs that provide real-time coding assistance. Incorporating Mixtral into the development workflow can also mean having an AI-powered coding assistant to debug, suggest optimizations, and write code snippets or entire modules based on high-level requirements.

- Translation. Given Mixtral's multi-language training, developers can offer real-time translation within applications or chatbots, allowing products and services to reach a global audience.

- Fine-tuning. With Mixtral's open-source flexibility, developers can fine-tune the model to cater to specific requirements. Fine-tuning Mixtral involves further training the model on curated datasets, including proprietary or niche data, to improve its performance on specific tasks. You then have complete control over this model and its deployment. Fine-tuning also cuts costs further and plays to the strengths of Mixtral by not requiring large context windows.

Open access to the world of experts

Mixtral is a cutting-edge, mixture-of-experts model with state-of-the-art performance among open-source models. It consistently outperforms LLaMa 2 70B on various benchmarks, with 5x fewer active parameters during inference. It thus allows for faster, more accurate, and cost-effective performance for diverse tasks, including mathematics, code generation, and multilingual understanding. Mixtral-Instruct outperforms proprietary models such as Gemini-Pro, Claude-2.1, and GPT-3.5 Turbo on human evaluation benchmarks.

Mixtral thus represents a powerful alternative to the much larger and more compute-intensive LLaMa 2 70B as the de facto best open-source model and will facilitate the development of new methods and applications benefitting a wide variety of domains and industries. If you want to start experimenting with Mixtral, you can start with open-source models with Octo AI by signing up here and deploying models immediately.