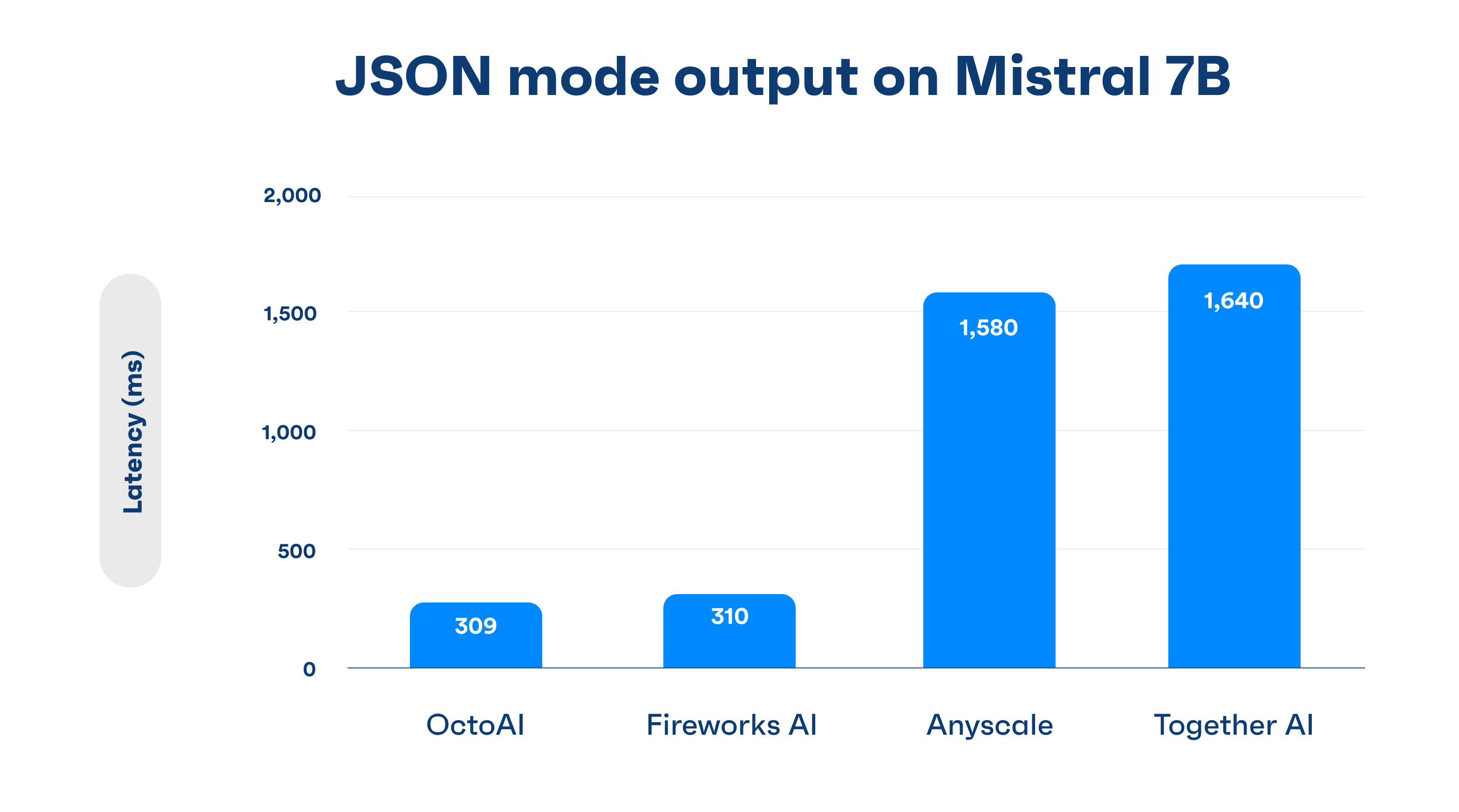

We are excited to announce that JSON mode is now available with all models on the OctoAI Text Gen Solution. JSON mode gives developers detailed control over the format and organization of large language model (LLM) responses, which simplifies integrating generated text with other components of an application. OctoAI’s implementation of JSON mode is built into the OctoAI Systems Stack, making the feature immediately available for all LLMs on the platform with no disruption to the LLM or its quality. This approach also gives us more control over optimizing the feature, so JSON mode runs with minimal latency overhead and industry-leading latency performance, as shown by the initial test results in the accompanying chart.

You can get started with JSON mode on the OctoAI Text Gen Solution today.

Structured LLM outputs for predictable formatted generation

Applications typically interact with an LLM over the Chat Completions API. By default, the content in a Chat Completions response is a verbose, unstructured text string. While this is sufficient for many use cases, it is inconvenient when the response has to be consumed in a structured way, for example to populate a predetermined template with information. This is where JSON mode helps. JSON mode lets you define a JSON schema that determines the format of the response content, giving you control over the response’s structure and data types. The result is more organized and predictable output, tailored to your requirements and easier to integrate into the rest of your application.
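To make the idea of a schema concrete, here is a minimal sketch in Python. The schema fields (`language`, `lines_of_code`, `components`) are hypothetical and chosen only for illustration, and `check_against_schema` is a deliberately shallow helper, not a full JSON Schema validator and not part of any OctoAI SDK.

```python
import json

# Hypothetical schema for illustration only; the field names are assumptions.
APP_DESCRIPTION_SCHEMA = {
    "type": "object",
    "properties": {
        "language": {"type": "string"},
        "lines_of_code": {"type": "integer"},
        "components": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["language", "components"],
}

# Map the JSON Schema type names used above to Python types.
_PY_TYPES = {"string": str, "integer": int, "array": list, "object": dict}


def check_against_schema(content: str, schema: dict) -> dict:
    """Parse `content` as JSON and run a shallow check against `schema`.

    Only top-level `required` and `type` constraints are checked.
    """
    data = json.loads(content)  # raises ValueError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key in schema.get("required", []):
        if key not in data:
            raise ValueError(f"missing required field: {key}")
    for key, spec in schema.get("properties", {}).items():
        if key not in data:
            continue
        value = data[key]
        expected = _PY_TYPES[spec["type"]]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"field {key!r} should be of type {spec['type']}")
    return data
```

With a schema like this in place, the consuming code can rely on `data["components"]` being a list rather than searching free-form text for the same information.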

Using the Chat Completions API, you can specify JSON mode outputs with your requests using the response_format attribute as shown in the example below.

    curl -X POST "https://text.octoai.run/v1/chat/completions" \
        -H "Content-Type: application/json" \
        -H "Authorization: Bearer $YOUR_OCTOAI_TOKEN" \
        --data-raw '{
            "messages": [
                {
                    "role": "system",
                    "content": "You are a helpful assistant. Keep your responses limited to one short paragraph if possible."
                },
                {
                    "role": "user",
                    "content": "Describe the structure of a typical Hello World application"
                }
            ],
            "model": "mistral-7b-instruct",
            "response_format": {
                "type": "json_object",
                "schema": {
                    $YOUR_SCHEMA_STRING
                }
            }
        }'

This ensures that the LLM response is generated as JSON that follows the schema provided in the request. OctoAI’s JSON mode is API-compatible with OpenAI, so OpenAI-based applications can start using JSON mode on OctoAI by changing the authorization token and model endpoint, as described here.
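The same request can be sketched in Python using only the standard library. The endpoint, model name, and `response_format` layout are taken from the curl example above; the function names `build_payload` and `chat_json` are hypothetical, and reading the result from `choices[0].message.content` is an assumption based on the OpenAI-compatible response layout.

```python
import json
import urllib.request

# Endpoint taken from the curl example above.
OCTOAI_URL = "https://text.octoai.run/v1/chat/completions"


def build_payload(prompt: str, schema: dict, model: str = "mistral-7b-instruct") -> dict:
    """Build a Chat Completions request body that asks for JSON mode output."""
    return {
        "messages": [
            {
                "role": "system",
                "content": "You are a helpful assistant. Keep your responses "
                "limited to one short paragraph if possible.",
            },
            {"role": "user", "content": prompt},
        ],
        "model": model,
        "response_format": {"type": "json_object", "schema": schema},
    }


def chat_json(prompt: str, schema: dict, token: str) -> dict:
    """Send the request and return the response content parsed as JSON."""
    request = urllib.request.Request(
        OCTOAI_URL,
        data=json.dumps(build_payload(prompt, schema)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # Assumption: OpenAI-style layout, with the JSON text in the first choice.
    return json.loads(body["choices"][0]["message"]["content"])
```

Keeping `build_payload` separate from the network call makes the request body easy to inspect or unit test without a token. A call would look like `chat_json("Describe the structure of a typical Hello World application", schema, token)`, where `schema` is your JSON schema as a Python dict and `token` is your OctoAI API token.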

JSON mode implementation in the OctoAI Systems Stack

A common approach to implementing JSON mode is at the model layer, using a mix of fine-tuning and prompt engineering to produce predictable JSON-formatted responses. This is already possible on OctoAI, and we have multiple customers meeting their JSON mode requirements in this manner. The key benefits are that it reduces the effort needed to get JSON mode working for a specific model and avoids changes to the serving stack, which may be complex or not possible. The downside is that it involves modifying the model, with possible impact on quality and portability. The effort also does not carry over from one model to another.
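As a rough illustration of why prompt-only approaches need extra care, the sketch below shows the kind of defensive parsing an application might add when a model is merely asked for JSON and nothing enforces it. This is a generic pattern, not OctoAI’s implementation or any customer’s; the helper name `extract_json_object` is hypothetical.

```python
import json


def extract_json_object(text: str) -> dict:
    """Return the first JSON object embedded in `text`, or raise ValueError.

    A prompted model may wrap its JSON in extra prose, so scan for each
    opening brace and try to decode an object starting there.
    """
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            value, _end = decoder.raw_decode(text, start)
            return value
        except json.JSONDecodeError:
            # Not valid JSON from this brace; try the next one.
            start = text.find("{", start + 1)
    raise ValueError("no JSON object found in model output")
```

Even with a helper like this, the application still has to handle the case where no valid object is found, which is the kind of unpredictability that enforcing the format outside the prompt is meant to remove.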

We’re seeing today that customers are actively adopting and evaluating new models, and that they want to integrate the latest models and fine-tunes into their applications as quickly as possible, without any risk of quality disruption. To enable this rapid iteration, OctoAI’s JSON mode implementation is abstracted away from the model and built into the serving layer. This serving layer is part of the OctoAI Systems Stack, an end-to-end systems stack for serving and scaling GenAI models effectively, built and maintained by OctoAI. Implementing JSON mode formatting within the serving layer also opened up additional optimization opportunities, including batching and cached generation. These allow us to further reduce the latency overhead, and we continue to work on new speed optimizations and functional improvements to structured LLM output generation on the platform. Because it is implemented within the OctoAI Systems Stack, JSON mode is now immediately available for all current (and future) models on the OctoAI Text Gen Solution.

Get Started with JSON mode on OctoAI Text Gen Solution today!

You can try JSON mode responses with your choice of models on the OctoAI Text Gen Solution today. Read more about JSON mode in our API documentation.

You’re also welcome to join us on Discord to engage with the team and community, and to share your insights into the latest LLMs and capabilities. We look forward to hearing from you.