Introducing OctoAI’s Embedding API to power your RAG needs

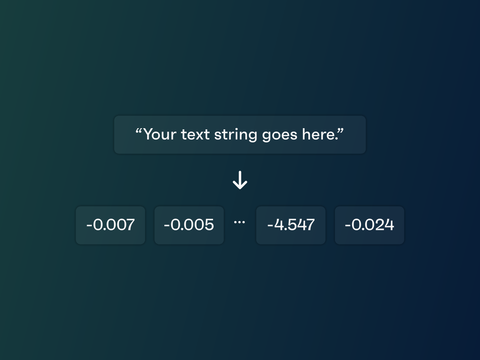

We’re excited to announce the availability of the GTE Large embedding as our first text embedding endpoint on OctoAI’s Text Gen Solution. Embedding models extract meaning out of raw data, and turn it into a vector representation. This representation is used to enhance the ability of machine learning models to understand, generalize, and make predictions in a wide range of applications.

The GTE Large embedding model is now available in the OctoAI webUI and API, and you can use it today on OctoAI to power your retrieval augmented generation (RAG) or semantic search needs.

GTE Large embedding model

OctoAI now offers the General Text Embeddings (GTE) Large embedding model. The GTE models have been trained by Alibaba DAMO Academy on a large-scale corpus of relevance text pairs covering a wide range of domains and scenarios. This makes GTE models well suited to information retrieval, semantic textual similarity, and text reranking.
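To make the retrieval use case concrete, here is a minimal sketch of how embedding vectors are typically compared: documents are ranked by cosine similarity to a query vector. The helper names and the toy 3-dimensional vectors are made up for illustration; real embeddings would come from the embedding endpoint and be far larger.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

def rank_by_similarity(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Toy vectors standing in for real embeddings (illustrative values only).
docs = {
    "octopus-facts": [0.9, 0.1, 0.0],
    "road-safety": [0.1, 0.9, 0.1],
    "tax-forms": [0.0, 0.1, 0.9],
}
query = [0.8, 0.3, 0.0]

ranking = rank_by_similarity(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

In practice a vector database performs this comparison for you at scale; the sketch only shows the underlying idea.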

GTE-Large performs very well on the MTEB leaderboard, with an average score of 63.13% (comparable to OpenAI’s text-embedding-ada-002, which scores 61.0%). The embedding model caters predominantly to text input in English. Input text is limited to a maximum of 512 tokens (any text longer than that will be truncated), and the model produces an embedding vector of length 1024.
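Because input beyond 512 tokens is truncated, longer documents are usually split into chunks before embedding. The sketch below is one simple way to do that, under a loud assumption: it counts whitespace-separated words, which is only a rough stand-in for tokens, so `max_words=300` is a guessed conservative budget rather than a guarantee. For exact counts you would use the model’s own tokenizer. The function name and parameters are hypothetical.

```python
def chunk_text(text, max_words=300, overlap=30):
    """Split text into overlapping word windows.

    Word counts only approximate token counts; max_words is an assumed,
    conservative budget meant to stay under the 512-token input limit.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks

# A synthetic 700-word document for illustration.
sample = " ".join(f"w{i}" for i in range(700))
pieces = chunk_text(sample)
print(len(pieces), [len(p.split()) for p in pieces])
```

The overlap keeps a little shared context between neighbouring chunks, so a sentence that straddles a boundary is still represented in at least one of them.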

The embedding model endpoint is available at the OctoAI-hosted URL https://text.octoai.run/v1/embeddings, and you can call it with curl using the following command:

curl https://text.octoai.run/v1/embeddings \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OCTOAI_TOKEN" \
  -d '{
    "input": "Your text string goes here",
    "model": "thenlper/gte-large"
  }'

OpenAI compatible API to make migration a breeze

We’ve made our embedding endpoint OpenAI compatible, so if you’ve already built your code around OpenAI’s APIs, switching requires only minimal changes: one line of code and two environment variables.
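To illustrate what compatibility means at the wire level, here is a small standard-library sketch that builds the embeddings request and reads vectors out of a response. It assumes the response follows OpenAI’s embeddings format (a `data` list whose items carry `index` and `embedding`); the post does not show a response, so treat that shape as an assumption. The helper names are hypothetical, and the request is only constructed here, not sent.

```python
import json
import urllib.request

OCTOAI_EMBEDDINGS_URL = "https://text.octoai.run/v1/embeddings"

def build_embedding_request(text, token, model="thenlper/gte-large"):
    """Build (but do not send) a POST request for the embeddings endpoint."""
    body = json.dumps({"input": text, "model": model}).encode("utf-8")
    return urllib.request.Request(
        OCTOAI_EMBEDDINGS_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )

def extract_vectors(payload):
    """Return embedding vectors ordered by index.

    Assumes an OpenAI-style response: {"data": [{"index": ..., "embedding": [...]}]}.
    """
    items = sorted(payload["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]

# "dummy-token" is a placeholder; use your own OctoAI API token.
req = build_embedding_request("Your text string goes here", "dummy-token")
print(req.get_method(), req.full_url)

# To actually send the request:
# with urllib.request.urlopen(req) as resp:
#     vectors = extract_vectors(json.load(resp))
```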

Let’s take the following OpenAI code example that returns the embedding vector on an input string:

from openai import OpenAI

client = OpenAI()

response = client.embeddings.create(
    model="text-embedding-ada-002",
    input=[
        "Why did the octopus cross the road",
    ],
)

print(response)

Now if you want to use OctoAI’s GTE-Large as a substitute for OpenAI’s text-embedding-ada-002, all you need are the following code changes:

Step 1

Replace in your Python script:

model="text-embedding-ada-002"

with:

model="thenlper/gte-large"

Step 2

Override the OPENAI_BASE_URL environment variable with the following GTE-Large endpoint URL to route the API calls to OctoAI:

export OPENAI_BASE_URL="https://text.octoai.run/v1"

Step 3

Override the OPENAI_API_KEY environment variable with the OctoAI API token that you can obtain by following these instructions.

export OPENAI_API_KEY=$OCTOAI_API_TOKEN

That’s all you need to do to switch to GTE-Large on OctoAI. Note that if you already have a vector database populated with another embedding model, e.g. text-embedding-ada-002, you’ll need to re-populate it with GTE-Large embeddings, because vectors produced by different models are not comparable. Also keep in mind that different embedding models have different embedding dimensions, so you’ll need to resize your vector database as well.
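As a rough sketch of the dimension check this implies, the snippet below compares the output sizes of the two models and validates vectors before they are written to an index. The 1024 figure comes from this post and 1536 is OpenAI’s documented output size for text-embedding-ada-002; the helper names are hypothetical and not part of any vector database client.

```python
EMBEDDING_DIMS = {
    "thenlper/gte-large": 1024,      # stated in this post
    "text-embedding-ada-002": 1536,  # OpenAI's documented output size
}

def needs_new_index(old_model, new_model):
    """True when the two models produce vectors of different lengths."""
    return EMBEDDING_DIMS[old_model] != EMBEDDING_DIMS[new_model]

def validate_vectors(vectors, model):
    """Raise ValueError if any vector does not match the model's dimension."""
    expected = EMBEDDING_DIMS[model]
    for i, vec in enumerate(vectors):
        if len(vec) != expected:
            raise ValueError(
                f"vector {i} has {len(vec)} dimensions, "
                f"expected {expected} for {model}"
            )
    return expected

resize_required = needs_new_index("text-embedding-ada-002", "thenlper/gte-large")
print("index must be recreated or resized:", resize_required)
```

Even when two models happen to share a dimension, the stored vectors still need to be regenerated with the new model before queries will return meaningful matches.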

LangChain usage

For the LangChain developers out there, we’ve extended the LangChain Community embeddings to include our OctoAI embedding.

Note that for this code to work, you need to set OCTOAI_API_TOKEN in your environment based on these instructions.

export OCTOAI_API_TOKEN=<your API token>

If you use langchain==0.1.5 or newer, you’ll be able to use OctoAIEmbeddings as follows.

from langchain_community.embeddings import OctoAIEmbeddings

embed = OctoAIEmbeddings(endpoint_url="https://text.octoai.run/v1/embeddings")
em = embed.embed_query("Why did the octopus cross the road")
print(em)

If you’re coming from OpenAI embeddings, the changes you’ll need to apply to your LangChain application are again fairly minimal.

Step 1

Let’s start with the following changes. Replace the import of the OpenAI Embedding with OctoAI’s.

Replace:

from langchain_openai import OpenAIEmbeddings

With:

from langchain_community.embeddings import OctoAIEmbeddings

Step 2

Next, we’ll need to slightly change the way we instantiate our embedding in LangChain.

Replace:

embeddings = OpenAIEmbeddings()

With:

embeddings = OctoAIEmbeddings(endpoint_url="https://text.octoai.run/v1/embeddings")

That’s about it! The rest of the application will behave as expected. Once again, given the change in vector dimensionality that may result from switching to a different embedding model, make sure to (re-)size your vector database accordingly.

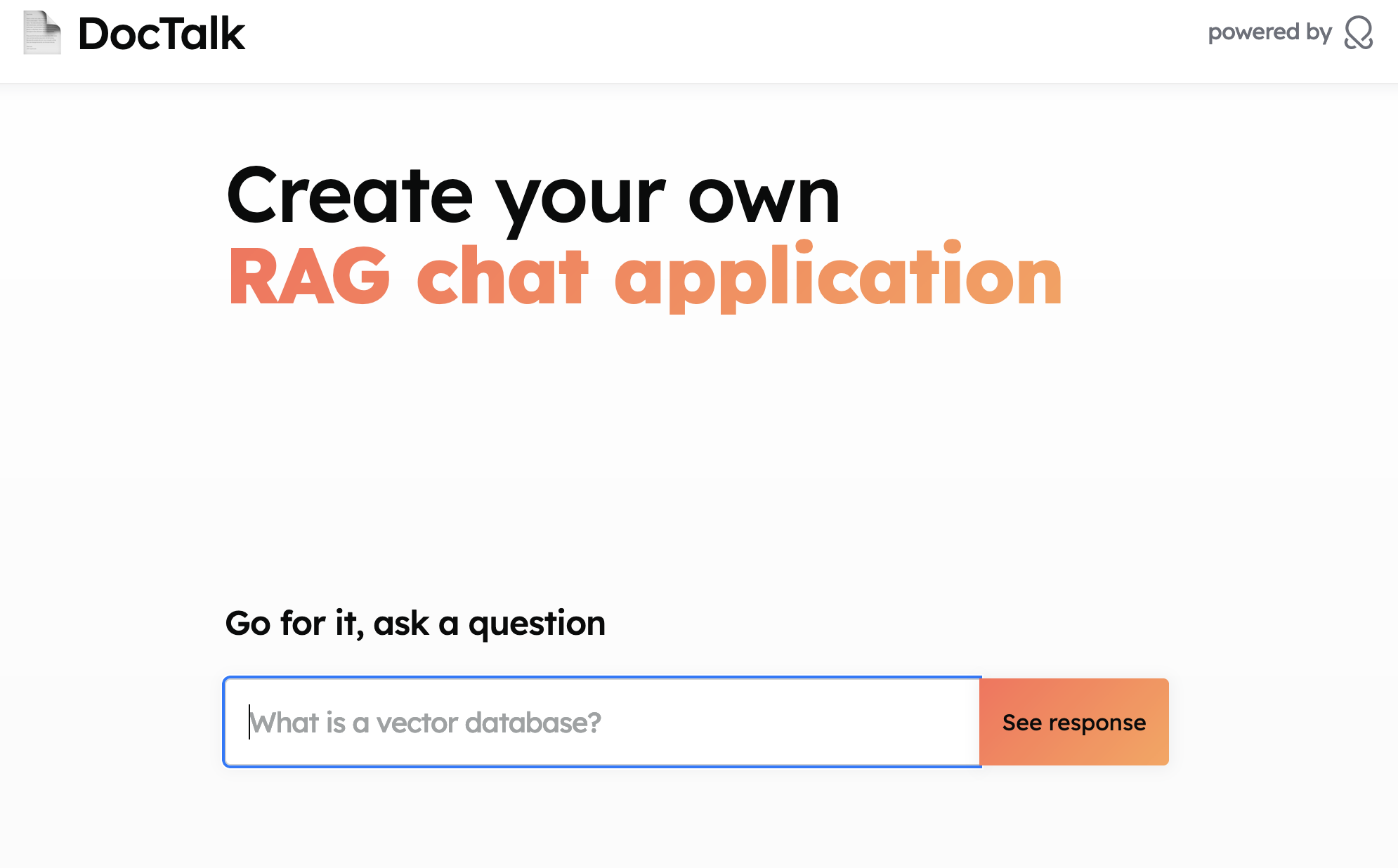

RAG demo

We’ve built a RAG demo featuring OctoAI’s GTE-Large embedding model, Mixtral-8x7B as the completion model, and Pinecone as a vector database.

You can go ahead and reproduce the LangChain backend code here and deploy it on a cloneable web app here.

Get started with OctoAI's embedding API today

With our OpenAI-compatible API, intuitive LangChain integration, and the availability and speed of our endpoint, migrating your embedding needs to OctoAI’s text generation solution has never been simpler.

GTE-Large is available on OctoAI at a price of $0.00005 per 1K tokens. You can get started today by visiting OctoAI’s text generation tools.
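For a quick sense of scale, the arithmetic behind that price can be sketched as follows. The function name is hypothetical, and the estimate assumes billing is strictly proportional to token count, which the post does not spell out.

```python
from decimal import Decimal

PRICE_PER_1K_TOKENS_USD = Decimal("0.00005")  # price quoted in this post

def embedding_cost_usd(num_tokens):
    """Estimated cost in USD, assuming cost scales linearly with tokens."""
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    return PRICE_PER_1K_TOKENS_USD * num_tokens / Decimal(1000)

# Example: embedding one million tokens of text.
million_token_cost = embedding_cost_usd(1_000_000)
print(f"${million_token_cost:.2f}")
```

Under that assumption, a corpus of one million tokens costs about five cents to embed.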

You’re also welcome to join us on Discord to engage with the team and our community. We look forward to hearing from you!