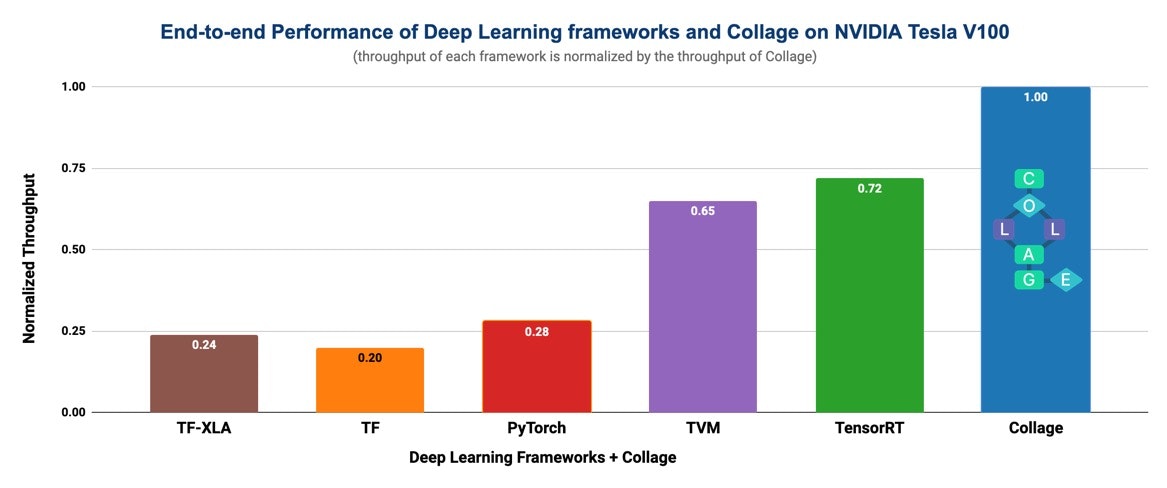

At TVMCon this week, we will be presenting our latest research from Carnegie Mellon University and the University of Michigan on Collage, a system for generating the fastest possible executable for a given machine learning model. Once backends such as cuDNN, cuBLAS, TensorRT, and TVM are registered, Collage automatically searches for the best execution backend for each workload in the network, without any manual intervention. This lets Collage mix different backends into a “collage of backends” that achieves the best performance. Our evaluation shows that Collage outperforms existing frameworks by 1.21x to 1.40x on NVIDIA GPUs and Intel CPUs.

Backend integration challenges

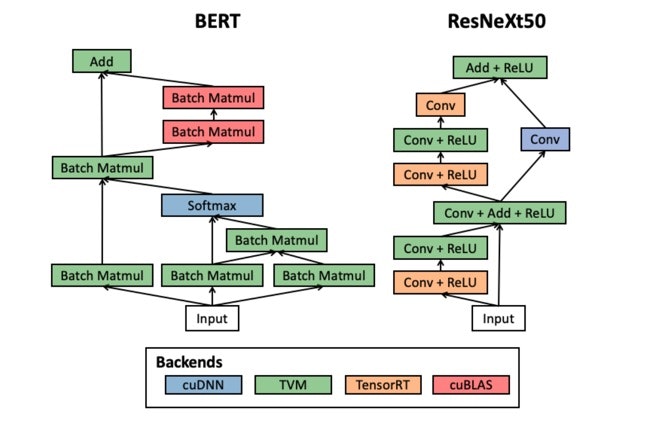

Collage leverages the unique strengths of various backends to enhance performance. The figure shows representative backend placements discovered by Collage on a V100. (source: Collage paper on arXiv)

To get the best runtime performance for deep learning models, a common technique is to mix various optimized libraries and runtimes for fast inference. However, current manual approaches require significant effort, such as modifying the core TensorFlow or PyTorch frameworks to integrate different backends for the same model (the backend registration problem). Even then, they fail to deliver high performance unless each individual workload is sent to its ideal backend (the backend placement problem). It is also cumbersome to keep up with each backend’s strengths and weaknesses, since the best backend choice depends on the context (model architecture and hardware target). Additionally, the best backend choice today may not be the best in the future as hardware and software versions evolve.

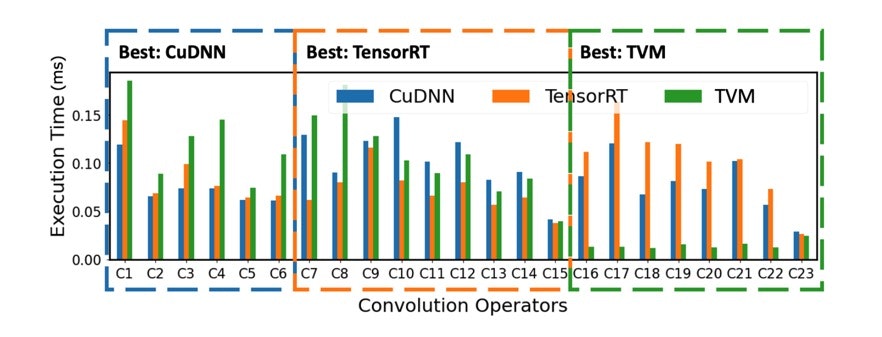

Consider the graph of 23 convolutions with different configurations, such as tensor shapes, strides, and dilations. Even for this single operator type, no single backend always delivers the fastest performance.

Performance of various convolutions with different configurations in ResNeXt-50 on an NVIDIA RTX 2070. Note that no single backend always delivers the fastest execution. (source: Collage paper on arXiv)

Integrating various backends also introduces a new challenge, backend placement: how do you divide the neural network into sub-graphs and find the best backend for each one? Deep learning frameworks currently rely on rule-based heuristics to use the available backends. Common expert knowledge gets boiled down into general rules, such as “Send all conv2ds to cuDNN for execution.” But the experiments in Figure 2 above show that such a rule does not always yield the best performance. Rule-based heuristics also become outdated quickly in the rapidly evolving deep learning landscape.
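To make the contrast concrete, here is a minimal Python sketch comparing a fixed rule (every operator of a given type goes to one named backend) with picking, per workload, the backend that has the lowest measured latency. The function names and the input format (operator type paired with per-backend latencies) are hypothetical illustrations, not Collage's API, and the latencies would have to come from real profiling.

```python
from typing import Dict, List, Tuple

# Hypothetical input: one entry per workload, e.g. ("conv2d", {"cudnn": t1, "tvm": t2}),
# where t1 and t2 are measured latencies for that specific configuration.
Workload = Tuple[str, Dict[str, float]]


def total_latency_rule_based(workloads: List[Workload], rules: Dict[str, str]) -> float:
    """Sum latencies when every op of a given type goes to the backend a fixed rule names."""
    return sum(latencies[rules[op_type]] for op_type, latencies in workloads)


def total_latency_per_workload(workloads: List[Workload]) -> float:
    """Sum latencies when each workload independently uses its fastest measured backend."""
    return sum(min(latencies.values()) for _, latencies in workloads)


def best_backends(workloads: List[Workload]) -> List[str]:
    """Name of the fastest measured backend for each workload."""
    return [min(latencies, key=latencies.__getitem__) for _, latencies in workloads]
```

Because the per-workload choice takes a minimum over the same measurements the rule draws from, its total can never exceed the rule-based total; the gap grows whenever the rule's preferred backend loses on particular configurations.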

Collage’s solution: Easy backend registration and automated placement

To solve the backend registration problem, Collage decouples the deep learning workload (the neural network or model) from the backend specifications (cuDNN, cuBLAS, TensorRT, etc.). Each backend specification describes which operator patterns (convolution, matrix multiplication, add, relu, etc.) that backend supports. With this information in hand, Collage automatically performs backend placement: it explores the computation graph and finds the best pattern match for each sub-graph using a feedback-directed search.
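The decoupling can be sketched as follows: each backend is described only by the operator patterns it supports, and a matcher enumerates every sub-graph that some backend could run. This is a simplified, hypothetical illustration rather than Collage's real interface. It assumes the model is a linear chain of operator names and that a pattern is an exact sequence of op names, whereas Collage matches patterns on a general computation graph; any backend names and patterns used with it are illustrative, not real capability tables.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class BackendSpec:
    """Hypothetical backend description: a name plus the operator patterns
    (sequences of op names) it can execute as a single kernel."""
    name: str
    patterns: Tuple[Tuple[str, ...], ...]


def match_candidates(ops: List[str], backends: List[BackendSpec]) -> Dict[Tuple[int, int], List[str]]:
    """For every contiguous segment [start, end) of a linear op chain that some
    backend supports, list the names of the backends that can run it."""
    candidates: Dict[Tuple[int, int], List[str]] = {}
    for backend in backends:
        for pattern in backend.patterns:
            k = len(pattern)
            # Slide the pattern over the chain and record every exact match.
            for start in range(len(ops) - k + 1):
                if tuple(ops[start:start + k]) == pattern:
                    candidates.setdefault((start, start + k), []).append(backend.name)
    return candidates
```

Registering another backend only means adding another `BackendSpec`; the model description itself does not change, which is the point of the decoupling. A placement search then chooses among the candidate segments.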

One of the challenges we tackled when building this automated solution was integrating both classes of backends: operator-level libraries and graph-level inference libraries. Operator-level libraries such as cuDNN, cuBLAS, MKL, and TVM ops provide efficient kernel implementations for deep learning operators and typically support operator fusion. Fusion combines multiple high-level operators in the computation graph into a single kernel and is one of the most effective techniques for neural network optimization.
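As a toy illustration of what fusion buys, the Python sketch below computes a bias add followed by a ReLU in two ways: as two separate passes that materialize an intermediate list (standing in for two kernels and an intermediate tensor in memory), and as a single fused pass. Plain Python lists are only a stand-in for real GPU or CPU kernels; the point is that the fused version produces the same result without writing out and re-reading the intermediate.

```python
from typing import List


def bias_add_relu_unfused(x: List[float], bias: List[float]) -> List[float]:
    # "Kernel" 1: bias add, which writes a full intermediate result.
    tmp = [xi + bi for xi, bi in zip(x, bias)]
    # "Kernel" 2: ReLU, which reads the intermediate back.
    return [max(t, 0.0) for t in tmp]


def bias_add_relu_fused(x: List[float], bias: List[float]) -> List[float]:
    # Single fused "kernel": each element is loaded once, transformed, and stored once.
    return [max(xi + bi, 0.0) for xi, bi in zip(x, bias)]
```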

Graph-level inference libraries such as TensorRT and DNNL, on the other hand, provide an overall execution strategy for the graph through efficient runtimes that apply cross-kernel optimizations such as memory footprint and scheduling optimizations.
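One example of a cross-kernel optimization is shrinking the memory footprint by freeing each intermediate tensor as soon as its last consumer has run, so later tensors can reuse that memory. The sketch below computes the peak live memory of an operator sequence under that policy and compares it with keeping every intermediate alive. It is a generic liveness analysis over a hypothetical op-list format, not the algorithm TensorRT or DNNL actually use.

```python
from typing import Dict, List, Tuple

# Hypothetical op format: (output tensor name, input tensor names, output size in bytes),
# listed in execution order. Inputs not produced by any op are graph inputs.
Op = Tuple[str, List[str], int]


def peak_memory(ops: List[Op]) -> Tuple[int, int]:
    """Return (total bytes if no intermediate is ever freed,
    peak live bytes if each tensor is freed after its last use)."""
    last_use: Dict[str, int] = {}
    for i, (_, inputs, _) in enumerate(ops):
        for name in inputs:
            last_use[name] = i
    sizes = {out: size for out, _, size in ops}

    live = 0
    peak = 0
    for i, (out, inputs, size) in enumerate(ops):
        live += size  # allocate this op's output
        peak = max(peak, live)
        for name in set(inputs):  # free produced tensors whose last consumer is this op
            if name in sizes and last_use.get(name) == i:
                live -= sizes[name]
    return sum(sizes.values()), peak
```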

To handle both library types, Collage uses a two-level placement optimizer to navigate the search space. First, the operator-level optimizer ignores cross-kernel optimizations, which simplifies the problem and enables fast customization with operator fusion. Next, the graph-level optimizer fine-tunes the resulting operator-level placement using evolutionary search, looking for cross-kernel optimizations that compensate for any performance lost to the earlier simplification.
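The two-level idea can be sketched in Python under strong simplifying assumptions, all of them ours rather than Collage's. The model is a linear chain of `n_ops` operators. The first level picks, by dynamic programming, the cheapest cover of the chain by supported segments using independently measured per-kernel costs, so cross-kernel effects are ignored. The second level runs a small genetic algorithm over bit-masks that mark which operators are handed to a graph-level backend, scoring each candidate with a caller-supplied end-to-end `measure` function. The function names, the cost-table format, and the bit-mask encoding are all hypothetical.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Segment = Tuple[int, int]  # half-open range [start, end) of operator indices


def op_level_placement(n_ops: int, cost: Dict[Tuple[str, Segment], float]) -> List[Tuple[Segment, str]]:
    """Level 1: cheapest cover of ops [0, n_ops) by (backend, segment) kernels.
    Costs are summed per kernel, i.e. cross-kernel effects are ignored."""
    best = [float("inf")] * (n_ops + 1)  # best[i]: cheapest cost covering ops [0, i)
    choice: List[Optional[Tuple[Segment, str]]] = [None] * (n_ops + 1)
    best[0] = 0.0
    for end in range(1, n_ops + 1):
        for (backend, (start, stop)), c in cost.items():
            if stop == end and best[start] + c < best[end]:
                best[end] = best[start] + c
                choice[end] = ((start, stop), backend)
    if choice[n_ops] is None:
        raise ValueError("no combination of supported segments covers the whole chain")
    # Walk back from the end of the chain to recover the chosen kernels.
    placement = []
    end = n_ops
    while end > 0:
        segment, backend = choice[end]
        placement.append((segment, backend))
        end = segment[0]
    return placement[::-1]


def graph_level_search(
    n_ops: int,
    measure: Callable[[List[bool]], float],
    generations: int = 50,
    pop_size: int = 8,
    mutation_rate: float = 0.1,
    seed: int = 0,
) -> List[bool]:
    """Level 2: genetic search over masks; mask[i] is True if op i goes to a
    graph-level backend. `measure` returns the end-to-end latency of a mask."""
    if pop_size < 4:
        raise ValueError("pop_size must be at least 4")
    rng = random.Random(seed)
    # Seed the population with the unchanged operator-level placement (all False).
    population = [[False] * n_ops]
    population += [[rng.random() < 0.5 for _ in range(n_ops)] for _ in range(pop_size - 1)]
    for _ in range(generations):
        population.sort(key=measure)
        parents = population[: pop_size // 2]  # elitism: the best half survives
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(n_ops + 1)  # one-point crossover
            child = [not g if rng.random() < mutation_rate else g for g in a[:cut] + b[cut:]]
            children.append(child)
        population = parents + children
    return min(population, key=measure)
```

Because the best candidate always survives, the result is never worse than the pure operator-level placement under `measure`. In a real setting, `measure` would need to build and time the model on the target hardware, which is why a search that evaluates only a bounded number of candidates is used here.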

As model sizes increase and new backends appear, the search space grows exponentially. New and diverse backend capabilities and complex fusion patterns further complicate the search for the best runtime performance.
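A back-of-the-envelope count shows the exponential growth. Assume, unrealistically, that the model is a linear chain of N operators and that every one of B backends can run any contiguous segment of it. Each of the N - 1 gaps between operators is either a kernel boundary or not, and each resulting segment can go to any backend, which gives B * (B + 1)^(N - 1) placements in total. The sketch checks this closed form against brute-force enumeration. Real backends support far fewer patterns, but real models are graphs rather than chains, so the exact count for a real model differs.

```python
from itertools import product


def count_placements(n_ops: int, n_backends: int) -> int:
    """Closed form B * (B + 1) ** (N - 1) for an N-op chain where any
    backend can run any contiguous segment (a simplifying assumption)."""
    return n_backends * (n_backends + 1) ** (n_ops - 1)


def count_placements_brute_force(n_ops: int, n_backends: int) -> int:
    """Enumerate every choice of kernel boundaries; each segment picks any backend."""
    total = 0
    for cuts in product([False, True], repeat=n_ops - 1):
        n_segments = 1 + sum(cuts)
        total += n_backends ** n_segments
    return total


# The closed form matches exhaustive enumeration on small chains.
for n in range(1, 8):
    assert count_placements(n, 3) == count_placements_brute_force(n, 3)
```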

Collage delivers state-of-the-art model performance

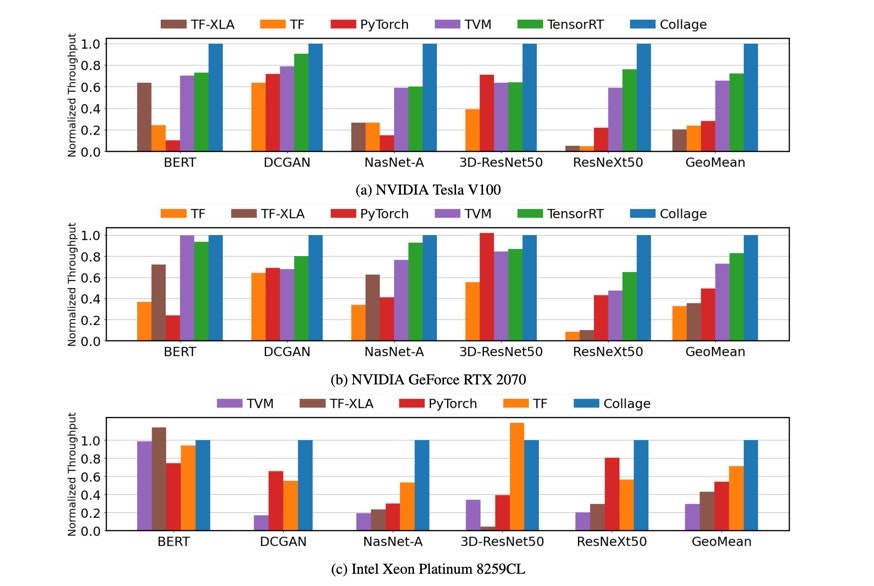

End-to-end performance of state-of-the-art DL frameworks and Collage on five real-life workloads on NVIDIA GPUs and an Intel CPU. (source: Collage paper on arXiv)

We evaluated Collage on two NVIDIA GPUs and an Intel CPU and found that, compared with existing frameworks, it delivers:

1.39x speedup on Tesla V100

1.21x speedup on RTX 2070

1.40x speedup on Xeon

What’s next?

Ready to use Collage for your deep learning workloads? We’re excited to announce that today we are open-sourcing the Collage code on GitHub under the Apache 2.0 license. In the coming year, we look forward to collaborating more deeply with the TVM community to integrate Collage into the wider vision of TVM Unity.

Currently, for GPU execution, Collage supports TVM ops, cuDNN, cuBLAS and TensorRT; for CPU execution, the supported backends are TVM ops, MKL and DNNL. We also provide an interface so additional backends can be easily integrated.

Tune in to our conference lightning talk on Dec 16 at 3:28pm to hear more about Collage.

Finally, we’d like to thank our co-authors and their supporting organizations for their significant contributions to Collage: Peiyuan Liao, Sheng Xu, Tianqi Chen and Zhihao Jia.
