Using Swift and Apache TVM to develop ML apps for the Apple ecosystem

In this article

What is Swift?

Apache TVM for Apple developers

Let’s build an image classification app

Integrating Apache TVM and Swift

Step #1) Model preparation - conversion, tuning and compiling

Step #2) Integrating TVM’s runtime and model into your Xcode project

Step #3) Wrapping Apache TVM inference into C for Swift support

TVM Inference via an iPhone App

Conclusion

Authors: Iliya Slavutin (Deelvin), Josh Fromm

We are excited to announce that you can now bring many more machine learning models to the Apple ecosystem via Apache TVM. The latest release of Apache TVM significantly increases model coverage for mobile phones while improving stability and performance.

In this tutorial, we’ll walk you through creating a simple Swift-based iOS app that uses Apache TVM to run an image classification model.

What is Swift?

First released in 2014, Swift is a powerful modern open-source programming language for iOS, iPadOS, macOS, tvOS and watchOS. Applications written in Swift can target all Apple platforms, effectively making it the lingua franca for Apple. The fact that Swift is easy to learn, designed for safety, and extremely fast (thanks to the LLVM compiler technology) has led to it becoming the preferred language for most Apple developers.

Apache TVM for Apple developers

Similar to how Swift compiles your *application* to run on any Apple device, the Apache TVM deep learning compiler compiles your *machine learning model* to run on any hardware device, whether it’s Apple, Android, desktop OS or a cloud-based application.

As an Apple developer, you may be familiar with using Core ML to integrate ML models into your apps. After you train a model with a deep learning library like PyTorch or TensorFlow, Core ML converts the model to a unified representation that can execute locally on any modern Apple device. Core ML also optimizes the model for inference with a minimal memory footprint and reduced energy usage.

Apache TVM brings similar benefits to developers, including privacy-preserving on-device inference and optimized models with a reduced memory footprint and lower battery consumption. Unlike Core ML, it also allows the model to run on any hardware device, even outside the Apple ecosystem.

Like Core ML, Apache TVM supports converting models from all popular deep learning frameworks, such as PyTorch, TensorFlow and Keras, to an optimized intermediate representation for local execution on supported hardware devices. The key difference is that Apache TVM generates the optimized model automatically, without hand-tuning or expert knowledge. Apache TVM’s new auto-scheduler explores many more optimization combinations and picks the best-performing one via evolutionary search and a learned cost model. As deep learning models rapidly evolve, it can be challenging for human experts to keep up with writing custom operators. Apache TVM addresses this problem by automating model optimization and searching for the best-performing implementation on each target hardware.

In summary, Apache TVM compiles your machine learning models to run on any hardware target, whether it’s a CPU, GPU or accelerator. By using Apache TVM, developers can learn one machine learning optimization and deployment framework to target practically any device, whether it is part of the Apple ecosystem or not.

Let’s build an image classification app

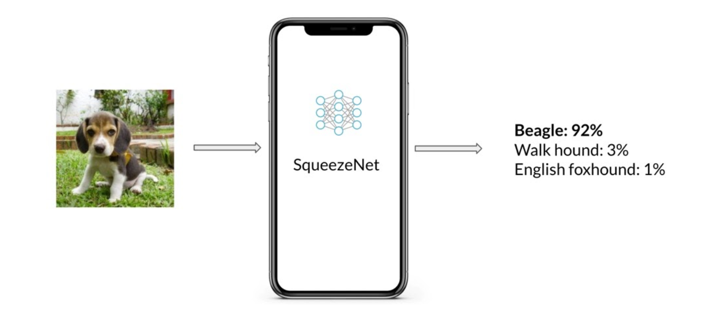

Our prototype app will allow users to pick an image from a gallery and classify it into one of 1,000 categories using the SqueezeNet model. SqueezeNet, released in Nov 2016, is a mobile-friendly deep neural network that is about 5 MB in size and easily fits into a phone’s limited memory.

Integrating Apache TVM and Swift

This tutorial illustrates how Swift can be combined with the cross OS/hardware benefits of Apache TVM. You can download this sample app and follow along.

We’ll use the Python API to convert the model into TVM’s format and then optimize it with Apache TVM. Finally, for deployment and inference, we can wrap TVM’s C++ Runtime API and plug it into our Swift application, since no official Swift API exists for Apache TVM.

Step #1) Model preparation - conversion, tuning and compiling

Start by downloading the pre-trained SqueezeNet v1.1 using the torch.hub.load() method.

Our model preparation script will convert, tune and compile the model. First, it converts the PyTorch model into TVM’s format (Relay). The next step is to tune the TVM model for a specific hardware device, which can take hours. However, we have already generated the tuning artifacts text file for the iPhone 12 mini, so the script reuses our previous artifacts to speed up the process. Finally, the tuned model is compiled into a deployment-ready package.
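The three stages above can be sketched with TVM's Python API roughly as follows. This is a minimal sketch under several assumptions rather than the actual preparation script from the sample app: the `pytorch/vision:v0.10.0` hub tag, the input name `input0`, the CPU target string, the use of an auto-scheduler log as the tuning artifact, and the function name `prepare_model` are all illustrative choices.

```python
INPUT_NAME = "input0"            # assumption: any name works if used consistently
INPUT_SHAPE = (1, 3, 224, 224)   # NCHW layout expected by torchvision's SqueezeNet


def prepare_model(tuning_log: str, output_path: str) -> None:
    """Convert, apply tuning records, and compile SqueezeNet v1.1 for iOS.

    Heavy dependencies are imported lazily so that the module can be loaded
    on machines without PyTorch or TVM installed.
    """
    import torch
    import tvm
    from tvm import auto_scheduler, relay
    from tvm.contrib import xcode

    # 1) Download the pre-trained model and trace it to TorchScript.
    model = torch.hub.load("pytorch/vision:v0.10.0", "squeezenet1_1", pretrained=True)
    model = model.eval()
    scripted = torch.jit.trace(model, torch.randn(INPUT_SHAPE)).eval()

    # 2) Convert the traced model into TVM's Relay representation.
    mod, params = relay.frontend.from_pytorch(scripted, [(INPUT_NAME, INPUT_SHAPE)])

    # 3) Compile while reusing existing tuning records instead of re-tuning.
    #    Assumption: a 64-bit ARM CPU target; a Metal GPU target is also possible.
    target = tvm.target.Target("llvm -mtriple=arm64-apple-darwin")
    with auto_scheduler.ApplyHistoryBest(tuning_log):
        with tvm.transform.PassContext(
            opt_level=3, config={"relay.backend.use_auto_scheduler": True}
        ):
            lib = relay.build(mod, target=target, params=params)

    # 4) Export a dynamic library for the device; requires Xcode on macOS.
    lib.export_library(output_path, fcompile=xcode.create_dylib, arch="arm64", sdk="iphoneos")
```

Calling `prepare_model("tuning_log.json", "compiled_model.dylib")` would run the whole pipeline; both file names are placeholders, not the names used by the sample app.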

After the model preparation is completed, we can test and compare the accuracy results between the downloaded PyTorch SqueezeNet model and the TVM-optimized version to ensure there was no accuracy loss.

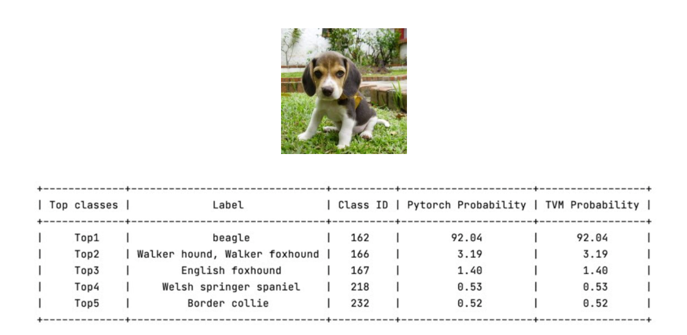

For example, here we used PyTorch 1.9 and TVM’s Python API to run inference for the following image and compare the accuracy:

The last two columns show that inference through TVM has the same accuracy as the downloaded PyTorch model for this image.
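A comparison of this kind can be sketched in plain Python as shown below. The helper names and the tiny logit vectors are made up for illustration; they are not the values from the run described above. The sketch checks that both outputs rank the same classes on top and reports the largest difference in predicted probability.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs, k=5):
    """Return (class_index, probability) pairs for the k most likely classes."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in order[:k]]


def compare_outputs(reference_logits, candidate_logits, k=5):
    """Return (same_top_k, max_abs_probability_difference)."""
    ref = softmax(reference_logits)
    cand = softmax(candidate_logits)
    same_top_k = [i for i, _ in top_k(ref, k)] == [i for i, _ in top_k(cand, k)]
    max_diff = max(abs(a - b) for a, b in zip(ref, cand))
    return same_top_k, max_diff


# Illustrative placeholder scores for a 4-class toy problem.
pytorch_logits = [2.0, 0.5, -1.0, 4.0]
tvm_logits = [2.0001, 0.4999, -1.0, 3.9999]
same, diff = compare_outputs(pytorch_logits, tvm_logits, k=3)
print(f"same top-3: {same}, max probability difference: {diff:.2e}")
```

Small floating-point differences between the two runtimes are expected, so comparing rankings and a tolerance is usually more robust than testing for exact equality.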

We are now ready to integrate the compiled model into our mobile application.

Step #2) Integrating TVM’s runtime and model into your Xcode project

We will use the Xcode IDE to create an iPhone app and integrate the TVM Runtime and optimized model into the app. The TVM Runtime acts as a bridge layer between the iPhone app and the model, making the model accessible from the application.

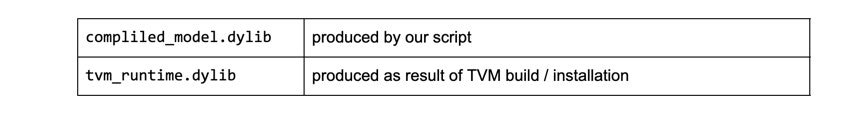

The new TVM model is 5.3 MB and is compiled into a dynamic library with all the required weights and kernels already built in. In other words, the TVM-optimized version is only about 300 KB larger than the original 5 MB PyTorch model. The TVM Runtime adds a constant overhead of about 2 MB, so bundling the TVM model into the application adds only about 7.3 MB to the final app size.
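The size figures above can be checked with a few lines of arithmetic. The sketch assumes decimal units (1 MB = 1,000 KB), which is what the rounded numbers in the text imply.

```python
KB_PER_MB = 1000  # assumption: decimal units, matching the rounded figures

pytorch_model_kb = 5000  # original PyTorch SqueezeNet, about 5 MB
tvm_model_kb = 5300      # TVM-compiled dynamic library, 5.3 MB
tvm_runtime_kb = 2000    # TVM Runtime, about 2 MB

growth_kb = tvm_model_kb - pytorch_model_kb
added_to_app_mb = (tvm_model_kb + tvm_runtime_kb) / KB_PER_MB

print(f"Model growth after compilation: {growth_kb} KB")
print(f"Total added to the app: {added_to_app_mb} MB")
```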

We only need to add two additional binary files to the Xcode project: the compiled model library and the TVM Runtime library.

Step #3) Wrapping Apache TVM inference into C for Swift support

To run Apache TVM inference from Swift, we first create a simple C++ wrapper class, TVMInferWrapper, that encapsulates the TVM calls.

TVM uses the concept of packed functions, a powerful and flexible mechanism to allow multi-language support. We will use it to get access to the required TVM functions for inference.
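The idea behind packed functions can be illustrated with a toy registry in plain Python. This is only an analogy for the concept, not TVM's implementation or API: the names `register_packed`, `get_packed` and the `toy.*` functions are invented for this sketch. The point is that callers look up functions by name and invoke them through one uniform calling convention, which is what makes it easy to cross language boundaries.

```python
from typing import Any, Callable, Dict

# A "packed function" here is any callable with type-erased arguments.
PackedFunc = Callable[..., Any]
_registry: Dict[str, PackedFunc] = {}


def register_packed(name: str, fn: PackedFunc) -> None:
    """Expose a function under a global string name."""
    _registry[name] = fn


def get_packed(name: str) -> PackedFunc:
    """Look up a function by name, as a caller in another language might."""
    try:
        return _registry[name]
    except KeyError:
        raise KeyError(f"no packed function named {name!r}") from None


def make_toy_module() -> None:
    """Register a tiny stateful 'model' that doubles every input value."""
    state = {"input": None, "output": None}
    register_packed("toy.set_input", lambda data: state.update(input=list(data)))
    register_packed("toy.run", lambda: state.update(output=[2 * v for v in state["input"]]))
    register_packed("toy.get_output", lambda: state["output"])


make_toy_module()
get_packed("toy.set_input")([1, 2, 3])
get_packed("toy.run")()
result = get_packed("toy.get_output")()
print(result)  # [2, 4, 6]
```

The set-input, run, get-output sequence mirrors the general shape of an inference call; the real function names and argument types come from the TVM runtime.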

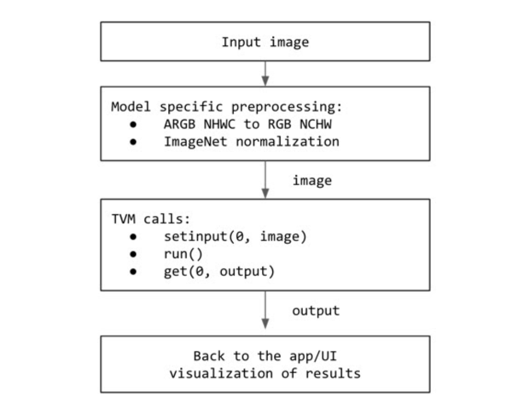

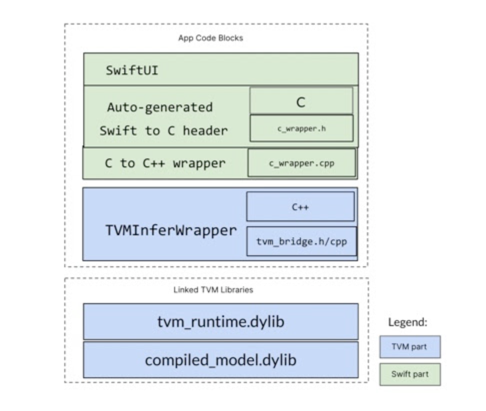

We are now ready to use TVM inference functions inside the wrapper. The full stack for inference within the application looks like this:

The input image first goes through a preprocessing stage to align to the color format used during model training. Then we call the TVM API to run inference and return the image classification results to the application.
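As a rough illustration of the preprocessing stage, the sketch below converts interleaved 8-bit RGB pixels into the planar, normalized float layout that torchvision's pre-trained models expect. It is written in Python for readability and is not the app's actual code; it assumes the image has already been resized to the model's input size, and it uses the standard ImageNet mean and standard deviation from torchvision.

```python
# Standard ImageNet normalization constants used by torchvision models.
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def preprocess(pixels, width, height):
    """Convert interleaved RGB bytes (HWC) to normalized planar floats (CHW).

    pixels: flat, row-major sequence of R, G, B values in the range 0..255.
    Returns a flat list of length 3 * width * height: all red values first,
    then all green values, then all blue values.
    """
    plane = width * height
    if len(pixels) != plane * 3:
        raise ValueError("pixel buffer size does not match width * height * 3")

    out = [0.0] * (3 * plane)
    for idx in range(plane):
        for channel in range(3):
            value = pixels[idx * 3 + channel] / 255.0  # scale to 0..1
            out[channel * plane + idx] = (value - MEAN[channel]) / STD[channel]
    return out


# A 2x1 image: one pure red pixel followed by one pure green pixel.
tensor = preprocess([255, 0, 0, 0, 255, 0], width=2, height=1)
print(tensor)
```

If the platform hands back pixels in another order, such as BGRA, the channel index mapping would need to change accordingly.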

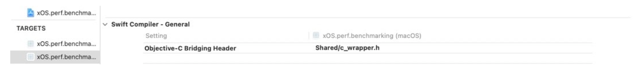

Xcode and Swift make it easy to call C functions from Swift through a bridging header. Let’s create a c_wrapper.c that exposes our TVM C++ code as C calls, and then configure the bridging header file in the Xcode project settings.

Now the functions declared in the C wrapper will be visible and accessible from the Swift code. Next, we will invoke TVM inference from the Swift side.

Our final application stack integrates the Swift app with TVM:

TVM Inference via an iPhone App

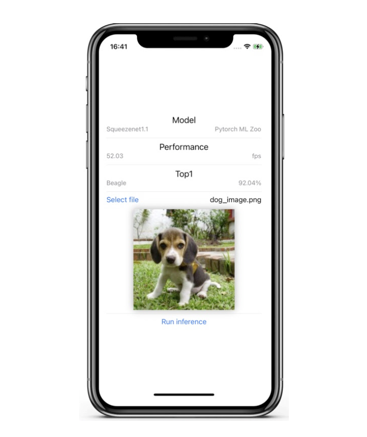

Finally, we can run inference from our iPhone application:

As you can see from the application UI, we have demonstrated that TVM preserves the accuracy of the original PyTorch model while serving the model via an efficient runtime.

Conclusion

In this tutorial we demonstrated how to quickly add machine learning models to iPhone apps by integrating Apache TVM with iOS Swift code. We look forward to seeing what new intelligent applications you build with TVM!
