TVMCon 2023 Recap

TVMCon 2023 has officially come to a close, and it was an unforgettable experience! Our 5th annual conference brought together over 1,000 registrants, and featured three days packed with content, over 60 speakers, and 10 generous sponsors who made it all possible.

Generative AI

Generative AI made a strong showing: for the first time, TVM compilation enabled Stable Diffusion to run entirely in the browser, at speed, by leveraging WebGPU. Learn more about how this was possible in the tutorial session.

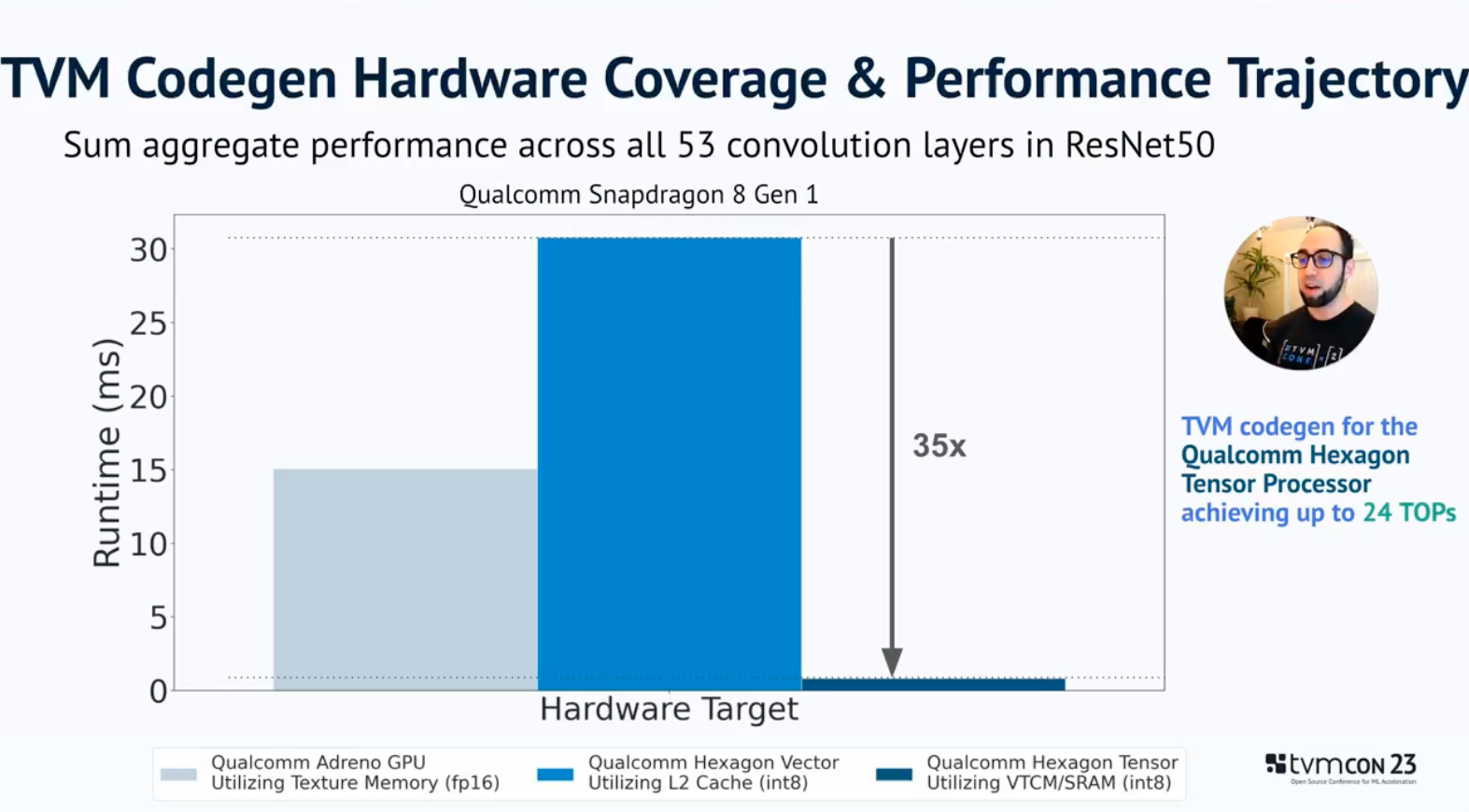

Code generation

Code generation for NPU accelerators using TVM was a theme this year. OctoML demonstrated work with Qualcomm on generating HVX and HTA code on the Snapdragon Hexagon processor at up to 24 trillion operations per second, going beyond TVM’s existing ability to generate high-performance code for the ARM CPU and Adreno GPU on that chip.

Applications, integrations, and use cases

Meta’s Peng Wu gave a great keynote on PyTorch 2.0 and how it will give users easier access to ML compilers like TVM and Inductor. NIO showed how TVM meets a key need in enabling their autonomous driving use cases, and Tencent showed how TVM accelerates reinforcement learning use cases for the gaming industry.

Code on the edge

TVM’s ability to generate code on the edge was again on display this year with two talks on training on the edge, and some great talks from edge silicon vendors including Qualcomm, Encharge, ARM, Andes, CEVA, Renesas, TI, and more.

TVM on the cloud

And it’s not just the edge. NVIDIA showed how their new CUTLASS 3.0 API better enables ML compilers and optimization experts to leverage NVIDIA hardware in the cloud. AMD showed their use of TVM for their FPGA Vitis toolchain, and how leveraging the OctoML Platform makes it easier to find high-performance ML instances in the cloud for each model and usage scenario. Intel also showed how they’ve added TVM support for AMX (matrix extensions) and Bfloat16 on their latest processors.

Thank you

Thanks to everyone who made TVMCon this year such an amazing success! You can learn more about the community over at the Apache TVM home page and Twitter feed. We look forward to seeing you in the community in the coming year.
