TinyML: TVM taming the final (ML) frontier

TL;DR: Optimizing and deploying machine learning workloads to bare-metal devices today is difficult, and Apache TVM is laying the open source foundation for making this easy and fast for anyone. Here we show how the µTVM component of TVM brings broad framework support, powerful compiler middleware, and flexible autotuning to embedded platforms. Check out our detailed post on the TVM project blog for all the technical details, or read on for a bite (or should I say byte?) sized overview.

The proliferation of low-cost, AI-powered consumer devices has led to widespread interest in “bare-metal” (low-power, often without an operating system) devices among ML researchers and practitioners. While it is already possible for experts to run some models on some bare-metal devices, optimizing models for diverse sets of devices is challenging, often requiring manually optimized device-specific libraries. Because of this, in order to target new devices, developers must implement one-off custom software stacks for managing system resources and scheduling model execution.

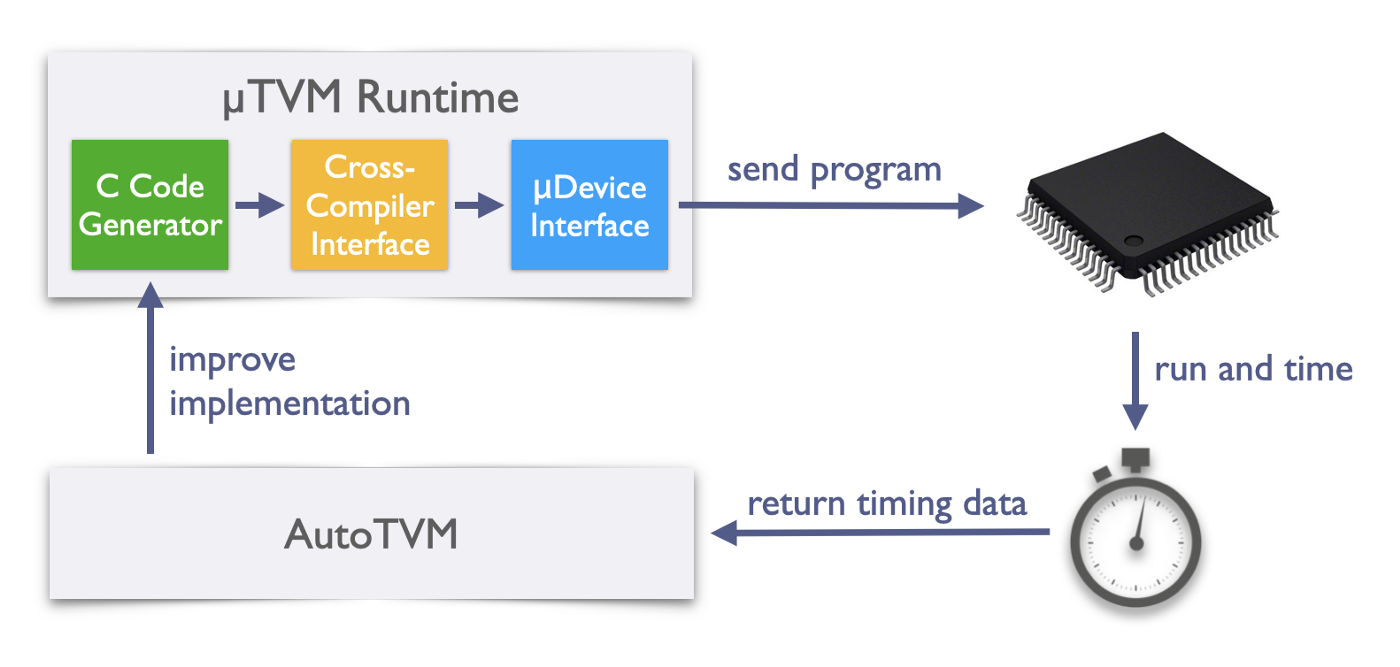

The manual optimization of machine learning software is not unique to the domain of bare-metal devices. In fact, this has been a common theme for developers working with other hardware backends (e.g., GPUs and FPGAs). Apache TVM has helped many ML engineers and companies handle the breadth of hardware targets available, but until now, it had little to offer for the unique profile of microcontrollers. To close this gap, we’ve extended TVM to feature a microcontroller backend, called µTVM (pronounced “MicroTVM”). µTVM facilitates host-driven execution of tensor programs on bare-metal devices and enables automatic optimization of these programs via AutoTVM, TVM’s built-in tensor program optimizer. The figure below shows a bird’s-eye view of the µTVM + AutoTVM infrastructure:

The µTVM flow
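To give a feel for what "automatic optimization" means here, the following is a toy measure-and-select loop in plain Python. It is only a sketch and does not use AutoTVM's API: the `tiled_matmul` kernel, the `tune` helper, and the candidate tile sizes are all hypothetical stand-ins, and everything is timed on the host rather than on a device.

```python
import time


def tiled_matmul(a, b, n, tile):
    """Multiply two n x n matrices (lists of lists) using square tiles."""
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_a, row_c = a[i], c[i]
                    for k in range(k0, min(k0 + tile, n)):
                        a_ik, row_b = row_a[k], b[k]
                        for j in range(j0, min(j0 + tile, n)):
                            row_c[j] += a_ik * row_b[j]
    return c


def tune(n, candidates, repeats=3):
    """Time every candidate tile size; return (fastest_tile, timings)."""
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    b = [[float(i - j) for j in range(n)] for i in range(n)]
    timings = {}
    for tile in candidates:
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            tiled_matmul(a, b, n, tile)
            best = min(best, time.perf_counter() - start)
        timings[tile] = best  # keep the best of several noisy measurements
    return min(timings, key=timings.get), timings


if __name__ == "__main__":
    best_tile, timings = tune(n=32, candidates=[1, 4, 8, 16, 32])
    print("fastest tile size on this machine:", best_tile)
```

The point of the sketch is the shape of the loop: generate variants of the same computation, measure each one on the hardware you care about, and keep the fastest. Which tile size wins depends entirely on the machine running it.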

Let’s see it in action

Before we talk about what TVM/MicroTVM is or how it works, let’s see a quick example of it in action.

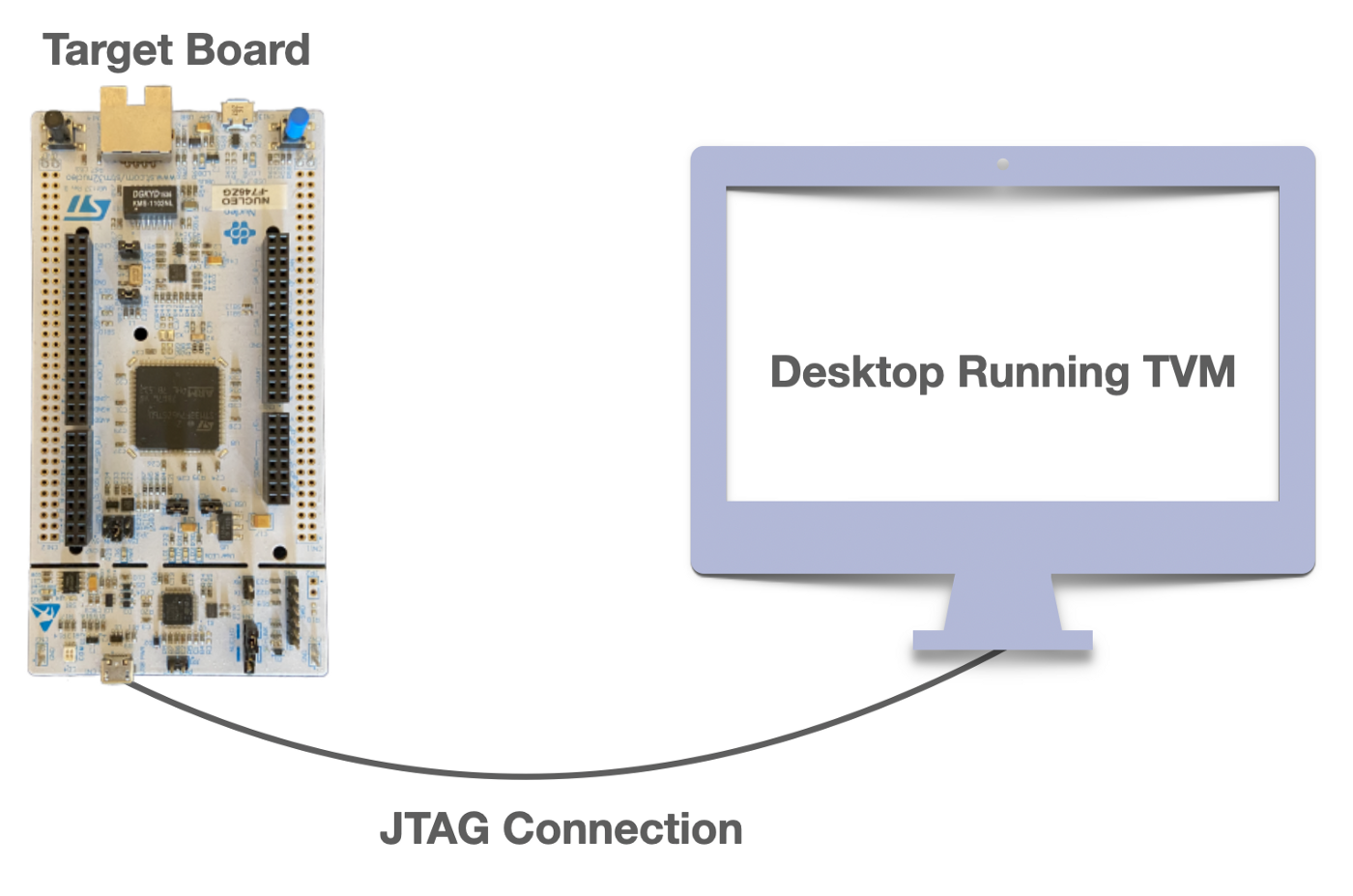

A standard µTVM setup, where the host communicates with the device via JTAG

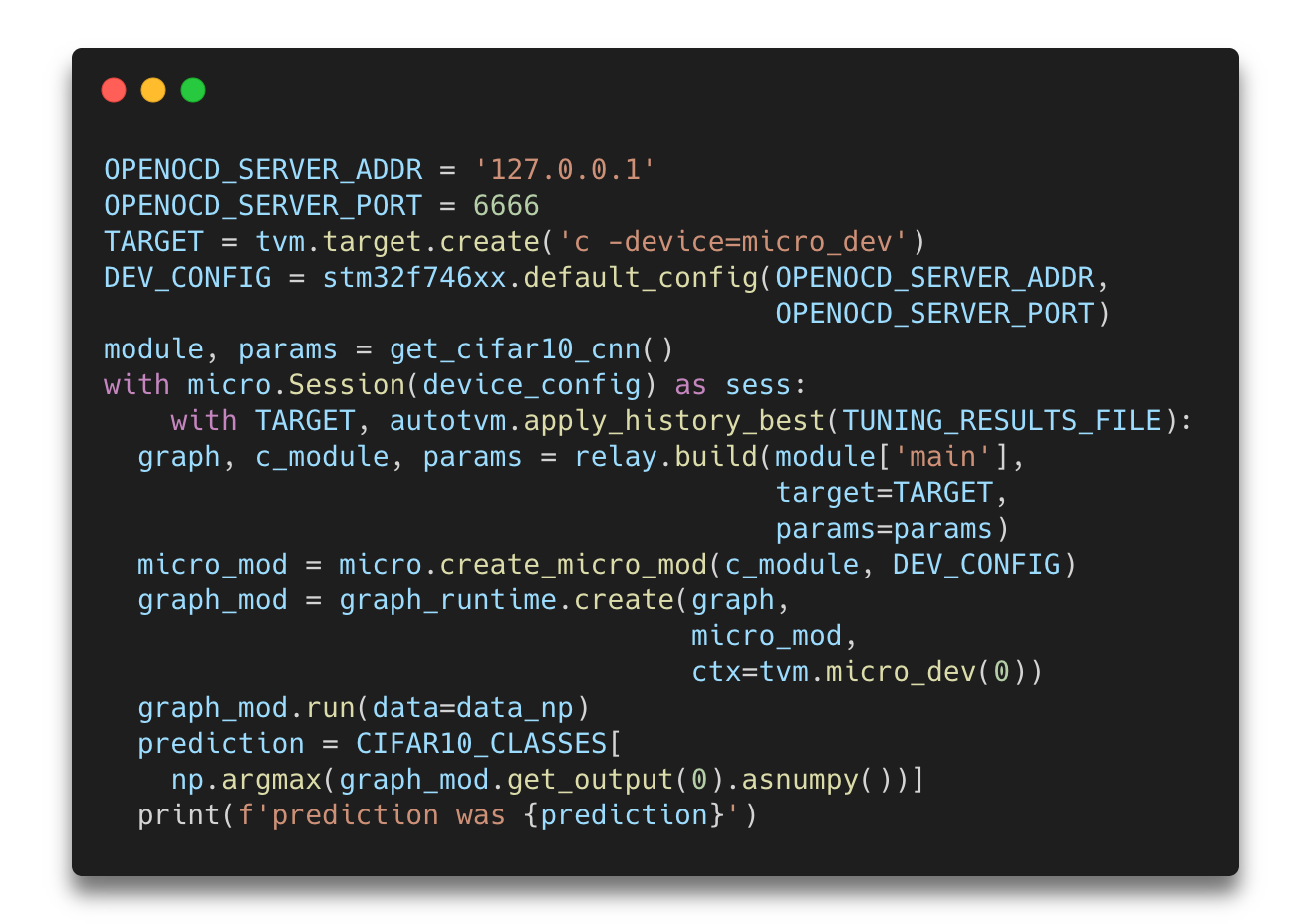

Above, we have an STM32F746ZG board housing an ARM Cortex-M7 processor, an ideal part for AI on the edge given its strong performance in a low power envelope. We use its USB-JTAG port to connect it to our desktop machine. On the desktop, we run OpenOCD to open a JTAG connection with the device; in turn, OpenOCD allows µTVM to control the M7 processor using a device-agnostic TCP socket. With this setup in place, we can run a CIFAR-10 classifier using TVM code that looks like this (full script here):

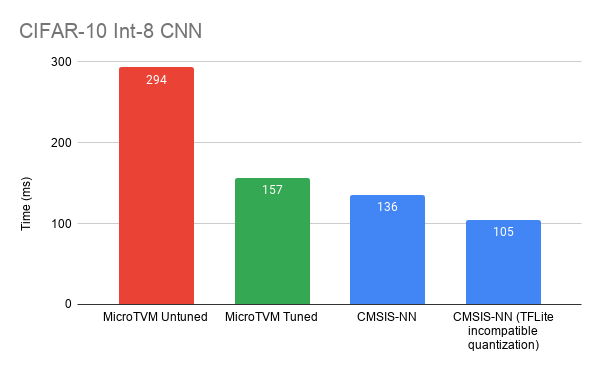
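For readers curious about what talking to OpenOCD over a TCP socket can look like, here is a minimal sketch in plain Python. It assumes OpenOCD's Tcl RPC server, which listens on port 6666 by default and terminates every message with the byte 0x1a. The helper names `connect` and `send_command` are ours for illustration, and this is not necessarily how µTVM itself drives the connection.

```python
import socket

TERMINATOR = b"\x1a"  # OpenOCD's Tcl RPC server ends every message with this byte


def connect(host="127.0.0.1", port=6666, timeout=5.0):
    """Open a TCP connection to an OpenOCD Tcl RPC server (6666 is its default port)."""
    return socket.create_connection((host, port), timeout=timeout)


def send_command(sock, command):
    """Send one command string and return the decoded reply without the terminator."""
    sock.sendall(command.encode("utf-8") + TERMINATOR)
    reply = bytearray()
    while not reply.endswith(TERMINATOR):
        data = sock.recv(4096)
        if not data:
            raise ConnectionError("socket closed before the reply was complete")
        reply.extend(data)
    return reply[:-1].decode("utf-8")


# Example (requires a running OpenOCD instance attached to a board):
#
#     with connect() as sock:
#         print(send_command(sock, "halt"))
```

Because the host only ever sees a socket, the same host-side code can in principle be reused for any board that OpenOCD supports.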

Below are the performance results of MicroTVM, compared with CMSIS-NN version 5.6.0 (commit b5ef1c9), a hand-optimized library of ML kernels.

Using AutoTVM (again, see the full post for details), we’re quite close to CMSIS-NN (especially if you want TFLite-compatible quantization support), which is code written by some of the best ARM engineers in the world! TVM with µTVM enables you to play with the best of them. To see how it works, see the detailed deep-dive written by OctoML engineer Logan Weber.

Next steps: Self-Hosted Runtime

Generating efficient ML code for embedded devices, as we showed above, is the first half of the puzzle of deploying machine learning. Running the generated kernels in a standalone capacity is next on our roadmap, since µTVM currently relies on the host to allocate tensors and to schedule function execution.
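To illustrate what "relies on the host" means, here is a toy simulation in plain Python. Everything in it is hypothetical: `FakeDevice` stands in for the microcontroller (flat memory plus a table of kernels), and `HostSession` plays the host, which decides where each tensor lives and which kernel runs next. A real setup would do these reads, writes, and calls over JTAG rather than on a local `bytearray`.

```python
import struct


class FakeDevice:
    """Stand-in for a microcontroller: flat memory plus a table of kernels."""

    def __init__(self, mem_size):
        self.memory = bytearray(mem_size)
        self.kernels = {}

    def write(self, addr, data):
        self.memory[addr:addr + len(data)] = data

    def read(self, addr, size):
        return bytes(self.memory[addr:addr + size])

    def run(self, name, *args):
        self.kernels[name](self.memory, *args)


def add_kernel(mem, a, b, out, n):
    """out[i] = a[i] + b[i] over n float32 values stored in device memory."""
    for i in range(n):
        (x,) = struct.unpack_from("<f", mem, a + 4 * i)
        (y,) = struct.unpack_from("<f", mem, b + 4 * i)
        struct.pack_into("<f", mem, out + 4 * i, x + y)


def relu_kernel(mem, src, out, n):
    """out[i] = max(src[i], 0) over n float32 values stored in device memory."""
    for i in range(n):
        (x,) = struct.unpack_from("<f", mem, src + 4 * i)
        struct.pack_into("<f", mem, out + 4 * i, max(x, 0.0))


class HostSession:
    """Host side: owns tensor allocation and decides which kernel runs when."""

    def __init__(self, device):
        self.device = device
        self.next_free = 0  # simple bump allocator over device memory

    def alloc(self, n_floats):
        addr, size = self.next_free, 4 * n_floats
        if addr + size > len(self.device.memory):
            raise MemoryError("device memory exhausted")
        self.next_free += size
        return addr

    def upload(self, addr, values):
        self.device.write(addr, struct.pack(f"<{len(values)}f", *values))

    def download(self, addr, n_floats):
        raw = self.device.read(addr, 4 * n_floats)
        return list(struct.unpack(f"<{n_floats}f", raw))

    def call(self, name, *args):
        self.device.run(name, *args)


device = FakeDevice(mem_size=256)
device.kernels.update(add=add_kernel, relu=relu_kernel)
host = HostSession(device)

n = 4
a, b, tmp, out = (host.alloc(n) for _ in range(4))  # host picks the addresses
host.upload(a, [1.0, -2.0, 3.0, -4.0])
host.upload(b, [0.5, 0.5, -5.0, 6.0])
host.call("add", a, b, tmp, n)    # host schedules each kernel in order
host.call("relu", tmp, out, n)
result = host.download(out, n)
print(result)  # [1.5, 0.0, 0.0, 2.0]
```

In a self-hosted runtime, the bookkeeping done here by `HostSession` would instead have to live on the device itself.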

Stay tuned for details on how µTVM will enable users to generate a single binary to run standalone on bare-metal devices. Users will then be able to easily integrate fast ML into their applications by linking this binary into their edge application. Much of this capability already exists in the TVM project today, and now it’s a matter of gluing it all together, so expect updates from us soon.

Conclusion

MicroTVM for single-kernel optimization and code generation is ready today and is the choice for that use case. As we now build out self-hosted deployment support, we hope you’re just as excited as we are to make µTVM the choice for model deployment as well. However, this isn’t just a spectator sport: this is all open source! Check out the TVM contributor’s guide if you’re interested in building with us, or jump straight into the TVM forums to discuss ideas first.

Also feel free to reach out to us here at info@octoml.ai if you have a business interest in, or an application for, our µTVM work.

Acknowledgements

None of this work would have been possible if not for the following people:

- Tianqi Chen, for guiding the design and for being a fantastic mentor.

- Pratyush Patel, for collaborating on early prototypes of MicroTVM.

- OctoML, for facilitating the internships where I have been able to go full steam on this project.

- Thierry Moreau, for mentoring me during my time at OctoML.

- Luis Vega, for teaching me the fundamentals of interacting with microcontrollers.

- Ramana Radhakrishnan, for supplying the Arm hardware used in our experiments and for providing guidance on its usage.