Running the industry’s most cost-effective LLaMA 65B on OctoAI

At OctoML, our research team has been working hard to reduce the cost of operating large open source foundation models like LLaMA 65B. Today we're sharing some exciting progress: our accelerated LLaMA 65B on the OctoAI* compute service costs nearly 1/5 as much as running standard LLaMA 65B on Hugging Face Accelerate, while being 37% faster despite using less hardware. This work is enabled by recent advances in post-training int4 quantization, including work from IST Austria and the University of Washington, and by our ongoing research on reducing running costs for large generative AI models like LLaMA 65B.

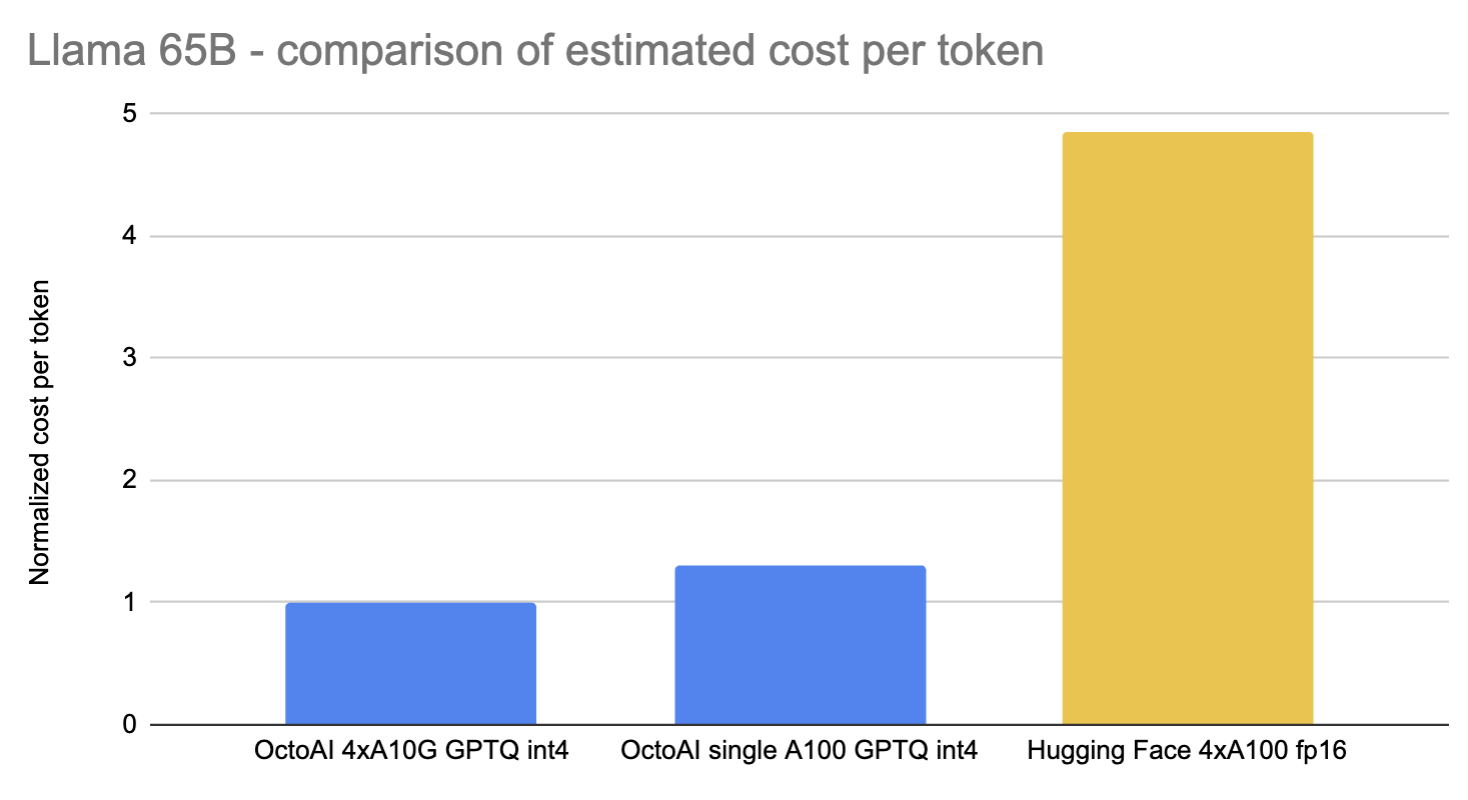

Estimated customer costs for running the accelerated int4 LLaMA 65B on OctoAI vs. the standard fp16 model with Hugging Face Accelerate

The rise of LLaMA

Ever since the launch of ChatGPT half a year ago, there has been an explosion of innovation in the world of open large language models (LLMs). Notable among these innovations was the announcement of Large Language Model Meta AI (LLaMA), an open LLM family from Meta that offers superior quality to GPT-3 on several comparisons and is the basis for some of the best open LLMs today.

LLaMA 65B is the largest and most performant model in the LLaMA family. While competitive in quality, it is a resource-hungry model that requires multiple A100 GPUs for effective operation, and those GPUs are increasingly in high demand. Improving the operational efficiency of LLaMA continues to be a focus for researchers and companies at the forefront of LLMs, as companies look for ways to take advantage of more easily available NVIDIA A10G GPUs even as they increase their adoption of generative AI models.

Building on open LLMs to offer developers more options, like our recently released commercializable multi-modal model InkyMM, is an important focus for us. We are an active collaborator and contributor in the effort to reduce LLaMA costs, and we have been evaluating several approaches to reduce its resource requirements, including experimenting with different quantization algorithms to balance cost and quality and improving the effectiveness of parallel execution. This work passed key internal benchmarking thresholds today, and we’re excited to share results!

Getting to 1/5 the cost with LLaMA 65B on OctoAI

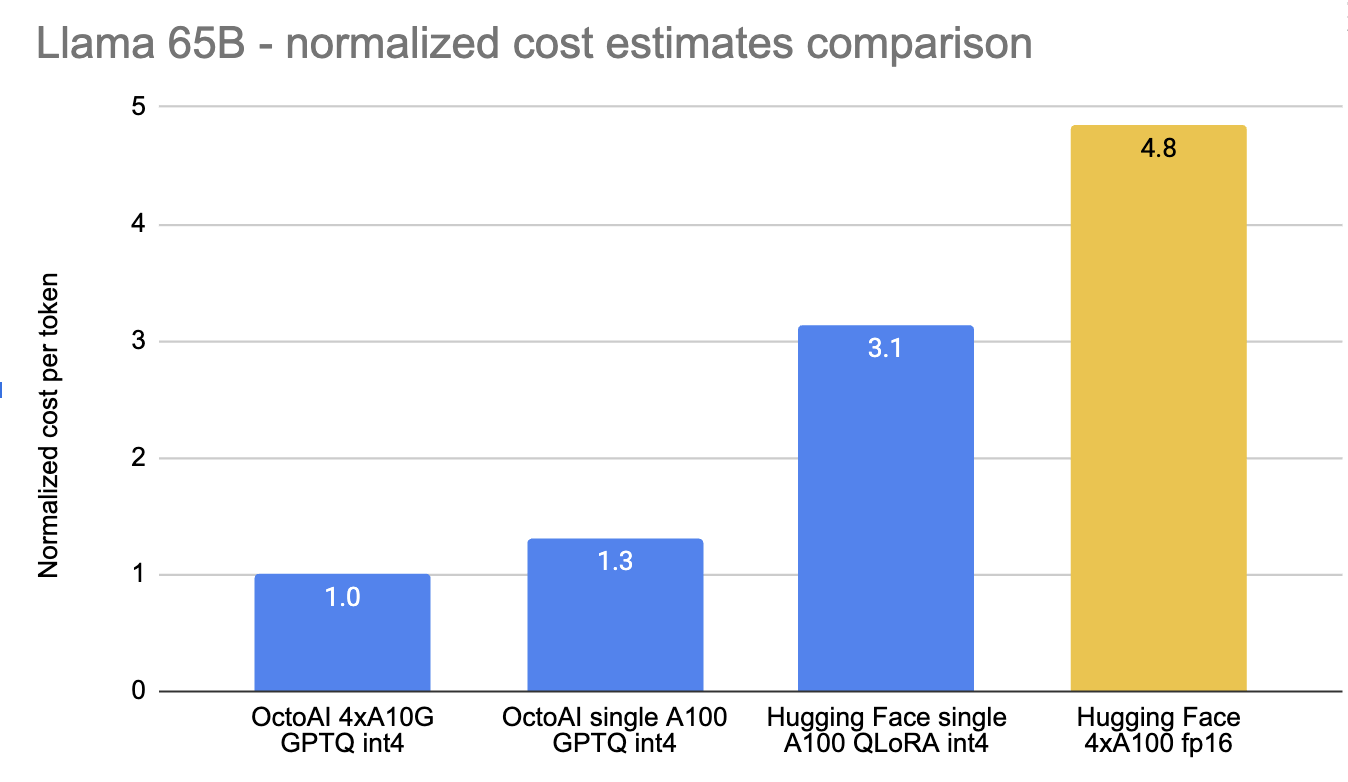

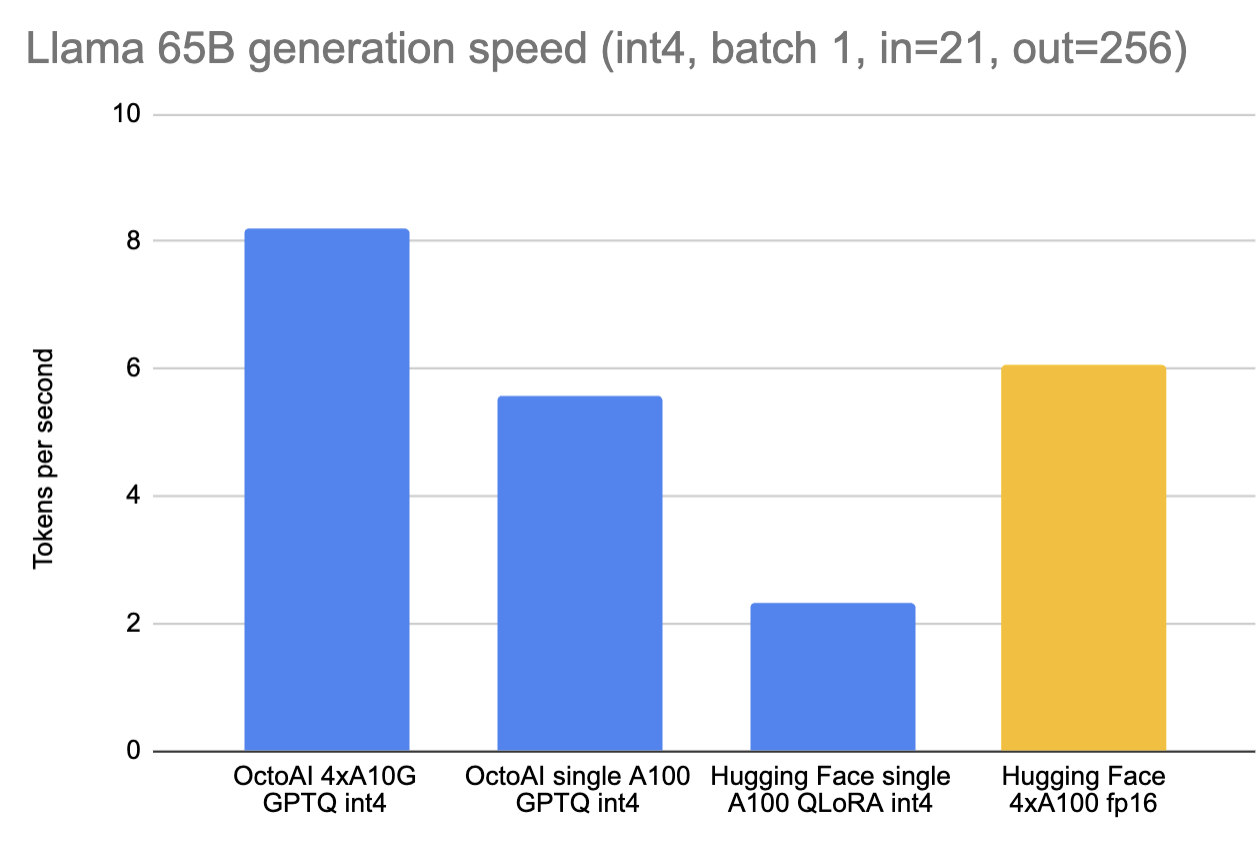

Our work builds on the recent research momentum and experimentation around the LLaMA 65B model in the LLM research community, specifically the various efforts to deliver an effective int4 quantized version of the model. We used the recently published GPTQ quantization approach, which we found works well for use cases like generative question answering, to implement int4 quantization on LLaMA 65B. With this, we were able to deploy the quantized model on one A100 GPU on OctoAI, allowing us to run it at a third of the cost of running the fp16 model and half the cost of other similar approaches to optimization. With parallelization across GPUs added, we were also able to deploy the model across four A10Gs on OctoAI*, resulting in a solution that is 37% faster than Hugging Face Accelerate on 4x A100 while running at 1/5 the cost of the base fp16 model.
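To make the hardware savings concrete, here is a rough back-of-the-envelope sketch of the weight memory involved. It is an illustration under stated assumptions, not a description of our deployment: it counts weights only (no activations, KV cache, or quantization metadata such as scales), it treats the parameter count as a nominal 65 billion, and the GPU memory sizes (40 GB or 80 GB for an A100, 24 GB for an A10G) are standard published capacities rather than figures taken from this post. The helper names are hypothetical.

```python
import math

# Nominal parameter count for LLaMA 65B (assumption: exactly 65e9).
PARAMS = 65e9


def weight_gb(params: float, bits_per_weight: int) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes), weights only."""
    return params * bits_per_weight / 8 / 1e9


def gpus_needed(total_gb: float, gpu_gb: float) -> int:
    """Smallest GPU count whose combined memory holds the weights.

    This is a lower bound: real deployments need extra headroom.
    """
    return math.ceil(total_gb / gpu_gb)


fp16_gb = weight_gb(PARAMS, 16)  # 130.0 GB
int4_gb = weight_gb(PARAMS, 4)   # 32.5 GB

# Assumed memory capacities per GPU, in GB.
for name, gpu_gb in [("A100 40GB", 40), ("A100 80GB", 80), ("A10G 24GB", 24)]:
    print(
        f"{name}: fp16 needs >= {gpus_needed(fp16_gb, gpu_gb)} GPU(s), "
        f"int4 needs >= {gpus_needed(int4_gb, gpu_gb)} GPU(s)"
    )
```

Under these assumptions, the int4 weights fit on a single A100 of either size, while the fp16 weights do not. The counts are lower bounds only, so a real multi-GPU setup such as four A10Gs leaves room for everything the weights-only estimate ignores.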

What is int4 quantization?

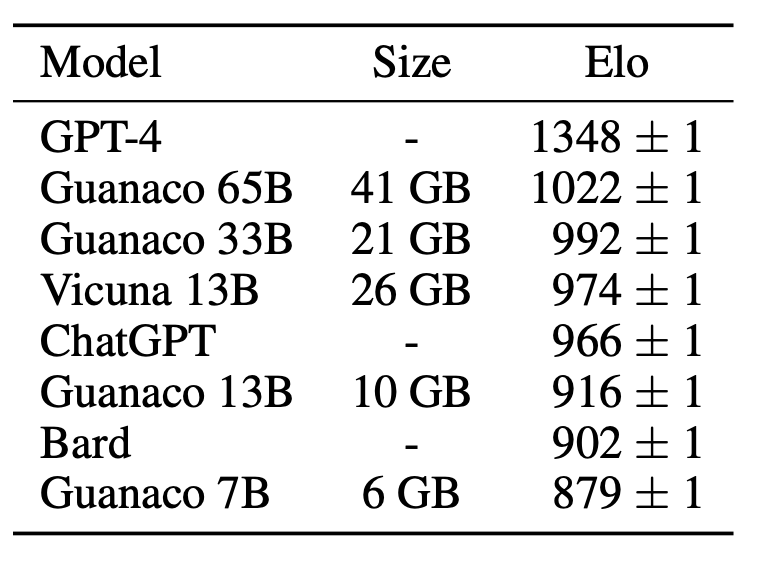

We believe that, when effectively implemented, int4 quantization can deliver high-quality results and an order-of-magnitude reduction in cost for a number of use cases. Recent research, including a University of Washington publication from just weeks ago, is starting to build empirical validation and increase confidence in the effectiveness of int4 quantization for LLaMA 65B. We believe this approach also offers a path towards efficient fine-tuning and towards cost-effectively operating customized versions of large multi-billion-parameter LLMs for specific scenarios and use cases. See the figure below, which shows that fine-tuned versions of LLaMA (Guanaco, which is a 4-bit base model, and Vicuna) are competitive with proprietary models including GPT-4, ChatGPT, and Bard.

Source: “QLoRA: Efficient Finetuning of Quantized LLMs.”

Additionally, the following figure from the GPTQ paper shows that accuracy on Q&A tasks like LAMBADA for models like LLaMA is nearly unaffected by careful 4-bit and even 3-bit quantization. This observation also holds for other tasks and datasets, including PIQA, ARC, and StoryCloze, as discussed in detail in the paper.

Source: “GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers.”
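For readers new to the idea, the sketch below shows what mapping weights to 4-bit integers means in its simplest form. It is deliberately not GPTQ: it uses plain symmetric round-to-nearest quantization with one scale per small group of weights, whereas GPTQ adds an error-compensation step on top of this basic idea. The function names, the group size, and the sample weights are all hypothetical and chosen only for illustration.

```python
def quantize_int4(weights, group_size=4):
    """Symmetric round-to-nearest quantization to the int4 range [-8, 7].

    Each group of `group_size` weights shares one floating-point scale.
    Returns (integer codes, per-group scales).
    """
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Map the largest magnitude in the group to code 7; avoid divide-by-zero.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_int4(codes, scales, group_size=4):
    """Reconstruct approximate weights from codes and per-group scales."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


# Hypothetical sample weights, two groups of four.
weights = [0.7, -0.35, 0.1, 0.0, 1.4, -1.4, 0.2, 0.6]
codes, scales = quantize_int4(weights)
restored = dequantize_int4(codes, scales)
for w, c, r in zip(weights, codes, restored):
    print(f"original={w:+.3f}  code={c:+d}  restored={r:+.3f}")
```

Each weight is stored as a 4-bit code plus a shared scale, and the reconstruction error for any weight is at most half of its group's scale. Smaller groups track the weights more closely at the price of storing more scales.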

The graphs below highlight the latency and cost comparisons between our implementation using GPTQ for int4 quantization, the recent QLoRA-based int4 quantized model discussed in the University of Washington paper running on Hugging Face, and the baseline fp16 LLaMA 65B model on Hugging Face.

And these are just our first steps. We are still early in our journey, with more speedups, fine-tuned models, and lower costs to come, and we are excited to see how developers make use of these cost, performance, and accessibility benefits. In particular, while LLaMA models are not usable today for commercial applications, we want to put these models in the hands of developers so that, as open source, commercially usable models of this scale and quality become available, developers can hit the ground running.

Run LLaMA 65B on OctoAI, available next week

Get access to the most cost-effective LLaMA 65B available to developers as early as next week on OctoAI*. Sign up for our virtual launch event. We look forward to seeing you there!

*Only the single A100 option will be available publicly on OctoAI. The parallelized multiple-A10G option is in private preview and will not be available to everyone at launch. Please reach out to us if you are interested in using this capability.