On the Apple M1, Beating Apple’s Core ML 4 With 50% Model Performance Improvements

Co-Authors: Bing Xu, Lianmin Zheng, Jared Roesch, Sayce Falk

Apple’s release of an Arm-based chip, called the M1, was a seismic shift in the personal computing landscape. As part of Apple’s release of the M1, they touted its “Blazing Fast, On-Device Machine Learning” capabilities. Based on the Apple Neural Engine, Apple promised “up to 3.5x faster CPU performance, up to 6x faster GPU performance, and up to 15x faster machine learning.”

But, as many machine learning engineers know, the actual performance of new ML-optimized hardware often lags far behind the promise. As a result, ML engineers may spend months hand-tuning their models to try to take advantage of what a new hardware target offers.

A big part of what we do at OctoML is to eliminate that months-long process by enabling superior model performance with automated optimization, even on new or emerging hardware.

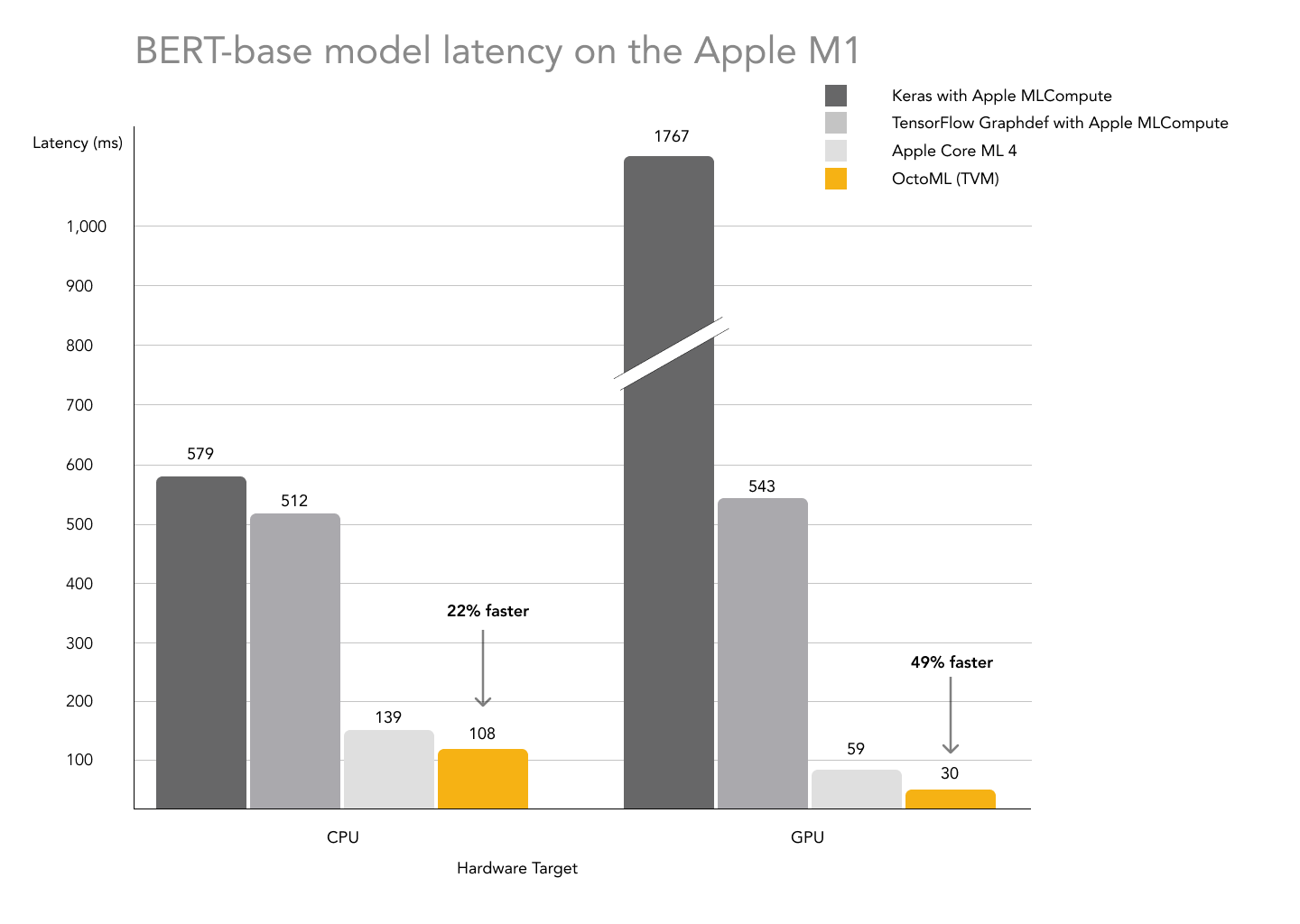

We recently got our hands on an M1 Mac Mini and, using TVM, were able to optimize and compile HuggingFace’s BERT-base model, a common NLP model used widely across the machine learning ecosystem. The results speak for themselves: compared with Apple’s Core ML, a 49% performance improvement on the GPU and a 22% performance improvement on the CPU.

Delivering 49% performance improvements over Apple’s machine learning stack on the new M1

As a point of comparison, we also ran BERT-base through Keras and TensorFlow GraphDef, both with MLCompute. Both demonstrated essentially unusable results for production inferencing, with model latencies ranging from over 500 milliseconds to over 1,000 milliseconds.

The implications of this are clear: using TVM, we were able to beat Apple’s own Core ML performance on this model with just a few weeks of effort.

How did OctoML unlock this kind of machine learning performance on the M1? An automated, machine learning-based approach to model optimization. Here’s how:

Apache TVM Auto-Scheduler Effectively Searches For Best Results

First, the TVM auto-scheduler is able to use machine learning to search out effective CPU/GPU code optimizations automatically. Machine learning and compiler engineers can’t cover all possible optimizations for all possible models when writing kernel libraries and heuristic compilers by hand, and this is doubly true for new hardware — even when you fully control the kernel and the hardware!

The TVM auto-scheduler lets users describe a tensor computation in simple math code, and then uses machine learning to generate optimized code for different hardware targets.
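To make "simple math code" concrete, here is a plain-Python reference for the matrix multiplication used throughout this section. It is only an illustration of the math, not TVM code: the function name `matmul_nt` and the tiny inputs are made up for this sketch. Note that `b` is stored with shape (N, K), so the result is A times the transpose of B.

```python
# Plain-Python reference (illustrative, not TVM): c[i][j] = sum_k a[i][k] * b[j][k]
# `matmul_nt` is a hypothetical helper name; b has shape (N, K), so this is A @ B^T.

def matmul_nt(a, b):
    """Return c of shape (M, N) with c[i][j] = sum over k of a[i][k] * b[j][k]."""
    m, n, k_dim = len(a), len(b), len(a[0])
    # Both operands must share the reduction dimension K.
    assert all(len(row) == k_dim for row in a)
    assert all(len(row) == k_dim for row in b)
    return [
        [sum(a[i][k] * b[j][k] for k in range(k_dim)) for j in range(n)]
        for i in range(m)
    ]

# Toy inputs: M=2, N=4, K=3 (the BERT workload in this post is far larger).
a = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
b = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [1.0, 1.0, 1.0],
     [2.0, 0.0, 1.0]]
c = matmul_nt(a, b)
print(c)
```

The auto-scheduler's job is to turn a declarative description like this into fast, hardware-specific loops, so the user never writes the tiling or threading by hand.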

For example, the following code describes a matrix multiplication operation in TVM:

    from tvm import auto_scheduler, te

    @auto_scheduler.register_workload
    def mm(M, N, K):
        a = te.placeholder((M, K), name="a")
        b = te.placeholder((N, K), name="b")
        k = te.reduce_axis((0, K), name="k")
        # c[i, j] = sum over k of a[i, k] * b[j, k]
        c = te.compute(
            (M, N),
            lambda i, j: te.sum(a[i, k] * b[j, k], axis=k),
        )
        return [a, b, c]

To optimize a workload used in BERT on the M1 GPU, we simply call the auto-scheduler in this way:

    M, N, K = 128, 3072, 768
    task = auto_scheduler.SearchTask(func=mm, args=(M, N, K), target="metal")
    # tune_option is an auto_scheduler.TuningOptions object configured separately
    task.tune(tune_option)

Within a few minutes, the auto-scheduler generates Metal GPU code roughly 37% faster than the MPSMatrixMultiplication kernel that macOS provides for GPU matrix multiplication: 971 GFLOPS with the auto-scheduler versus 708 GFLOPS with Metal Performance Shaders.
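As a rough sanity check on those throughput figures, the arithmetic can be sketched as follows. This assumes the usual convention of counting 2·M·N·K floating-point operations for a matrix multiplication (one multiply and one add per term); the post does not state which convention was used, and the helper names are made up for this example.

```python
# Back-of-the-envelope arithmetic for the reported GFLOPS figures.
# Assumption: a matmul costs 2 * M * N * K floating-point operations.

def matmul_flops(m, n, k):
    """One multiply and one add for every (i, j, k) triple."""
    return 2 * m * n * k

def runtime_ms(flops, gflops):
    """Time in milliseconds to execute `flops` operations at `gflops` GFLOPS."""
    return flops / (gflops * 1e9) * 1e3

M, N, K = 128, 3072, 768            # workload shape from the post
tvm_gflops, mps_gflops = 971.0, 708.0  # throughput figures from the post

flops = matmul_flops(M, N, K)
speedup = tvm_gflops / mps_gflops
tvm_ms = runtime_ms(flops, tvm_gflops)
mps_ms = runtime_ms(flops, mps_gflops)

print(f"FLOPs per matmul: {flops}")
print(f"speedup: {speedup:.3f}x")
print(f"estimated runtime: {tvm_ms:.3f} ms (auto-scheduler) vs {mps_ms:.3f} ms (MPS)")
```

Under that assumption, a single matmul of this shape takes well under a millisecond on either path, which is why per-operator gains only matter once they add up across every layer of the model.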

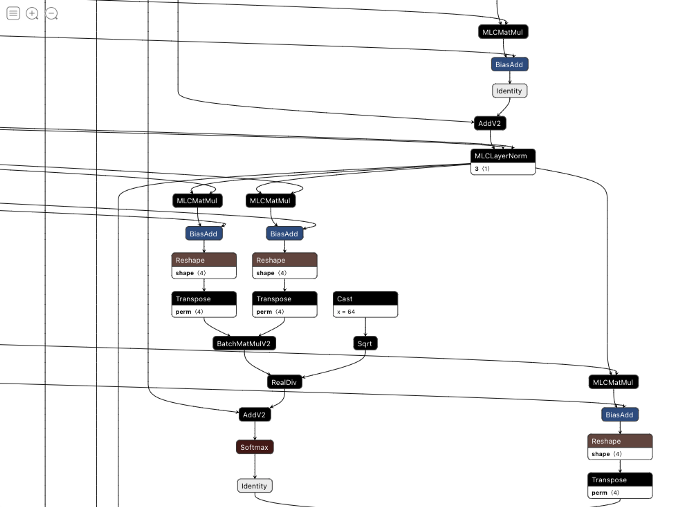

As a helpful counter-example, we dumped a block of BERT in the TensorFlow computation graph, and as you can see, there are many operators in this block that are not yet being converted to MLC operators, each of which would require manual development on the part of the Apple team. Covering all of these cases manually is extremely challenging.

BERT operators not converting to MLC operators

Apache TVM Fusing Graphs To Reduce Memory Demands

Second, TVM is able to fuse qualified subgraphs to reduce pressure on the M1’s memory bandwidth and directly generate code for the specified targets. Without TVM’s automation, Core ML optimizes to a fixed set of fusion patterns and certain subgraphs, which means that unless you happen to use a pattern already optimized by Apple (which can be hard to determine), then the resulting performance on your model can be suboptimal.

In most backends today, fusion covers only the simplest patterns, such as a convolution followed by ReLU. As an example, consider a newer activation, hard_swish, applied on top of a gemm layer. It looks like this:

$y = x \cdot \frac{\mathrm{ReLU6}(x+3)}{6}$

In a traditional framework, a gemm followed by that single activation would run as five separate operations: gemm, broadcast add, relu6, broadcast div, and element-wise multiplication.
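The difference between running those element-wise steps separately and running them fused can be sketched in plain Python. This is only an illustration under simplifying assumptions: it works on a flat list, leaves the gemm out, and uses hypothetical function names. It is not how TVM or any framework implements the operators.

```python
# Illustrative sketch: hard_swish as separate element-wise passes vs. one fused pass.
# Function names are hypothetical; the gemm that produces x is omitted.

def relu6(v):
    """Clamp v to the range [0, 6]."""
    return min(max(v, 0.0), 6.0)

def hard_swish_unfused(x):
    """Each step makes a full pass over the data and writes a temporary list."""
    t1 = [v + 3.0 for v in x]               # broadcast add
    t2 = [relu6(v) for v in t1]             # relu6
    t3 = [v / 6.0 for v in t2]              # broadcast div
    return [a * b for a, b in zip(x, t3)]   # element-wise multiplication

def hard_swish_fused(x):
    """Same math in a single pass, with no intermediate lists."""
    return [v * (relu6(v + 3.0) / 6.0) for v in x]

xs = [-4.0, -3.0, -1.0, 0.0, 1.5, 3.0, 5.0]
unfused = hard_swish_unfused(xs)
fused = hard_swish_fused(xs)
print(fused)
```

Both versions compute the same values; the unfused one simply reads and writes the whole tensor several more times, which is the memory-bandwidth cost that fusion removes.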

With TVM, the gemm (or a convolution operator) and the activation steps that follow it are fused into a single operator with no manual effort. And, because of the TVM auto-scheduler, operator performance remains essentially unaffected. For example, if we put a hard_swish activation on top of the gemm workload above, like this:

    from tvm import auto_scheduler, te, topi

    @auto_scheduler.register_workload
    def swish_mm(M, N, K):
        a = te.placeholder((M, K), name="a")
        b = te.placeholder((N, K), name="b")
        k = te.reduce_axis((0, K), name="k")
        # gemm: c[i, j] = sum over k of a[i, k] * b[j, k]
        c = te.compute(
            (M, N),
            lambda i, j: te.sum(a[i, k] * b[j, k], axis=k),
        )

        def hard_swish(x):
            x_plus_3 = te.compute(x.shape, lambda i, j: x[i, j] + 3.0)
            relu6 = topi.clip(x_plus_3, 0.0, 6.0)
            # 0.1666667 approximates 1/6
            return te.compute(
                x.shape,
                lambda i, j: relu6[i, j] * x[i, j] * 0.1666667,
            )

        d = hard_swish(c)
        return [a, b, d]

As a result, we obtain a single fused operator running at 875 GFLOPS, roughly 24% faster than standalone MPSMatrixMultiplication at 708 GFLOPS (and the MPS figure does not include the running time of the remaining operators). In practice, if the deep learning model is converted to TVM Relay, fusion is performed automatically during compilation.

Conclusion

We think this is only the beginning of the performance improvement story for M1 chips. On the CPU side, we are using LLVM 11 as a backend, which is not able to generate high quality FMA instructions. On the GPU side, we think there is potential to enlarge the search space to make the auto-scheduler even better at finding optimizations. And, in the next year, we’ll also be developing a training solution which uses both TVM and auto-scheduling, potentially targeting a wider range of hardware, including but not limited to the M1 CPU and GPU.

Here’s the public repo where we’ve open-sourced our benchmarking process — check it out, share your thoughts, and join the TVM community if you’d like to contribute to superior model inferencing performance.

If your team is interested in sponsoring further M1-based development, or extending TVM’s formidable capabilities for your hardware or ML applications, get in touch!

And for teams looking for programmatic access to model optimization, OctoML’s Octomizer is now in Early Access — sign up here to indicate your interest.