We’re excited to announce the OctoML Profiler – a new tool that empowers developers to assess and reduce the compute needs of deep-learning-based applications to avoid costly over-provisioning.

Test your deep learning applications with powerful optimizations and popular hardware

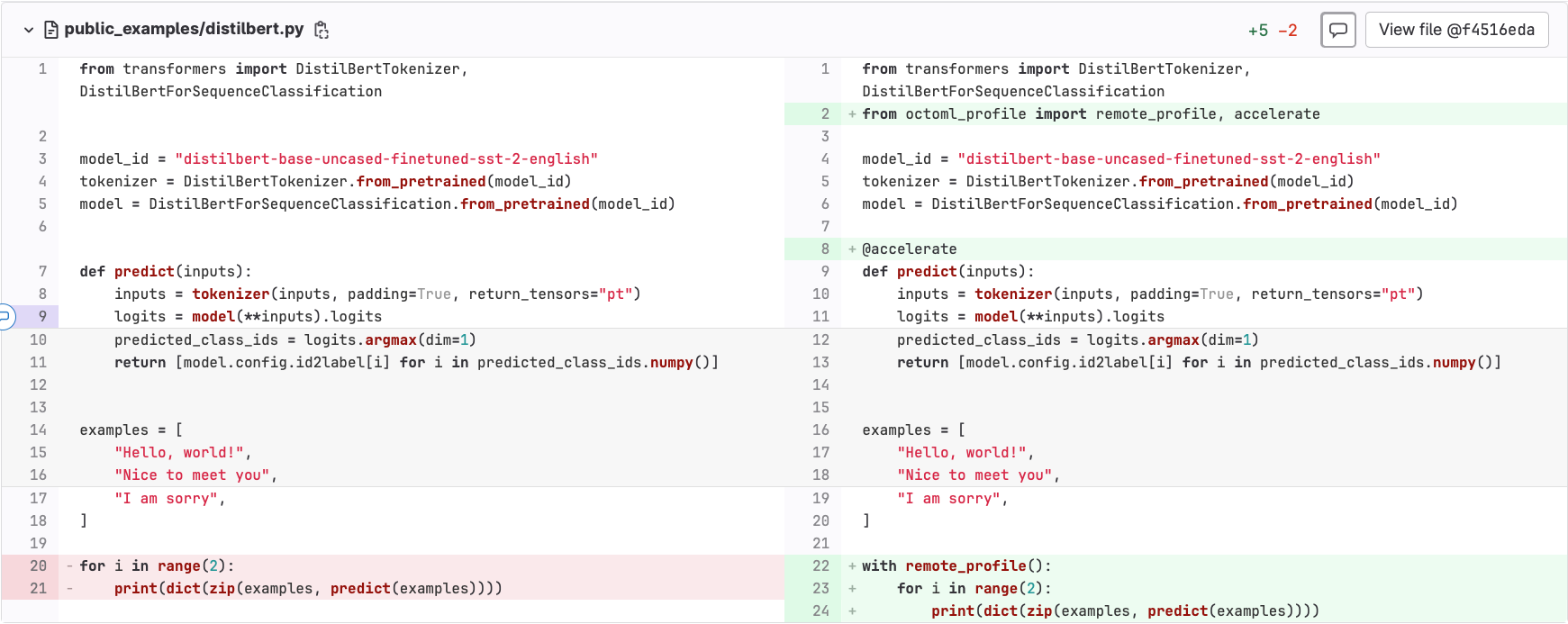

The OctoML Profiler does just what the name suggests: it uses the latest PyTorch 2.0 compiler technology to automatically offload models to cloud devices and generate a ‘profile’ of your application's model. The Profiler runs real inputs on a variety of runtimes and hardware targets to help you discover where your application runs best. Previously, this level of insight required manual investigation and analysis by trained machine learning engineers: spinning up different hardware targets, optimizing code for each, and piecing together a side-by-side comparison. Now, by adding only three lines of code to any PyTorch inference code, users can quickly compare how an optimized version of that code will perform on different popular GPUs and CPUs.

    Profile 1/1 ran 1 time with 10 repeats per call:

       Segment                            Samples  Avg ms  Failures
    ===============================================================
    0  Uncompiled                               1   0.901
    1  Graph #1
         r6i.large/torch-eager-cpu             10  33.475         0
         g4dn.xlarge/torch-eager-cuda          10   5.581         0
         g4dn.xlarge/torch-inductor-cuda       10   4.781         0
    2  Uncompiled                               1   0.125
    3  Graph #2
         r6i.large/torch-eager-cpu             10   0.020         0
         g4dn.xlarge/torch-eager-cuda          10   0.098         0
         g4dn.xlarge/torch-inductor-cuda       10   0.207         0
    4  Uncompiled                               1   0.095
    ---------------------------------------------------------------
    Total uncompiled code run time: 1.121 ms
    Total times (compiled + uncompiled) and on-demand cost per million inferences per backend:
      r6i.large/torch-eager-cpu (Intel Ice Lake)   34.616ms  $1.21
      g4dn.xlarge/torch-eager-cuda (Nvidia T4)      6.800ms  $0.99
      g4dn.xlarge/torch-inductor-cuda (Nvidia T4)   6.110ms  $0.89

During profiling, model inference on your real inputs is run remotely in the cloud.
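The totals and costs in the profile above can be reproduced by hand, which is a useful sanity check when reading a report like this. The sketch below sums the per-segment averages for each backend and converts the total latency into a cost per million inferences. The hourly prices (`0.126` and `0.526` USD) are assumptions, not figures from this post; they were picked because they are consistent with the dollar amounts shown. The helper names `total_ms` and `cost_per_million` are hypothetical and are not part of the Profiler.

```python
# Per-segment average latencies in ms, copied from the profile output above.
UNCOMPILED_MS = [0.901, 0.125, 0.095]
COMPILED_MS = {
    "r6i.large/torch-eager-cpu": [33.475, 0.020],
    "g4dn.xlarge/torch-eager-cuda": [5.581, 0.098],
    "g4dn.xlarge/torch-inductor-cuda": [4.781, 0.207],
}

# ASSUMPTION: on-demand hourly prices in USD. The post does not state them.
HOURLY_USD = {"r6i.large": 0.126, "g4dn.xlarge": 0.526}


def total_ms(backend: str) -> float:
    """End-to-end latency: compiled graph segments plus uncompiled code."""
    return sum(COMPILED_MS[backend]) + sum(UNCOMPILED_MS)


def cost_per_million(backend: str) -> float:
    """USD to run one million sequential inferences on one instance."""
    instance = backend.split("/")[0]
    hours = total_ms(backend) / 1000.0 * 1_000_000 / 3600.0
    return hours * HOURLY_USD[instance]


if __name__ == "__main__":
    for name in COMPILED_MS:
        print(f"{name:35s} {total_ms(name):7.3f} ms  ${cost_per_million(name):.2f}")
```

Under these assumed prices the script yields $1.21, $0.99, and $0.89, matching the report. The inductor total comes out as 6.109 ms rather than 6.110 ms, presumably because the per-segment values in the report are already rounded.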

Predictive end-to-end performance is critical for Generative AI

Code and model are strongly interwoven when building applications with complex generative models. In a world before OctoML Profiler, if an LLM-based application developer wanted to see how much acceleration they could achieve on different GPU and CPU hardware choices, they would have to:

- Export their model and load it into several different non-PyTorch runtimes
- Adapt their code to those runtimes
- Manually spin up various hardware instances for testing
- Perform one-by-one benchmarking on those instances

Even after all this, it’s still difficult to make apples-to-apples comparisons.
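For a sense of what that last manual step involves, here is a minimal sketch of a hand-rolled timing harness of the kind a developer would have to run separately on each instance. It is not the Profiler's implementation. The helpers `benchmark` and `compare` are hypothetical names, and the candidates are plain Python callables standing in for real model calls.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], repeats: int = 10, warmup: int = 2) -> float:
    """Return the mean wall-clock time of fn() in milliseconds."""
    # Warm-up calls are executed but not timed (caches, lazy initialization).
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples)


def compare(
    candidates: Dict[str, Callable[[], object]],
    repeats: int = 10,
    warmup: int = 2,
) -> Dict[str, float]:
    """Time each named candidate with identical settings."""
    return {name: benchmark(fn, repeats, warmup) for name, fn in candidates.items()}


if __name__ == "__main__":
    # Stand-in workloads; a real comparison would call a model here.
    results = compare({
        "small": lambda: sum(range(10_000)),
        "large": lambda: sum(range(100_000)),
    })
    for name, ms in results.items():
        print(f"{name}: {ms:.3f} ms")
```

Even with identical settings, a harness like this only measures the machine it runs on, so each hardware target still needs its own run, and differences in drivers, runtimes, and background load make the numbers hard to compare directly.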

With OctoML Profiler, developers can get the same optimized results with a three-line code change. Let’s say a developer is working with Google’s Flan-T5 to build an application. Using OctoML Profiler, they can iteratively test the performance offered by the following combinations in about five minutes:

- Model variant alterations (Flan-T5 small, base, large, XL*) and other code changes
- Acceleration libraries (CUDA, TRT, default ONNX CPU library, TVM*)
- Hardware targets (Ice Lake, Graviton, Tesla T4, A10G)

The best part is they wouldn’t need to leave their dev environment to do it.

Why does insight into generative model performance matter to developers?

The answer lies in our recent analysis of GPT-J configurations where we saw massive variability in compute requirements (from “runs fast on commodity GPUs” to “basically unusable”) and cost (up to 14x more expensive). The bottom line: nobody wants to be the creator of an unusably slow application that is 14x more expensive than it needs to be.

As AI applications just become “applications”, developers will see the boundaries blur between deep learning models and application code. Excitingly, this will result in more sophisticated applications, but it also means that developers must be armed with new tools to optimize AI software to control costs and keep performance high. The OctoML Profiler makes it much faster to find the perfect sweet spot between model accuracy, cost, and performance.

Try OctoML Profiler

- With only three lines of code, find out how much your PyTorch application can be accelerated on various GPU and CPU hardware targets.
- Easily examine the balance between accuracy, performance, and cost for your deep learning code.
- Stay in your favorite coding environment and on your developer laptop. No need to provision hardware or tackle dependencies.

The OctoML Profiler is currently in early access beta and will be available free to everyone on March 23, with documentation to get started. Sign up for the upcoming live demo, where our team will walk through how to get the most out of the OctoML Profiler.
