OctoML ≔ Easier machine learning

Performance, portability, and ease of use. Pick all three.

Machine learning and deep learning (ML/DL) are making large impacts across the computing field, and the horizon is bright with the rapidly growing capabilities they add to an engineer’s toolbox. But despite significant academic progress and a few high-visibility deployments, the road to adding ML to real products today is paved with expensive specialists who must navigate a complex, churning software/hardware landscape, which still often leads to time-to-market delays and cost overruns. Simply put: machine learning is still too hard.

So why the disconnect? How are companies like Google, Facebook, Apple, and Microsoft able to create and deploy ML products that delight us while the vast majority of developers are still struggling? In a word: talent. These companies can attract, afford, and build expensive machine learning research, engineering, and ops teams that let them brute-force their way past the difficulties everyone faces when deploying machine learning today:

ML Pain #1: Machine learning libraries and services are low level and do not sufficiently abstract away complexity.

While the many open source ML tools available today (there are hundreds of them) attempt to make the various flows of ML application development easier, they often require a nuanced understanding of the underlying algorithms and computational details. And when these tools are composed together, the abstractions are often leaky, and the promised ease of use and one-click deployment often fail to materialize. This leads to one-off implementations and workflows that only ML experts can implement. We need more powerful abstractions and automation in our ML systems to make things simple enough for most developers, or we will keep needing our ML “Formula 1 drivers” to do every oil change and tire rotation.

ML Pain #2: Machine learning computations are typically slow and not hardware portable.

As explained in the excellent paper “Machine learning systems are stuck in a rut”, modern machine learning systems, all the way from hardware to software, have overfit to a small set of common machine learning patterns, which causes the performance and usability of these systems to suffer whenever you depart from that common set of benchmarks. Time and again, this bites people: they see great ResNet-50 numbers from their favorite hardware or software provider, and then the performance vanishes (or the model crashes completely) once they apply a few tweaks or try to move to another, similar hardware platform for deployment.

Both of these pains can be traced back to a common source in the core layers of the machine learning stack: all modern machine learning today is built on fragile, inflexible foundations. Specifically, these are the high-performance kernels at the center of libraries like Nvidia’s cuDNN and Intel’s MKL-DNN, and the similar kernels in the guts of TensorFlow, PyTorch, and scikit-learn. We’ll dive deeper into why the traditional way of writing, optimizing, and debugging these kernels is holding back ML progress in a future blog post, so stay tuned.

It is hard to experiment with front-end features [to make machine learning easier], because it is painful to match them to back ends that expect calls to monolithic kernels with fixed layout. On the other hand, there is little incentive to build high quality back ends that support other features, because all the front ends currently work in terms of monolithic operators.

Machine learning systems are stuck in a rut. Barham & Isard, HotOS'19

Several years ago, a few of us set out to rebuild the ML software stack from the ground up to fix both the root causes and the symptoms of these problems. Guided by the design tenets of automation, cross-platform compatibility, and usability, and built around a composable core, the Apache TVM project is now in use at most major deep learning companies, powers every Amazon Alexa wake word detection, and enables more than 80-fold improvements in Facebook speech models in use today. By building a deep learning compiler together with an ever-growing community, Amazon, Facebook, Microsoft, and others have been able to produce cross-platform, high-performance code for their workloads with less effort than writing low-level kernels the old-fashioned way.

Seeing these great use cases of TVM, we realized we could, and needed to, go further up the stack to expose these benefits and capabilities to even more developers. That led us to found OctoML and create our first product:

The Octomizer

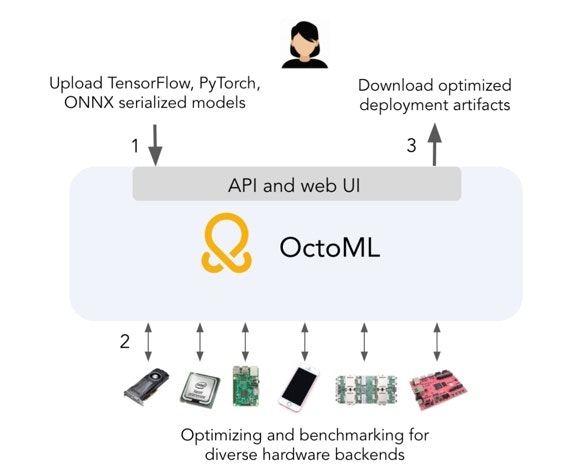

The Octomizer is a software as a service (SaaS) product designed to make it easy for anyone to deploy ML models as part of their applications. A developer or data scientist simply uploads a machine learning model (TensorFlow, PyTorch, ONNX, etc.), and we optimize, benchmark, and package that model across a number of hardware platforms and application language runtimes. The Octomizer makes it easy to:

- Amplify your data science team by reducing their turnaround time from model training to model deployment.
- Lower ML cloud operating costs.
- Or drive cloud costs to zero by enabling data scientists to deploy more models to edge, mobile, and AIoT devices.

Use the Octomizer to compare the performance of a model across various cloud CPU and GPU instance types, and to evaluate the device sizing needed to deploy your models on ARM mobile or embedded processors, which can improve data privacy and potentially reduce hardware bill-of-materials (BOM) costs. Then you can choose from a variety of deployment packaging formats, such as a Python wheel, a shared library with a C API, a serverless cloud tarball, a Docker image with a gRPC or REST wrapper, a Maven Java artifact, or others.

Once you have your binary in hand, you are free to deploy your model using whatever deployment machinery you currently use, and to further automate the process by using the Octomizer API to optimize and build your model as part of your application’s existing CI/CD pipeline.

This lets the users we’ve talked to avoid the weeks or months of effort it typically takes to get a trained model into production across a variety of devices. It saves you the hassle of worrying about (or trying to fix) performance, operator, or packaging inconsistencies across platforms, and it opens the door to deployments closer to the edge that reduce compute cost and latency or improve user privacy and data security.

And this only scratches the surface: we are working to more fully leverage the “ML applied to ML” compilation procedure we use under the hood to improve the performance, memory footprint, and disk footprint of models on an ongoing basis. The Octomizer is also continuously improving as we add more hardware, more benchmarks, more packaging formats, and faster optimization routines. Stay tuned for future blog posts where we dive into concrete use cases and examples of these benefits.

The Octomizer is currently in private beta, but if you’re interested in trying it out or hearing about future updates and releases, make sure to sign up here.
