OctoML and AMD partner to speed-up ML deployments from cloud to edge

Authors: Thierry Moreau and Juan Antonio Carballo

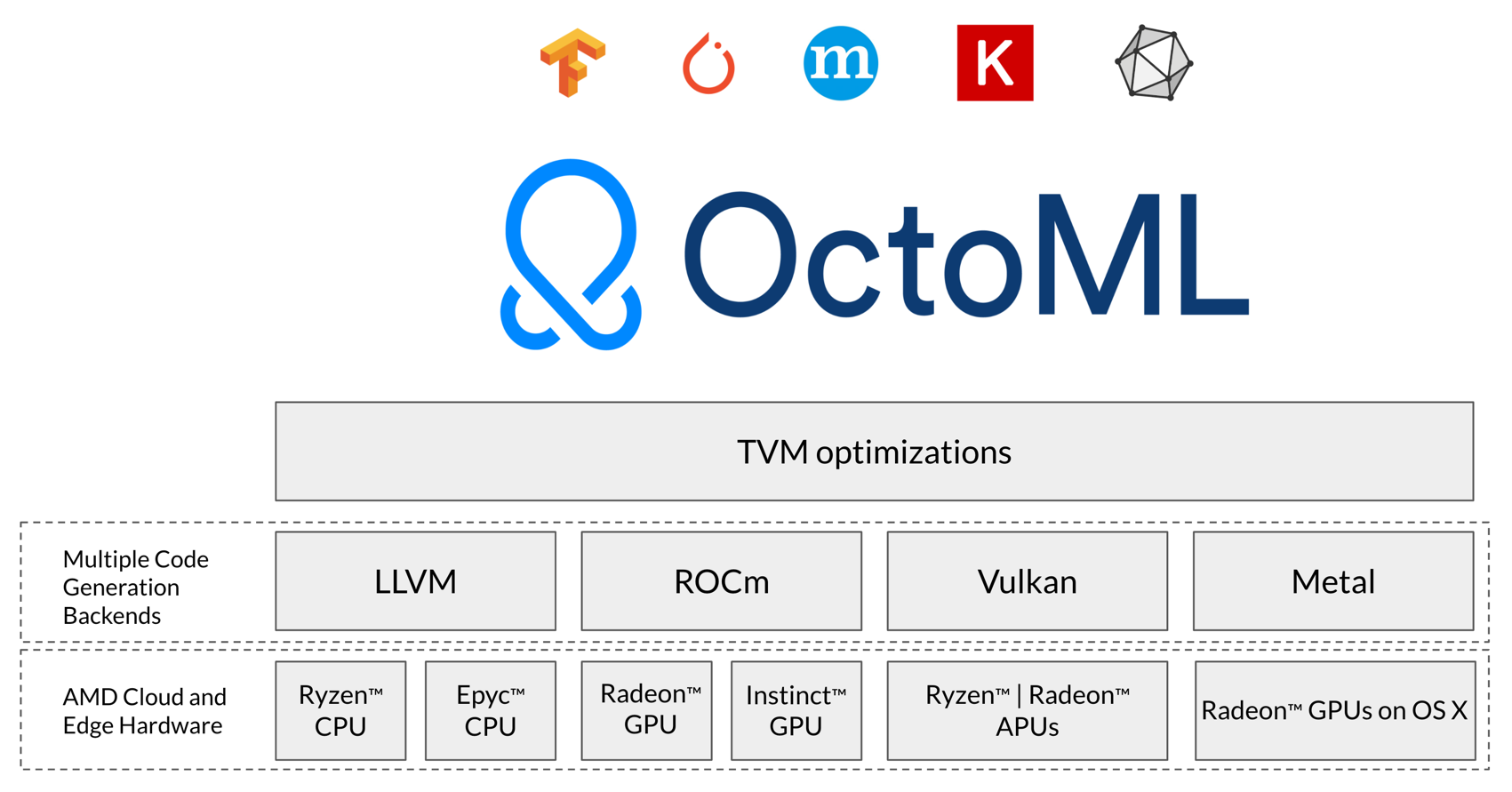

Today we are incredibly excited to share our collaboration with AMD around both Apache TVM and our commercial OctoML Machine Learning Deployment Platform. The addition of AMD as a key player in our growing OctoML ecosystem is a major win for our collective customers, users and communities that want to deploy next-generation ML applications easily on AMD CPUs, GPUs, and APUs. With Apache TVM running on AMD’s full suite of hardware, ML models can run anywhere from the cloud to the edge—including servers, laptops, gaming PCs, embedded systems (e.g. automotive and industrial products), and more.

AMD’s commitment to Apache TVM, the open source stack for ML performance and portability, provides important validation of our mission to make high performance ML more accessible to a wider community. Now our joint AMD and OctoML customers can be confident that all their models, regardless of the ML framework they were trained in, will always be high performing on a chosen inference target. TVM also simplifies deployment by leveraging multiple code generation backends depending on the target hardware and operating system. Via one common interface, TVM allows customers to quickly optimize and deploy any model to production.

But deployment flexibility is not enough. Machine learning teams also demand world-class performance. As part of our collaboration, we extended TVM to exploit novel AMD hardware features that accelerate deep learning such as XDLOps on the Radeon Instinct MI100 GPUs, and dual INT8 pipes on AMD EPYC Milan server CPUs. When benchmarking an MLPerf workload on EPYC Milan CPUs, we achieved 93% of the theoretical single-core FP32 maximum throughput. In addition, on AMD Radeon Instinct MI100 GPUs, we achieved over 30TFlops on FP32 transformer-type workloads. For AMD customers, this means getting state of the art performance from their AMD-based inference hardware.

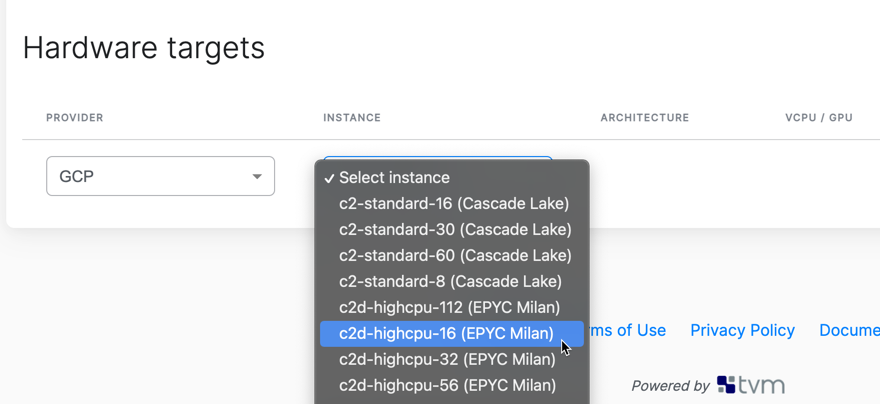

OctoML UI showing EPYC Milan CPUs as hardware target options

We’re also excited to share that the OctoML Machine Learning Deployment Platform now supports the AMD EPYC Milan CPU across multiple leading cloud providers. EPYC Milan instances provide high core count and microarchitecture improvements over the previous generation, EPYC Rome, delivering high performance when serving AI/ML applications in the cloud.

The OctoML platform is the only solution that offers customers a unified, ML deployment lifecycle that automates model performance acceleration, enables comparative benchmarking across cloud and edge stacks and provides ready-for-production packaging of accelerated models across different production environments. By selecting the ideal deployment target based on our comprehensive benchmarking, customers also save on inference costs by getting the best price to performance ratio for their models.

If you are exploring AMD as a hardware vendor for your ML deployment, sign up for an early access trial of OctoML.

Related Posts

The Call For Proposals for the fourth annual TVMcon (Dec 15-17) is open till October 15. We encourage you to submit your machine learning acceleration talk!

The OctoML Machine Learning Deployment Platform now supports inferencing targets from the cloud to the edge, including hardware from leading industry vendors like AWS, GCP, NVIDIA, AMD, Arm, and Intel.