Leveraging block sparsity with Apache TVM to halve your cloud bill for NLP

## Intro

The natural language processing (NLP) community has been transformed by the recent performance and versatility of transformer models from the deep learning research community. To make this point concrete, just look at the ability of BERT (one of the most well-known transformer models) to read a paragraph of text and answer free-form questions about that text at greater than human-level performance. Beyond question answering, these same transformer models have been shown to be industry leading across many NLP domains, including sentiment analysis, language translation, summarization, and text generation.

All of this together explains why many industries are being transformed or heavily impacted by the ability of computer programs to read, understand, manipulate, and generate human language.

While the research results have been exciting to watch, for a while it was difficult to actually get your hands on these models and use them in practice. That changed when our friends at HuggingFace showed up to lead the pack in supplying high-quality implementations of these models and some of the surrounding machinery (e.g., tokenizers).

## Transformer models production performance challenges

But these models are typically large and require large amounts of computation, making them expensive to deploy into production and often adding significant latency to query response times.

This is especially true when you consider that each of these models contains hundreds of millions of parameters and that you may want to cater to millions (billions, in the case of Roblox) of user requests at a time. This has led to many efforts, including quantization, pruning, distillation, and batching, to improve execution performance and model size for these large transformer models.

One way to improve the performance of these models is through optimization coupled with compilation. Instead of executing each operation in the deep learning network in sequence, we analyze the network as a whole, benefit from optimizations such as operator fusion, and generate code specific to a particular network structure.
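To give a feel for what operator fusion buys you, here is a minimal pure-Python sketch. It is not TVM code; the function names and the scale/bias/ReLU chain are assumptions chosen only for illustration. The unfused version runs three "operators" one after another and writes a full intermediate buffer each time, while the fused version computes the same result in a single pass.

```python
def unfused(xs, scale, bias):
    # Three separate "operators", each materializing a full intermediate list.
    scaled = [x * scale for x in xs]
    shifted = [s + bias for s in scaled]
    return [max(0.0, v) for v in shifted]


def fused(xs, scale, bias):
    # One pass over the data; no intermediate buffers are written.
    return [max(0.0, x * scale + bias) for x in xs]


xs = [-2.0, -0.5, 0.0, 1.5]
# Both versions compute the same values; only the memory traffic differs.
assert unfused(xs, 2.0, 1.0) == fused(xs, 2.0, 1.0)
```

A compiler can make this kind of rewrite automatically because it sees the whole graph rather than one operation at a time.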

The Apache TVM project was designed to do exactly this in order to improve performance AND make it easier to run a network on any class of hardware so that you can “build once and run anywhere”.

Another popular approach to improving model speed is pruning, where the less important weight values of a network are replaced with zeros, which are more easily compressed in memory and on disk and can theoretically be skipped during execution. Indeed, HuggingFace recently published their PruneBERT model, which was pruned to 95% sparsity during fine-tuning. This means that only 5% of the weights are non-zero, while the model still retains nearly 95% of BERT-base’s accuracy on SQuAD v1.1.
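As a rough illustration of what "replacing the less important weights with zeros" means, the sketch below uses plain magnitude pruning: it zeroes the weights with the smallest absolute values until a target sparsity is reached. This is a deliberately simple stand-in and not necessarily the method used to produce PruneBERT, which was pruned during fine-tuning; the helper names and the toy weights are assumptions for illustration.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def sparsity_of(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Toy weights, made up for this example.
weights = [0.9, -0.05, 0.02, -1.3, 0.4, 0.01, -0.03, 0.07, 0.6, -0.002]
pruned = magnitude_prune(weights, 0.8)
print(pruned)               # only 0.9 and -1.3 survive
print(sparsity_of(pruned))  # 0.8
```

Note that the surviving weights stay wherever they happened to be, so the zeros are scattered through the tensor. That scattering is exactly what makes this kind of sparsity hard to exploit at execution time.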

Unfortunately, most deep learning frameworks and execution engines are not designed to benefit from this type of optimization. Indeed, if you load PruneBERT into an existing inference engine, you’ll find that it performs exactly the same as the unpruned model, because the engine treats your sparse network as a dense network. And even in the cases where an engine does support sparse operations, these kernels are typically only implemented for a few layer types and a few hardware platforms.

## Efficient sparse models with compilation

Sparse networks are where compilers like TVM again shine, because we can use code generation to benefit from sparsity on more hardware platforms more easily.

TVM applies a few tricks to PruneBERT to leverage its sparsity to speed up computation. The first step is to identify which layers in the model are sparse enough to be worth converting to a sparse matrix multiply. However, it is very difficult to speed up unstructured sparsity because of how values are loaded into cache: when the zeros in a sparse tensor are randomly distributed, they end up being loaded whenever adjacent non-zero values are. TVM instead converts compatible layers to a *block sparse* representation, where entire chunks of a tensor have all-zero values. This allows entire tiles to be skipped during computation, which is quite a bit faster. For more details on how this works, check out the sparse tutorial on the TVM website.

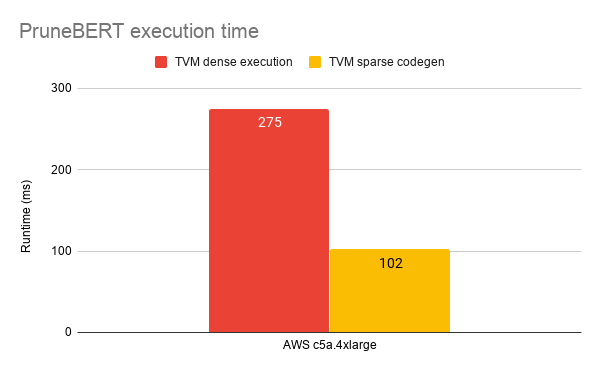
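The block sparse idea can be sketched in a few lines of pure Python. This is not how TVM stores or executes these layers; the dictionary layout, the function names, and the 2x2 block size are assumptions chosen to keep the example small. The point is only that all-zero blocks are never stored, so the multiply loop never visits them.

```python
def to_block_sparse(dense, bs_r, bs_c):
    """Keep only the (bs_r x bs_c) blocks that contain a non-zero value.

    Returns a dict mapping (block_row, block_col) -> block (list of rows).
    """
    n_rows, n_cols = len(dense), len(dense[0])
    blocks = {}
    for br in range(0, n_rows, bs_r):
        for bc in range(0, n_cols, bs_c):
            block = [row[bc:bc + bs_c] for row in dense[br:br + bs_r]]
            if any(v != 0 for row in block for v in row):
                blocks[(br // bs_r, bc // bs_c)] = block
    return blocks


def block_sparse_matvec(blocks, x, n_rows, bs_r, bs_c):
    """Compute y = W @ x while touching only the stored (non-zero) blocks."""
    y = [0.0] * n_rows
    for (bi, bj), block in blocks.items():
        for r, row in enumerate(block):
            for c, w in enumerate(row):
                y[bi * bs_r + r] += w * x[bj * bs_c + c]
    return y


# Toy 4x4 weight matrix: two of its four 2x2 blocks are entirely zero.
W = [
    [1, 2, 0, 0],
    [3, 4, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 5, 0],
]
blocks = to_block_sparse(W, 2, 2)
y = block_sparse_matvec(blocks, [1, 1, 1, 1], n_rows=4, bs_r=2, bs_c=2)
print(len(blocks))  # 2 blocks stored out of 4
print(y)            # [3.0, 7.0, 0.0, 5.0]
```

The stored block at position (1, 1) still contains zeros alongside the 5. That is the trade-off of block sparsity: some zeros get computed anyway, but whole tiles can be skipped with predictable memory access.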

And indeed, as you can see below, TVM can improve PruneBERT performance by 2.7x compared to a TVM-compiled dense model on an AWS c5a.4xlarge instance.

## Cost savings

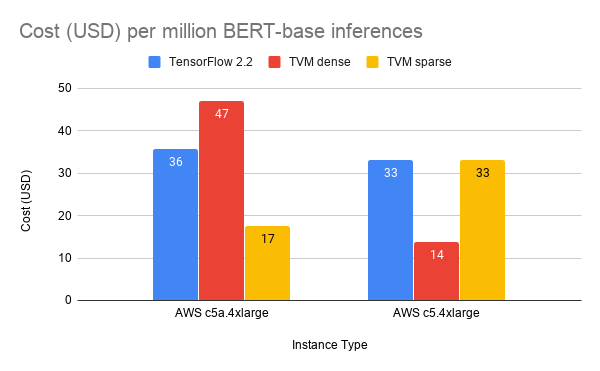

What’s nice about performance savings for models like these is that they translate immediately into cost savings as well. Here we take a look at the cost of running BERT-base-uncased on c5 and c5a instance types in the cloud and show the savings compared to dense compiled and dense uncompiled (TensorFlow and ONNX-Runtime) alternatives.

And if you run the numbers using these PruneBERT performance gains, this translates immediately into 2.7x cost savings, with the cost of a million BERT inferences on a c5a.4xlarge instance reduced from $47 to $17.
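The reason a speedup maps directly onto cost is that an instance is billed by time, so at a fixed hourly price the cost ratio equals the latency ratio. The sketch below shows that arithmetic. Only the $47 and $17 figures come from this post; the hourly price and latencies are placeholders and not measured values, and the helper assumes inferences run one after another on a single instance.

```python
def cost_per_million(hourly_price_usd, latency_ms):
    """Cost of one million sequential inferences on one hourly-billed instance."""
    hours = 1_000_000 * latency_ms / 1000 / 3600
    return hourly_price_usd * hours


# Figures quoted in the post.
dense_cost, sparse_cost = 47.0, 17.0
savings = dense_cost / sparse_cost
print(round(savings, 2))  # 2.76

# Placeholder price and latencies (hypothetical, for illustration only):
# a 2.7x faster model costs 2.7x less at the same hourly price.
price = 1.0
slow = cost_per_million(price, 27.0)
fast = cost_per_million(price, 10.0)
print(round(slow / fast, 2))  # 2.7
```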

## Next steps

We also compared the performance of TVM with TensorFlow 2.2 and included an additional Intel based instance type in the comparison. As you can see below, running PruneBERT on AMD Epyc based c5a.4xlarge using the block sparse kernel support in TVM is still 2x cheaper than running the same model through TensorFlow 2.2.

On Intel based c5.4xlarge instances the story is more complicated. While the performance of TVM is still much better than TensorFlow 2.2 (greater than 2x better performance and cost) we only see this using the dense compilation path and not the block sparse kernels. We plan on improving the sparse kernels for Intel architectures so that we can apply AutoTVM or even the [upcoming Autoscheduling](****) capabilities to get these compatible with the highly tuned Intel MKL based GEMM kernels.

## Summary

It was a blast working with HuggingFace on this project to demonstrate speedups to the transformer models that are changing the landscape of what is possible. If this piqued your interest, please feel free to follow along at home by following the TVM docs on how to compile your own sparse model. You can also check out the work of our friends at AWS on improving performance of (dense) BERT inference using TVM and Thomas Viehmann’s work on an initial path towards accelerating training with TVM.

If you’re interested in getting these kinds of performance improvements and cost savings automatically for your business, sign up here to hear about our services and get in line to try out the OctoML Platform for automated model optimization.