How a 4X Speedup on a Generative Video Model (FILM) Created Huge Cost Savings for WOMBO

Generative AI is the hottest workload on the planet, but it’s also the most compute-intensive, and therefore expensive to run. This puts startups building generative AI businesses in a tricky position. Not only must they deliver killer product experiences that grab attention and market share; they also need to make the economics work. To lower compute costs, generative AI models need to run faster and more efficiently on a more diverse set of hardware.

WOMBO: an OctoML customer story

WOMBO makes popular consumer apps for content creation using generative AI. Their apps use ML models like Stable Diffusion to help people create fun videos and images to share online.

Nearly 75 million people in more than 180 countries have downloaded the WOMBO app, making WOMBO one of the fastest-growing consumer apps in history. As at any generative AI startup, user growth translates directly into higher compute costs. With its GPU fleet nearing capacity, model efficiency gains became a top priority.

One model running in production was FILM (Frame Interpolation for Large Motion), an open-source model that predicts and generates intermediate frames between two existing frames in a video sequence. For premium WOMBO users, FILM generates a video clip showing their “transformation” into a celebrity or historical figure. The more intermediate frames generated between the two images, the better the final video, but the more costly and time-consuming it becomes to generate. Optimizing the model across hardware targets helps WOMBO balance user experience (faster, higher-quality video) against cost.
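To make the frame-count trade-off concrete: one common way to fill in many frames is recursive midpoint interpolation, where the model predicts the frame halfway between two inputs and is then applied again to each half. The sketch below assumes that scheme (the article does not say how WOMBO schedules FILM calls) and uses a hypothetical `midpoint` callable in place of the model. With recursion depth d it inserts 2^d - 1 frames and makes exactly that many model calls, so cost grows linearly with the number of inserted frames.

```python
from typing import Callable, List, TypeVar

Frame = TypeVar("Frame")


def interpolate_recursively(a: Frame, b: Frame, depth: int,
                            midpoint: Callable[[Frame, Frame], Frame]) -> List[Frame]:
    """Return [a, ..., b] with 2**depth - 1 frames inserted by recursive midpoint calls.

    `midpoint` is a hypothetical stand-in for one model inference (e.g. FILM at t=0.5).
    """
    if depth == 0:
        return [a, b]
    mid = midpoint(a, b)  # one model call per inserted frame
    left = interpolate_recursively(a, mid, depth - 1, midpoint)
    right = interpolate_recursively(mid, b, depth - 1, midpoint)
    return left[:-1] + right  # drop the middle frame, which appears in both halves
```

Doubling the depth budget by one roughly doubles both the number of frames and the inference time, which is why per-call latency dominates the cost of longer, smoother clips.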

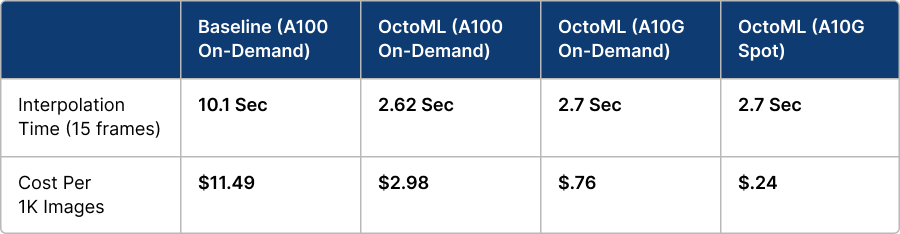

OctoML ran a series of experiments to optimize FILM on two different GPUs: NVIDIA A100 and A10G. We used the OctoML platform to compare a baseline version of FILM (TensorFlow) with several other optimized configurations.

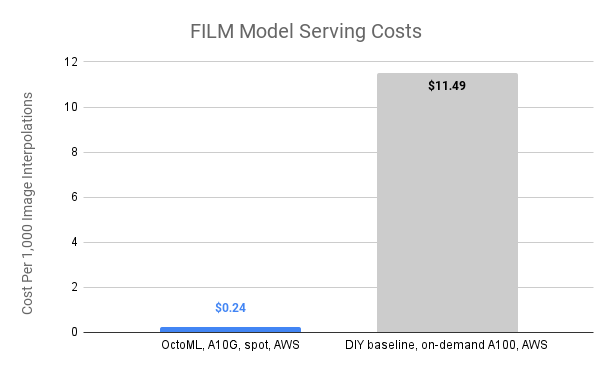

Cut model serving costs by 98% compared to baseline

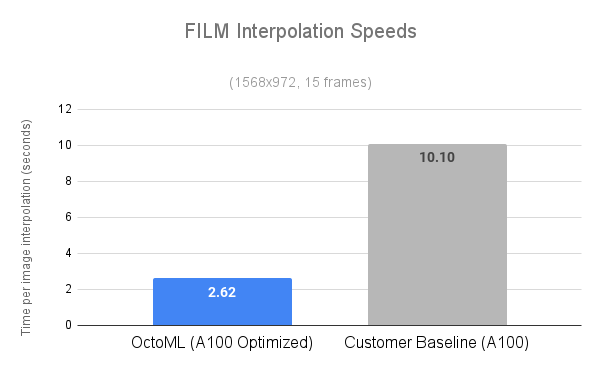

3.9x speedup on the FILM model over the baseline configurations

Reduced image-to-image interpolation (AKA transformation) time from 10.1 seconds to 2.6 seconds

Better speed makes for a nice user experience, but these efficiency gains also slash the compute cost per 1,000 image interpolations from $11.95 to $0.24. Assuming 10,000 clips are created each day, that’s the difference between annual model serving costs of $43,617.50 and $876.
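The headline figures can be reproduced from the numbers quoted above. The sketch below is only arithmetic on the article’s own figures (10.1 s vs 2.6 s per interpolation, $11.95 vs $0.24 per 1,000 interpolations, 10,000 clips per day); it assumes one interpolation per clip and a 365-day year, and the helper names are illustrative.

```python
def speedup(baseline_s: float, optimized_s: float) -> float:
    """Ratio of baseline latency to optimized latency."""
    return baseline_s / optimized_s


def percent_reduction(baseline: float, optimized: float) -> float:
    """Relative cost reduction as a percentage of the baseline."""
    return 100.0 * (1.0 - optimized / baseline)


def annual_cost(cost_per_1000: float, clips_per_day: int, days: int = 365) -> float:
    """Annual serving cost in USD, assuming one interpolation per clip."""
    return round(cost_per_1000 * clips_per_day / 1000 * days, 2)


if __name__ == "__main__":
    print(f"speedup: {speedup(10.1, 2.6):.1f}x")                        # speedup: 3.9x
    print(f"cost reduction: {percent_reduction(11.95, 0.24):.0f}%")     # cost reduction: 98%
    print(f"baseline: ${annual_cost(11.95, 10_000):,.2f} per year")     # baseline: $43,617.50 per year
    print(f"optimized: ${annual_cost(0.24, 10_000):,.2f} per year")     # optimized: $876.00 per year
```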

How OctoML Optimized FILM Workloads

OctoML's platform provides an automated pipeline to optimize ML models for various hardware configurations, making it an ideal choice for enhancing the FILM model's performance on NVIDIA GPUs.

One key aspect of the optimization process was running the FILM model through ONNX Runtime with the TensorRT execution provider. TensorRT is NVIDIA’s high-performance deep learning inference optimizer and runtime library. By using TensorRT as the execution provider for ONNX Runtime, WOMBO could take full advantage of its NVIDIA GPUs and achieve substantial speedups.
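As an illustration of what running an ONNX model on the TensorRT execution provider looks like, here is a minimal sketch using ONNX Runtime’s public Python API. It is not OctoML’s pipeline: the model path `film.onnx`, the engine-cache directory, and the choice to enable FP16 are assumptions. Providers are listed in priority order, so operators TensorRT cannot handle fall back to the CUDA provider and then to the CPU.

```python
from typing import Union

ProviderSpec = Union[str, tuple[str, dict]]


def build_providers(fp16: bool = True, cache_dir: str = "./trt_cache") -> list[ProviderSpec]:
    """Execution providers in priority order: TensorRT, then CUDA, then CPU."""
    trt_options = {
        "trt_fp16_enable": fp16,             # allow FP16 kernels (may change numerics slightly)
        "trt_engine_cache_enable": True,     # reuse built engines across process restarts
        "trt_engine_cache_path": cache_dir,  # hypothetical cache location
    }
    return [
        ("TensorrtExecutionProvider", trt_options),
        "CUDAExecutionProvider",
        "CPUExecutionProvider",
    ]


def load_session(model_path: str = "film.onnx", fp16: bool = True):
    """Create an ONNX Runtime session, keeping only providers this build supports."""
    import onnxruntime as ort  # imported here so build_providers() works without ORT installed

    available = set(ort.get_available_providers())
    providers = [
        p for p in build_providers(fp16)
        if (p[0] if isinstance(p, tuple) else p) in available
    ]
    session = ort.InferenceSession(model_path, providers=providers)
    print("active providers:", session.get_providers())
    return session
```

Building a TensorRT engine can take a long time on first use, which is why the sketch turns on engine caching; checking `session.get_providers()` confirms that TensorRT was actually picked up rather than silently skipped.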

Notably, the data show major cost benefits to running this workload on an A10G instance. Not only is the cost drastically lower, but the runtimes are nearly the same as on the A100. A10Gs are also abundant in many global regions and more readily available than A100s and similar supersized GPUs.
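The reasoning here is simple arithmetic: cost per request is latency multiplied by the instance’s price per second, so a cheaper GPU with similar latency wins. The sketch below makes that explicit. It assumes one request at a time per GPU, the `GpuOption` type is hypothetical, and the hourly prices are inputs you supply, not figures from this study.

```python
from dataclasses import dataclass


@dataclass
class GpuOption:
    name: str
    latency_s: float   # measured seconds per interpolation
    hourly_usd: float  # price per GPU-hour (supply your own; not from the article)

    def cost_per_1000(self) -> float:
        """Cost of 1,000 sequential interpolations on one GPU."""
        return self.latency_s * 1000 / 3600 * self.hourly_usd


def cheapest(options: list[GpuOption]) -> GpuOption:
    """Pick the option with the lowest cost per 1,000 interpolations."""
    return min(options, key=lambda o: o.cost_per_1000())
```

Under this model, when two GPUs have nearly identical latency, the ratio of their costs reduces to the ratio of their hourly prices, which is the effect the A10G comparison describes.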

The integration process involved converting the FILM model to the ONNX format and using OctoML’s platform to optimize it for the specific target GPUs, the NVIDIA A10G and A100. OctoML applied various optimization techniques, such as kernel tuning, layer and tensor fusion, and quantization, to maximize performance on the target hardware. OctoML also delivered the optimized FILM model with dynamic image shape support, which was an important requirement for WOMBO.
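Dynamic image shapes matter for TensorRT because an engine is optimized for a declared range of input shapes (an optimization profile). ONNX Runtime’s TensorRT execution provider accepts explicit min/opt/max profiles through the `trt_profile_min_shapes`, `trt_profile_opt_shapes`, and `trt_profile_max_shapes` options in recent releases. The sketch below only builds those option strings. The input names `x0`/`x1`, the NHWC layout, and the resolution bounds are assumptions for illustration; the article does not say how OctoML implemented dynamic-shape support.

```python
Shape = tuple[int, ...]


def format_profile(shapes: dict[str, Shape]) -> str:
    """Format {"x0": (1, 256, 256, 3)} as "x0:1x256x256x3", comma-separated per input."""
    return ",".join(
        f"{name}:{'x'.join(str(d) for d in dims)}" for name, dims in shapes.items()
    )


def image_shape_profiles(inputs=("x0", "x1"), min_hw=(256, 256), opt_hw=(512, 512),
                         max_hw=(1024, 1024), channels=3) -> dict[str, str]:
    """TensorRT EP options covering a range of image sizes (hypothetical names and bounds)."""
    def shapes(hw):
        return {name: (1, hw[0], hw[1], channels) for name in inputs}  # NHWC, batch size 1

    return {
        "trt_profile_min_shapes": format_profile(shapes(min_hw)),
        "trt_profile_opt_shapes": format_profile(shapes(opt_hw)),
        "trt_profile_max_shapes": format_profile(shapes(max_hw)),
    }
```

The returned dict can be merged into the TensorRT provider’s options before the session is created. In practice every input with dynamic dimensions generally needs an entry, so a real export may require more names than shown here.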

Once the optimizations were completed, OctoML and WOMBO.ai collaborated on testing the optimized FILM model to ensure it met the performance and quality requirements. This step involved benchmarking the model on NVIDIA A10G and A100 GPUs, comparing the results with the original implementation, and verifying that the dynamic image shape support was working as intended.
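Benchmarking an optimized model against the original usually involves two checks: latency measured after a warm-up (so one-time costs such as engine builds and memory allocation are excluded) and agreement between the outputs. A minimal, framework-agnostic sketch follows. `infer` is a hypothetical callable that runs one interpolation, and PSNR is used here as an illustrative quality metric, not necessarily the one OctoML and WOMBO used.

```python
import math
import statistics
import time
from typing import Callable, Sequence


def median_latency_ms(infer: Callable[[], object], warmup: int = 3, runs: int = 20) -> float:
    """Median wall-clock latency of infer() in milliseconds, after warm-up runs."""
    for _ in range(warmup):
        infer()  # exclude one-time costs such as engine builds and allocations
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def psnr(reference: Sequence[float], candidate: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio between two flattened images with pixels in [0, max_value]."""
    if len(reference) != len(candidate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    return math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)
```

For GPU runtimes, `infer` should return only after results are available on the host; otherwise the timer measures kernel launch rather than execution.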

Summary

Optimization of FILM leads to a better user experience as users receive their artwork in a shorter amount of time.

The capacity increase helps the platform accommodate a growing user base and provide a more responsive and seamless experience.

By utilizing fewer resources to achieve the same or better performance, WOMBO.ai can manage its infrastructure costs more effectively and increase margins.

With more efficient resource usage and faster processing times, WOMBO can handle more users without compromising the quality of AI-generated artwork.

If you want to unlock these kinds of benefits without becoming an ML expert, sign up for early access to the OctoML AI compute service. We’re building an efficient compute layer that’s as easy to use as OpenAI, but flexible to run with any model.