From GANs to Stable Diffusion: The History, Hype, & Promise of Generative AI

Emerging deep learning technologies have sparked public debates about a new era of automation: Will self-driving cars replace human drivers? Can image segmentation and classification models diagnose tumors better than doctors? Will speech-to-text and text-to-speech models usher in a new kind of customer service?

The latest ML technology to grab attention is generative AI. The past couple of years have seen a meteoric rise of text-to-image models such as OpenAI's DALL-E 2, Google Brain's Imagen, Midjourney and Stable Diffusion. The initial wave of use cases for these models revolved around artistic image generation with millions of early adopters trying out various text prompts to see what the AI could dream up. But as the technology matures, we will see entirely new implementations of this groundbreaking technology. In our new blog series covering generative AI, today we’ll start with a quick technical introduction to image generation models.

Look for our upcoming articles that will cover generative AI topics.

What are generative models?

Several types of unsupervised artificial neural networks can generate data (text, audio, images, etc.) from the latent, or hidden, representations that they learn, such as:

Autoencoders

Generative adversarial networks (GANs)

Diffusion models

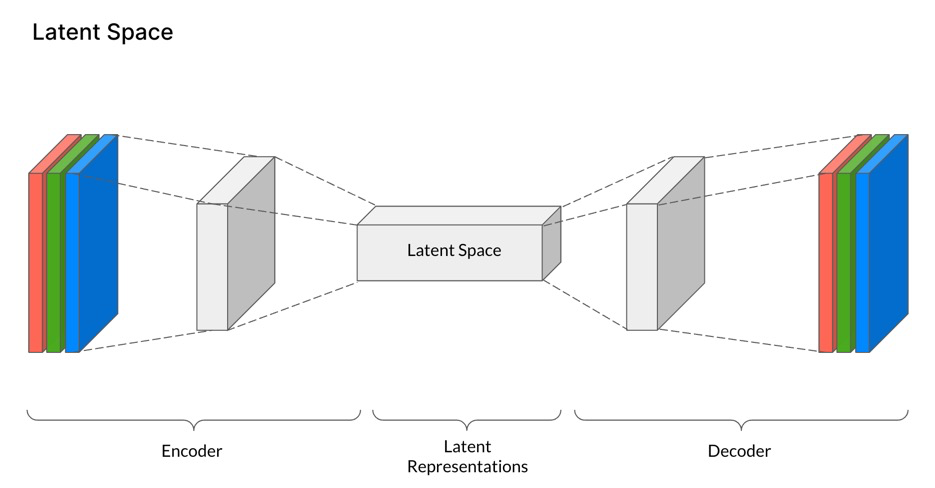

Latent representations encode a meaningful internal representation of externally observed events by reducing the dimensionality of the input data into simpler representations. Transforming a large image into latent space is like reducing the width and height of the image and compressing it down to a 64 x 64 abstract representation. This makes the representation in compressed, latent space more convenient to process and analyze. The goal of training is to extract the most relevant features from the original image into hidden representations in latent space.

Autoencoders

The first generative models of the past decade used autoencoders and even today some of the most powerful generative models use autoencoders stacked inside their deep neural networks. Autoencoders are made of two parts: first an encoder maps the input data to a code, then a decoder reconstructs the original data from the code. Optimal autoencoders perform as close to perfect reconstruction as possible. Generative autoencoders trained on images of human faces would generate new life-like faces.
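To make the encoder/decoder split concrete, here is a minimal sketch of a linear autoencoder in plain Python. It is a toy under stated assumptions, not how production models are built: the 2-D data, the 1-D code, the learning rate and the function names (`encode`, `decode`, `train`) are all illustrative choices of ours.

```python
def encode(w, x):
    """Encoder: compress a 2-D input into a single number (the code)."""
    return w[0] * x[0] + w[1] * x[1]


def decode(v, code):
    """Decoder: reconstruct a 2-D output from the 1-D code."""
    return [v[0] * code, v[1] * code]


def reconstruction_error(w, v, data):
    """Mean squared reconstruction error over the dataset."""
    total = 0.0
    for x in data:
        r = decode(v, encode(w, x))
        total += (r[0] - x[0]) ** 2 + (r[1] - x[1]) ** 2
    return total / len(data)


def train(data, lr=0.01, epochs=2000):
    """Fit encoder weights w and decoder weights v with per-sample gradient descent."""
    w = [0.1, 0.1]
    v = [0.1, 0.1]
    for _ in range(epochs):
        for x in data:
            code = encode(w, x)
            r = decode(v, code)
            err = [r[0] - x[0], r[1] - x[1]]
            # Gradient of the squared error with respect to the code,
            # computed before the decoder weights are updated.
            grad_code = 2.0 * (err[0] * v[0] + err[1] * v[1])
            v = [v[0] - lr * 2.0 * err[0] * code,
                 v[1] - lr * 2.0 * err[1] * code]
            w = [w[0] - lr * grad_code * x[0],
                 w[1] - lr * grad_code * x[1]]
    return w, v


# Toy data: every point lies on the line y = 2x, so one number is enough
# to describe each point and a 1-D code can reconstruct it.
data = [[x, 2.0 * x] for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
error_before = reconstruction_error([0.1, 0.1], [0.1, 0.1], data)
w, v = train(data)
error_after = reconstruction_error(w, v, data)
print(error_before, error_after)
```

Because the toy data has only one real degree of freedom, the 1-D code loses nothing and the reconstruction error falls close to zero. Real image autoencoders use deep non-linear networks, but the objective is the same.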

GANs

Next came the era of GANs. They were first proposed by Ian Goodfellow in 2014, and just two years later Yann LeCun, Chief AI Scientist at Meta, called the idea “the most interesting idea in the last 10 years in machine learning”. GANs are composed of two dueling neural networks: one network, the generator, tries its best to generate realistic images, and another network, the discriminator, tries to determine whether each image is real or fake.
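The duel between the two networks can be written down as a pair of opposing loss functions. The sketch below assumes the discriminator outputs a probability that an image is real, and it uses the commonly used "non-saturating" generator loss. This article does not tie GANs to one specific loss, so treat the function names and the exact formulation as our assumptions.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator's probability that a real image is real.
    d_fake: discriminator's probability that a generated image is real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)


# A discriminator that is winning (confident and correct) ...
print(discriminator_loss(0.9, 0.1), generator_loss(0.1))
# ... versus one that is being fooled by the generator.
print(discriminator_loss(0.6, 0.9), generator_loss(0.9))
```

Training alternates between lowering the first loss and lowering the second. Progress for one network is a setback for the other, which is one reason GAN training can be unstable.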

The back and forth processing required for GANs was time consuming - even in 2017 it could take days for a GAN to generate a realistic face. Another drawback of GANs is that they are notoriously difficult and sensitive to train, suffering from failure to converge and mode collapse, where the generator starts preferring to generate only one or a few outputs that it knows have historically fooled the discriminator. GANs are still an active field of research and some recent developments such as new cost functions, experience replay to reduce overfitting and mini-batch discrimination to address mode collapse, have alleviated some of the difficulties of deploying them. Nowadays GANs have a wide range of use cases including super resolution, face aging, background replacement, next frame prediction for videos and more.

Diffusion models

Diffusion models are the latest incarnation of generative models - they can generate a wider variety and higher quality of images than GANs. The original idea was inspired by nonequilibrium thermodynamics. As stated in the 2015 paper introducing diffusion models, “The essential idea… is to systematically and slowly destroy structure in a data distribution through an iterative forward diffusion process. We then learn a reverse diffusion process that restores structure in data, yielding a highly flexible and tractable generative model of the data.” The goal of these models is to learn the latent or hidden structure of an input dataset by modeling the way data diffuses through the latent space.

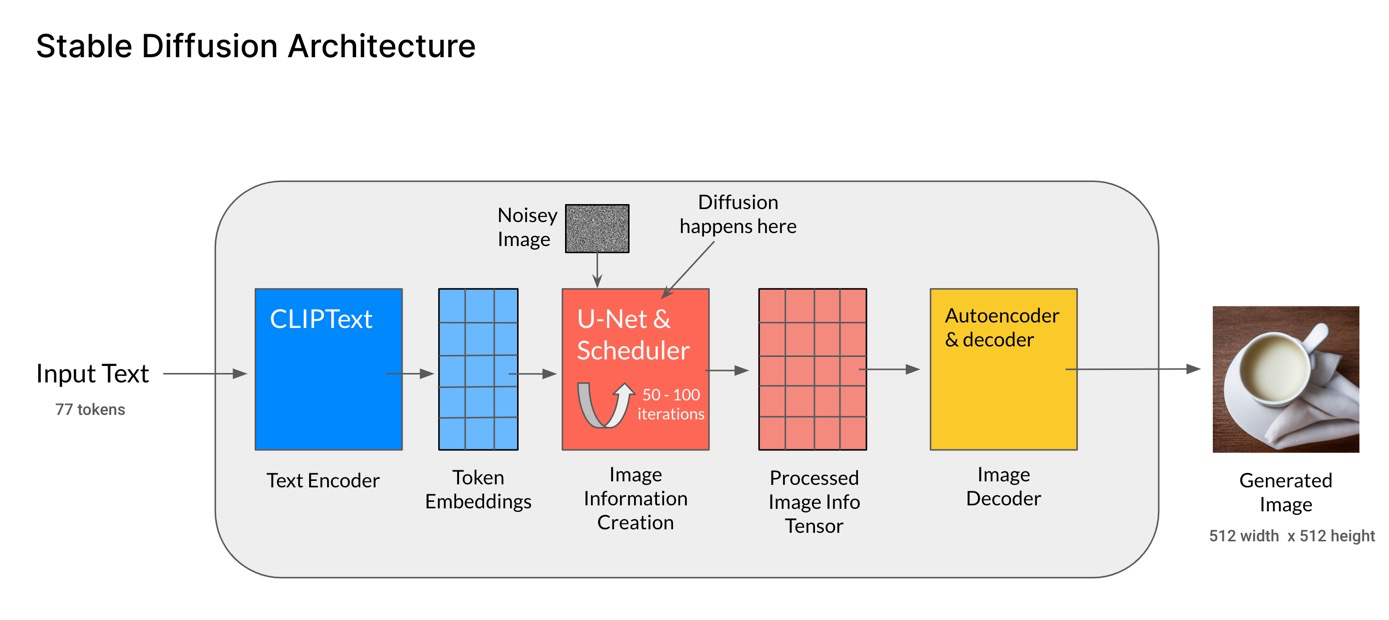

When applied to computer vision, diffusion models are trained to denoise images corrupted with Gaussian noise, that is, noise whose probability density function is the normal (bell-shaped) distribution. During training, noise is progressively added to an image, wiping out details until the image becomes pure noise. The model then learns to reverse this corruption process and re-synthesize the image by gradually denoising it until a clean image is produced. Denoising diffusion models like Stable Diffusion are therefore trained to add and remove small amounts of noise from images in repeated steps. During inference, or image generation, the model takes the text prompt and, starting with a randomly generated noisy image in latent space (64 x 64 by default), gradually denoises it until a predefined number of steps is reached, typically 50 - 100. At the end, an image decoder transforms the denoised 64 x 64 latent image into a 512 x 512 human-viewable image. Because the denoising network (a U-Net in Stable Diffusion) runs at every one of these steps, it is where most of the processing happens, so the key to accelerating diffusion models is to focus on optimizing the U-Net.
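The noising half of this process is simple enough to sketch in a few lines. The example below assumes a linear noise schedule with 1,000 steps and the closed-form mix of signal and noise used in denoising diffusion papers. The schedule values and function names are illustrative assumptions, not Stable Diffusion's actual configuration.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_signal(betas):
    """Running product of (1 - beta): the fraction of signal variance left at each step."""
    remaining, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        remaining.append(product)
    return remaining


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: sqrt(a) * x0 + sqrt(1 - a) * Gaussian noise."""
    a = alpha_bars[t]
    return [math.sqrt(a) * value + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for value in x0]


rng = random.Random(0)
alpha_bars = cumulative_signal(linear_beta_schedule(1000))
clean = [1.0, -1.0, 0.5, 0.0]               # stand-in for image (or latent) values
slightly_noisy = add_noise(clean, 10, alpha_bars, rng)
pure_noise = add_noise(clean, 999, alpha_bars, rng)
print(alpha_bars[0], alpha_bars[-1])
```

Early steps keep almost all of the signal, while by the last step almost none is left, which matches the "image becomes pure noise" description. The learned part, omitted here, is the network that predicts the noise so it can be removed step by step.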

Taking a step back, the fundamental idea behind diffusion models is similar to a drop of milk diffusing in a cup of tea. A diffusion model is trained to learn how to reverse this. It starts from a mixed state with the milk in the tea and learns how to slowly unmix the milk from the tea.

Image output from Stable Diffusion for the prompt “a drop of milk diffusing into a cup of tea” with 52 steps and guidance scale of 7.5
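The "guidance scale" in the caption above controls how strongly each denoising step is pushed toward the text prompt. A minimal sketch of the usual classifier-free guidance rule follows. The function name and the toy noise values are our assumptions, and in a real pipeline both noise predictions come from the denoising network.

```python
def apply_guidance(noise_uncond, noise_cond, guidance_scale=7.5):
    """Combine unconditional and prompt-conditioned noise predictions.

    A scale of 0 ignores the prompt, a scale of 1 uses the conditioned
    prediction as-is, and larger values extrapolate further toward the prompt.
    """
    return [u + guidance_scale * (c - u)
            for u, c in zip(noise_uncond, noise_cond)]


# Toy predictions for a 3-value "image".
uncond = [0.0, 0.5, 1.0]
cond = [1.0, 0.5, 0.0]
print(apply_guidance(uncond, cond, guidance_scale=7.5))
```

Higher scales generally follow the prompt more literally at the cost of variety, which is why a moderate value such as 7.5 is a common default.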

By 2022, diffusion models started stealing headlines with their uncanny ability to generate sophisticated, high quality images. One of the most important breakthroughs that contributed to diffusion models surpassing GANs’ ability to generate images was the introduction of latent diffusion models in December 2021, where the diffusion happens in latent space instead of pixel space. These types of diffusion models use an autoencoder to compress the training images into a much smaller latent space, where diffusion happens. The autoencoder is then used to decompress the final latent representation, which generates the output image. By running the repeated diffusion steps in the much smaller latent space rather than in pixel space, the latest generation of diffusion models reduces generation time and cost significantly.
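A quick back-of-the-envelope calculation shows why working in latent space is cheaper. The 512 x 512 output and 64 x 64 latent sizes come from the text above. The channel counts (3 for an RGB image, 4 for the latent) are our assumption, based on the publicly released Stable Diffusion v1 models.

```python
def num_values(height, width, channels):
    """Number of values the denoiser has to process at each step."""
    return height * width * channels


pixel_values = num_values(512, 512, 3)    # RGB image in pixel space
latent_values = num_values(64, 64, 4)     # assumed 4-channel latent
print(pixel_values, latent_values, pixel_values / latent_values)
# prints: 786432 16384 48.0
```

Under these assumptions every one of the 50 - 100 denoising steps touches 48 times fewer values than it would in pixel space.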

Beyond generating impressive images from text prompts, diffusion models can also be guided via additional input images to enable new AI applications. For example, outpainting extends an image beyond its borders, while inpainting fills in masked-out areas of an image with something else based on a text prompt.
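One simple way to picture inpainting is as a mask that decides, value by value, whether to keep the original image or take the newly generated content. The sketch below shows only that blending idea on tiny 2-D grids. Real pipelines apply the mask during the denoising steps, and the function name here is hypothetical.

```python
def blend_inpainting(original, generated, mask):
    """Keep original values where mask is 0, use generated values where mask is 1."""
    return [
        [gen if m else orig for orig, gen, m in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


original = [[1, 1, 1],
            [1, 1, 1]]
generated = [[9, 9, 9],
             [9, 9, 9]]
mask = [[0, 1, 1],      # 1 marks the masked-out area to be repainted
        [0, 0, 1]]
print(blend_inpainting(original, generated, mask))
# prints: [[1, 9, 9], [1, 1, 9]]
```

Outpainting can be viewed the same way: the canvas is enlarged and the mask covers the new border area instead of a hole in the middle.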

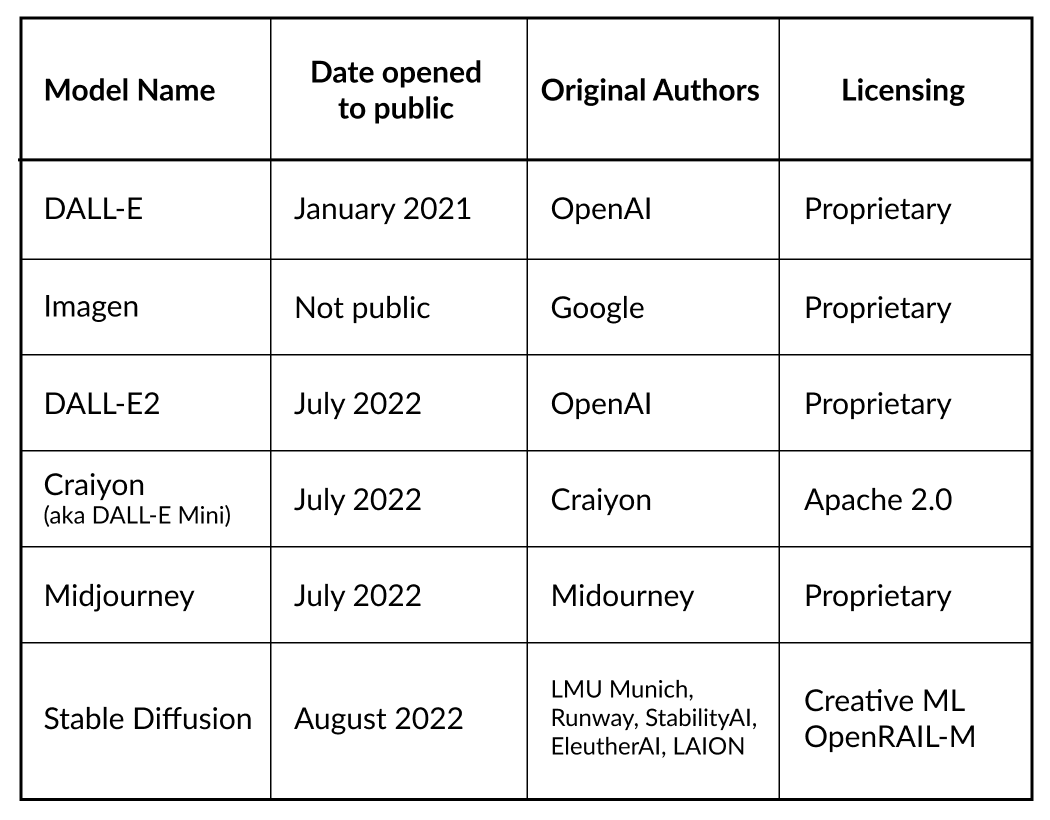

By far, the most famous latent diffusion model is Stable Diffusion, which was open sourced in August 2022 from a collaboration between LMU Munich, Runway, StabilityAI, EleutherAI and LAION.

Explosion of generative AI text-to-image models

Most of the new image generation models use diffusion, including DALL-E 2, Imagen and Stable Diffusion. In fact, Google’s Imagen uses a cascading series of diffusion models to first map the input text prompt into a 64x64 image, then apply a couple of super-resolution models to upscale the image to 256x256 and 1024x1024.
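The cascade described above is easy to sketch as a pipeline of stages. In the example below, plain nearest-neighbour upscaling is a stand-in of ours for the learned super-resolution diffusion models, so only the shape of the pipeline (64, then 256, then 1024) reflects the description, not the image quality.

```python
def upscale_nearest(image, factor):
    """Nearest-neighbour upscaling of a 2-D grid (placeholder for a super-resolution model)."""
    upscaled = []
    for row in image:
        wide_row = [value for value in row for _ in range(factor)]
        upscaled.extend(list(wide_row) for _ in range(factor))
    return upscaled


def cascade(base_image, factors=(4, 4)):
    """Run the base image through each upscaling stage and record the sizes."""
    image = base_image
    sizes = [len(image)]
    for factor in factors:
        image = upscale_nearest(image, factor)
        sizes.append(len(image))
    return image, sizes


base = [[0.0] * 64 for _ in range(64)]    # stand-in for the 64x64 base model output
final_image, sizes = cascade(base)
print(sizes)
# prints: [64, 256, 1024]
```

Splitting the work this way lets the text-conditioned base model operate on a small image, leaving detail to the later stages.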

The table below tracks the rapid progress of generative image models:

A key difference between these models is whether they are proprietary or open source. Most are proprietary and require a contractual agreement and pricing structure with the creator of the model. These models provide their customers with APIs to call into the model; the actual image generation takes place on servers owned or managed by the publisher. Just earlier this week, OpenAI launched the DALL-E API for developers to programmatically integrate DALL-E into their applications.

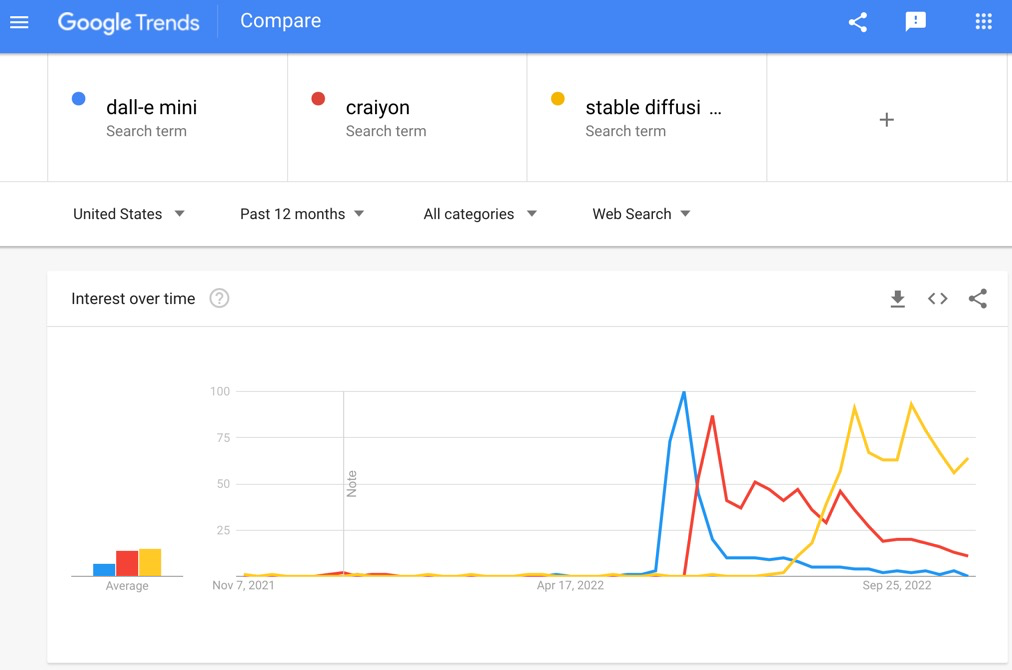

Craiyon and Stable Diffusion stand out in the table as the two open source contenders. Developers interested in integrating image generation models into their applications have shown significant interest in the open source variants.

Furthermore, updated versions of older models or newer models with better results can quickly dethrone previous models. For example, only a couple of months after Stable Diffusion’s v1.4 release, an updated version of the model was released to great fanfare: Stable Diffusion v1.5, which was trained with 370,000 more steps. Additionally, the latest Diffusers package v0.7 includes performance updates such as FP16 and Flash Attention, a technique that allows for much faster image generation. Other times, new model architectures can overtake the mind share of previous models. Here is a Google Trends chart showing the rise and fall of dall-e mini and craiyon searches this summer, followed by the rise of stable diffusion searches this fall:

Overcoming technical limitations of image generation models

Importance of training data

Each diffusion model’s training set, model architecture and language component make it excel in some use cases but struggle in others. Text-to-image models are particularly data hungry, requiring massive datasets. One of the few ways to gather such a large dataset is to scrape the non-curated web for images with paired text, as the LAION-400M dataset does using random web pages from Common Crawl that were crawled between 2014 and 2021. LAION’s datasets are used by Imagen (400 million images) and Stable Diffusion (5 billion images). Upgrading data sets with new model version releases, or training with more steps on the same data, is one of the primary contributors to a newer model’s prowess: training for Stable Diffusion v1.4 was resumed from the v1.2 checkpoint with an additional 225,000 steps, while Stable Diffusion v1.5 was also resumed from the v1.2 checkpoint but with an additional 595,000 steps.

Such an approach to gathering 413 million image-text pairs comes with inherent limitations and biases - the internet contains toxic language, nude or violent imagery, and harmful social stereotypes. As a consequence, these harmful biases may have been encoded into the models. This doesn’t necessarily restrict adoption of these models, since safety filters can be added for text prompts (limiting the model’s output) and models can be retrained on country-specific or industry-specific custom data sets, which can remove some of the biases for the model’s intended use case.
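As a rough illustration of a text-prompt safety filter, the sketch below rejects prompts that contain any word from a blocklist. This is a deliberately naive, hypothetical example: the blocklist contents and function names are ours, and production filters typically rely on trained classifiers rather than exact word matches.

```python
import re


def find_blocked_terms(prompt, blocked_terms):
    """Return the blocked words that appear in the prompt (case-insensitive)."""
    words = set(re.findall(r"[a-z0-9']+", prompt.lower()))
    return sorted(words & {term.lower() for term in blocked_terms})


def is_prompt_allowed(prompt, blocked_terms):
    """A prompt passes only if it contains none of the blocked words."""
    return not find_blocked_terms(prompt, blocked_terms)


BLOCKED_TERMS = {"gore", "nude"}          # hypothetical blocklist
print(is_prompt_allowed("a zebra on a field", BLOCKED_TERMS))
print(find_blocked_terms("A NUDE portrait with gore", BLOCKED_TERMS))
```

A filter like this runs before the model is ever called, so rejected prompts cost nothing to generate. It misses plurals, misspellings and paraphrases, though, which is why it should be seen only as a first line of defense.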

Craiyon, which was trained on a single TPU for 3 days using only 30 million images from Conceptual and YFCC100M datasets, creates less photo-realistic images than the larger models. Midjourney has not published any details about which datasets were used to train its generative model, and recently came under fire for potentially using copyrighted artists’ work in their training set. When asked about training data sets by Forbes in Sept 2022, Midjourney’s CEO replied: “It’s just a big scrape of the Internet. We use the open data sets that are published and train across those. And I’d say that’s something that 100% of people do. We weren’t picky.” OpenAI announced a strategic partnership in Oct 2022 with Shutterstock and OpenAI’s CEO, Sam Altman, stated that “The data we licensed from Shutterstock was critical to the training of DALL-E”.

Model architecture

The architectural choices and design decisions at every stage of the model creation process can also bring their own biases or preferences into the final created image. For example, DALL-E 2, Midjourney, Craiyon, Stable Diffusion and Imagen all use variants of OpenAI’s pre-trained CLIP neural network to select the best images from the randomly generated samples by scoring them. CLIP was trained on OpenAI’s newly created dataset of 400 million (image, text) pairs collected from a variety of publicly available sources on the Internet. The CLIP paper devotes several pages to discussing the model’s biases. For example, 16.5% of male images were misclassified into classes related to crime, compared to 9.8% of female images. CLIP also attached labels describing high-status occupations disproportionately: men were more often classified as ‘executive’ and ‘doctor’, and of the only four occupations that it attached more often to women, three were ‘newscaster’, ‘television presenter’ and ‘newsreader’.
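The scoring step can be pictured as ranking candidate images by how closely their embeddings match the embedding of the prompt. The sketch below uses cosine similarity on tiny hand-made vectors. In practice the embeddings would come from CLIP's text and image encoders, which are not shown here, and the function names are illustrative.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_candidates(text_embedding, image_embeddings):
    """Return candidate indices ordered from best to worst match with the text."""
    scored = [(cosine_similarity(text_embedding, emb), index)
              for index, emb in enumerate(image_embeddings)]
    scored.sort(reverse=True)
    return [index for _, index in scored]


text_embedding = [1.0, 0.0]               # toy stand-in for the prompt embedding
image_embeddings = [[0.0, 1.0],           # unrelated image
                    [1.0, 0.1],           # close match
                    [0.5, 0.5]]           # partial match
print(rank_candidates(text_embedding, image_embeddings))
# prints: [1, 2, 0]
```

Because the ranking depends entirely on the embeddings, any bias in how CLIP associates text with images carries over directly into which candidates are selected.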

Be aware of pros and cons of various models

Some of the inherent biases in these models have been responsibly pointed out by the creators of the models themselves. Craiyon mentions in its limitations and biases that its model tends to output people who we perceive to be white, while people of color are underrepresented. It also notes that faces and people are not generated properly (think 6 fingers or 3 knees) and that animals are usually unrealistic. OpenAI has completed some of the most extensive research into DALL-E 2 biases. DALL-E 2 tends to produce images that overrepresent white people and western concepts (wedding and restaurant prompts generate western styles of both). Gender stereotypes are also present, where flight attendants are represented as women, while builders are represented as men. The creators of most other models, such as Imagen and Stable Diffusion, report similar biases. Model developers are looking at creative solutions, such as re-training models with specialized country-specific or industry-specific datasets, to remove some of these biases.

The current generation of diffusion models can also struggle to understand the nuances of some language prompts, such as the layered composition of certain concepts and relationships between objects. Some of the discovered limitations of DALL-E 2 include prompts that involve more than three objects, negation, numbers and connected sentences - all of which may result in mistakes and object features applied to the wrong object. The model also has difficulty rendering text into the image, which often appears as dream-esque gibberish.

The creators of Google’s Imagen have acknowledged flaws in its image generation with counts. Although Imagen can easily generate a “zebra on a field”, it cannot count properly to generate “10 zebras on a field”. Imagen also fails at understanding positional relationships. On the other hand, Imagen excels at merging concepts such as generating a hybrid “capybara and zebra”.

When deciding which generative image model to select for your use case, experimenting with various prompts for each model can provide guidance on which model to select. Midjourney, for example, is highly lauded for its ability to create fantasy and sci-fi stylized images with dramatic lighting that looks like rendered video game art. Imagen, on the other hand, strives for photorealism. Over the past few months, many users have posted about their experiments with using the same prompt with different models, such as this comparison between Craiyon and Stable Diffusion.

Being aware of the nuances of each model offers opportunities for application developers to overcome these limitations and differentiate their offerings in the market from the competition.

Next up in our generative AI series

We hope this primer on image generation has sparked your interest in this emerging field. If you’re interested in deploying models like Craiyon or Stable Diffusion to production, contact us to discuss how OctoML can help you package your trained model into a Docker container and accelerate it for peak inference performance and ML infrastructure cost savings.