Apache TVM democratizes efficient machine learning with a unified software foundation. OctoML is building an MLops automation platform on top of it.

Machine learning models are an integral part of modern applications, from games and productivity tools to personalized medicine and drug discovery. While there has been significant progress in core machine learning techniques and in tools for data management and model creation, a large gap remains between creating a model and deploying it in production.

We often hear in our customer interactions that “data scientists have to be paired with software engineers to deploy ML models effectively”. This is a significant pain point that leads to longer time-to-deployment and higher costs. By analogy with DevOps (the practice of managing software development and deployment), “MLops” refers to the process of creating, training, optimizing, packaging, integrating and deploying machine learning models. A good DevOps process lets teams update and deploy applications in minutes; by comparison, deploying ML models often takes months. MLops needs attention.

First, ML models aren’t just code. They consist of a high-level specification of the model architecture plus a significant amount of data in the form of parameters learned during training. The high-level specification must be carefully translated into executable code, and the parameters must be placed strategically in the memory hierarchy to maximize reuse and performance. That creates significant dependencies on the ML framework (e.g., TensorFlow, PyTorch, MXNet, Keras, …), on lower-level system parameters and on the code infrastructure. To make things more complicated, ML workloads are very compute-hungry, which makes performance tuning a necessary step. The end result is that taking one model into production on one hardware platform requires significant manual work and careful tuning.

Second, ML models are hard to port. ML software stacks are heavily fragmented both at the data science framework level (TensorFlow, PyTorch, MXNet, Keras, …) and at the systems software level needed for production deployment (NVIDIA cuDNN, Arm Compute Library, NNPACK, Intel’s MKL-DNN). The portability problem is only getting harder because of the fast-growing set of hardware targets to consider: microcontrollers, mobile and server CPUs, mobile and server GPUs, FPGAs, and an ever-growing number of ML accelerators. Today, each of these hardware targets requires manual tuning of low-level code to reach reasonable performance, and that tuning has to be redone as models evolve. The largest tech companies on earth solve this problem by throwing resources at it, but that is not a sustainable solution even for them, and it is not an option at all for most organizations.

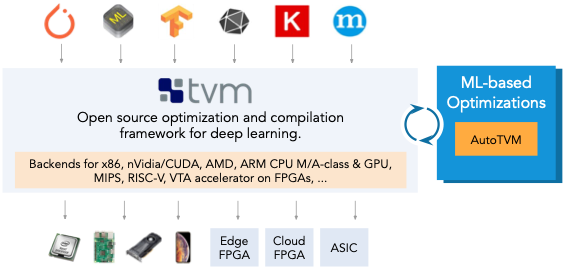

Apache TVM: A foundation for building a model once and running anywhere

Apache TVM aims to solve exactly this problem: making models portable automatically, without hand tuning. The “magic” lies in its use of machine learning to optimize code generation. Since it cannot rely on human intuition and experience to pick the right parameters for model optimization and code generation, it *searches* for them efficiently, using a model that predicts how the hardware target will perform with each option.
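To make the idea of model-guided search more concrete, here is a minimal sketch in Python. It is not TVM's API or its actual cost model: the function names (`measure_on_hardware`, `predict_cost`, `tune`), the tile-size/unroll search space, and the analytic "hardware" cost formula are all hypothetical stand-ins, and a simple k-nearest-neighbour regressor substitutes for the learned model a real tuner would use. The loop measures a few random configurations, then repeatedly asks the model which untested configurations look fastest and measures only those.

```python
import itertools
import math
import random


# Hypothetical stand-in for compiling a kernel and timing it on a device.
# A real tuner would run the generated code; this made-up formula just
# pretends that 32x16 tiles with an unroll factor of 4 are fastest.
def measure_on_hardware(config):
    tile_x, tile_y, unroll = config
    return abs(tile_x - 32) + abs(tile_y - 16) + 2 * abs(unroll - 4) + 1.0


# Toy cost model (k-nearest-neighbour regression): predict a config's cost
# as the mean measured cost of the k most similar configs measured so far.
def predict_cost(config, history, k=3):
    def distance(a, b):
        # Parameters are powers of two, so compare them on a log2 scale.
        return sum(abs(math.log2(x) - math.log2(y)) for x, y in zip(a, b))

    nearest = sorted(history, key=lambda item: distance(config, item[0]))[:k]
    return sum(cost for _, cost in nearest) / len(nearest)


def tune(candidates, budget=40, initial=8, batch=4, seed=0):
    rng = random.Random(seed)
    pool = list(candidates)
    rng.shuffle(pool)

    # Seed the model with a few randomly chosen measurements.
    history = [(c, measure_on_hardware(c)) for c in pool[:initial]]
    remaining = pool[initial:]

    while len(history) < budget and remaining:
        # Rank untested configs by predicted cost; measure only the best few.
        remaining.sort(key=lambda c: predict_cost(c, history))
        take = min(batch, budget - len(history))
        chosen, remaining = remaining[:take], remaining[take:]
        history.extend((c, measure_on_hardware(c)) for c in chosen)

    best = min(history, key=lambda item: item[1])
    return best, history


powers_of_two = [2 ** i for i in range(8)]  # 1 .. 128
candidates = list(itertools.product(powers_of_two, powers_of_two, [1, 2, 4, 8]))
(best_config, best_cost), history = tune(candidates)
print(f"best {best_config} (cost {best_cost}) after "
      f"{len(history)} of {len(candidates)} candidates measured")
```

The design point the sketch illustrates is that real measurements are expensive, so the predictive model filters the 256 candidates and only 40 of them are ever actually timed.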

Apache TVM has been deployed in major systems for several years and has a thriving community of 410+ contributors from major software and services companies, hardware vendors and academia. TVM has been shown to be effective in deploying models at scale, on multiple platforms, with performance gains ranging from 3x to 85x (see the talks from Amazon, Facebook and Microsoft at the last <a href="https://tvmconf.org/" target="_blank">TVM Conference</a>).

Chances are that applications you use daily already contain ML models optimized and compiled with TVM. While TVM is powerful, it is still fairly complex for broad use. We want to simplify the use of TVM and democratize efficient machine learning.

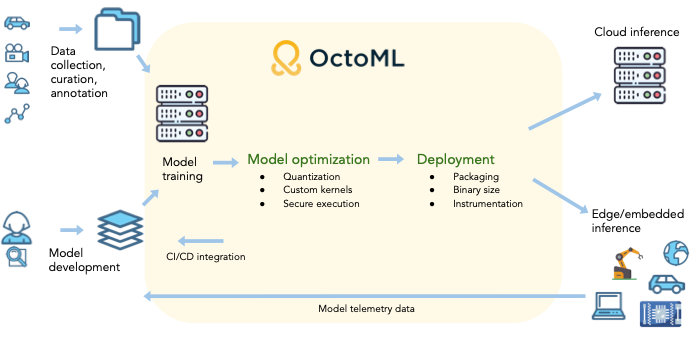

Making portable and efficient ML accessible to everyone via the Octomizer MLops automation platform

We are building the OctoML platform around TVM to make it very easy for any data engineer to optimize, compile and deploy models on edge devices and in the cloud. This enables users to:

Make ML applications viable by fitting models within the hardware resource constraints of edge deployment, or by reducing cloud bills.

Accelerate time-to-deployment of ML models via automated optimization, packaging and deployment.

Benchmark their models against a large collection of hardware targets.

Reduce dependence on specific providers with enhanced portability.

While OctoML is starting with model optimization and deployment, we plan to add support for CI/CD integration, model instrumentation for deployment monitoring, and training job optimization, aiming at a full suite of services that bridges model creation and deployment. This directly complements existing model creation tools and MLops platforms that focus on data and model management or solely on deployment management.

Reach out to info@octoml.ai to explore how we can help you achieve your ML goals.
