Mixtral 8x22B is now available on OctoAI

We are excited to share that Mistral’s new Mixtral 8x22B is now available on OctoAI. Its predecessor, Mixtral 8x7B, has been one of the most popular open source models on the platform and our customers are already experimenting with the new 8x22B base model to evaluate it for their use cases.

You can run inference against the Mixtral 8x22B base model on OctoAI using the /completions REST API or the OpenAI SDK. If you have a fine-tuned version that you would like to deploy, please contact us. We are monitoring the community fine-tunes that are already being released and will be on the lookout for the best new offerings to host on OctoAI.

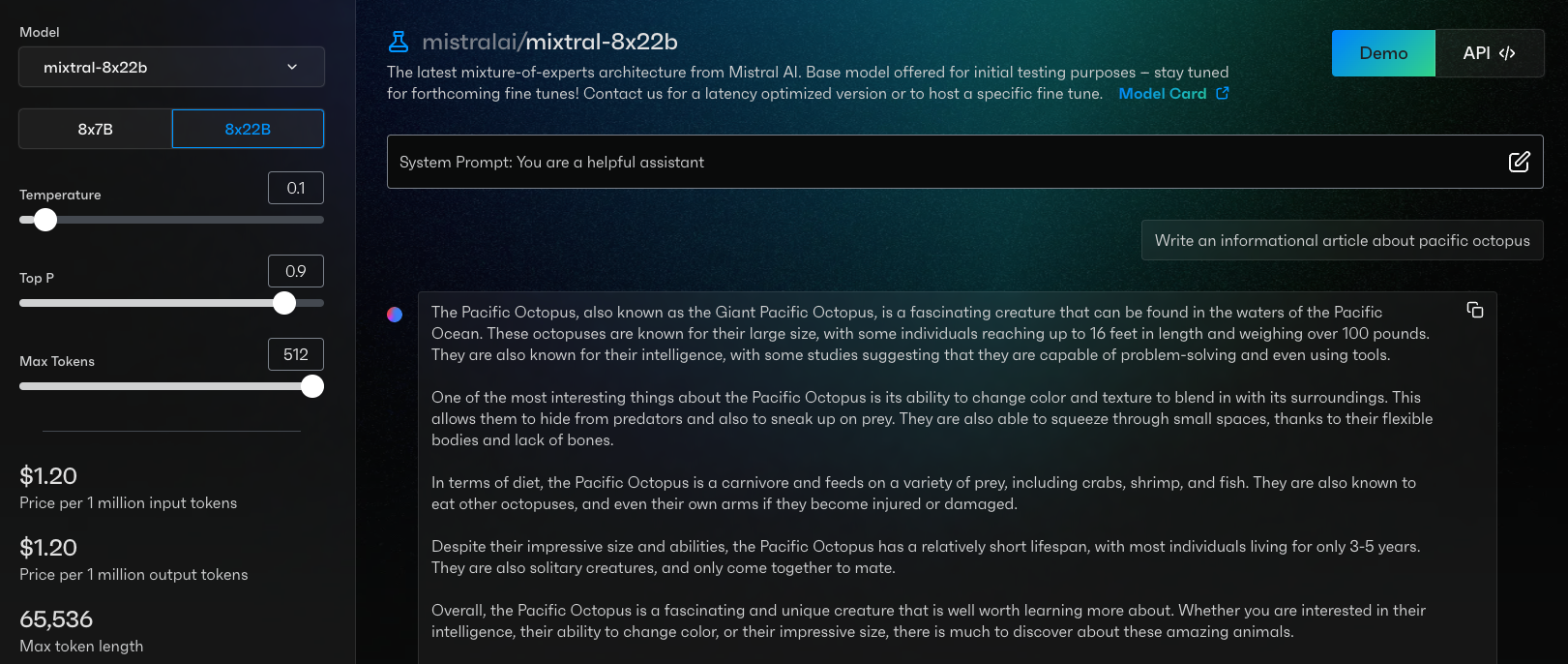

Prompting with legacy completions API

The Mixtral 8x22B release shared by Mistral this week consists of the base model and its pre-trained weights. The base model is not fine-tuned for instruction following or chat behavior, or for working with chat templates. As a result, it may not always respond predictably to Chat Completions APIs, which have become the de facto API format for newer applications built on large language models (LLMs). A better option is to use the legacy Completions API and give the model the text to build on in the “prompt” attribute.

    curl -X POST "https://text.octoai.run/v1/completions" \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer <YOUR_OCTOAI_API_TOKEN>" \
      --data-raw '{
        "model": "mixtral-8x22b",
        "prompt": "The octopus is fascinating because"
      }'

The same request using the OpenAI SDK:

    from openai import OpenAI

    # Point the OpenAI client at the OctoAI endpoint.
    client = OpenAI(
        api_key="<YOUR_OCTOAI_API_TOKEN>",
        base_url="https://text.octoai.run/v1",
    )

    # Use the legacy Completions API: the model continues the prompt text.
    completion = client.completions.create(
        model="mixtral-8x22b",
        prompt="The octopus is fascinating because",
    )

    print(completion)

Early evaluators have already observed that, with effective prompting, the base model can achieve outcomes and interactions quite close to Mixtral 8x7B Instruct in a number of scenarios. This is a good indication of the quality improvement the new model will bring in the coming days, and validation of the buzz that the release has generated!

Effective prompting to optimize /completions responses

The following are ways in which any developer can use the Mixtral 8x22B base model effectively today. These build on practices and lessons from the Hyperwrite AI team, who have been among the earliest adopters and evaluators of the new model:

- Treat this model as a “continuation” engine. The model is tuned to complete text that it sees. So if you want to know about the Roman conquest of Gaul, instead of asking “When did the Romans conquer Gaul?”, try prompting it with “The Romans conquered Gaul in the year”.

- Use formatting the model is likely to have seen in its training data. For instance, if you’re trying to have the model write emails, prompt with the plaintext email formatting that Gmail creates.

- Provide examples to the model. Mixtral 8x22B has done well on leaderboards because many of these tests prompt the model with multi-shot instructions. For best results, do the same yourself. Give the model many examples and then begin a new example for it to complete.

- Lead the model. To ensure the model responds with what you want, you may want to include a separator in all of your examples, and then end your prompt with the separator.
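The tips above can be combined in a small helper. The following is a minimal sketch, not an official OctoAI or Hyperwrite utility: the function name, its parameters, and the singular/plural sample data are all hypothetical and exist only for illustration. It writes every example in the same layout, uses one marker between input and output, and ends the prompt on that marker so the model's natural continuation is the answer.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    intro: str,
    examples: Sequence[Tuple[str, str]],
    new_input: str,
    input_label: str = "Input",
    output_label: str = "Output",
    divider: str = "---",
) -> str:
    """Assemble a continuation-style prompt from worked examples.

    All names here are hypothetical; adapt labels and divider to your data.
    """
    # Every example uses the same layout, so the pattern is easy to continue.
    blocks = [
        f"{input_label}: {source}\n{output_label}: {target}"
        for source, target in examples
    ]
    # The last block is left open: the prompt ends on the output marker,
    # which leads the model to write the missing output next.
    blocks.append(f"{input_label}: {new_input}\n{output_label}:")
    return intro + "\n\n" + f"\n{divider}\n".join(blocks)


# Hypothetical sample data, for illustration only.
prompt = build_few_shot_prompt(
    "A list of English nouns and their plurals.",
    [("cat", "cats"), ("box", "boxes")],
    "fox",
    input_label="Singular",
    output_label="Plural",
)
print(prompt)
```

The resulting string can be passed as the `prompt` attribute of a /completions request. Using the divider as a stop sequence is one way to keep the model from inventing further examples, assuming the endpoint accepts a stop parameter.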

For example, a good prompt for revising text might look like:

Revising text – a guide for aspiring editors.

Here are some great examples where text has been revised properly:

Original: “Mixtral is great base model.”

Revised: “Mixtral is a great base model.”

–

Original: “How about if we just move that entire segment to one blurb after the first image - we can discuss APIs, curl and prompting in one go.”

Revised: “Let's consolidate the content by moving that entire segment into a single blurb following the first image. This will allow us to concisely discuss APIs, curl, and prompting in one cohesive section.”

–

Original: “{PUT_YOUR_TEXT_HERE}”

Revised: “

Notice how the prompt ends with an opening quotation mark, signaling to the model that it should start writing the revised text. From there, add the closing quotation mark as a stop token, and you’ve built a working text-revising prompt!
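As a rough sketch of how the revising prompt might be sent with a stop token through the OpenAI SDK: this assumes the OctoAI endpoint accepts the OpenAI-style `stop` and `max_tokens` fields, and it uses straight double quotes in place of the typographic ones shown above. The names `PROMPT_TEMPLATE`, `build_revision_request`, and `revise`, the `OCTOAI_API_TOKEN` environment variable, and the `max_tokens` value are hypothetical choices for this illustration.

```python
import os

# A shortened version of the revising prompt. It ends with an opening quote
# so that the model continues with the revised text.
PROMPT_TEMPLATE = '''Revising text - a guide for aspiring editors.

Here are some great examples where text has been revised properly:

Original: "Mixtral is great base model."
Revised: "Mixtral is a great base model."
-
Original: "{text}"
Revised: "'''


def build_revision_request(text: str) -> dict:
    """Build keyword arguments for a legacy Completions call (hypothetical helper)."""
    return {
        "model": "mixtral-8x22b",
        "prompt": PROMPT_TEMPLATE.format(text=text),
        # Assumption: the endpoint honors OpenAI-style stop sequences.
        # Generation halts when the model emits the closing quote.
        "stop": ['"'],
        # Assumption: an arbitrary cap in case no closing quote is produced.
        "max_tokens": 200,
    }


def revise(text: str) -> str:
    """Send the prompt to OctoAI and return the revised text."""
    # Imported here so the request builder can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI(
        api_key=os.environ["OCTOAI_API_TOKEN"],  # hypothetical variable name
        base_url="https://text.octoai.run/v1",
    )
    completion = client.completions.create(**build_revision_request(text))
    return completion.choices[0].text.strip()
```

Calling `revise("Mixtral are fast.")` would return whatever the model writes before its closing quote. Because a base model's output is not guaranteed to follow the pattern, it is worth checking the result before using it.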

At Hyperwrite AI, we are constantly evaluating the latest advancements in open-source language models to ensure we are delivering the best possible solutions to our customers. In our early testing, Mixtral 8x22B has demonstrated impressive capabilities across a range of benchmarks and real-world applications. Its strong performance is a testament to the rapid progress happening in the open-source AI community.

By sharing our learnings and best practices around effective prompting and evaluation of these base models, we hope to enable more developers to harness their potential and build transformative products. Mixtral 8x22B represents an exciting leap forward, and we look forward to seeing the novel use cases and innovations it unlocks as it becomes more widely accessible through platforms like OctoAI.

Matt Shumer, CEO and Co-founder @ Otherside AI

Another leap for open source mixture of experts

Mistral “dropped” its new Mixtral 8x22B earlier this week, kicking off a flurry of discussion and excitement in the community. Mixtral 8x7B’s ability to outperform GPT-3.5 in certain areas was a major milestone for the momentum of open source models, and builders have been eagerly waiting for more.

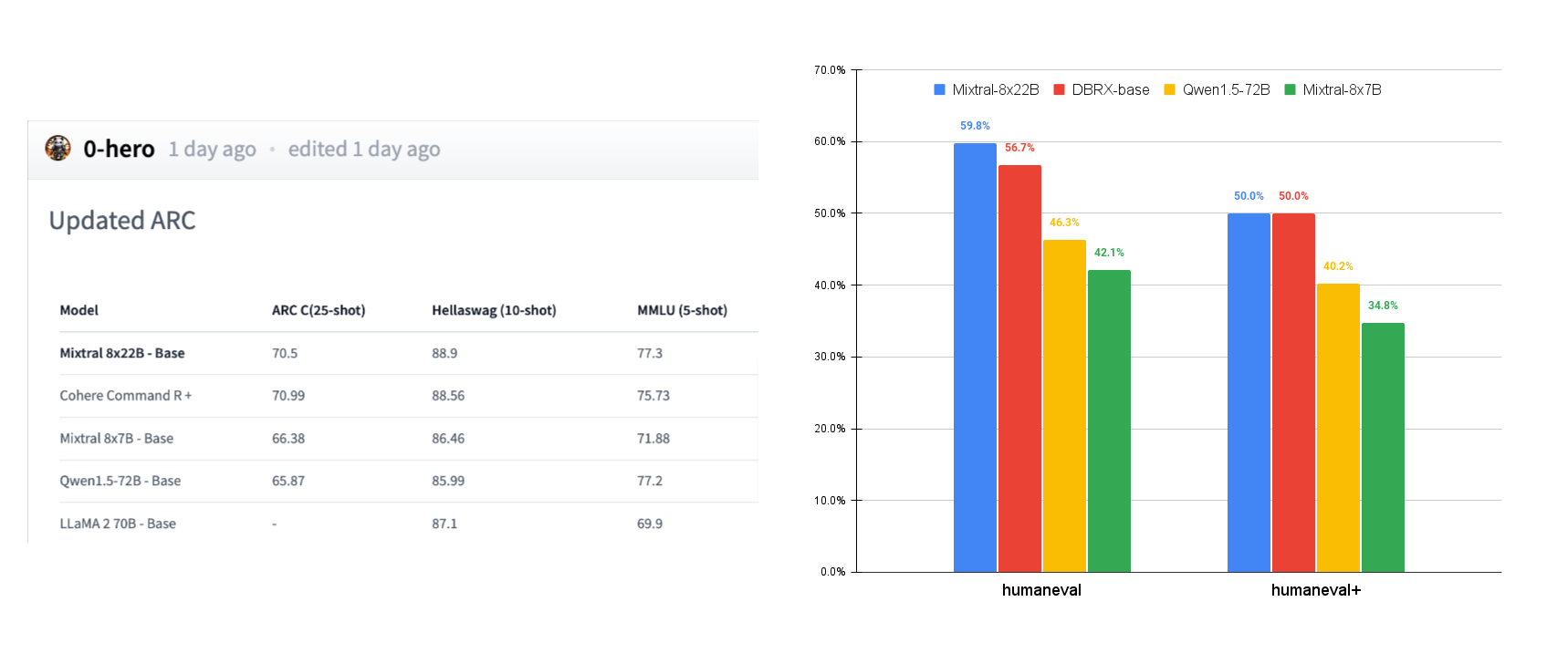

Early results shared by the community show the new Mixtral 8x22B improving on the Mixtral 8x7B base model, as well as on other recent releases like DBRX and Qwen, across multiple benchmarks including ARC, MMLU, and HumanEval. This is an early indicator of the improvement that the new model brings to the open source world.

Try Mixtral 8x22B on OctoAI today!

You can get started with Mixtral 8x22B today at no cost, with a free trial on OctoAI. You’re also welcome to join us on Discord to engage with the team and community, and to share updates about your new AI-powered applications. We look forward to hearing from you on our channels!